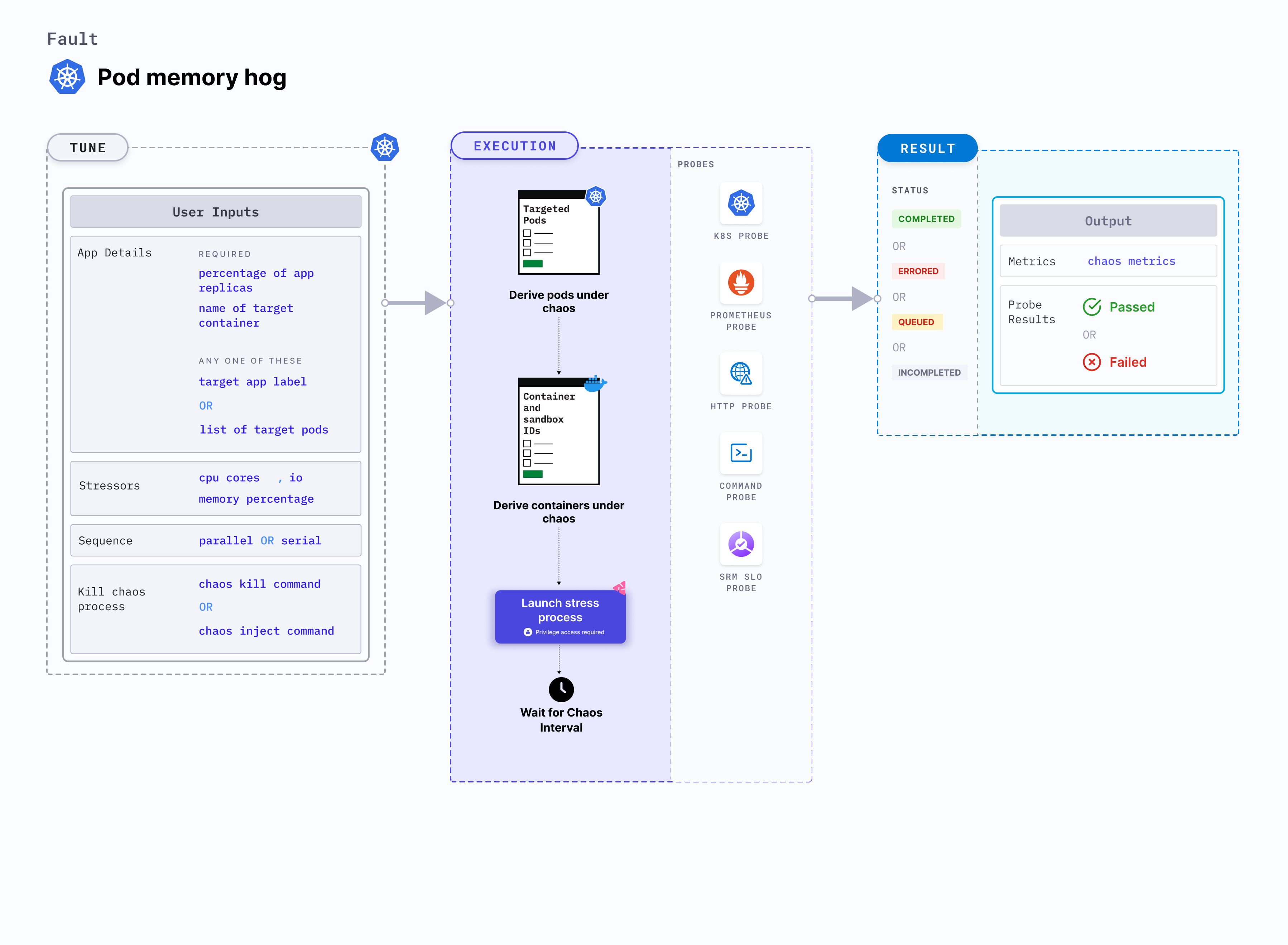

Pod memory hog

Pod memory hog is a Kubernetes pod-level chaos fault that consumes excessive memory resources on the application container. Since this fault stresses the target container, the primary process within the container may consume the available system memory on the node.

- Memory usage within containers is subject to various constraints in Kubernetes.

- When specification mentions the resource limits, exceeding these limits results in termination of the container due to OOM kill.

- For containers that have no resource limits, the blast radius is high which results in the node being killed based on the

oom_score.

Use cases

Pod memory hog exec:

- Simulates conditions where the application pods experience memory spikes either due to expected or undesired processes.

- Simulates the situation of memory leaks in the deployment of microservices.

- Simulates application slowness due to memory starvation, and noisy neighbour problems due to hogging.

- Verifies pod priority and QoS setting for eviction purposes.

- Verifies application restarts on OOM (out of memory) kills.

- Tests how the overall application stack behaves when such a situation occurs.

Permissions required

Below is a sample Kubernetes role that defines the permissions required to execute the fault.

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

namespace: hce

name: pod-memory-hog

spec:

definition:

scope: Cluster # Supports "Namespaced" mode too

permissions:

- apiGroups: [""]

resources: ["pods"]

verbs: ["create", "delete", "get", "list", "patch", "deletecollection", "update"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "get", "list", "patch", "update"]

- apiGroups: [""]

resources: ["pods/log"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["deployments, statefulsets"]

verbs: ["get", "list"]

- apiGroups: [""]

resources: ["replicasets, daemonsets"]

verbs: ["get", "list"]

- apiGroups: [""]

resources: ["chaosEngines", "chaosExperiments", "chaosResults"]

verbs: ["create", "delete", "get", "list", "patch", "update"]

- apiGroups: ["batch"]

resources: ["jobs"]

verbs: ["create", "delete", "get", "list", "deletecollection"]

Prerequisites

- Kubernetes > 1.16

- The application pods should be in the running state before and after injecting chaos.

Supported environments

| Platform | Support Status |

|---|---|

| GKE (Google Kubernetes Engine) | ✅ Supported |

| EKS (Amazon Elastic Kubernetes Service) | ✅ Supported |

| AKS (Azure Kubernetes Service) | ✅ Supported |

| GKE Autopilot | ✅ Supported |

| Self-managed Kubernetes | ✅ Supported |

Optional tunables

| Tunable | Description | Notes |

|---|---|---|

| MEMORY_CONSUMPTION | Amount of memory consumed by the Kubernetes pod (in megabytes). | Default: 500 MB. For more information, go to memory consumption |

| NUMBER_OF_WORKERS | Number of workers used to run the stress process. | Default: 1. For more information, go to workers for stress |

| TOTAL_CHAOS_DURATION | Duration for which to insert chaos (in seconds). | Default: 60 s. For more information, go to duration of the chaos |

| TARGET_PODS | Comma-separated list of application pod names subject to pod memory hog. | If this value is not provided, the fault selects target pods randomly based on provided appLabels. For more information, go to target specific pods |

| TARGET_CONTAINER | Name of the target container. | If this value is not provided, the fault selects the first container of the target pod. For more information, go to target specific container |

| NODE_LABEL | Node label used to filter the target node if TARGET_NODE environment variable is not set. | It is mutually exclusive with the TARGET_NODE environment variable. If both are provided, the fault uses TARGET_NODE. For more information, go to node label. |

| CONTAINER_RUNTIME | Container runtime interface for the cluster. | Default: containerd. Supports docker, containerd and crio. For more information, go to container runtime |

| SOCKET_PATH | Path of the containerd or crio or docker socket file. | Default: /run/containerd/containerd.sock. For more information, go to socket path |

| PODS_AFFECTED_PERC | Percentage of total pods to target. Provide numeric values. | Default: 0 (corresponds to 1 replica). For more information, go to pod affected percentage |

| RAMP_TIME | Period to wait before and after injecting chaos (in seconds). | For example, 30 s. For more information, go to ramp time |

| LIB_IMAGE | Image used to inject chaos. | Default: harness/chaos-go-runner:main-latest. For more information, go to image used by the helper pod. |

| SEQUENCE | Sequence of chaos execution for multiple target pods. | Default: parallel. Supports serial and parallel. For more information, go to sequence of chaos execution |

Memory consumption

Amount of memory consumed by the target pod. Tune it by using the MEMORY_CONSUMPTION environment variable.

The following YAML snippet illustrates the use of this environment variable:

# define the memory consumption in MB

apiVersion: litmuschaos.io/v1alpha1

kind: ChaosEngine

metadata:

name: engine-nginx

spec:

engineState: "active"

annotationCheck: "false"

appinfo:

appns: "default"

applabel: "app=nginx"

appkind: "deployment"

chaosServiceAccount: litmus-admin

experiments:

- name: pod-memory-hog

spec:

components:

env:

# memory consumption value

- name: MEMORY_CONSUMPTION

value: "500" #in MB

- name: TOTAL_CHAOS_DURATION

value: "60"

Workers for stress

Number of workers used to stress the resources. Tune it by using the NUMBER_OF_WORKERS environment variable.

The following YAML snippet illustrates the use of this environment variable:

# number of workers used for the stress

apiVersion: litmuschaos.io/v1alpha1

kind: ChaosEngine

metadata:

name: engine-nginx

spec:

engineState: "active"

annotationCheck: "false"

appinfo:

appns: "default"

applabel: "app=nginx"

appkind: "deployment"

chaosServiceAccount: litmus-admin

experiments:

- name: pod-memory-hog

spec:

components:

env:

# number of workers for stress

- name: NUMBER_OF_WORKERS

value: "1"

- name: TOTAL_CHAOS_DURATION

value: "60"

Container runtime and socket path

The CONTAINER_RUNTIME and SOCKET_PATH envrionment variables to set the container runtime and socket file path, respectively.

CONTAINER_RUNTIME: It supportsdocker,containerd, andcrioruntimes. The default value iscontainerd.SOCKET_PATH: It contains path of containerd socket file by default(/run/containerd/containerd.sock). Fordocker, specify path as/var/run/docker.sock. Forcrio, specify path as/var/run/crio/crio.sock.

The following YAML snippet illustrates the use of these environment variables:

## provide the container runtime and socket file path

apiVersion: litmuschaos.io/v1alpha1

kind: ChaosEngine

metadata:

name: engine-nginx

spec:

engineState: "active"

annotationCheck: "false"

appinfo:

appns: "default"

applabel: "app=nginx"

appkind: "deployment"

chaosServiceAccount: litmus-admin

experiments:

- name: pod-io-stress

spec:

components:

env:

# runtime for the container

# supports docker, containerd, crio

- name: CONTAINER_RUNTIME

value: "containerd"

# path of the socket file

- name: SOCKET_PATH

value: "/run/containerd/containerd.sock"

- name: TOTAL_CHAOS_DURATION

VALUE: "60"