Harness Kubernetes services

This topic describes how to add and configure a Harness Kubernetes service.

A Kubernetes service represents the microservices and other workloads you want to deploy to the cluster.

Setting up a Kubernetes service involves the following steps:

- Add your manifests.

- Add the artifacts you want to deploy.

- Add any service variables you want to use in your manifests or pipeline.

Manifests

Harness supports the following manifest types and orchestration methods.

For more information about using Kubernetes manifests in Harness, go to Add Kubernetes manifests.

Kubernetes

Use Kubernetes manifests

You can use:

- Standard Kubernetes manifests hosted in any repo or in Harness.

- Values YAML files that supply values to manifests that use Go templating.

- Values YAML files that mix hardcoded values and Harness expressions.


Watch a short video

Here's a quick video showing you how to add manifests and Values YAML files in Harness. It covers Kubernetes as well as other types like Helm Charts.

- YAML

- API

- Terraform Provider

- Pipeline Studio

- Values YAML

Here's a YAML example for a service with manifests hosted in GitHub and the nginx image hosted in Docker Hub:

```yaml
service:
  name: Kubernetes
  identifier: Kubernetes
  serviceDefinition:
    type: Kubernetes
    spec:
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: Docker_Hub_with_Pwd
                imagePath: library/nginx
                tag: <+input>
              identifier: nginx
              type: DockerRegistry
      manifests:
        - manifest:
            identifier: nginx
            type: K8sManifest
            spec:
              store:
                type: Github
                spec:
                  connectorRef: harnessdocs2
                  gitFetchType: Branch
                  paths:
                    - default-k8s-manifests/Manifests/Files/templates
                  branch: main
              valuesPaths:
                - default-k8s-manifests/Manifests/Files/ng-values.yaml
              skipResourceVersioning: false
  gitOpsEnabled: false
```

Create a service using the Create Services API. For example:

```bash
curl -i -X POST \
  'https://app.harness.io/gateway/ng/api/servicesV2/batch?accountIdentifier=<Harness account Id>' \
  -H 'Content-Type: application/json' \
  -H 'x-api-key: <Harness API key>' \
  -d '[{
    "identifier": "KubernetesTest",
    "orgIdentifier": "default",
    "projectIdentifier": "CD_Docs",
    "name": "KubernetesTest",
    "description": "string",
    "tags": {
      "property1": "string",
      "property2": "string"
    },
    "yaml": "service:\n  name: KubernetesTest\n  identifier: KubernetesTest\n  serviceDefinition:\n    type: Kubernetes\n    spec:\n      artifacts:\n        primary:\n          primaryArtifactRef: <+input>\n          sources:\n            - spec:\n                connectorRef: account.harnessImage\n                imagePath: library/nginx\n                tag: stable-perl\n              identifier: nginx\n              type: DockerRegistry\n      manifests:\n        - manifest:\n            identifier: myapp\n            type: K8sManifest\n            spec:\n              store:\n                type: Harness\n                spec:\n                  files:\n                    - /Templates\n              valuesPaths:\n                - /values.yaml\n              skipResourceVersioning: false\n              enableDeclarativeRollback: false\n  gitOpsEnabled: false"
  }]'
```
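If you script this call instead of using curl, the detail that is easiest to get wrong is that the `yaml` field is a single JSON string, so every newline in the service YAML must be escaped. The following is a minimal Python sketch using only the standard library. It assumes the same endpoint and headers as the curl example; the trimmed service YAML (no artifact source) is illustrative, the `description` and `tags` fields are omitted for brevity (check the API reference for which fields are required), and the request is built but not sent.

```python
import json
import urllib.parse
import urllib.request

# Placeholders, as in the curl example: replace with your own values.
ACCOUNT_ID = "<Harness account Id>"
API_KEY = "<Harness API key>"

# Illustrative, trimmed service definition (manifests only).
service_yaml = """\
service:
  name: KubernetesTest
  identifier: KubernetesTest
  serviceDefinition:
    type: Kubernetes
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
  gitOpsEnabled: false
"""

payload = [{
    "identifier": "KubernetesTest",
    "orgIdentifier": "default",
    "projectIdentifier": "CD_Docs",
    "name": "KubernetesTest",
    # json.dumps turns the real newlines into "\n" escapes inside one string.
    "yaml": service_yaml,
}]

query = urllib.parse.urlencode({"accountIdentifier": ACCOUNT_ID})
request = urllib.request.Request(
    f"https://app.harness.io/gateway/ng/api/servicesV2/batch?{query}",
    data=json.dumps(payload).encode("utf-8"),
    headers={"Content-Type": "application/json", "x-api-key": API_KEY},
    method="POST",
)
# To send it: urllib.request.urlopen(request)
```

Building the body with `json.dumps` rather than by hand avoids the most common failure with this API: unescaped quotes or newlines inside the `yaml` string.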

For the Terraform Provider resource, go to harness_platform_service. For example:

```hcl
resource "harness_platform_service" "example" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  org_id      = "org_id"
  project_id  = "project_id"

  ## SERVICE V2 UPDATE
  ## We now take in a YAML that can define the service definition for a given Service
  ## It isn't mandatory for Service creation
  ## It is mandatory for Service use in a pipeline
  yaml = <<-EOT
    service:
      name: name
      identifier: identifier
      serviceDefinition:
        spec:
          manifests:
            - manifest:
                identifier: manifest1
                type: K8sManifest
                spec:
                  store:
                    type: Github
                    spec:
                      connectorRef: <+input>
                      gitFetchType: Branch
                      paths:
                        - files1
                      repoName: <+input>
                      branch: master
                  skipResourceVersioning: false
          configFiles:
            - configFile:
                identifier: configFile1
                spec:
                  store:
                    type: Harness
                    spec:
                      files:
                        - <+org.description>
          variables:
            - name: var1
              type: String
              value: val1
            - name: var2
              type: String
              value: val2
        type: Kubernetes
      gitOpsEnabled: false
  EOT
}
```

To add Kubernetes manifests to your service, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Manifests, click Add Manifest.

- In Specify Manifest Type, select K8s Manifest, and then click Continue.

- In Specify K8s Manifest Store, select the Git provider.

The settings for each Git provider are slightly different, but in each case you point to the Git account. For example, click GitHub, and then select or create a new GitHub Connector. See Connect to Code Repo.

-

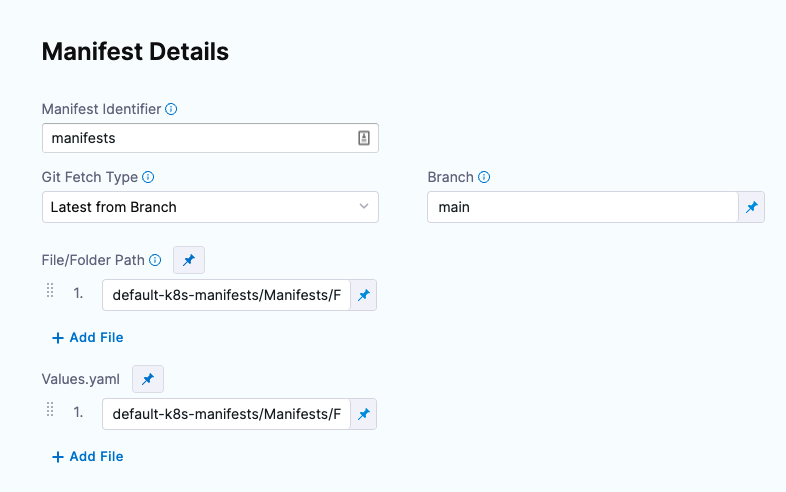

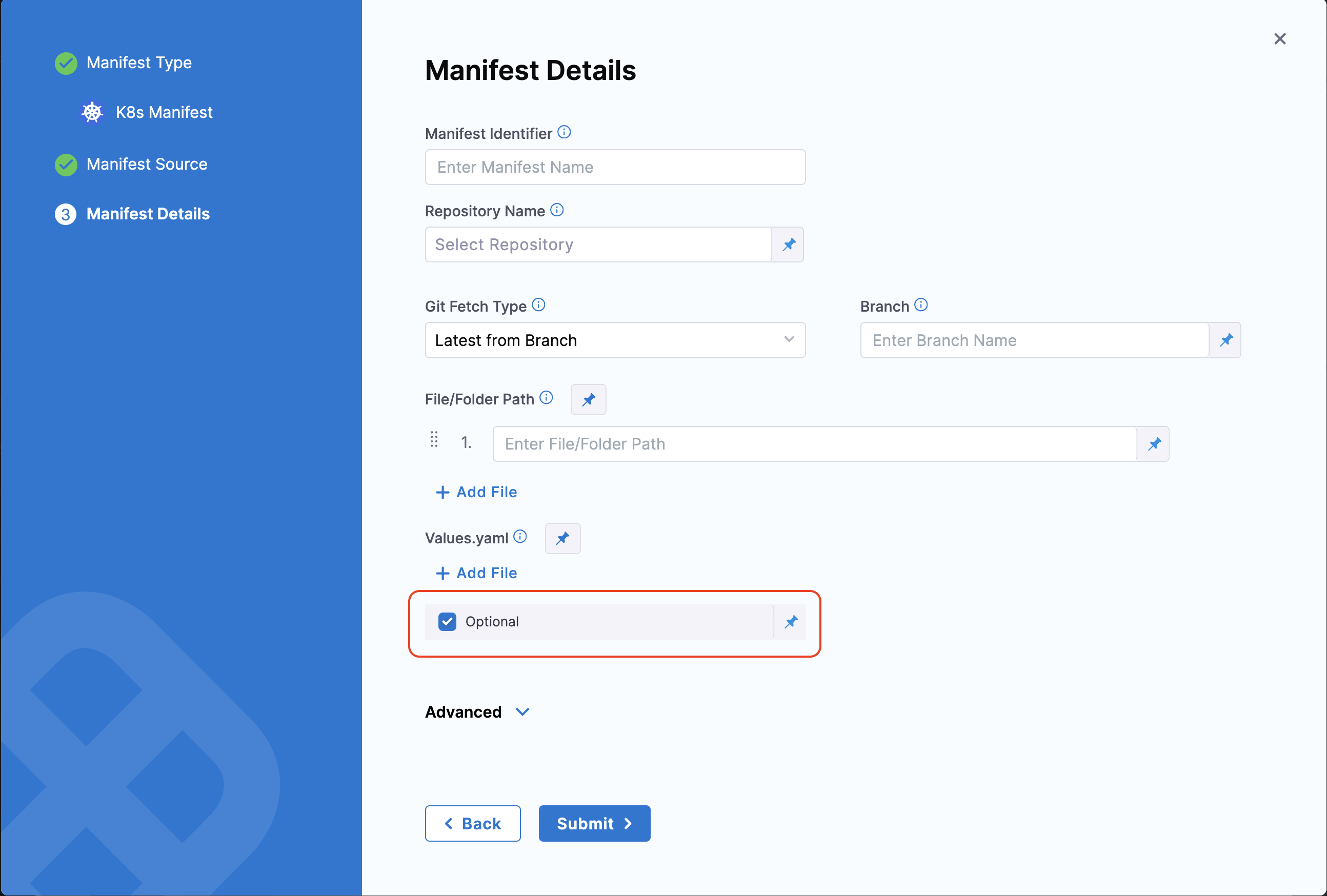

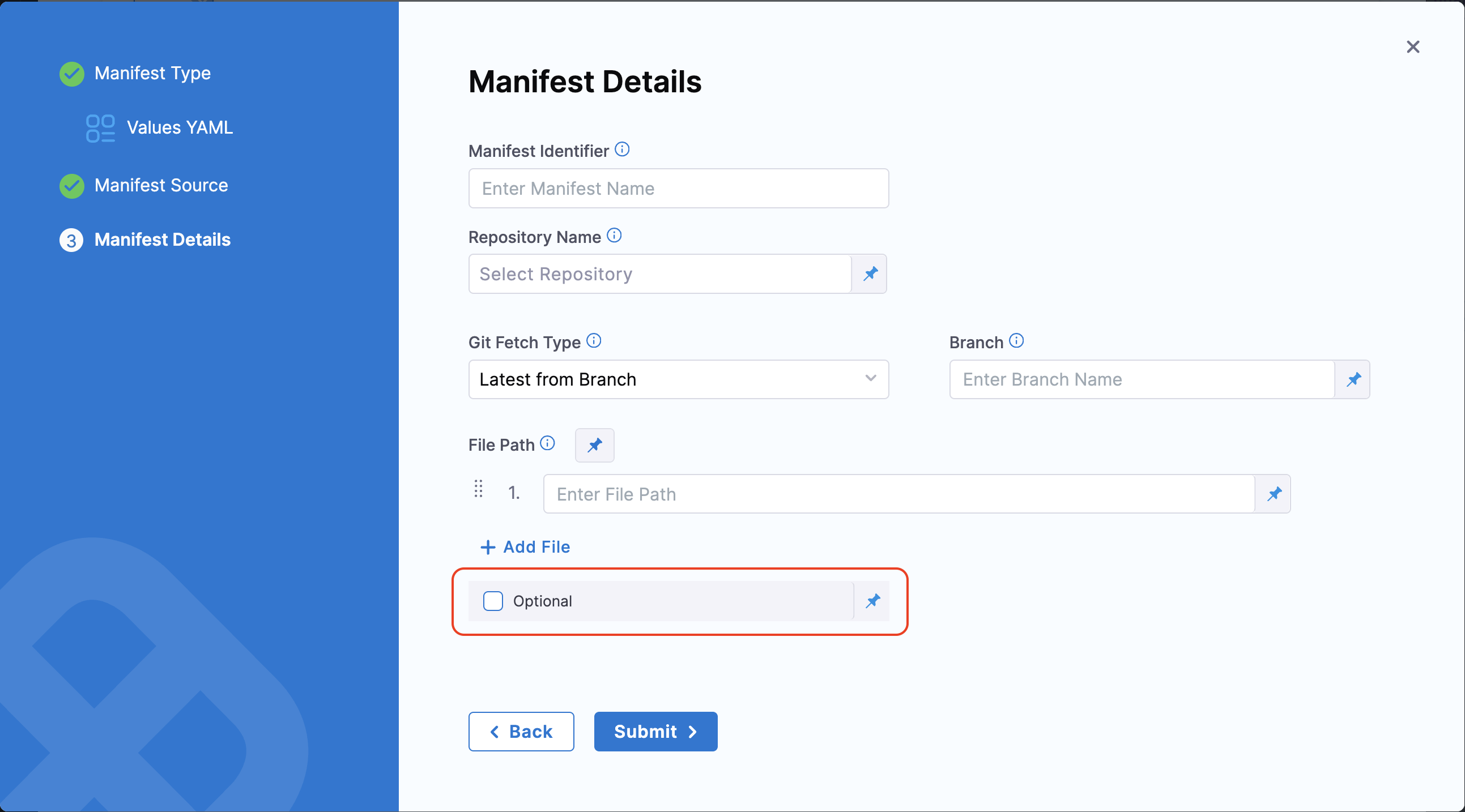

Click Continue. Manifest Details appears.

- In Manifest Identifier, enter an Id for the manifest.

- If you selected a Connector that uses a Git account instead of a Git repo, enter the name of the repo where your manifests are located in Repository Name.

- In Git Fetch Type, select Latest from Branch or Specific Commit ID, and then enter the branch or commit Id for the repo.

- For Specific Commit ID, you can also use a Git commit tag.

- In File/Folder Path, enter the path to the manifest file or folder in the repo. The Connector you selected already has the repo name, so you simply need to add the path from the root of the repo.

If you are using a values.yaml file and it's in the same repo as your manifests, in Values YAML, click Add File.

- Enter the path to the values.yaml file from the root of the repo.

Here's an example with the manifest and values.yaml file added.

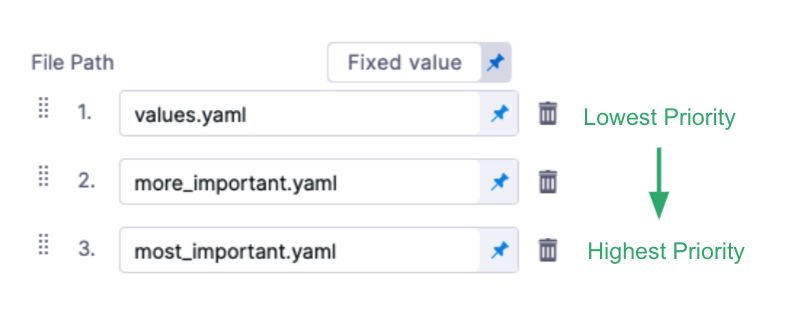

If you use multiple files, the last file has the highest priority and the first file has the lowest. For example, if you have three files and all three define the same key, the value in the third file overrides the values in the first and second files.

Note: Alternatively, you can proceed without configuring a values.yaml file. For more information, refer to Manifest Optional Values.

- Click Submit. The manifest is added to Manifests.
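The "last file wins" rule for multiple values files can be pictured as a recursive merge in which each later file overrides matching keys from earlier ones. This is a minimal Python sketch, assuming the values files have already been parsed into dictionaries. `merge_values` is a hypothetical helper, not Harness code, and the choice to merge nested maps key by key (while replacing lists and scalars outright) is an assumption modeled on how Helm combines multiple values files.

```python
from typing import Any


def merge_values(*files: dict[str, Any]) -> dict[str, Any]:
    """Merge parsed values files in order; later files win on conflicts."""
    result: dict[str, Any] = {}
    for values in files:
        result = _merge_two(result, values)
    return result


def _merge_two(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Nested maps merge key by key; any other value from override replaces base."""
    merged = dict(base)  # shallow copy so the inputs are not mutated
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = _merge_two(merged[key], value)
        else:
            merged[key] = value
    return merged


# Three hypothetical values files that all define replicas.
first = {"replicas": 1, "image": {"repository": "nginx", "tag": "1.14"}}
second = {"replicas": 2}
third = {"replicas": 3, "image": {"tag": "1.25"}}

print(merge_values(first, second, third))
# {'replicas': 3, 'image': {'repository': 'nginx', 'tag': '1.25'}}
```

Note that `image.repository` survives from the first file because the third file only overrides `image.tag`.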

Harness Kubernetes Services can use Values YAML files just as you would with Helm. Harness manifests can use Go templating with your Values YAML files, and you can include Harness variable expressions in the Values YAML files.
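To make the division of labor concrete, here is a deliberately simplified Python sketch of the two steps: first the `<+...>` expressions in the values file are resolved, then the Go-templated manifest reads those values through `{{ .Values.<key> }}` references. The regular expressions, the `resolve_expressions` and `render_manifest` helpers, and the resolved image string are all hypothetical; real Go templates support far more than flat key lookups, and Harness performs both steps itself at deployment time.

```python
import re

# Matches Harness-style expressions such as <+artifacts.primary.image>.
HARNESS_EXPR = re.compile(r"<\+([^>]+)>")
# Matches simple Go template lookups such as {{ .Values.image }} (flat keys only).
GO_VALUES_REF = re.compile(r"\{\{\s*\.Values\.([A-Za-z0-9_]+)\s*\}\}")


def resolve_expressions(values: dict[str, str], context: dict[str, str]) -> dict[str, str]:
    """Step 1: replace every <+...> expression in the values with its resolved value."""
    return {
        key: HARNESS_EXPR.sub(lambda m: context[m.group(1)], value)
        for key, value in values.items()
    }


def render_manifest(template: str, values: dict[str, str]) -> str:
    """Step 2: substitute {{ .Values.<key> }} references in the manifest."""
    return GO_VALUES_REF.sub(lambda m: values[m.group(1)], template)


values_yaml = {"name": "my-app", "image": "<+artifacts.primary.image>"}
# Hypothetical resolved value for the artifact expression.
context = {"artifacts.primary.image": "docker.io/library/nginx:stable-perl"}
manifest = "name: {{ .Values.name }}\nimage: {{ .Values.image }}"

print(render_manifest(manifest, resolve_expressions(values_yaml, context)))
# name: my-app
# image: docker.io/library/nginx:stable-perl
```

The manifest itself never contains a `<+...>` expression; it only references values, which is why the expression has to live in the values file.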

If you are using a Values YAML file and it's in the same repo as your manifests, you can add it when you add your manifests, as described above (Values YAML --> Add File).

If you are using a Values YAML file and it's in a separate repo from your manifests, or you simply want to add it separately, you can add it as a separate file, described below.

You cannot use Harness variable expressions in your Kubernetes object manifest files. You can only use Harness variable expressions in Values YAML files.

Add a Values YAML file

Where is your Values YAML file located?

- Same folder as manifests: If you are using a values.yaml file and it's in the same repo as your manifests, you can add it when you add your manifests, as described above (Values YAML --> Add File).

- Separate from manifests: If your values file is located in a different folder, you can add it separately as a Values YAML manifest type, described below.

To add a Values YAML file, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Manifests, click Add Manifest.

- In Specify Manifest Type, select Values YAML, and click Continue.

- In Specify Values YAML Store, select the Git repo provider you're using and then create or select a Connector to that repo. The different Connectors are covered in Connect to a Git Repo.

If you haven't set up a Harness Delegate, you can add one as part of the Connector setup. This process is described in the Kubernetes CD tutorial, the Helm CD tutorial, and Install a Kubernetes delegate.

- Once you've selected a Connector, click Continue.

- In Manifest Details, you tell Harness where the values.yaml is located.

- In Manifest Identifier, enter a name that identifies the file, like values.

- If you selected a Connector that uses a Git account instead of a Git repo, enter the name of the repo where your manifests are located in Repository Name.

- In Git Fetch Type, select a branch or commit Id for the manifest, and then enter the Id or branch.

- For Specific Commit ID, you can also use a Git commit tag.

- In File Path, enter the path to the values.yaml file in the repo.

Note: You can also proceed without configuring a values.yaml file. For more information, refer to Manifest Optional Values.

You can enter multiple values file paths by clicking Add File. At runtime, Harness will compile the files into one values file.

If you use multiple files, the last file has the highest priority and the first file has the lowest. For example, if you have three files and all three define the same key, the value in the third file overrides the values in the first and second files.

- Click Submit.


The values file(s) are added to the Service.

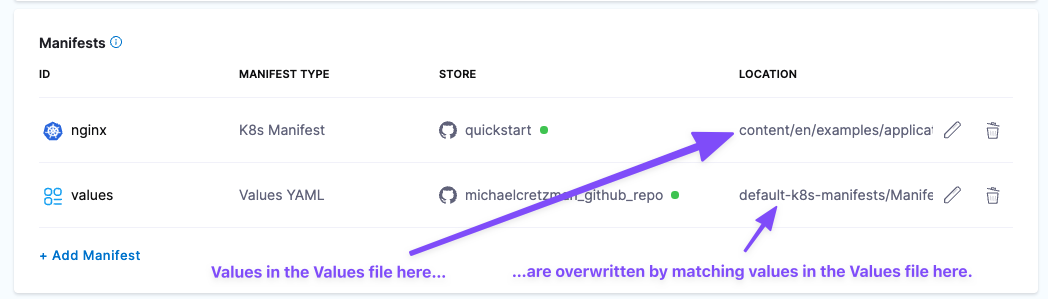

Values files in both the Manifests and Values YAML

If you have values files both in the K8s Manifest File/Folder Path and in a separate Values YAML manifest, the values in the Values YAML manifest override any matching values in the values file in the Manifest File/Folder Path.
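One way to picture this precedence is a single ordered list of files, with the values files from the K8s Manifest first and the separate Values YAML manifests last, where a lookup returns the value from the latest file that defines the key. This Python sketch uses `collections.ChainMap` for that lookup. `effective_values` is a hypothetical helper, and it only models top-level keys; it does not merge nested maps.

```python
from collections import ChainMap


def effective_values(manifest_values: list[dict], values_yaml_manifests: list[dict]) -> ChainMap:
    """Return a lookup view in which Values YAML manifests take precedence.

    Files are listed in the order they apply (K8s Manifest values first,
    separate Values YAML manifests after). ChainMap searches its maps from
    left to right, so the list is reversed to make the last file win.
    """
    ordered = manifest_values + values_yaml_manifests
    return ChainMap(*reversed(ordered))


# Hypothetical values: one file in the manifest path, one separate Values YAML manifest.
in_manifest_path = {"replicas": 1, "env": "dev"}
separate_values_yaml = {"replicas": 4}

view = effective_values([in_manifest_path], [separate_values_yaml])
print(view["replicas"], view["env"])  # 4 dev
```

`env` is still available because only the key that both files define (`replicas`) is overridden.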

Ignore a manifest file during deployment

You might have manifest files for resources that you do not want to deploy as part of the main deployment.

Instead, you can tell Harness to ignore these files and then apply them separately using the Harness Apply step. Or you can simply ignore them and deploy them later.

See Ignore a manifest file during deployment and Apply.

Notes

- If this is your first time using Harness for a Kubernetes deployment, see the Kubernetes CD tutorial.

- For task-based walkthroughs of different Kubernetes features in Harness, see Kubernetes How-tos.

- You can hardcode your artifact in your manifests, or add your artifact source to your Service Definition and then reference it in your Values YAML file.

Helm Charts

Use Helm Charts

You can use Helm charts stored in an HTTP Helm Repository, OCI Registry, a Git repo provider, a cloud storage service (Google Cloud Storage, AWS S3, Azure Repo), a custom repo, or the Harness File Store.

- YAML

- API

- Terraform Provider

- Pipeline Studio

Here's a YAML example for a service that deploys the nginx chart from an HTTP Helm repository, using a connector named Bitnami:

```yaml
service:
  name: Helm Chart
  identifier: Helm_Chart
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: nginx
            type: HelmChart
            spec:
              store:
                type: Http
                spec:
                  connectorRef: Bitnami
              chartName: nginx
              helmVersion: V3
              skipResourceVersioning: false
              commandFlags:
                - commandType: Template
                  flag: mychart -x templates/deployment.yaml
    type: Kubernetes
```

Create a service using the Create Services API. For example:

```json
[
  {
    "identifier": "KubernetesTest",
    "orgIdentifier": "default",
    "projectIdentifier": "CD_Docs",
    "name": "KubernetesTest",
    "description": "string",
    "tags": {
      "property1": "string",
      "property2": "string"
    },
    "yaml": "service:\n  name: Helm Chart\n  identifier: Helm_Chart\n  tags: {}\n  serviceDefinition:\n    spec:\n      manifests:\n        - manifest:\n            identifier: nginx\n            type: HelmChart\n            spec:\n              store:\n                type: Http\n                spec:\n                  connectorRef: Bitnami\n              chartName: nginx\n              helmVersion: V3\n              skipResourceVersioning: false\n              commandFlags:\n                - commandType: Template\n                  flag: mychart -x templates/deployment.yaml\n    type: Kubernetes"
  }
]
```

For the Terraform Provider resource, go to harness_platform_service. For example:

```hcl
resource "harness_platform_service" "example" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  org_id      = "org_id"
  project_id  = "project_id"

  ## SERVICE V2 UPDATE
  ## We now take in a YAML that can define the service definition for a given Service
  ## It isn't mandatory for Service creation
  ## It is mandatory for Service use in a pipeline
  yaml = <<-EOT
    service:
      name: Helm Chart
      identifier: Helm_Chart
      tags: {}
      serviceDefinition:
        spec:
          manifests:
            - manifest:
                identifier: nginx
                type: HelmChart
                spec:
                  store:
                    type: Http
                    spec:
                      connectorRef: Bitnami
                  chartName: nginx
                  helmVersion: V3
                  skipResourceVersioning: false
                  commandFlags:
                    - commandType: Template
                      flag: mychart -x templates/deployment.yaml
        type: Kubernetes
  EOT
}
```

To add a Helm chart to your service, do the following:

-

In your project, in CD (Deployments), select Services.

-

Select Manage Services, and then select New Service.

-

Enter a name for the service and select Save.

-

Select Configuration.

-

In Service Definition, select Kubernetes.

-

In Manifests, click Add Manifest.

-

In Specify Manifest Type, select Helm Chart, and click Continue.

-

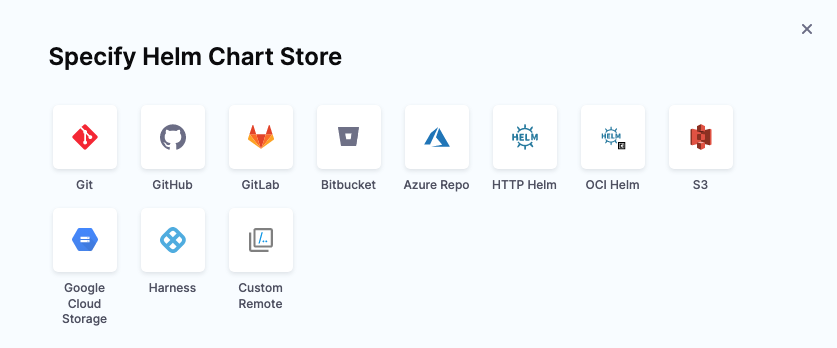

In Specify Helm Chart Store, select the storage service you're using.

Options for connecting to a Helm chart store

The options available to you to specify a Helm chart store depend on whether specific feature flags are enabled on your account. The options available without any feature flags, or with specific feature flags enabled, are described here:

- Feature flag disabled. Only one option is available: OCI Helm Registry Connector. This option enables you to connect to any OCI-based registry.

- Feature flag enabled. You can choose between connectors in the following categories:

- Direct Connection. Contains the OCI Helm Registry Connector option (shortened to OCI Helm), which you can use with any OCI-based registry.

- Via Cloud Provider. Contains the ECR connector option. This connector is specifically designed for AWS ECR to help you overcome the limitation of having to regenerate the ECR registry authentication token every 12 hours. The ECR connector option uses an AWS connector and regenerates the required authentication token if the token has expired.

For the steps and settings of each option, go to Connectors or Connect to a Git repo.
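The ECR connector behavior described above follows a check-and-refresh pattern: reuse the registry token while it is valid and fetch a new one once it is older than 12 hours. This Python sketch only illustrates that pattern. `CachedToken`, `get_token`, and the caller-supplied `fetch_token` function are hypothetical stand-ins for what the connector does internally through its AWS connector; they are not Harness or AWS APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable, Optional

# Per the section above, ECR registry tokens must be regenerated every 12 hours.
TOKEN_TTL = timedelta(hours=12)


@dataclass
class CachedToken:
    value: str
    issued_at: datetime

    def expired(self, now: datetime) -> bool:
        return now >= self.issued_at + TOKEN_TTL


def get_token(cache: Optional[CachedToken], fetch_token: Callable[[], str], now: datetime) -> CachedToken:
    """Reuse the cached token while it is valid; otherwise fetch a fresh one."""
    if cache is None or cache.expired(now):
        return CachedToken(value=fetch_token(), issued_at=now)
    return cache


# Usage with a fake fetcher that counts how often it is called.
calls: list[int] = []


def fake_fetch() -> str:
    calls.append(1)
    return f"token-{len(calls)}"


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
token = get_token(None, fake_fetch, start)                         # first fetch
token = get_token(token, fake_fetch, start + timedelta(hours=11))  # still valid, reused
token = get_token(token, fake_fetch, start + timedelta(hours=12))  # expired, refetched
print(token.value)  # token-2
```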

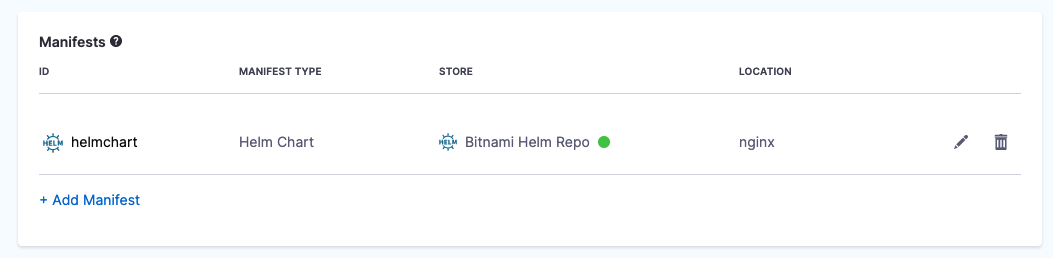

Once your Helm chart is added, it appears in the Manifests section. For example:

Important notes

- If this is your first time using Harness for a Helm Chart deployment, see Helm Chart deployment tutorial.

- For a detailed walkthrough of deploying Helm Charts in Harness, including limitations and binary support, see Deploy Helm Charts.

- Harness does not support AWS cross-account access for ChartMuseum and AWS S3. For example, if the Harness Delegate used to deploy charts is in AWS account A, and the S3 bucket is in AWS account B, the Harness connector that uses this delegate in A cannot assume the role for the B account.

- Harness cannot fetch Helm chart versions with Helm OCI because Helm OCI no longer supports helm chart list. See OCI Feature Deprecation and Behavior Changes with Helm v3.7.0.

- Currently, you cannot list the OCI image tags in Harness. This is a Helm limitation. For more information, go to Helm Search Repo Chart issue.

Kustomize

Use Kustomize

Harness supports Kustomize deployments. You can use overlays, multibase, plugins, sealed secrets, patches, and so on, just as you would in any native kustomization.

- YAML

- API

- Terraform Provider

- Pipeline Studio

- Kustomize Patches

Here's a YAML example for a service using the publicly available helloworld kustomization cloned from Kustomize:

```yaml
service:
  name: Kustomize
  identifier: Kustomize
  serviceDefinition:
    type: Kubernetes
    spec:
      manifests:
        - manifest:
            identifier: kustomize
            type: Kustomize
            spec:
              store:
                type: Github
                spec:
                  connectorRef: Kustomize
                  gitFetchType: Branch
                  folderPath: kustomize/helloworld
                  branch: main
              pluginPath: ""
              skipResourceVersioning: false
  gitOpsEnabled: false
```

Create a service using the Create Services API. For example:

```json
[
  {
    "identifier": "KubernetesTest",
    "orgIdentifier": "default",
    "projectIdentifier": "CD_Docs",
    "name": "KubernetesTest",
    "description": "string",
    "tags": {
      "property1": "string",
      "property2": "string"
    },
    "yaml": "service:\n  name: Kustomize\n  identifier: Kustomize\n  serviceDefinition:\n    type: Kubernetes\n    spec:\n      manifests:\n        - manifest:\n            identifier: kustomize\n            type: Kustomize\n            spec:\n              store:\n                type: Github\n                spec:\n                  connectorRef: Kustomize\n                  gitFetchType: Branch\n                  folderPath: kustomize/helloworld\n                  branch: main\n              pluginPath: \"\"\n              skipResourceVersioning: false\n  gitOpsEnabled: false"
  }
]
```

For the Terraform Provider resource, go to harness_platform_service. For example:

```hcl
resource "harness_platform_service" "example" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  org_id      = "org_id"
  project_id  = "project_id"

  ## SERVICE V2 UPDATE
  ## We now take in a YAML that can define the service definition for a given Service
  ## It isn't mandatory for Service creation
  ## It is mandatory for Service use in a pipeline
  yaml = <<-EOT
    service:
      name: Kustomize
      identifier: Kustomize
      serviceDefinition:
        type: Kubernetes
        spec:
          manifests:
            - manifest:
                identifier: kustomize
                type: Kustomize
                spec:
                  store:
                    type: Github
                    spec:
                      connectorRef: Kustomize
                      gitFetchType: Branch
                      folderPath: kustomize/helloworld
                      branch: main
                  pluginPath: ""
                  skipResourceVersioning: false
      gitOpsEnabled: false
  EOT
}
```

To add a kustomization, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Manifests, click Add Manifest.

Alternatively, if you are working in a pipeline, in your CD stage click Service, select Kubernetes in Service Definition, and then click Add Manifest in Manifests.

- In Specify Manifest Type, click Kustomize, and click Continue.

- Select where your kustomization is stored: a Git provider, the Harness File Store, or Azure Repo.

- In Manifest Details, enter the following settings, test the connection, and click Submit.

- Manifest Identifier: enter kustomize.

- Git Fetch Type: select Latest from Branch.

- Branch: enter main.

- Kustomize Folder Path: kustomize/helloworld. This is the path from the repo root.

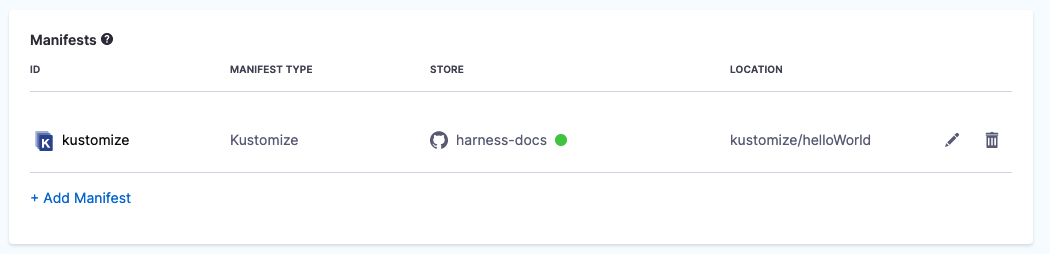

The kustomization is now listed.

You cannot use Harness variables in the base manifest or kustomization.yaml. You can only use Harness variables in kustomize patches you add in Kustomize Patches Manifest Details.

How Harness uses patchesStrategicMerge:

- Kustomize patches override values in the base manifest. Harness supports the patchesStrategicMerge patches type.

- If the patchesStrategicMerge label is missing from the kustomization YAML file, but you have added Kustomize Patches to your Harness Service, Harness adds the Kustomize Patches you added in Harness to the patchesStrategicMerge in the kustomization file. If you have hardcoded patches in patchesStrategicMerge but do not add these patches to Harness as Kustomize Patches, Harness ignores them.
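The two rules above can be sketched as a function over a parsed kustomization file: the patchesStrategicMerge list ends up containing exactly the Kustomize Patches configured in Harness, in Harness order, and is created if it was missing. This is a minimal Python illustration, not Harness code. `apply_harness_patches` is hypothetical, and its behavior when no Kustomize Patches are configured in Harness (leaving the file unchanged) is an assumption the text above does not settle.

```python
from typing import Any


def apply_harness_patches(kustomization: dict[str, Any], harness_patches: list[str]) -> dict[str, Any]:
    """Return a copy of a parsed kustomization.yaml whose patchesStrategicMerge
    lists exactly the Kustomize Patches configured in Harness, in Harness order.

    Hardcoded entries not also added in Harness are dropped, and the key is
    created if it was missing. Assumption: with no Kustomize Patches in
    Harness, the kustomization is returned unchanged.
    """
    result = dict(kustomization)  # shallow copy; the input is not modified
    if harness_patches:
        result["patchesStrategicMerge"] = list(harness_patches)
    return result


base = {"resources": ["deployment.yaml"], "patchesStrategicMerge": ["hardcoded.yaml"]}
patched = apply_harness_patches(base, ["replicas.yaml", "memory.yaml"])
print(patched["patchesStrategicMerge"])  # ['replicas.yaml', 'memory.yaml']

no_key = apply_harness_patches({"resources": ["deployment.yaml"]}, ["replicas.yaml"])
print(no_key["patchesStrategicMerge"])  # ['replicas.yaml']
```

In practice this means any patch you want applied must be added in Harness, even if it is already listed in your kustomization file.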

For a detailed walkthrough of using patches in Harness, go to Use Kustomize for Kubernetes deployments.

To use Kustomize Patches, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Manifests, select Add Manifest.

- In Specify Manifest Type, select Kustomize Patches, and select Continue.

- In Specify Kustomize Patches Store, select your Git provider and Connector. See Connect to a Git Repo.

The Git Connector should point to the Git account or repo where your Kustomize files are located. In Kustomize Patches, you specify the path to the actual patch files.

- Select Continue.

- In Manifest Details, enter the path to your patch file(s):

- Manifest Identifier: enter a name that identifies the patch file(s). You don't have to add the actual filename.

- Git Fetch Type: select whether to use the latest branch or a specific commit Id.

- Branch/Commit Id: enter the branch or commit Id.

- File/Folder Path: enter the path to the patch file(s) from the root of the repo.

- Click Add File to add each patch file. The files you add should be the same files listed in patchesStrategicMerge of the main kustomize file in your Service. The order in which you add file paths for patches in File/Folder Path is the same order that Harness applies the patches during the kustomization build.

Small patches that do one thing are recommended. For example, create one patch for increasing the deployment replica number and another patch for setting the memory limit.

- Select Submit. The patch file(s) are added to Manifests.

When the main kustomization.yaml is deployed, the patch is rendered and its overrides are added to the deployment.yaml that is deployed.

If this is your first time using Harness for a Kustomize deployment, see the Kustomize Quickstart.

For a detailed walkthrough of deploying Kustomize in Harness, including limitations, see Use Kustomize for Kubernetes Deployments.

Important notes

- Harness supports Kustomize and Kustomize Patches for Rolling, Blue Green and Delete steps.

- Harness does not use Kustomize for rollback. Harness renders the templates using Kustomize and then passes them onto kubectl. A rollback works exactly as it does for native Kubernetes.

- You cannot use Harness variables in the base manifest or kustomization.yaml. You can only use Harness variables in kustomize patches you add in Kustomize Patches Manifest Details.

- Kustomize binary versions:

- Harness includes Kustomize binary versions 3.5.4 and 4.0.0. By default, Harness uses 3.5.4.

- To use 4.0.0, you must enable the feature flag NEW_KUSTOMIZE_BINARY in your account. Contact Harness Support to enable the feature.

- Harness will not follow symlinks in the Kustomize and Kustomize Patches files it pulls.

OpenShift templates

Use OpenShift templates

Harness supports OpenShift for Kubernetes deployments.

For an overview of OpenShift support, see Using OpenShift with Harness Kubernetes.

- YAML

- API

- Terraform Provider

- Pipeline Studio

- OpenShift Param

Here's a YAML example for a service using an OpenShift template that is stored in the Harness File Store:

```yaml
service:
  name: OpenShift Template
  identifier: OpenShift
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: nginx
            type: OpenshiftTemplate
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /OpenShift/templates/example-template.yml
              skipResourceVersioning: false
    type: Kubernetes
```

Create a service using the Create Services API. For example:

```json
[
  {
    "identifier": "KubernetesTest",
    "orgIdentifier": "default",
    "projectIdentifier": "CD_Docs",
    "name": "KubernetesTest",
    "description": "string",
    "tags": {
      "property1": "string",
      "property2": "string"
    },
    "yaml": "service:\n  name: OpenShift Template\n  identifier: OpenShift\n  tags: {}\n  serviceDefinition:\n    spec:\n      manifests:\n        - manifest:\n            identifier: nginx\n            type: OpenshiftTemplate\n            spec:\n              store:\n                type: Harness\n                spec:\n                  files:\n                    - /OpenShift/templates/example-template.yml\n              skipResourceVersioning: false\n    type: Kubernetes"
  }
]
```

For the Terraform Provider resource, go to harness_platform_service. For example:

```hcl
resource "harness_platform_service" "example" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  org_id      = "org_id"
  project_id  = "project_id"

  ## SERVICE V2 UPDATE
  ## We now take in a YAML that can define the service definition for a given Service
  ## It isn't mandatory for Service creation
  ## It is mandatory for Service use in a pipeline
  yaml = <<-EOT
    service:
      name: OpenShift Template
      identifier: OpenShift
      tags: {}
      serviceDefinition:
        spec:
          manifests:
            - manifest:
                identifier: nginx
                type: OpenshiftTemplate
                spec:
                  store:
                    type: Harness
                    spec:
                      files:
                        - /OpenShift/templates/example-template.yml
                  skipResourceVersioning: false
        type: Kubernetes
  EOT
}
```

To add an OpenShift Template to a service, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Manifests, click Add Manifest.

- In Specify Manifest Type, select OpenShift Template, and then select Continue.

- In Specify OpenShift Template Store, select where your template is located.

You can use a Git provider, the Harness File Store, a custom repo, or Azure Repos.

- For example, click GitHub, and then select or create a new GitHub Connector. See Connect to Code Repo.

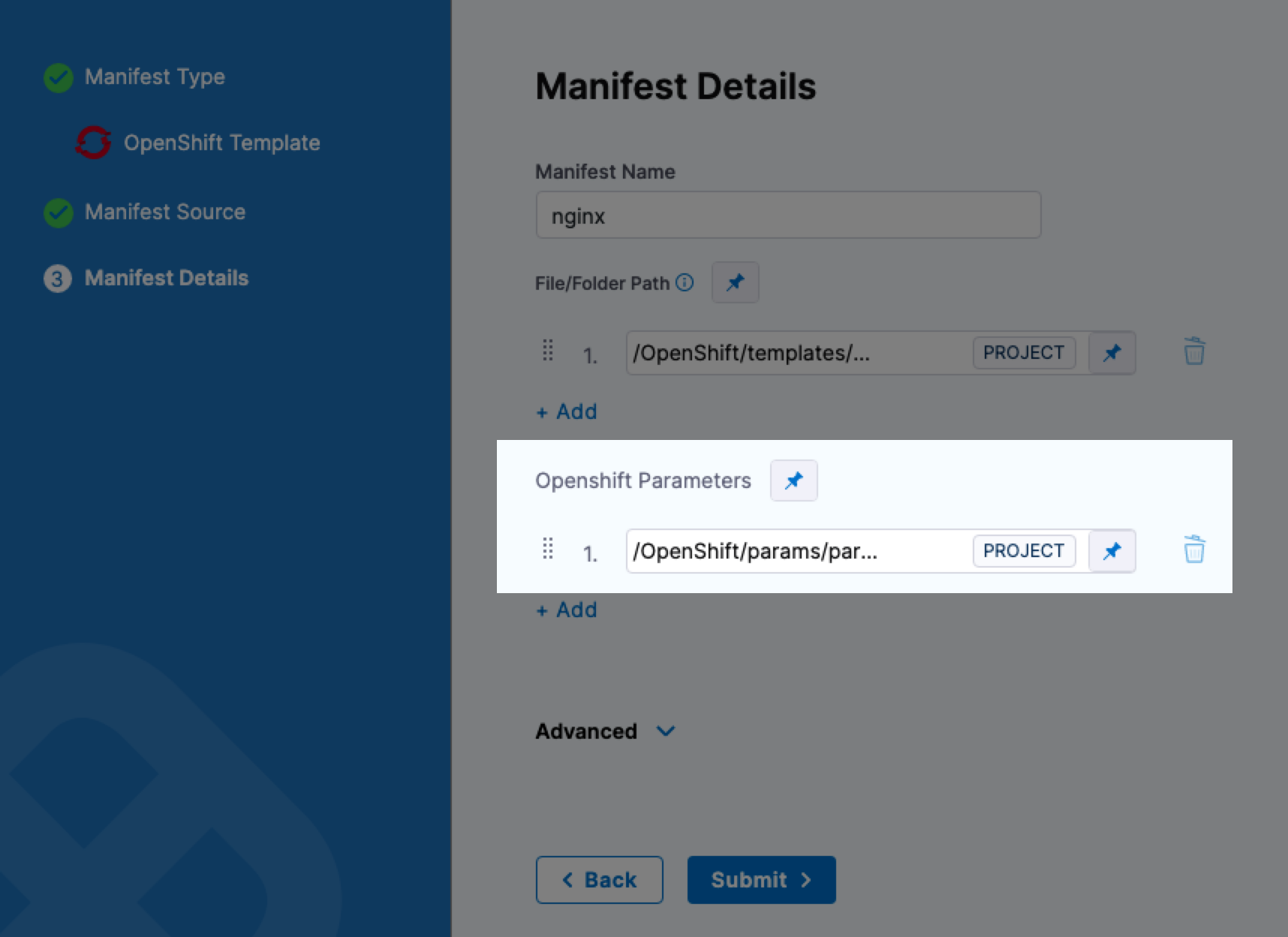

- Select Continue. Manifest Details appears.

- In Manifest Identifier, enter an Id for the manifest. It must be unique. It can be used in Harness expressions to reference this template's settings.

- In Git Fetch Type, select Latest from Branch or Specific Commit Id/Git Tag, and then enter the branch or commit Id/tag for the repo.

- In Template File Path, enter the path to the template file. The Connector you selected already has the repo name, so you simply need to add the path from the root of the repo to the file.

- Select Submit. The template is added to Manifests.

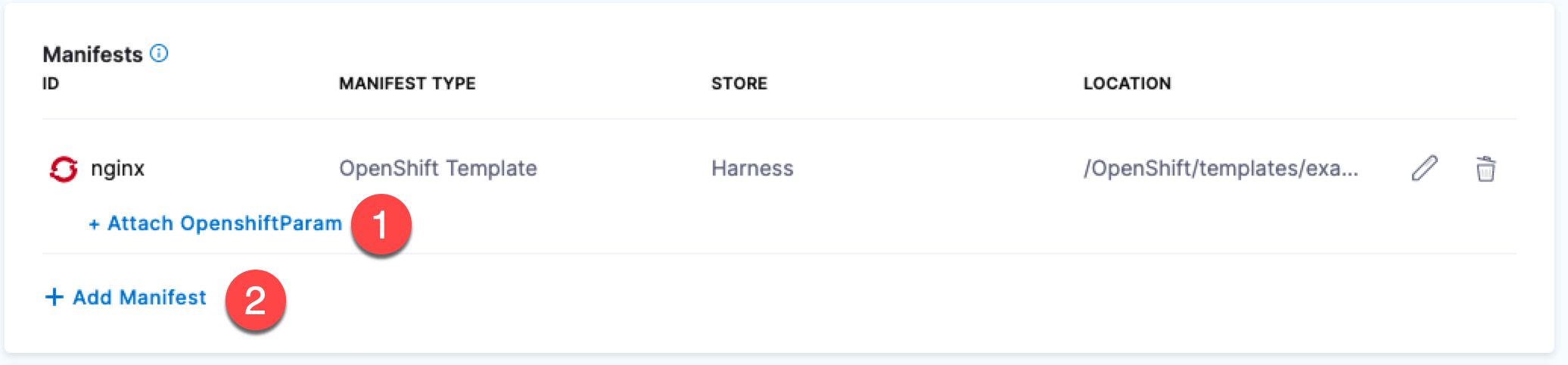

OpenShift Param Files can be added in the following ways:

- Attached to the OpenShift Template you added.

- Added as a separate manifest.

For an overview of OpenShift support, see Using OpenShift with Harness Kubernetes.

Let's look at an example where the OpenShift Param is attached to a template already added:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select the service with the OpenShift template.

- Select Configuration.

- In Manifests, select Attach OpenShift Param.

- In Enter File Path, select where your params file is located.

- Select Submit. The params file is added to Manifests.

You can now see the params file in the OpenShift Template Manifest Details.

Deployment strategy support

In addition to standard workload type support in Harness (see What can I deploy in Kubernetes?), Harness supports DeploymentConfig, Route, and ImageStream across Canary, Blue Green, and Rolling deployment strategies.

Please use apiVersion: apps.openshift.io/v1 and not apiVersion: v1.
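A quick pre-deployment check can catch DeploymentConfig objects that still use the legacy `apiVersion: v1`. This Python sketch assumes your manifests have already been parsed into dictionaries; `check_api_versions` is a hypothetical helper, and it only encodes the one rule stated here (DeploymentConfig must use `apps.openshift.io/v1`).

```python
from typing import Any

# Per this section, DeploymentConfig objects must use apps.openshift.io/v1, not v1.
EXPECTED_API_VERSION = {"DeploymentConfig": "apps.openshift.io/v1"}


def check_api_versions(objects: list[dict[str, Any]]) -> list[str]:
    """Return one message per object whose apiVersion does not match the expected value."""
    problems: list[str] = []
    for obj in objects:
        kind = obj.get("kind")
        expected = EXPECTED_API_VERSION.get(kind)
        if expected and obj.get("apiVersion") != expected:
            name = obj.get("metadata", {}).get("name", "<unnamed>")
            problems.append(f"{kind}/{name}: use apiVersion {expected}, not {obj.get('apiVersion')}")
    return problems


manifests = [
    {"apiVersion": "v1", "kind": "DeploymentConfig", "metadata": {"name": "frontend"}},
    {"apiVersion": "apps.openshift.io/v1", "kind": "DeploymentConfig", "metadata": {"name": "backend"}},
    {"apiVersion": "v1", "kind": "Service", "metadata": {"name": "frontend"}},
]

for message in check_api_versions(manifests):
    print(message)
# DeploymentConfig/frontend: use apiVersion apps.openshift.io/v1, not v1
```

The `Service` object is not flagged because `v1` is the correct group for core Kubernetes kinds; only DeploymentConfig is checked.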

Harness supports list objects

You can leverage Kubernetes list objects as needed without modifying your YAML for Harness.

When you deploy, Harness will render the lists and show all the templated and rendered values in the log.

Harness supports:

- List

- NamespaceList

- ServiceList

- For Kubernetes deployments, these objects are supported for all deployment strategies (Canary, Rolling, Blue/Green).

- For Native Helm, these objects are supported for Rolling deployments.

If you run kubectl api-resources you should see a list of resources, and kubectl explain will work with any of these.
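A list object is a wrapper whose `items` field holds ordinary Kubernetes objects, so expanding a list yields the individual resources that are actually applied. The following Python sketch illustrates that structure, assuming manifests parsed into dictionaries. `expand_lists` is hypothetical and is not how Harness renders lists internally; it also does not handle lists nested inside lists.

```python
from typing import Any

# The list kinds named in this section.
LIST_KINDS = {"List", "NamespaceList", "ServiceList"}


def expand_lists(objects: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Replace each supported list object with the objects in its items field."""
    expanded: list[dict[str, Any]] = []
    for obj in objects:
        if obj.get("kind") in LIST_KINDS:
            expanded.extend(obj.get("items", []))
        else:
            expanded.append(obj)
    return expanded


rendered = [
    {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "team-a"}},
            {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "team-b"}},
        ],
    },
    {"apiVersion": "apps/v1", "kind": "Deployment", "metadata": {"name": "web"}},
]

print([f'{o["kind"]}/{o["metadata"]["name"]}' for o in expand_lists(rendered)])
# ['Namespace/team-a', 'Namespace/team-b', 'Deployment/web']
```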

Important notes

- Make sure that you update your version to apiVersion: apps.openshift.io/v1 and not apiVersion: v1.

- The token does not need to have global read permissions. The token can be scoped to the namespace.

- The Kubernetes containers must be OpenShift-compatible containers. If you are already using OpenShift, then this is already configured. But be aware that OpenShift cannot simply deploy any Kubernetes container. You can get OpenShift images from the following public repos: https://hub.docker.com/u/openshift and https://access.redhat.com/containers.

- Useful articles for setting up a local OpenShift cluster for testing: How To Setup Local OpenShift Origin (OKD) Cluster on CentOS 7, OpenShift Console redirects to 127.0.0.1.

Artifacts

You have two options when referencing the artifacts you want to deploy:

- Add an artifact source to the Harness service and reference it using the Harness expression <+artifacts.primary.image> in the values YAML file.

- Hardcode the artifact into the manifests or values YAML file.

Use the artifact expression

Add the image location to Harness as an artifact in the Artifacts section of the service.

This allows you to reference the image in your values YAML files using the Harness expression <+artifacts.primary.image>.

```yaml
---
image: <+artifacts.primary.image>
```

You cannot use Harness variable expressions in your Kubernetes object manifest files. You can only use Harness variable expressions in values YAML files or Kustomize patch files.
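Because expressions are only resolved in values YAML files and Kustomize patch files, a simple pre-commit style check can catch expressions that slipped into object manifests. This is a minimal Python sketch; `misplaced_expressions` and the file paths are hypothetical, and the caller decides which files count as values or patch files.

```python
import re

# Any Harness expression, such as <+artifacts.primary.image>.
HARNESS_EXPR = re.compile(r"<\+[^>]*>")


def misplaced_expressions(files: dict[str, str], allowed: set[str]) -> dict[str, list[str]]:
    """Map each file that is not allowed to contain expressions to the expressions found in it."""
    report: dict[str, list[str]] = {}
    for path, text in files.items():
        if path in allowed:
            continue  # values YAML and Kustomize patch files may use expressions
        hits = HARNESS_EXPR.findall(text)
        if hits:
            report[path] = hits
    return report


files = {
    "templates/deployment.yaml": "image: <+artifacts.primary.image>\nreplicas: {{ .Values.replicas }}",
    "values.yaml": "image: <+artifacts.primary.image>\nreplicas: 2",
}

print(misplaced_expressions(files, allowed={"values.yaml"}))
# {'templates/deployment.yaml': ['<+artifacts.primary.image>']}
```

The fix for a flagged manifest is to move the expression into the values file and reference it from the manifest with Go templating, as `replicas` already does here.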

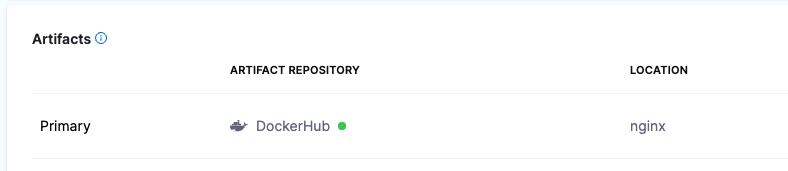

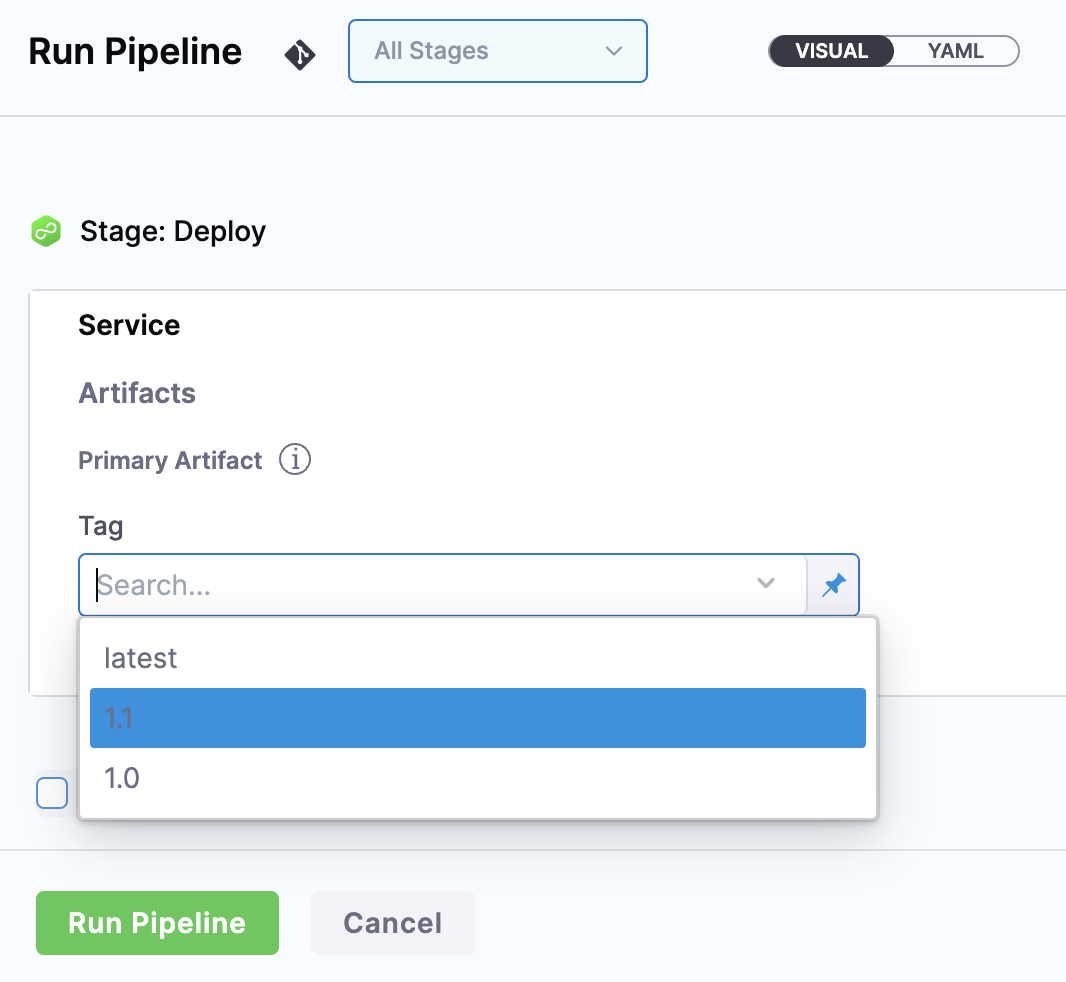

When you select the artifact repo for the artifact, like a Docker Hub repo, you specify the artifact and tag/version to use.

You can select a specific tag/version, use a runtime input so that you are prompted for the tag/version when you run the pipeline, or use a Harness variable expression to pass in the tag/version at execution.

Here's an example where a runtime input is used and you select which image version/tag to deploy.

With a Harness artifact, you can template your manifests, detaching them from a hardcoded location. This makes your manifests reusable and dynamic.
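When an artifact uses runtime inputs, it helps to know exactly which fields you will be prompted for. This Python sketch walks the artifacts section of the Kubernetes service YAML shown earlier (converted to a dictionary) and lists every field whose value is `<+input>`. `runtime_inputs` is a hypothetical helper, and it only matches the plain `<+input>` value, not any variants of it.

```python
from typing import Any

RUNTIME_INPUT = "<+input>"


def runtime_inputs(node: Any, path: str = "") -> list[str]:
    """Return dotted paths of every field whose value is exactly <+input>."""
    found: list[str] = []
    if isinstance(node, dict):
        for key, value in node.items():
            found += runtime_inputs(value, f"{path}.{key}" if path else key)
    elif isinstance(node, list):
        for index, item in enumerate(node):
            found += runtime_inputs(item, f"{path}[{index}]")
    elif node == RUNTIME_INPUT:
        found.append(path)
    return found


# The artifacts section of the Kubernetes service YAML example, as a dict.
artifacts = {
    "primary": {
        "primaryArtifactRef": "<+input>",
        "sources": [
            {
                "spec": {"connectorRef": "Docker_Hub_with_Pwd", "imagePath": "library/nginx", "tag": "<+input>"},
                "identifier": "nginx",
                "type": "DockerRegistry",
            }
        ],
    }
}

print(runtime_inputs(artifacts))
# ['primary.primaryArtifactRef', 'primary.sources[0].spec.tag']
```

Here you would be prompted for two values at run time: which artifact source to use and which image tag to deploy.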

Hardcode the artifact

If a Docker image location is hardcoded in your Kubernetes manifest (for example, image: nginx:1.14.2), then you can simply add the manifest to Harness in Manifests and Kubernetes will pull the image during deployment.

When you hardcode the artifact in your manifests, any artifacts added to your Harness service are ignored.

Harness Artifact Registry

Use artifacts from Harness Artifact Registry

Harness Artifact Registry (HAR) is a fully managed artifact repository that lets you store and manage artifacts directly in your Harness account. HAR supports both container images (Docker) and packaged artifacts (Maven, npm, NuGet, generic formats), providing secure, scalable storage that integrates seamlessly with Harness CD pipelines.

HAR is natively integrated with Harness CD and does not require a separate connector. However, you need a valid HAR license to use this feature. Contact Harness Support for licensing information.

- YAML

- API

- Harness Manager

Service using HAR artifact YAML:

```yaml
service:
  name: k8s_service
  identifier: k8s_service
  serviceDefinition:
    type: Kubernetes
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates/deployment.yaml
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - identifier: myartifact
              type: Har
              spec:
                registryRef: dev
                type: docker
                spec:
                  imagePath: my-app
                  tag: <+input>
                  digest: ""
```

Create a service with an artifact source that uses HAR using the Create Services API.

For step-by-step instructions on adding a Harness Artifact Registry artifact source in the Harness Manager UI, go to Harness Artifact Registry in the artifact sources documentation.

For more information on HAR, go to Harness Artifact Registry documentation.

Docker

Use artifacts in any Docker registry

- YAML

- API

- Terraform Provider

- Pipeline Studio

To use a Docker artifact, create or select a Harness connector to your Docker registry, use that connector in your Harness service, and reference the artifact to deploy.

Docker connector YAML:

```yaml
connector:
  name: Docker Hub with Pwd
  identifier: Docker_Hub_with_Pwd
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: DockerRegistry
  spec:
    dockerRegistryUrl: https://index.docker.io/v2/
    providerType: DockerHub
    auth:
      type: UsernamePassword
      spec:
        username: johndoe
        passwordRef: Docker_Hub_Pwd
    executeOnDelegate: false
```

Service using Docker artifact YAML:

```yaml
service:
  name: Example K8s2
  identifier: Example_K8s2
  serviceDefinition:
    type: Kubernetes
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates/deployment.yaml
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: Docker_Hub_with_Pwd
                imagePath: library/nginx
                tag: stable-perl
              identifier: myimage
              type: DockerRegistry
  gitOpsEnabled: false
```

Create the Docker connector using the Create a Connector API.

Docker connector example

```shell
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=123456' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.123456.123456' \
  --data-raw 'connector:
  name: dockerhub
  identifier: dockerhub
  description: ""
  tags: {}
  orgIdentifier: default
  projectIdentifier: APISample
  type: DockerRegistry
  spec:
    dockerRegistryUrl: https://index.docker.io/v2/
    providerType: DockerHub
    auth:
      type: Anonymous'
```

Use the Create Services API to create a service with an artifact source that uses the connector.

For the Terraform Provider Docker connector resource, go to harness_platform_connector_docker.

Docker connector example

```hcl
# credentials anonymous
resource "harness_platform_connector_docker" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  type               = "DockerHub"
  url                = "https://hub.docker.com"
  delegate_selectors = ["harness-delegate"]
}

# credentials username password
resource "harness_platform_connector_docker" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  type               = "DockerHub"
  url                = "https://hub.docker.com"
  delegate_selectors = ["harness-delegate"]

  credentials {
    username     = "admin"
    password_ref = "account.secret_id"
  }
}
```

For the Terraform Provider service resource, go to harness_platform_service.

Service example

```hcl
resource "harness_platform_service" "example" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  org_id      = "org_id"
  project_id  = "project_id"

  ## SERVICE V2 UPDATE
  ## We now take in a YAML that can define the service definition for a given Service
  ## It isn't mandatory for Service creation
  ## It is mandatory for Service use in a pipeline
  yaml = <<-EOT
    service:
      name: Example K8s2
      identifier: Example_K8s2
      serviceDefinition:
        type: Kubernetes
        spec:
          manifests:
            - manifest:
                identifier: myapp
                type: K8sManifest
                spec:
                  store:
                    type: Harness
                    spec:
                      files:
                        - /Templates/deployment.yaml
                  valuesPaths:
                    - /values.yaml
                  skipResourceVersioning: false
          artifacts:
            primary:
              primaryArtifactRef: <+input>
              sources:
                - spec:
                    connectorRef: Docker_Hub_with_Pwd
                    imagePath: library/nginx
                    tag: stable-perl
                  identifier: myimage
                  type: DockerRegistry
      gitOpsEnabled: false
  EOT
}
```

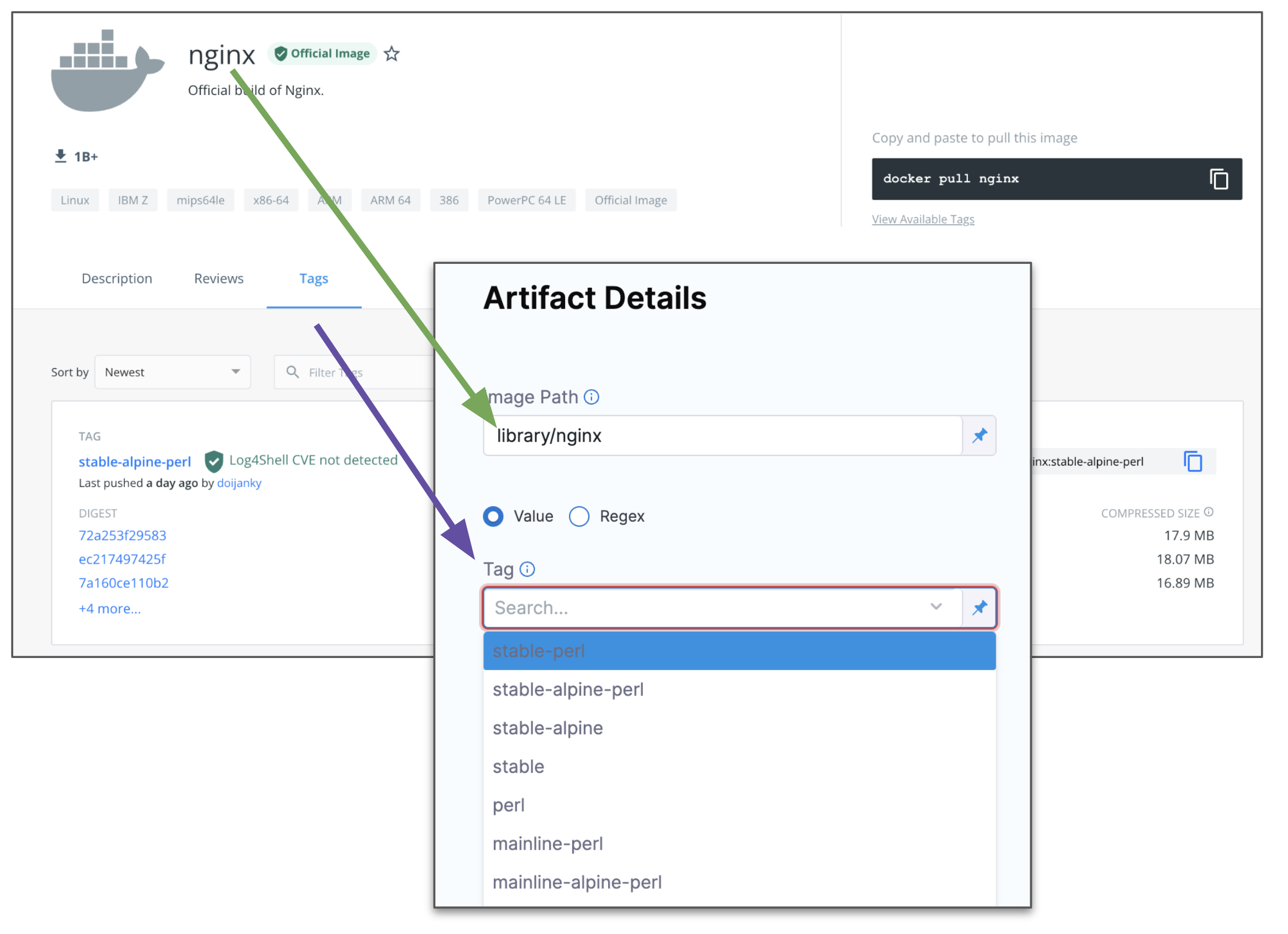

To add an artifact from a Docker registry, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Artifacts, select Add Artifact Source.

- In Select Artifact Repository Type, select the registry where your Docker artifact is hosted. For this example, select Docker Registry, and then select Continue.

- Select or create a Docker Registry Connector.

- Select Continue.

- In Artifact Source Name, enter a name that identifies your artifact.

- In Image path, enter the name of the artifact you want to deploy, such as library/nginx or jsmith/privateimage. Official images in public repos often need the library namespace, for example library/tomcat. Wildcards are not supported.

- In Tag, enter or select the Docker image tag for the image.

- Select Submit.

The artifact is added to the Service Definition.
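The Image path rules above can be captured in a small POSIX shell sketch that you might run before filling in the field. The function name normalize_dockerhub_image_path is hypothetical and not part of Harness; it assumes that a bare name (no slash) refers to a Docker Hub official image, which lives under the library/ namespace, and it rejects wildcards because Harness does not support them.

```shell
#!/bin/sh
# Hypothetical helper (not a Harness API): normalize a Docker Hub image path
# for the Image path field of a Docker Registry artifact source.
normalize_dockerhub_image_path() (
  path="$1"
  case "$path" in
    "")
      echo "error: image path is empty" >&2
      exit 1 ;;
    *'*'* | *'?'*)
      # Harness does not support wildcards in the image path.
      echo "error: wildcards are not supported: $path" >&2
      exit 1 ;;
    */*)
      # Already namespaced, for example library/nginx or jsmith/privateimage.
      printf '%s\n' "$path" ;;
    *)
      # Assumption: a bare name refers to a Docker Hub official image,
      # which lives under the "library/" namespace.
      printf 'library/%s\n' "$path" ;;
  esac
)

# Usage examples
normalize_dockerhub_image_path tomcat               # prints library/tomcat
normalize_dockerhub_image_path jsmith/privateimage  # prints jsmith/privateimage
```

The function body runs in a subshell, so exit only ends the function call and its variables do not leak into your shell.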

Important notes

- When pulling Docker images, Harness is subject to the limits of the Docker registry, for example Docker Hub rate limits.

- The maximum number of artifact image tags that Harness fetches is 10,000.

Google Container Registry (GCR)

Google Container Registry (GCR) is being deprecated. For more details, refer to the Deprecation Notice.

Use GCR artifacts

You connect to GCR using a Harness GCP Connector. For details on all the GCR requirements for the GCP Connector, see Google Cloud Platform (GCP) Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Pipeline Studio

To use a GCR artifact, create or select a Harness GCP Connector that connects to your GCR repo, use that connector in your Harness service, and reference the artifact to deploy. This example uses a Harness Delegate installed in GCP for credentials.

GCP connector YAML

```yaml
connector:
  name: GCR
  identifier: GCR
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
```

Service using GCR artifact YAML

```yaml
service:
  name: Google Artifact
  identifier: Google_Artifact
  serviceDefinition:
    type: Kubernetes
    spec:
      manifests:
        - manifest:
            identifier: manifests
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - account:/Templates
              valuesPaths:
                - account:/values.yaml
              skipResourceVersioning: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: GCR
                imagePath: docs-play/todolist-sample
                tag: <+input>
                registryHostname: gcr.io
              identifier: myapp
              type: Gcr
  gitOpsEnabled: false
```

Create the GCR connector using the Create a Connector API.

GCR connector example

```shell
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: GCRexample
  identifier: GCRexample
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true'
```

Use the Create Services API to create a service with an artifact source that uses the connector.

For the Terraform Provider GCP connector resource, go to harness_platform_connector_gcp.

GCP connector example

```hcl
# Credential manual
resource "harness_platform_connector_gcp" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_gcp" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}
```

For the Terraform Provider service resource, go to harness_platform_service.


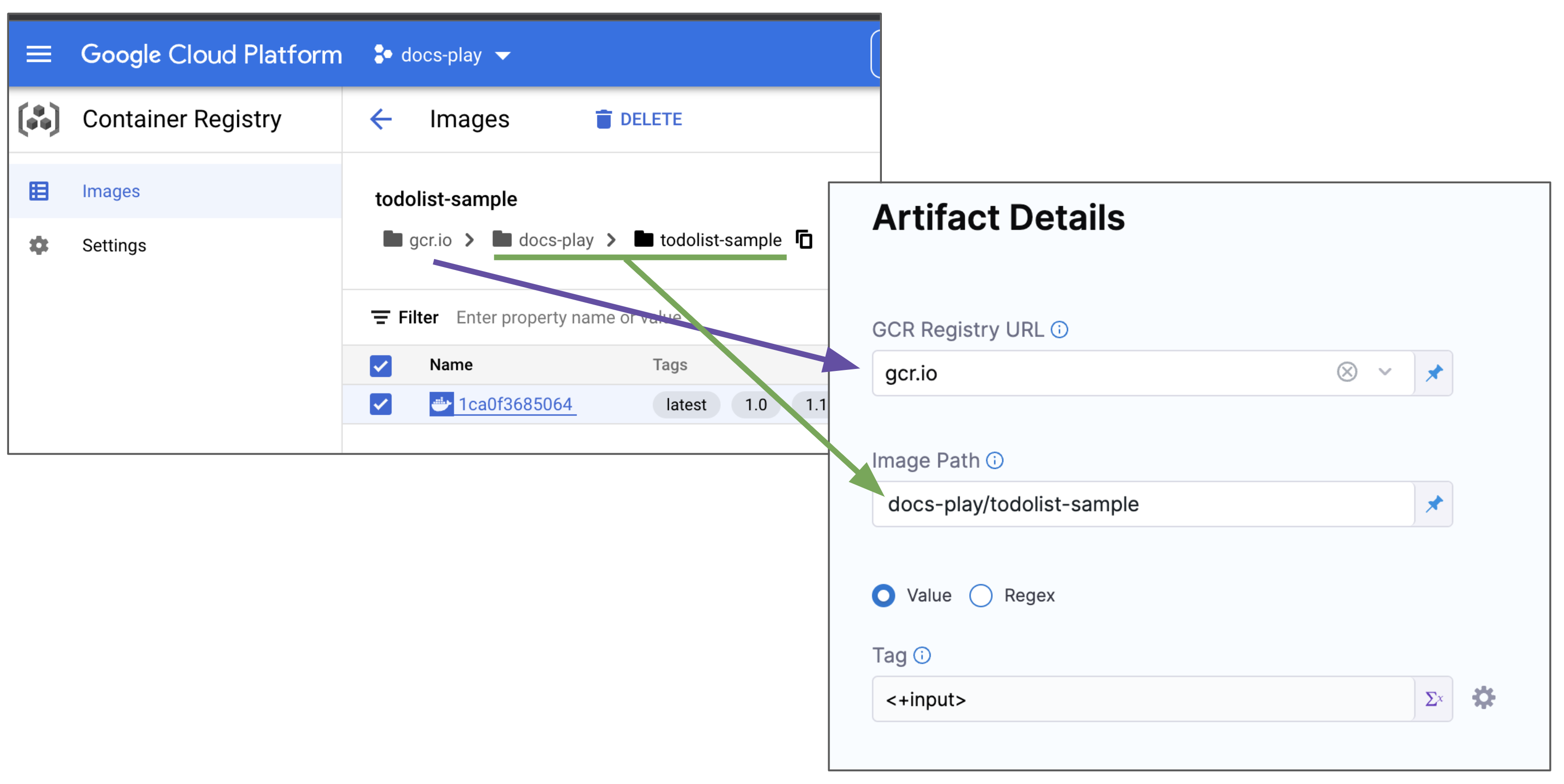

To add an artifact from GCR, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Artifacts, select Add Artifact Source.

- In Select Artifact Repository Type, select GCR, and then select Continue.

- In GCR Repository, select or create a Google Cloud Platform (GCP) Connector that connects to the GCP account where the GCR registry is located.

- Select Continue.

- In Artifact Source Name, enter a name for the artifact.

- In GCR Registry URL, select the GCR registry host name, for example gcr.io.

- In Image Path, enter the name of the artifact you want to deploy. The path must start with the Id of the GCP project that contains the artifact, for example myproject-id/image-name.

- In Tag, enter or select the Docker image tag for the image, or select a runtime input or expression.

If you use runtime input, when you deploy the pipeline, Harness pulls the list of tags from the repo and prompts you to select one.

- Select Submit.

The artifact is added to the Service Definition.
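The GCR Registry URL, Image Path, and Tag fields together describe one image reference of the form host/project-id/image-name:tag. The following is a minimal POSIX shell sketch, assuming the standard Docker reference layout; the function name gcr_image_ref is hypothetical and not part of Harness. It also rejects image paths that do not start with a project Id segment.

```shell
#!/bin/sh
# Hypothetical helper: build the full GCR image reference that corresponds to
# the GCR Registry URL, Image Path, and Tag fields of a Gcr artifact source.
gcr_image_ref() (
  host="$1"        # registryHostname, for example gcr.io
  image_path="$2"  # imagePath, must start with the GCP project Id
  tag="$3"         # tag, for example v1.0
  case "$image_path" in
    /* | */ | "") valid=no ;;   # empty project Id or image name
    */*) valid=yes ;;            # looks like project-id/image-name
    *) valid=no ;;               # no project Id prefix
  esac
  if [ "$valid" != yes ]; then
    echo "error: image path must look like project-id/image-name: $image_path" >&2
    exit 1
  fi
  printf '%s/%s:%s\n' "$host" "$image_path" "$tag"
)

# Usage example using the values from the service YAML above.
gcr_image_ref gcr.io docs-play/todolist-sample latest
# prints gcr.io/docs-play/todolist-sample:latest
```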

Permissions

For Google Container Registry (GCR), the following roles are required:

- Storage Object Viewer (roles/storage.objectViewer)

- Storage Object Admin (roles/storage.objectAdmin)

For more information, go to the GCP documentation about Cloud IAM roles for Cloud Storage.

Ensure the Harness Delegate you have installed can reach storage.cloud.google.com and your GCR registry host name, for example gcr.io.

Google Artifact Registry

Use Google Artifact Registry artifacts

You connect to Google Artifact Registry using a Harness GCP Connector.

For details on all the Google Artifact Registry requirements for the GCP Connector, see Google Cloud Platform (GCP) Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Pipeline Studio

This example uses a Harness Delegate installed in GCP for credentials.

Google Artifact Registry connector YAML

```yaml
connector:
  name: Google Artifact Registry
  identifier: Google_Artifact_Registry
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
```

Service using Google Artifact Registry artifact YAML

```yaml
service:
  name: Google Artifact Registry
  identifier: Google_Artifact_Registry
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - identifier: myapp
              spec:
                connectorRef: Google_Artifact_Registry
                repositoryType: docker
                project: docs-play
                region: us-central1
                repositoryName: quickstart-docker-repo
                package: quickstart-docker-repo
                version: <+input>
              type: GoogleArtifactRegistry
    type: Kubernetes
```

Create the Google Artifact Registry connector using the Create a Connector API.

Google Artifact Registry connector example

```shell
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: Google Artifact Registry
  identifier: Google_Artifact_Registry
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Gcp
  spec:
    credential:
      type: InheritFromDelegate
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true'
```

Use the Create Services API to create a service with an artifact source that uses the connector.

For the Terraform Provider GCP connector resource, go to harness_platform_connector_gcp.

GCP connector example

```hcl
# Credential manual
resource "harness_platform_connector_gcp" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_gcp" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}
```

For the Terraform Provider service resource, go to harness_platform_service.


To add an artifact from Google Artifact Registry, do the following:

-

In your project, in CD (Deployments), select Services.

-

Select Manage Services, and then select New Service.

-

Enter a name for the service and select Save.

-

Select Configuration.

-

In Service Definition, select Kubernetes.

-

In Artifacts, select Add Artifact Source.

-

In Artifact Repository Type, select Google Artifact Registry, and then select Continue.

-

In GCP Connector, select or create a Google Cloud Platform (GCP) Connector that connects to the GCP account where the Google Artifact Registry is located.

-

Select Continue.

-

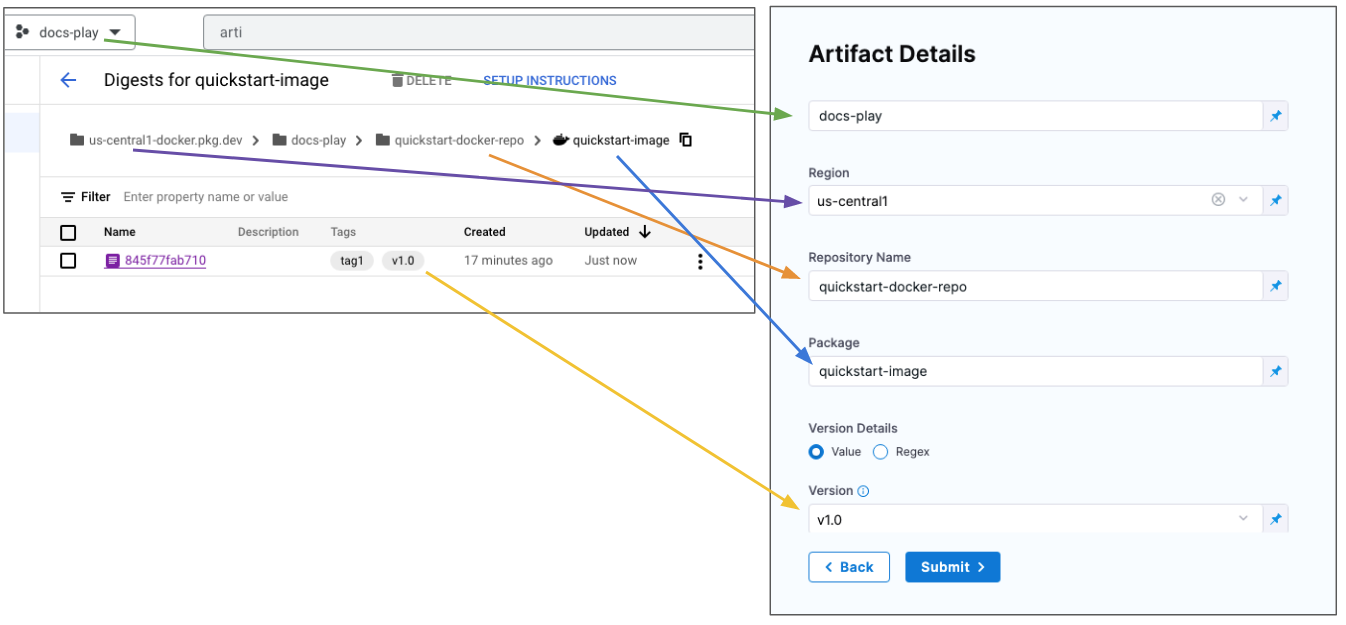

In Artifact Details, you are basically creating the pull command. For example:

docker pull us-central1-docker.pkg.dev/docs-play/quickstart-docker-repo/quickstart-image:v1.0 -

In Artifact Source Name, enter a name for the artifact.

-

In Repository Type, select the format of the artifact.

-

In Project, enter the Id of the GCP project.

-

In Region, select the region where the repo is located.

-

In Repository Name, enter the name of the repo.

-

In Package, enter the artifact name.

-

In Version Details, select Value or Regex.

-

In Version, enter or select the Docker image tag for the image or select runtime input or expression.

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.note

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.noteIf you used Fixed Value in Version and Harness is not able to fetch the image tags, ensure that the GCP service account key used in the GCP connector credentials, or in the service account used to install the Harness Delegate, has the required permissions. See the Permissions tab in this documentation.

-

Click Submit. The Artifact is added to the Service Definition.
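The Artifact Details fields map onto the parts of a Google Artifact Registry image reference. The following is a minimal POSIX shell sketch, not part of Harness, that assembles the reference from the Region, Project, Repository Name, Package, and Version values; the function name gar_docker_image_ref is hypothetical, and it assumes a Docker-format repository, whose host is region-docker.pkg.dev.

```shell
#!/bin/sh
# Hypothetical helper: assemble the Docker image reference described by the
# Artifact Details fields of a Google Artifact Registry (docker) artifact source.
# Format: <region>-docker.pkg.dev/<project>/<repository>/<package>:<version>
gar_docker_image_ref() (
  if [ "$#" -ne 5 ]; then
    echo "usage: gar_docker_image_ref REGION PROJECT REPOSITORY PACKAGE VERSION" >&2
    exit 1
  fi
  printf '%s-docker.pkg.dev/%s/%s/%s:%s\n' "$1" "$2" "$3" "$4" "$5"
)

# Reproduces the pull command from the Artifact Details step.
echo "docker pull $(gar_docker_image_ref us-central1 docs-play quickstart-docker-repo quickstart-image v1.0)"
# prints docker pull us-central1-docker.pkg.dev/docs-play/quickstart-docker-repo/quickstart-image:v1.0
```

Running the printed docker pull command from a machine with access to the repo is one way to confirm that the values you entered resolve to a real image before you run the pipeline.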

Permissions

For Google Artifact Registry, the following roles are required:

- Artifact Registry Reader

- Artifact Registry Writer

For more information, go to the GCP documentation Configure roles and permissions.

Ensure the Harness Delegate you have installed can reach your Google Artifact Registry region, for example us-central1.

Amazon Elastic Container Registry (ECR)

Use ECR artifacts

You connect to ECR using a Harness AWS connector. For details on all the ECR requirements for the AWS connector, see AWS Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Pipeline Studio

This example uses manual AWS credentials (an access key and a secret key stored as a Harness secret) and connects through delegates that match the doc-immut selector.

ECR connector YAML

```yaml
connector:
  name: ECR
  identifier: ECR
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKey: xxxxx
        secretKeyRef: secretaccesskey
      region: us-east-1
    delegateSelectors:
      - doc-immut
    executeOnDelegate: true
```

Service using ECR artifact YAML

```yaml
service:
  name: ECR
  identifier: ECR
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: ECR
                imagePath: todolist-sample
                tag: "1.0"
                region: us-east-1
              identifier: myapp
              type: Ecr
    type: Kubernetes
```

Create the ECR connector using the Create a Connector API.

ECR connector example

```shell
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: ECR
  identifier: ECR
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Aws
  spec:
    credential:
      type: ManualConfig
      spec:
        accessKey: xxxxx
        secretKeyRef: secretaccesskey
      region: us-east-1
    delegateSelectors:
      - doc-immut
    executeOnDelegate: true'
```

Use the Create Services API to create a service with an artifact source that uses the connector.

For the Terraform Provider AWS connector resource, go to harness_platform_connector_aws.

AWS connector example

```hcl
# Credential manual
resource "harness_platform_connector_aws" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  manual {
    secret_key_ref     = "account.secret_id"
    delegate_selectors = ["harness-delegate"]
  }
}

# Credentials inherit_from_delegate
resource "harness_platform_connector_aws" "test" {
  identifier  = "identifier"
  name        = "name"
  description = "test"
  tags        = ["foo:bar"]

  inherit_from_delegate {
    delegate_selectors = ["harness-delegate"]
  }
}
```

For the Terraform Provider service resource, go to harness_platform_service.


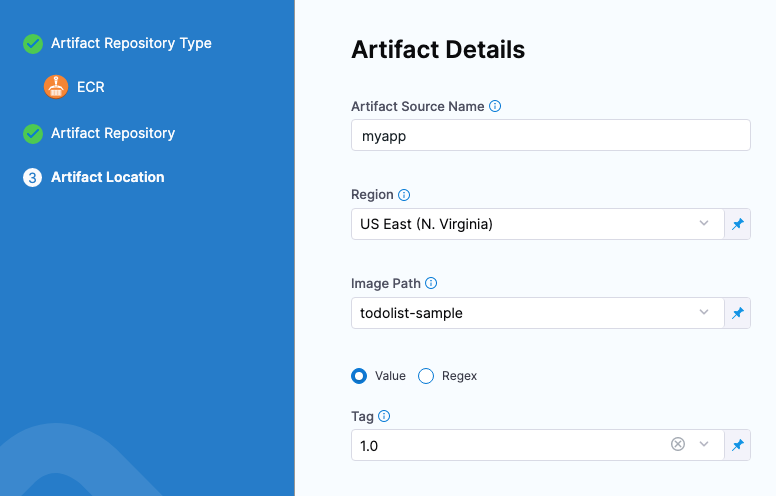

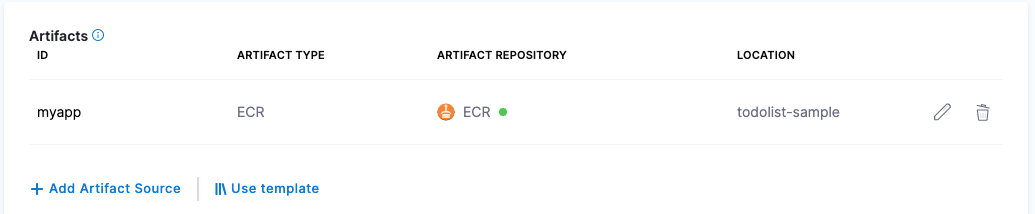

To add an artifact from ECR, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Artifacts, select Add Artifact Source.

- In Artifact Repository Type, select ECR, and then select Continue.

- In ECR Repository, select or create an AWS connector that connects to the AWS account where the ECR registry is located.

- Select Continue.

- In Artifact Details, in Region, select the region where the artifact source is located.

- In Image Path, enter the name of the artifact you want to deploy.

- In Tag, enter or select the Docker image tag for the image.

If you use runtime input, when you deploy the pipeline, Harness pulls the list of tags from the repo and prompts you to select one.

- Select Submit.

The artifact is added to the Service Definition.
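The Region, Image Path, and Tag fields correspond to parts of the ECR image URI, whose host also contains your AWS account ID. The following is a minimal POSIX shell sketch for building that URI; the function name ecr_image_ref is hypothetical, and the 12-digit account ID is an assumption you supply yourself because it is not a field in the Harness artifact source.

```shell
#!/bin/sh
# Hypothetical helper: build the ECR image URI for an Ecr artifact source.
# Assumption: you supply the 12-digit AWS account ID that owns the registry.
ecr_image_ref() (
  account_id="$1"  # for example 123456789012
  region="$2"      # region field, for example us-east-1
  image_path="$3"  # imagePath field, for example todolist-sample
  tag="$4"         # tag field, for example 1.0
  case "$account_id" in
    "" | *[!0-9]*)
      echo "error: AWS account ID must contain only digits: $account_id" >&2
      exit 1 ;;
  esac
  if [ "${#account_id}" -ne 12 ]; then
    echo "error: AWS account ID must be 12 digits: $account_id" >&2
    exit 1
  fi
  printf '%s.dkr.ecr.%s.amazonaws.com/%s:%s\n' "$account_id" "$region" "$image_path" "$tag"
)

# Usage example with a placeholder account ID and the values from the service YAML.
ecr_image_ref 123456789012 us-east-1 todolist-sample 1.0
# prints 123456789012.dkr.ecr.us-east-1.amazonaws.com/todolist-sample:1.0
```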

Permissions

Ensure that the AWS IAM user account you use in the AWS Connector has the following policy.

Pull from ECR policy

Policy name: AmazonEC2ContainerRegistryReadOnly

Policy ARN: arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly

Description: Provides read-only access to Amazon EC2 Container Registry repositories.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecr:GetAuthorizationToken",
        "ecr:BatchCheckLayerAvailability",
        "ecr:GetDownloadUrlForLayer",
        "ecr:GetRepositoryPolicy",
        "ecr:DescribeRepositories",
        "ecr:ListImages",
        "ecr:DescribeImages",
        "ecr:BatchGetImage"
      ],
      "Resource": "*"
    }
  ]
}
```
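If you attach a custom policy instead of the managed AmazonEC2ContainerRegistryReadOnly policy, a quick text check can confirm that the policy at least names every action listed in the ECR policy. The following POSIX shell sketch is hypothetical (the function name check_ecr_pull_actions is illustrative). It only searches the JSON text and does not evaluate IAM semantics such as Deny statements, wildcards like ecr:*, or resource scoping, so treat a pass as a hint, not proof.

```shell
#!/bin/sh
# Hypothetical pre-flight check: report which ECR pull actions from the
# AmazonEC2ContainerRegistryReadOnly policy are not named in a policy file.
# Plain text search only: ignores Deny statements, wildcards, and Resource scoping.
check_ecr_pull_actions() (
  policy_file="$1"
  missing=0
  for action in \
    ecr:GetAuthorizationToken \
    ecr:BatchCheckLayerAvailability \
    ecr:GetDownloadUrlForLayer \
    ecr:GetRepositoryPolicy \
    ecr:DescribeRepositories \
    ecr:ListImages \
    ecr:DescribeImages \
    ecr:BatchGetImage
  do
    # Look for the quoted action name, for example "ecr:ListImages".
    if ! grep -q "\"$action\"" "$policy_file"; then
      echo "missing: $action" >&2
      missing=1
    fi
  done
  exit "$missing"
)

# Usage (my-ecr-policy.json is a placeholder file name):
#   check_ecr_pull_actions my-ecr-policy.json && echo "all ECR pull actions present"
```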

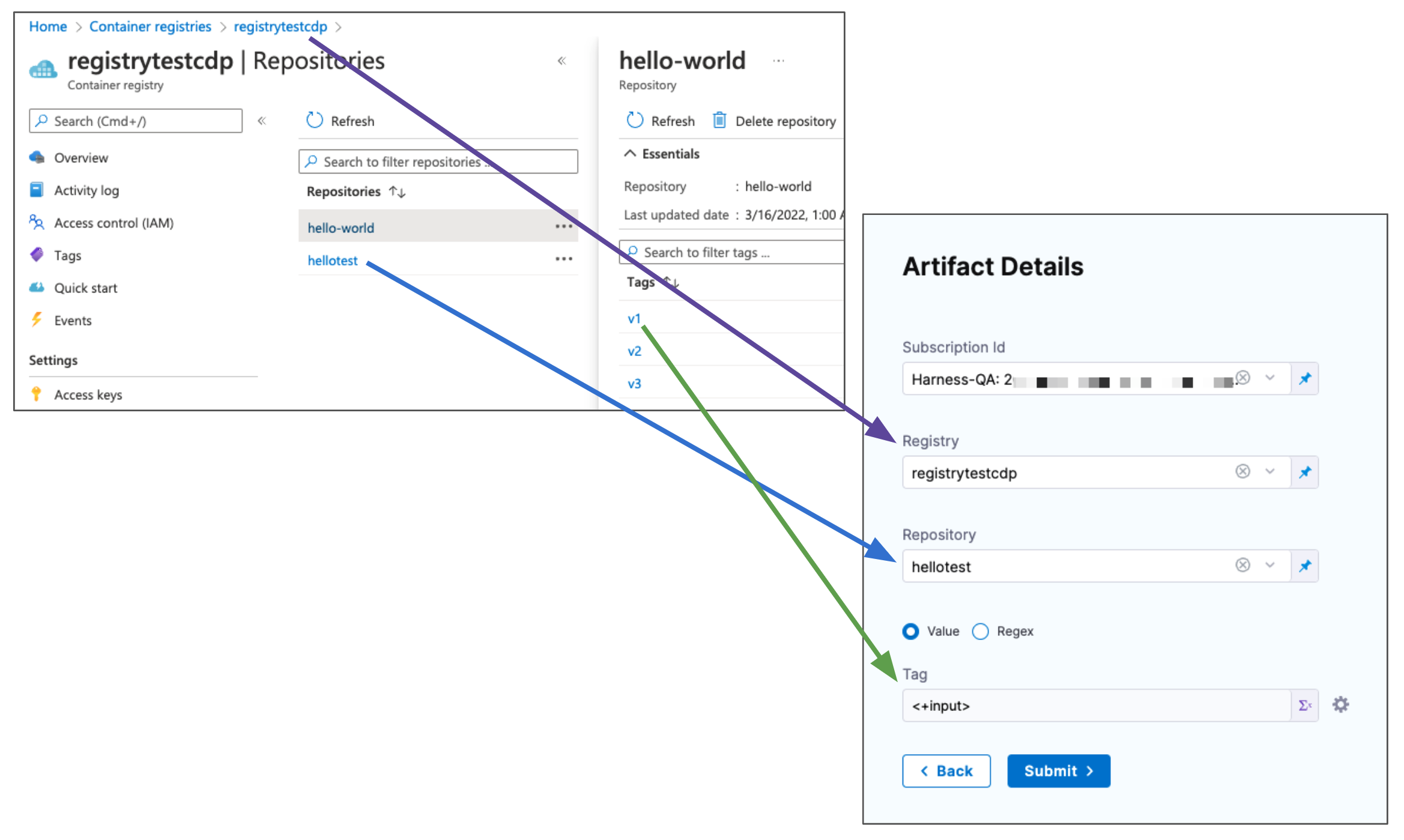

Azure Container Registry (ACR)

Use ACR artifacts

You connect to ACR using a Harness Azure Connector. For details on all the Azure requirements for the Azure Connector, see Add a Microsoft Azure cloud connector.

- YAML

- API

- Terraform Provider

- Pipeline Studio

This example uses an Azure application (client) Id, tenant Id, and client secret (the ManualConfig credential type) for credentials.

Azure connector for ACR YAML

```yaml
connector:
  name: ACR-docs
  identifier: ACRdocs
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Azure
  spec:
    credential:
      type: ManualConfig
      spec:
        applicationId: xxxxx-xxxx-xxxx-xxxx-xxxxx
        tenantId: xxxxx-xxxx-xxxx-xxxx-xxxxx
        auth:
          type: Secret
          spec:
            secretRef: acrvalue
    azureEnvironmentType: AZURE
    executeOnDelegate: false
```

Service using ACR artifact YAML

```yaml
service:
  name: Azure with ACR
  identifier: Azure
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: ACRdocs
                tag: <+input>
                subscriptionId: <+input>
                registry: <+input>
                repository: <+input>
              identifier: myapp
              type: Acr
    type: Kubernetes
```

Create the ACR connector using the Create a Connector API.

ACR connector example

```shell
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: ACR-docs
  identifier: ACRdocs
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Azure
  spec:
    credential:
      type: ManualConfig
      spec:
        applicationId: xxxxx-xxxx-xxxx-xxxx-xxxxx
        tenantId: xxxxx-xxxx-xxxx-xxxx-xxxxx
        auth:
          type: Secret
          spec:
            secretRef: acrvalue
    azureEnvironmentType: AZURE
    executeOnDelegate: false'
```

Use the Create Services API to create a service with an artifact source that uses the connector.

For the Terraform Provider ACR connector resource, go to harness_platform_connector_azure_cloud_provider.

ACR connector example

```hcl
resource "harness_platform_connector_azure_cloud_provider" "manual_config_secret" {
  identifier  = "identifier"
  name        = "name"
  description = "example"
  tags        = ["foo:bar"]

  credentials {
    type = "ManualConfig"
    azure_manual_details {
      application_id = "application_id"
      tenant_id      = "tenant_id"
      auth {
        type = "Secret"
        azure_client_secret_key {
          secret_ref = "account.${harness_platform_secret_text.test.id}"
        }
      }
    }
  }

  azure_environment_type = "AZURE"
  delegate_selectors     = ["harness-delegate"]
}

resource "harness_platform_connector_azure_cloud_provider" "manual_config_certificate" {
  identifier  = "identifier"
  name        = "name"
  description = "example"
  tags        = ["foo:bar"]

  credentials {
    type = "ManualConfig"
    azure_manual_details {
      application_id = "application_id"
      tenant_id      = "tenant_id"
      auth {
        type = "Certificate"
        azure_client_key_cert {
          certificate_ref = "account.${harness_platform_secret_text.test.id}"
        }
      }
    }
  }

  azure_environment_type = "AZURE"
  delegate_selectors     = ["harness-delegate"]
}

resource "harness_platform_connector_azure_cloud_provider" "inherit_from_delegate_user_assigned_managed_identity" {
  identifier  = "identifier"
  name        = "name"
  description = "example"
  tags        = ["foo:bar"]

  credentials {
    type = "InheritFromDelegate"
    azure_inherit_from_delegate_details {
      auth {
        azure_msi_auth_ua {
          client_id = "client_id"
        }
        type = "UserAssignedManagedIdentity"
      }
    }
  }

  azure_environment_type = "AZURE"
  delegate_selectors     = ["harness-delegate"]
}

resource "harness_platform_connector_azure_cloud_provider" "inherit_from_delegate_system_assigned_managed_identity" {
  identifier  = "identifier"
  name        = "name"
  description = "example"
  tags        = ["foo:bar"]

  credentials {
    type = "InheritFromDelegate"
    azure_inherit_from_delegate_details {
      auth {
        type = "SystemAssignedManagedIdentity"
      }
    }
  }

  azure_environment_type = "AZURE"
  delegate_selectors     = ["harness-delegate"]
}
```

For the Terraform Provider service resource, go to harness_platform_service.


To add an artifact from ACR, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Artifacts, select Add Artifact Source.

- In Artifact Repository Type, select ACR, and then select Continue.

- In ACR Repository, select or create an Azure Connector that connects to the Azure account where the ACR registry is located.

- Select Continue.

- In Artifact Details, in Subscription Id, select the Subscription Id where the artifact source is located.

- In Registry, select the ACR registry to use.

- In Repository, select the repo to use.

- In Tag, enter or select the tag for the image.

If you use runtime input, when you deploy the pipeline, Harness pulls the list of tags from the repo and prompts you to select one.

- Select Submit.

The artifact is added to the Service Definition.
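The Registry, Repository, and Tag fields combine into an ACR image reference. The following is a minimal POSIX shell sketch, assuming the Registry field holds the registry name and that the registry uses the default Azure public-cloud login server name.azurecr.io (other Azure clouds use different suffixes); the function name acr_image_ref and the sample values are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build the image reference for an Acr artifact source.
# Assumption: Azure public cloud, where the login server is <registry>.azurecr.io.
acr_image_ref() (
  registry="$1"    # registry field, for example myregistry
  repository="$2"  # repository field, for example todolist
  tag="$3"         # tag field, for example v2
  case "$registry" in
    *.azurecr.io) login_server="$registry" ;;        # already a login server
    *)            login_server="$registry.azurecr.io" ;;
  esac
  printf '%s/%s:%s\n' "$login_server" "$repository" "$tag"
)

# Usage example with placeholder values.
acr_image_ref myregistry todolist v2
# prints myregistry.azurecr.io/todolist:v2
```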

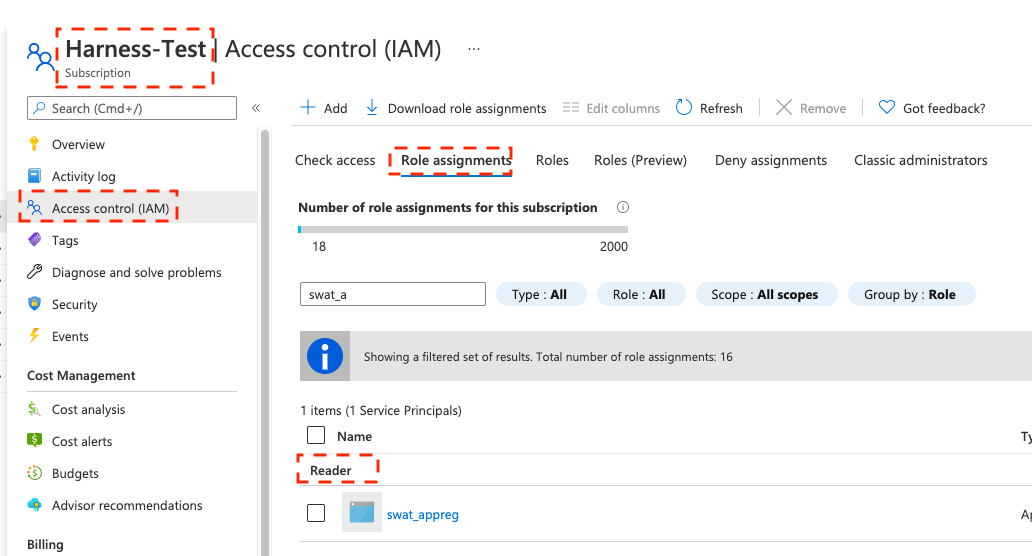

Permissions

The Harness Azure connectors that you'll use to connect Harness to ACR must have the Reader role, at minimum. You can also use a custom role that includes the permissions of the Reader role.

Reader role information

The Reader role must be assigned at the Subscription or Resource Group level that is used by the Application (Client) Id that you'll use in the Azure connector's settings. The application must have permission to list all container registries.

Make sure you:

Custom role information

The following permissions (actions) are necessary for any Service Principal and/or Managed Identity user, regardless of whether you are using Kubernetes RBAC or Azure RBAC:

- Microsoft.ContainerRegistry/registries/read

- Microsoft.ContainerRegistry/registries/builds/read

- Microsoft.ContainerRegistry/registries/metadata/read

- Microsoft.ContainerRegistry/registries/pull/read

- Microsoft.ContainerService/managedClusters/read

- Microsoft.ContainerService/managedClusters/listClusterUserCredential/action

- Microsoft.Resources/subscriptions/resourceGroups/read

For Helm deployments, the version of Helm must be >= 3.2.0. The Harness HELM_VERSION_3_8_0 feature flag must be activated.

You can't use Pod Assigned Managed Identity and System Assigned Managed Identity for the same cluster.

The following JSON sample creates a custom role with the required permissions. To use this sample, replace xxxx with the role name, subscription Id, and resource group Id.

```json
{
  "id": "/subscriptions/xxxx/providers/Microsoft.Authorization/roleDefinitions/xxxx",
  "properties": {
    "roleName": "xxxx",
    "description": "",
    "assignableScopes": ["/subscriptions/xxxx/resourceGroups/xxxx"],
    "permissions": [
      {
        "actions": [],
        "notActions": [],
        "dataActions": [
          "Microsoft.ContainerService/managedClusters/configmaps/read",
          "Microsoft.ContainerService/managedClusters/configmaps/write",
          "Microsoft.ContainerService/managedClusters/configmaps/delete",
          "Microsoft.ContainerService/managedClusters/secrets/read",
          "Microsoft.ContainerService/managedClusters/secrets/write",
          "Microsoft.ContainerService/managedClusters/secrets/delete",
          "Microsoft.ContainerService/managedClusters/apps/deployments/read",
          "Microsoft.ContainerService/managedClusters/apps/deployments/write",
          "Microsoft.ContainerService/managedClusters/apps/deployments/delete",
          "Microsoft.ContainerService/managedClusters/events/read",
          "Microsoft.ContainerService/managedClusters/events/write",
          "Microsoft.ContainerService/managedClusters/events/delete",
          "Microsoft.ContainerService/managedClusters/namespaces/read",
          "Microsoft.ContainerService/managedClusters/nodes/read",
          "Microsoft.ContainerService/managedClusters/pods/read",
          "Microsoft.ContainerService/managedClusters/pods/write",
          "Microsoft.ContainerService/managedClusters/pods/delete",
          "Microsoft.ContainerService/managedClusters/services/read",
          "Microsoft.ContainerService/managedClusters/services/write",
          "Microsoft.ContainerService/managedClusters/services/delete",
          "Microsoft.ContainerService/managedClusters/apps/replicasets/read",
          "Microsoft.ContainerService/managedClusters/apps/replicasets/write",
          "Microsoft.ContainerService/managedClusters/apps/replicasets/delete"
        ],
        "notDataActions": []
      }
    ]
  }
}
```

Important notes

- Harness can list up to 500 images from an ACR repo. If some of your images don't appear, you might have exceeded this limit, which is imposed by the Azure API.

- If you connect to an ACR repo via the platform-agnostic Docker Connector, the limit is 100.

Nexus

Use Nexus artifacts

You connect to Nexus using a Harness Nexus Connector. For details on all the requirements for the Nexus Connector, see Nexus Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Pipeline Studio

Nexus connector YAML

```yaml
connector:
  name: Harness Nexus
  identifier: Harness_Nexus
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: HttpHelmRepo
  spec:
    helmRepoUrl: https://nexus3.dev.harness.io/repository/test-helm/
    auth:
      type: UsernamePassword
      spec:
        username: harnessadmin
        passwordRef: nexus3pwd
    delegateSelectors:
      - gcpdocplay
```

Service using Nexus artifact YAML

```yaml
service:
  name: Nexus Example
  identifier: Nexus_Example
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: account.Harness_Nexus
                repository: todolist
                repositoryFormat: docker
                tag: "4.0"
                spec:
                  artifactPath: nginx
                  repositoryPort: "6661"
              identifier: myapp
              type: Nexus3Registry
    type: Kubernetes
```

Create the Nexus connector using the Create a Connector API.

Nexus connector example

```shell
curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: Harness Nexus
  identifier: Harness_Nexus
  description: ""
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: HttpHelmRepo
  spec:
    helmRepoUrl: https://nexus3.dev.harness.io/repository/test-helm/
    auth:
      type: UsernamePassword
      spec:
        username: harnessadmin
        passwordRef: nexus3pwd
    delegateSelectors:
      - gcpdocplay'
```

Use the Create Services API to create a service with an artifact source that uses the connector.

For the Terraform Provider Nexus connector resource, go to harness_platform_connector_nexus.

Nexus connector example

```hcl
# Credentials username password
resource "harness_platform_connector_nexus" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  url                = "https://nexus.example.com"
  delegate_selectors = ["harness-delegate"]
  version            = "3.x"

  credentials {
    username     = "admin"
    password_ref = "account.secret_id"
  }
}

# Credentials anonymous
resource "harness_platform_connector_nexus" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  url                = "https://nexus.example.com"
  version            = "version"
  delegate_selectors = ["harness-delegate"]
}
```

For the Terraform Provider service resource, go to harness_platform_service.

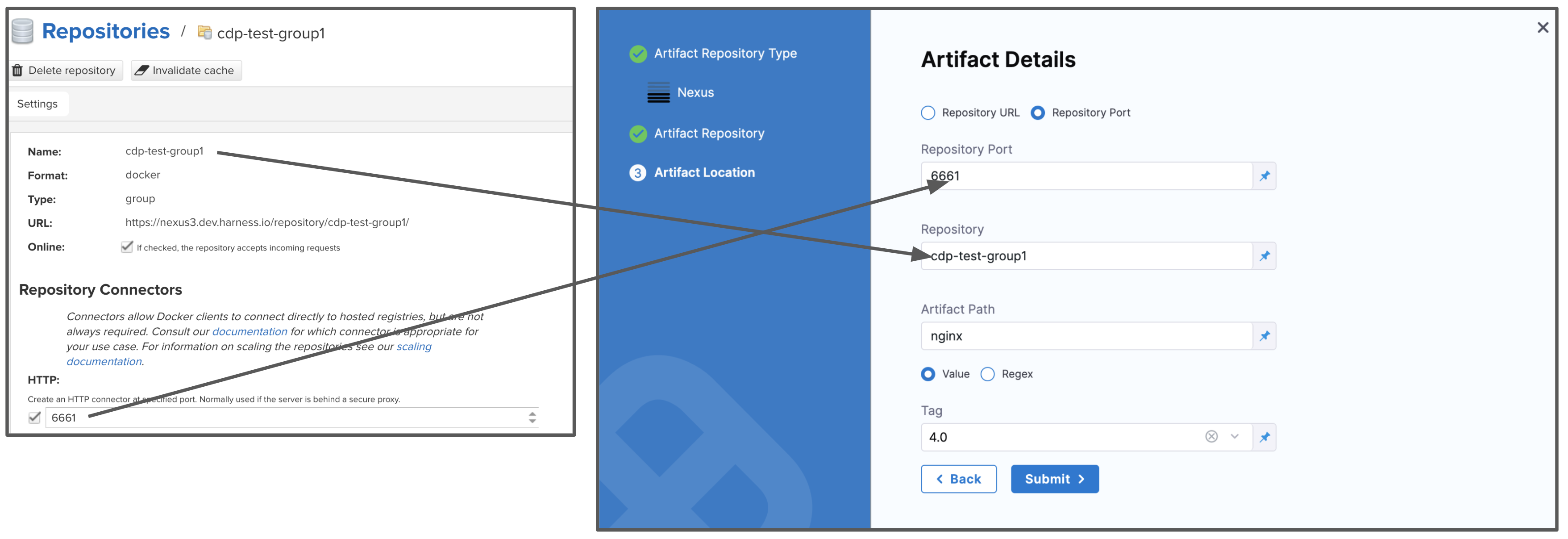


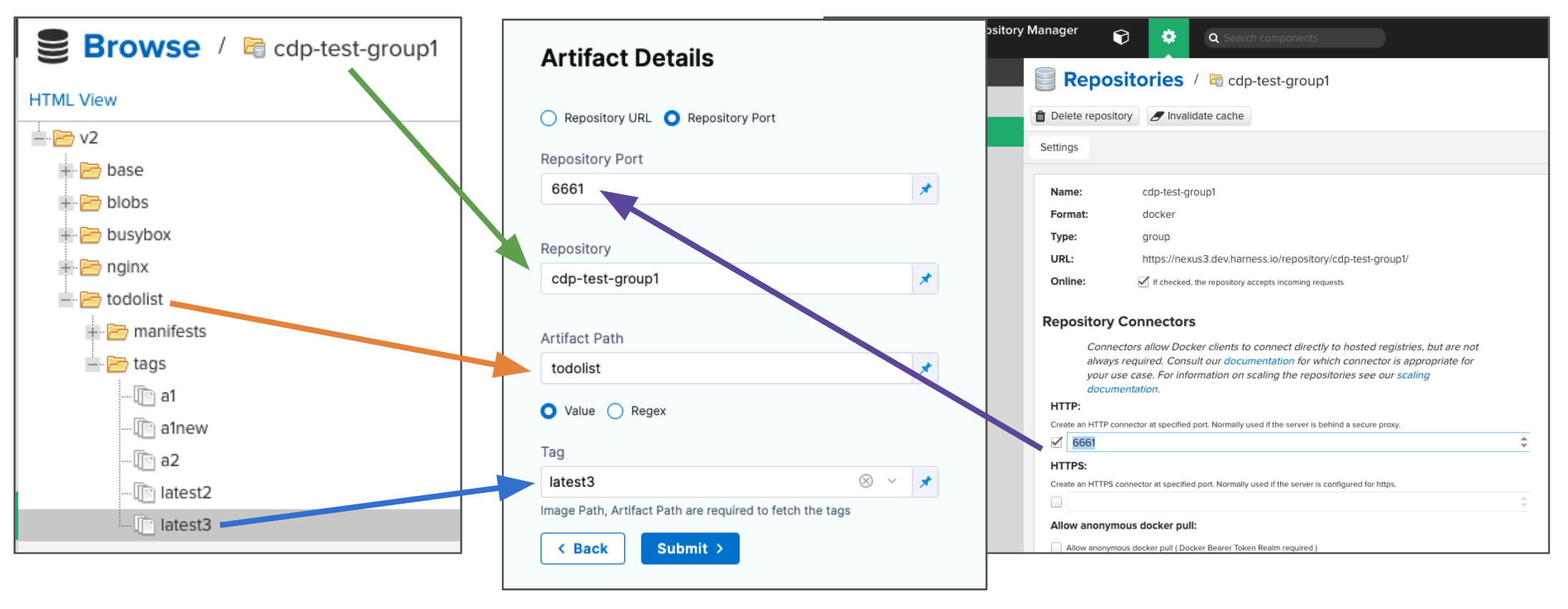

To add an artifact from Nexus, do the following:

- In your project, in CD (Deployments), select Services.

- Select Manage Services, and then select New Service.

- Enter a name for the service and select Save.

- Select Configuration.

- In Service Definition, select Kubernetes.

- In Artifacts, select Add Artifact Source.

- In Artifact Repository Type, select Nexus, and then select Continue.

- In Nexus Repository, select or create a Nexus Connector that connects to the Nexus account where the repo is located.

- Select Continue.

- Select Repository URL or Repository Port.

  - Repository Port is more commonly used and can be taken from the repo settings. Each repo uses its own port.

  - Repository URL is typically used for a custom infrastructure (for example, when Nexus is hosted behind a reverse proxy).

- In Repository, enter the name of the repo.

- In Artifact Path, enter the path to the artifact you want.

- In Tag, enter or select the Docker image tag for the image.

If you use runtime input, when you deploy the pipeline, Harness pulls the list of tags from the repo and prompts you to select one.

- Select Submit.

The artifact is added to the Service Definition.
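The Repository Port and Repository URL options describe two ways of addressing the same Docker image in Nexus 3. The following POSIX shell sketch shows the image reference each option typically resolves to; it is an illustration under stated assumptions, not Harness's internal logic. The function name nexus_docker_image_ref is hypothetical, and nexus-host (the host name of your Nexus server) is an assumption you supply because it is not a field in the artifact source.

```shell
#!/bin/sh
# Hypothetical helper: build the Docker image reference for a Nexus 3 Docker repo.
#   port mode: <nexus-host>:<repositoryPort>/<artifactPath>:<tag>
#   url mode:  <repositoryUrl>/<artifactPath>:<tag>
nexus_docker_image_ref() (
  mode="$1"
  case "$mode" in
    port)
      # args: port NEXUS_HOST REPOSITORY_PORT ARTIFACT_PATH TAG
      [ "$#" -eq 5 ] || { echo "usage: port HOST PORT PATH TAG" >&2; exit 1; }
      printf '%s:%s/%s:%s\n' "$2" "$3" "$4" "$5" ;;
    url)
      # args: url REPOSITORY_URL ARTIFACT_PATH TAG
      [ "$#" -eq 4 ] || { echo "usage: url REPOSITORY_URL PATH TAG" >&2; exit 1; }
      # Docker references use a bare host, so drop any scheme and trailing slash.
      repo_url="${2#https://}"
      repo_url="${repo_url#http://}"
      repo_url="${repo_url%/}"
      printf '%s/%s:%s\n' "$repo_url" "$3" "$4" ;;
    *)
      echo "error: mode must be 'port' or 'url'" >&2
      exit 1 ;;
  esac
)

# Usage example with a placeholder host and the values from the service YAML.
nexus_docker_image_ref port nexus.example.com 6661 nginx 4.0
# prints nexus.example.com:6661/nginx:4.0
```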

Permissions

Ensure the connected user account has the following permissions in the Nexus Server.

- Repo: All repositories (Read)

- Nexus UI: Repository Browser

For Nexus 3, when used as a Docker repo, the user needs:

- A role with the nx-repository-view-*_*_* privilege.

Artifactory

Use Artifactory artifacts

You connect to Artifactory (JFrog) using a Harness Artifactory Connector. For details on all the requirements for the Artifactory Connector, see Artifactory Connector Settings Reference.

- YAML

- API

- Terraform Provider

- Pipeline Studio

Artifactory connector YAML

```yaml
connector:
  name: artifactory-tutorial-connector
  identifier: artifactorytutorialconnector
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Artifactory
  spec:
    artifactoryServerUrl: https://harness.jfrog.io/artifactory/
    auth:
      type: Anonymous
    executeOnDelegate: false
```

Service using Artifactory artifact YAML

service:
  name: Artifactory Example
  identifier: Artifactory_Example
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: artifactorytutorialconnector
                artifactPath: alpine
                tag: 3.14.2
                repository: bintray-docker-remote
                repositoryUrl: harness-docker.jfrog.io
                repositoryFormat: docker
              identifier: myapp
              type: ArtifactoryRegistry
    type: Kubernetes
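To see how the artifact source fields relate to each other, the sketch below mirrors the artifact source from the service YAML above as a Python dict. The helper image_reference is hypothetical and assumes the image is addressed as repositoryUrl/artifactPath:tag, following Docker's host/path:tag convention; the exact value Harness resolves at deployment time may differ.

```python
# Sketch: the Artifactory artifact source from the service YAML, as a dict,
# plus a hypothetical helper that derives a Docker image reference from it.
ARTIFACT_SOURCE = {
    "identifier": "myapp",
    "type": "ArtifactoryRegistry",
    "spec": {
        "connectorRef": "artifactorytutorialconnector",
        "artifactPath": "alpine",
        "tag": "3.14.2",
        "repository": "bintray-docker-remote",
        "repositoryUrl": "harness-docker.jfrog.io",
        "repositoryFormat": "docker",
    },
}


def image_reference(source: dict) -> str:
    """Combine repositoryUrl, artifactPath, and tag as host/path:tag."""
    spec = source["spec"]
    if spec.get("repositoryFormat") != "docker":
        raise ValueError("only docker-format sources map to an image reference")
    return f"{spec['repositoryUrl'].rstrip('/')}/{spec['artifactPath']}:{spec['tag']}"
```

With the values above, image_reference(ARTIFACT_SOURCE) returns "harness-docker.jfrog.io/alpine:3.14.2". Note that the repository field (bintray-docker-remote) does not appear in the reference because the repositoryUrl already identifies the repo.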

Create the Artifactory connector using the Create a Connector API.

Artifactory connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: artifactory-tutorial-connector
  identifier: artifactorytutorialconnector
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Artifactory
  spec:
    artifactoryServerUrl: https://harness.jfrog.io/artifactory/
    auth:
      type: Anonymous
    executeOnDelegate: false'
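If you script against the API from Python instead of the shell, the same request can be built with the standard library. This is a minimal sketch that reuses the placeholder account ID and API key from the curl command; the function name is hypothetical, and the request is only built, not sent.

```python
# Sketch: the Create a Connector call from the curl example, built with urllib.
import urllib.request

CONNECTOR_YAML = """connector:
  name: artifactory-tutorial-connector
  identifier: artifactorytutorialconnector
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Artifactory
  spec:
    artifactoryServerUrl: https://harness.jfrog.io/artifactory/
    auth:
      type: Anonymous
    executeOnDelegate: false
"""


def build_create_connector_request(account_id: str, api_key: str, yaml_body: str) -> urllib.request.Request:
    """Build (but do not send) the POST request with a YAML body."""
    return urllib.request.Request(
        f"https://app.harness.io/gateway/ng/api/connectors?accountIdentifier={account_id}",
        data=yaml_body.encode("utf-8"),
        headers={"Content-Type": "text/yaml", "x-api-key": api_key},
        method="POST",
    )
```

To send it, pass the result to urllib.request.urlopen. Keeping the request construction separate from sending makes it easy to inspect the URL and headers before calling the live API.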

Create a service with an artifact source that uses the connector using the Create Services API.

For the Terraform Provider Artifactory connector resource, go to harness_platform_connector_artifactory.

Artifactory connector example

# Authentication mechanism as username and password
resource "harness_platform_connector_artifactory" "example" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  org_id             = harness_platform_project.test.org_id
  project_id         = harness_platform_project.test.id
  url                = "https://artifactory.example.com"
  delegate_selectors = ["harness-delegate"]

  credentials {
    username     = "admin"
    password_ref = "account.secret_id"
  }
}

# Authentication mechanism as anonymous
resource "harness_platform_connector_artifactory" "test" {
  identifier         = "identifier"
  name               = "name"
  description        = "test"
  tags               = ["foo:bar"]
  org_id             = harness_platform_project.test.org_id
  project_id         = harness_platform_project.test.id
  url                = "https://artifactory.example.com"
  delegate_selectors = ["harness-delegate"]
}

For the Terraform Provider service resource, go to harness_platform_service.


To add an artifact from Artifactory, do the following:

-

In your project, in CD (Deployments), select Services.

-

Select Manage Services, and then select New Service.

-

Enter a name for the service and select Save.

-

Select Configuration.

-

In Service Definition, select Kubernetes.

-

In Artifacts, select Add Artifact Source.

-

In Artifact Repository Type, select Artifactory, and then select Continue.

-

In Artifactory Repository, select of create an Artifactory Connector that connects to the Artifactory account where the repo is located. Click Continue.

-

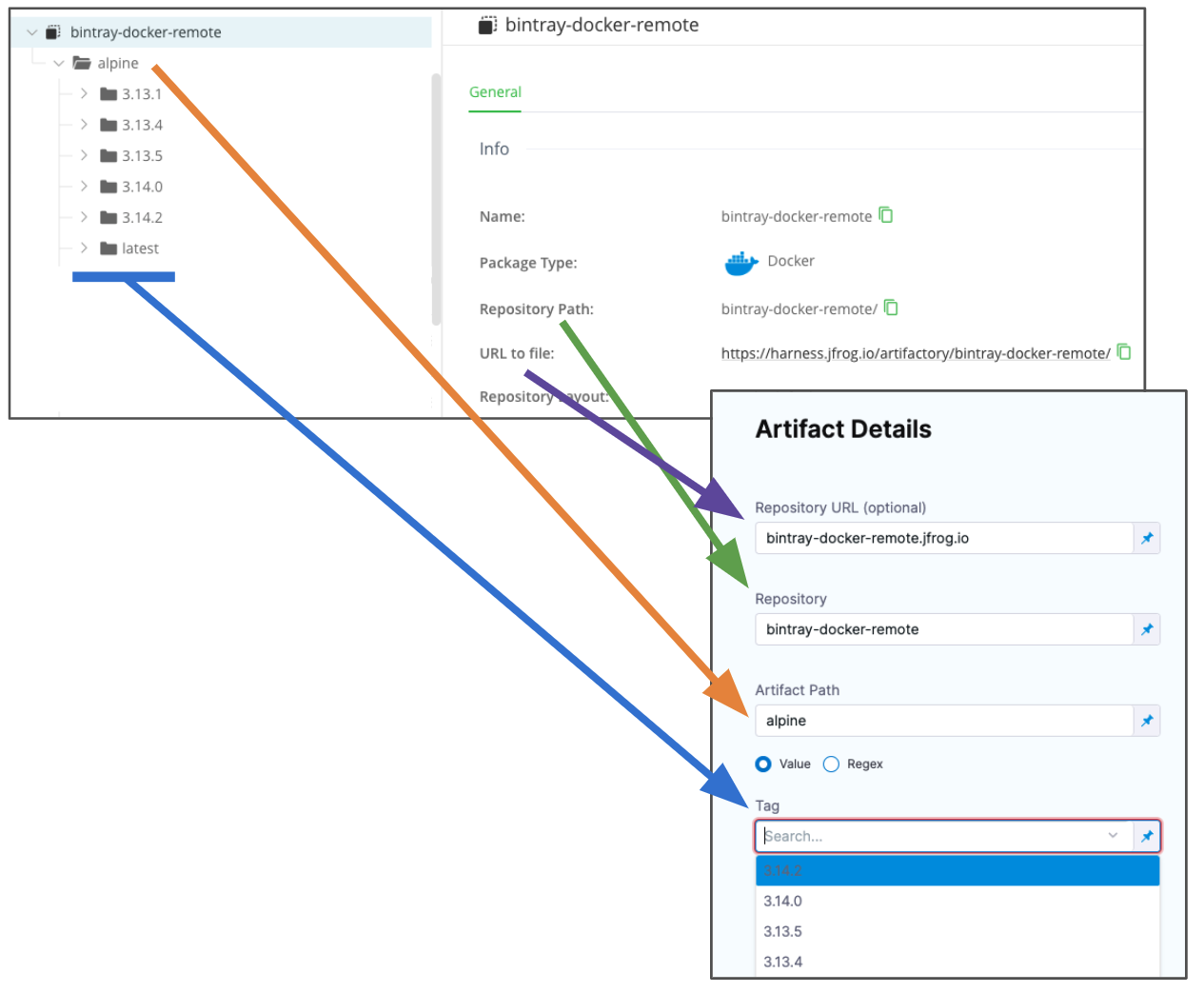

The Artifact Details settings appear.

-

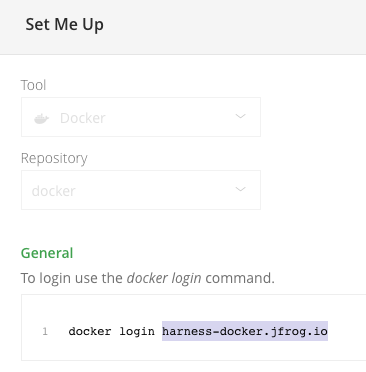

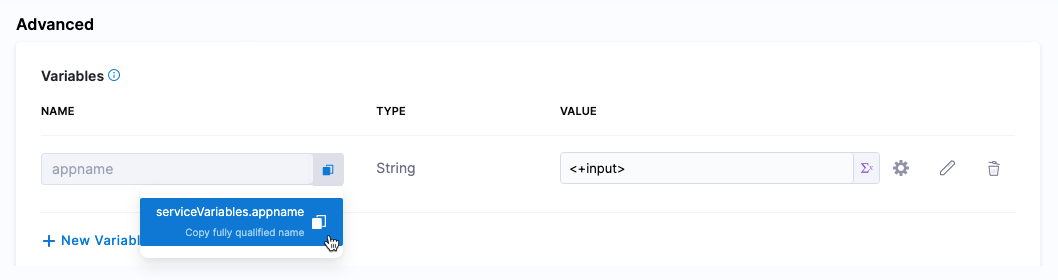

In Repository URL, enter the URL from the

docker logincommand in Artifactory's Set Me Up settings.

-

In Repository, enter the repo name. If the full path is

docker-remote/library/mongo/3.6.2, you would enterdocker-remote. -

In Artifact Path, enter the path to the artifact. If the full path is

docker-remote/library/mongo/3.6.2, you would enterlibrary/mongo. -

In Tag, enter or select the Docker image tag for the image.

-

If you use runtime input, when you deploy the pipeline, Harness will pull the list of tags from the repo and prompt you to select one.

-

Select Submit. The Artifact is added to the Service Definition.
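The Repository and Artifact Path fields split a full Artifactory Docker path at fixed points: the first segment is the repo, the last is the tag, and everything in between is the image path. The hypothetical helper below is a minimal sketch of that rule using the docker-remote/library/mongo/3.6.2 example; it assumes the path always ends with a tag.

```python
# Hypothetical helper: splits a full Artifactory Docker path into the values the
# Repository, Artifact Path, and Tag fields expect.
from typing import NamedTuple


class ArtifactoryDockerFields(NamedTuple):
    repository: str
    artifact_path: str
    tag: str


def split_docker_path(full_path: str) -> ArtifactoryDockerFields:
    """Split '<repository>/<artifact path>/<tag>' into its three parts."""
    parts = full_path.strip("/").split("/")
    if len(parts) < 3:
        raise ValueError("expected <repository>/<artifact path>/<tag>")
    # First segment is the repo, last is the tag, the middle is the image path.
    return ArtifactoryDockerFields(parts[0], "/".join(parts[1:-1]), parts[-1])
```

For example, split_docker_path("docker-remote/library/mongo/3.6.2") returns ArtifactoryDockerFields(repository='docker-remote', artifact_path='library/mongo', tag='3.6.2').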

Permissions

Make sure the following permissions are granted to the user:

- Privileged User is required to access the API, whether you use anonymous access or a specific username (a username and password are not mandatory).

- Read permission for all repositories.

If Artifactory is used as a Docker repo, the user needs permission to:

- List images and tags

- Pull images
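The List images and tags permission corresponds to Artifactory's Docker REST endpoints. The sketch below only builds the URLs for JFrog's List Docker Repositories and List Docker Tags calls, assuming the base URL includes the /artifactory context path as in the connector example; the repo key, image, and helper names are illustrative.

```python
# Sketch: URLs for the Artifactory REST calls behind listing Docker images and tags.
from urllib.parse import quote


def list_images_url(base_url: str, repo_key: str) -> str:
    """List Docker Repositories: <base>/api/docker/<repo>/v2/_catalog."""
    return f"{base_url.rstrip('/')}/api/docker/{quote(repo_key)}/v2/_catalog"


def list_tags_url(base_url: str, repo_key: str, image: str) -> str:
    """List Docker Tags: <base>/api/docker/<repo>/v2/<image>/tags/list."""
    # Keep '/' so multi-segment image names such as library/mongo stay intact.
    return (
        f"{base_url.rstrip('/')}/api/docker/{quote(repo_key)}"
        f"/v2/{quote(image, safe='/')}/tags/list"
    )
```

Calling these URLs with the connector's credentials is a quick way to confirm that the user can list images and tags before you configure the artifact source.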

GitHub Packages

Use GitHub Packages as artifacts

You can use GitHub Packages as artifacts for deployments.

Currently, Harness supports only the container (Docker) package type, set as packageType: container. Support for npm, Maven, RubyGems, and NuGet is coming soon.

You connect to GitHub using a Harness GitHub Connector, a username, and a Personal Access Token (PAT).

New to GitHub Packages? This quick video will get you up to speed in minutes.
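Container packages in GitHub Packages are stored in the GitHub Container Registry (ghcr.io). As a sketch, assuming the standard ghcr.io/OWNER/PACKAGE:TAG naming, a package named tweetapp owned by johndoe would be addressed as shown below. The helper is hypothetical and the names are illustrative.

```python
# Hypothetical helper: builds the GitHub Container Registry reference for a
# container package.
def ghcr_image_ref(owner: str, package_name: str, version: str = "latest") -> str:
    """Return a reference such as 'ghcr.io/johndoe/tweetapp:latest'."""
    # Docker repository names must be lowercase, so normalize owner and package.
    return f"ghcr.io/{owner.lower()}/{package_name.lower()}:{version}"
```

For example, ghcr_image_ref("JohnDoe", "tweetapp") returns "ghcr.io/johndoe/tweetapp:latest".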

- YAML

- API

- Terraform Provider

- Pipeline Studio

GitHub Packages connector YAML

connector:
  name: GitHub Packages
  identifier: GitHub_Packages
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Github
  spec:
    url: https://github.com/johndoe/myapp.git
    validationRepo: https://github.com/johndoe/test.git
    authentication:
      type: Http
      spec:
        type: UsernameToken
        spec:
          username: johndoe
          tokenRef: githubpackages
    apiAccess:
      type: Token
      spec:
        tokenRef: githubpackages
    delegateSelectors:
      - gcpdocplay
    executeOnDelegate: true
    type: Repo

Service using GitHub Packages artifact YAML

service:
  name: Github Packages
  identifier: Github_Packages
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: myapp
            type: K8sManifest
            spec:
              store:
                type: Harness
                spec:
                  files:
                    - /Templates
              valuesPaths:
                - /values.yaml
              skipResourceVersioning: false
              enableDeclarativeRollback: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - identifier: myapp
              spec:
                connectorRef: GitHub_Packages
                org: ""
                packageName: tweetapp
                packageType: container
                version: latest
              type: GithubPackageRegistry
    type: Kubernetes
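Harness pulls the available versions of the package through the GitHub API using the connector's token. The sketch below builds a comparable GitHub REST API request for listing container package versions. The helper name and token are hypothetical, and treating an empty org (as in org: "" above) as a package owned by the token's user is an assumption of this sketch, not documented Harness behavior.

```python
# Sketch: GitHub REST API request that lists versions of a container package.
import urllib.request


def build_versions_request(package_name: str, token: str, org: str = "") -> urllib.request.Request:
    """Build (but do not send) GET .../packages/container/<name>/versions."""
    # Assumption: an empty org means the package belongs to the authenticated user.
    owner = f"orgs/{org}" if org else "user"
    return urllib.request.Request(
        f"https://api.github.com/{owner}/packages/container/{package_name}/versions",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
        method="GET",
    )
```

Sending this request with urllib.request.urlopen and the same PAT used in the connector is one way to check that the token can read the package before you run the pipeline.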

Create the GitHub connector using the Create a Connector API.

GitHub connector example

curl --location --request POST 'https://app.harness.io/gateway/ng/api/connectors?accountIdentifier=12345' \
  --header 'Content-Type: text/yaml' \
  --header 'x-api-key: pat.12345.6789' \
  --data-raw 'connector:
  name: GitHub Packages
  identifier: GitHub_Packages
  orgIdentifier: default
  projectIdentifier: CD_Docs
  type: Github
  spec:
    url: https://github.com/johndoe/myapp.git