Deploy Native Helm using Harness

This topic shows you how to perform Native Helm deployments using Harness.

Commands used by Harness to perform a Helm Chart Deployment managed by Helm

When using the Native Helm (Helm-managed) deployment approach, Harness uses a mix of helm and kubectl commands to perform the deployment.

- The command Harness runs to fetch files depends on the store type, Git or Helm Repo.

For Git:

```bash
git clone {{YOUR GIT REPO}}
```

For Helm Repo:

```bash
helm pull {{YOUR HELM REPO}}
```

- Harness then renders the chart with helm template, using your values.yaml and Harness-configured variables, and consolidates all of the rendered manifests into a manifest.yaml file.

```bash
helm template release-75d461a29efd32e5d22b01dc0f93aa5275e2f003 /opt/harness-delegate/repository/helm/source/c1475174-18d6-38e6-8c67-1000f3b71297/helm-test-chart --namespace default -f ./repository/helm/overrides/6a7628964506885eb37908b81914a04c.yaml
```

- By default, Harness performs a dry run to show what is about to be applied.

```bash
kubectl --kubeconfig=config apply --filename=manifests-dry-run.yaml --dry-run=client
```

- Harness then runs the helm install or helm upgrade command to install the chart on the Kubernetes cluster.

```bash
helm upgrade release-75d461a29efd32e5d22b01dc0f93aa5275e2f003 /opt/harness-delegate/repository/helm/source/c1475174-18d6-38e6-8c67-1000f3b71297/helm-test-chart -f ./repository/helm/overrides/6a7628964506885eb37908b81914a04c.yaml
```

- Harness then queries the deployed resources to show a summary of what was deployed.

```bash
helm get manifest release-75d461a29efd32e5d22b01dc0f93aa5275e2f003 --namespace=default

helm list --filter ^release-75d461a29efd32e5d22b01dc0f93aa5275e2f003$
```

- If the deployment fails, Harness rolls back by running helm rollback.

```bash
helm rollback release-1d0bcdea6247c9f82cc9204b1d81593e7b985651
```
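To see the whole sequence in one place, here is a minimal bash sketch that prints (rather than runs) an approximation of these commands for one release. It is not Harness's implementation: the function name print_native_helm_sequence is hypothetical, helm upgrade is shown where Harness may run helm install, --namespace is added to the upgrade for clarity, and for simplicity the dry run reuses manifest.yaml instead of the separate manifests-dry-run.yaml file seen in Harness logs.

```bash
#!/usr/bin/env bash
# print_native_helm_sequence RELEASE CHART_DIR NAMESPACE OVERRIDES_FILE
# Hypothetical helper: echoes (does not run) an approximation of the commands
# used for a Native Helm deployment, in order.
print_native_helm_sequence() {
  local release="$1" chart_dir="$2" namespace="$3" overrides="$4"

  # 1. Render the chart with your values into one manifest file.
  echo "helm template $release $chart_dir --namespace $namespace -f $overrides > manifest.yaml"
  # 2. Client-side dry run of the rendered manifests.
  echo "kubectl --kubeconfig=config apply --filename=manifest.yaml --dry-run=client"
  # 3. Install or upgrade the release (upgrade shown here).
  echo "helm upgrade $release $chart_dir --namespace $namespace -f $overrides"
  # 4. Summarize what was deployed.
  echo "helm get manifest $release --namespace=$namespace"
  echo "helm list --filter '^${release}\$'"
  # 5. Only if the deployment fails.
  echo "helm rollback $release"
}
```

For example, print_native_helm_sequence release-abc ./helm-test-chart default ./overrides.yaml prints six commands that you can compare against the delegate logs of a real run.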

Helm 2 in Native Helm

Helm 2 was deprecated by the Helm community in November 2020 and is no longer supported by Helm. If you continue to maintain the Helm 2 binary on your delegate, it might introduce high and critical vulnerabilities and put your infrastructure at risk.

To safeguard your operations and protect against potential security vulnerabilities, Harness deprecated Helm 2 and removed its binary from delegates with an immutable image type (image tag yy.mm.xxxxx). For information on delegate types, go to Delegate image types.

If your delegate is set to auto-upgrade, Harness will automatically remove the binary from your delegate. This will result in pipeline and workflow failures for services deployed via Helm 2.

If your development team still uses Helm 2, you can reintroduce the binary on the delegate. Harness is not responsible for any vulnerabilities or risks that might result from reintroducing the Helm 2 binary.

For more information about updating your delegates to reintroduce Helm 2, go to:

Contact Harness Support if you have any questions.

Deployment requirements

A Native Helm deployment requires the following:

- Helm chart.

- Kubernetes cluster.

- A Kubernetes service account with permission to create entities in the target namespace. The permissions should include list, get, create, and delete. In general, the cluster-admin permission or namespace admin permission is enough.

For more information, go to User-Facing Roles from Kubernetes.
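Before the first deployment, you can confirm these permissions with kubectl auth can-i. The following is a minimal bash sketch; the function name check_helm_permissions and the default list of resource types are assumptions for illustration, not a Harness requirement, and KUBECTL can be overridden (for example, with a stub).

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: asks the API server whether the current
# credentials can list, get, create, and delete the given resource types.
KUBECTL="${KUBECTL:-kubectl}"

# check_helm_permissions NAMESPACE [RESOURCE...]
# Prints one line per missing permission; returns 1 if any are missing.
check_helm_permissions() {
  local namespace="$1"; shift
  local resources=("$@")
  # Default resource types to check (an assumption; adjust for your chart).
  if [ "$#" -eq 0 ]; then
    resources=(deployments services configmaps secrets)
  fi

  local missing=0 verb resource
  for resource in "${resources[@]}"; do
    for verb in list get create delete; do
      # can-i exits 0 when allowed and non-zero when denied.
      if ! "$KUBECTL" auth can-i "$verb" "$resource" --namespace "$namespace" --quiet >/dev/null 2>&1; then
        echo "missing: $verb $resource in $namespace"
        missing=1
      fi
    done
  done
  return "$missing"
}

# Example: check_helm_permissions default
```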

Native Helm deploy stage

The following steps take you through a typical Native Helm pipeline stage setup to demonstrate the Harness settings involved.

If you don't already have a Harness Project, create one for your new CD Pipeline, and ensure that you add the Continuous Delivery module to it. For steps, go to Create Organizations and Projects.

- In your Harness Project, select Deployments, and then select Create a Pipeline.

- Enter a name like Native Helm Example and select Start.

- Your Pipeline appears.

- Select Add Stage and select Deploy.

- Enter a name like quickstart.

- In Deployment Type, select Native Helm, and then select Set Up Stage.

- In Select Service, select Add Service.

- Give the service a name.

Once you have created a Service, it is persistent and can be used throughout the stages of this or any other Pipeline in the Project.

Next, we'll add the NGINX Helm chart for the deployment.

Harness supports deployment strategies such as Blue-Green and Canary for Native Helm. For more information, go to the Helm Step Reference.

Add a Helm chart to a Native Helm service

To add a Helm chart in this example, we will add a Harness connector to the HTTP server hosting the chart. This connector uses a Harness Delegate to verify credentials and pull charts, so ensure that you also have a Harness Delegate installed. For steps on installing a delegate, go to Delegate installation overview.

-

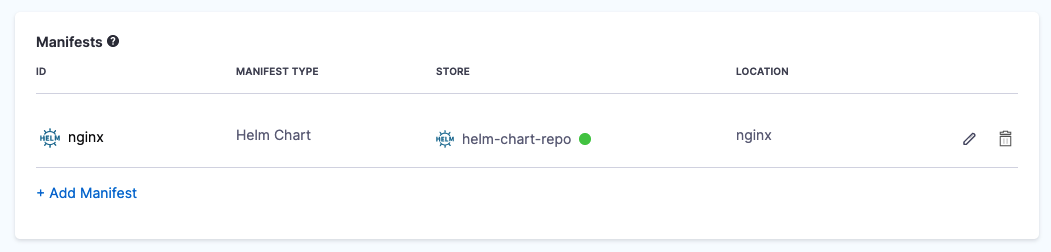

In the Harness service for your Native Helm deployment, in Manifests, select Add Manifest.

You can select a Helm Values YAML file or a Helm chart. For this example, we'll use a publicly available Helm chart. The process isn't very different between these options. For Values YAML, you simply provide the Git branch and path to the Values YAML file.

-

Select Helm Chart, and then select Continue.

-

In Specify Helm Chart Store, select HTTP Helm. In this example, we're pulling a Helm chart for NGINX from the Bitnami repo at

https://charts.bitnami.com/bitnami. You don't need any credentials for pulling this public chart. -

Select New HTTP Helm Repo Connector.

-

In the HTTP Helm Repo Connector, enter a name and select Continue.

-

In Helm Repository URL, enter

https://charts.bitnami.com/bitnami. -

In Authentication, select Anonymous.

-

Select Continue.

-

In Delegates Setup, select/create a delegate, and then select Save and Continue.

For steps on installing a delegate, go to Delegate installation overview.

When you are done, the Connector is tested. If it fails, your Delegate might not be able to connect to

https://charts.bitnami.com/bitnami. Review its network connectivity and ensure it can connect.

If you are using Helm v2, you will need to install Helm v2 and Tiller on the delegate pod. For steps on installing software on the delegate, go to Build custom delegate images with third-party tools. -

In Manifest Details, enter the following settings can select Submit.

- Manifest Identifier: enter nginx.

- Chart Name: enter nginx.

- Chart Version: enter 8.8.1.

- Helm Version: select Version 3.

The Helm chart is added to the Service Definition.
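If the connector test fails, one way to narrow down the problem is to fetch the repository index and pull the chart manually from the delegate pod. This is a minimal bash sketch under stated assumptions: the function names and the local repository alias quickstart-repo are hypothetical, and HELM and CURL can be overridden (for example, with stubs).

```bash
#!/usr/bin/env bash
# Hypothetical connectivity check for an HTTP Helm repository, to run from
# the delegate pod.
HELM="${HELM:-helm}"
CURL="${CURL:-curl}"

# index_url REPO_URL: location of the repository's index.yaml.
index_url() {
  printf '%s/index.yaml\n' "${1%/}"   # strip one trailing slash, then append
}

# check_chart_repo REPO_URL CHART VERSION
check_chart_repo() {
  local repo_url="$1" chart="$2" version="$3"
  if ! "$CURL" -fsS -o /dev/null "$(index_url "$repo_url")"; then
    echo "cannot reach $(index_url "$repo_url")" >&2
    return 1
  fi
  # quickstart-repo is a hypothetical local alias for the repository.
  "$HELM" repo add quickstart-repo "$repo_url" --force-update || return 1
  "$HELM" pull "quickstart-repo/$chart" --version "$version"
}

# Example: check_chart_repo https://charts.bitnami.com/bitnami nginx 8.8.1
```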

Next, you can target your Kubernetes cluster for deployment.

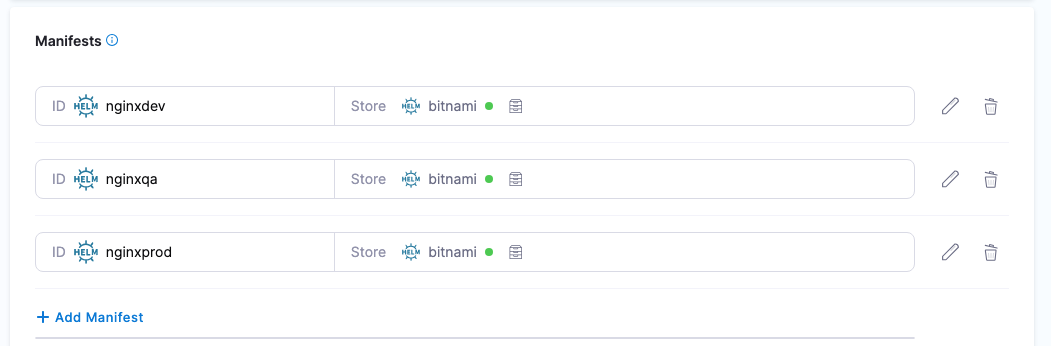

Using multiple Helm charts in a single Harness service

For Kubernetes Helm and Native Helm deployment types, you can add multiple Helm charts to a Harness service.

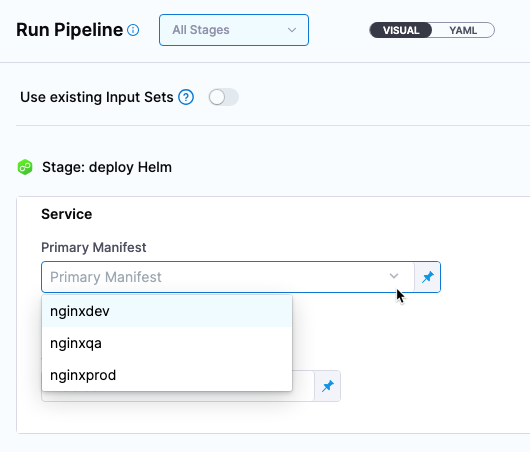

When you run a Harness pipeline that deploys the service, you can select one of the Helm charts to deploy.

By using multiple Helm charts, you can deploy the same artifact with different manifests at pipeline runtime.

Video summary of using multiple manifests

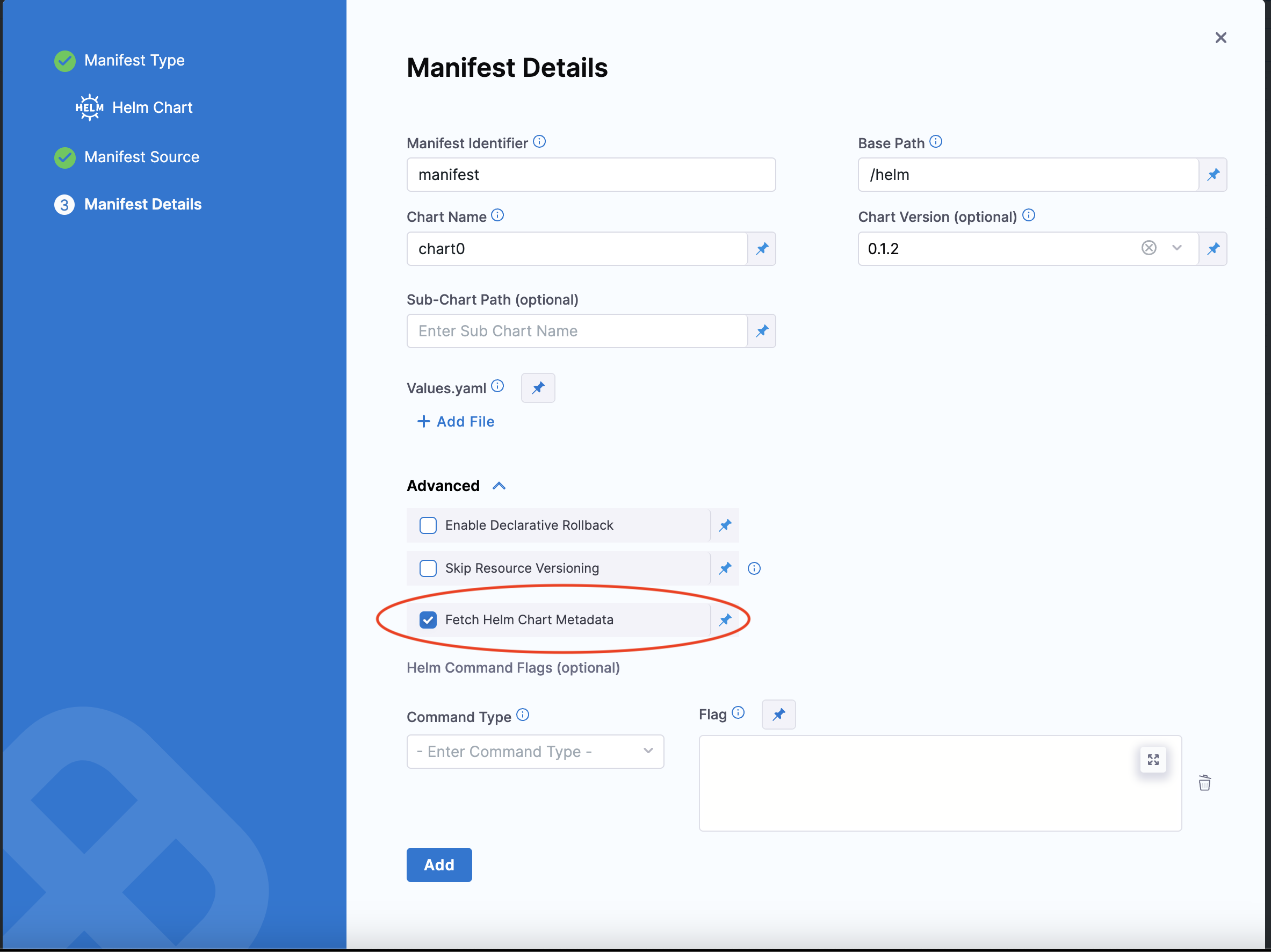

Helm chart expressions

For Kubernetes Helm and Native Helm deployments, you can use the following built-in expressions in your pipeline stage steps to reference chart details.

| Expression | Description |
|---|---|
| <+manifests.MANIFEST_ID.helm.name> | Helm chart name. |
| <+manifests.MANIFEST_ID.helm.description> | Helm chart description. |
| <+manifests.MANIFEST_ID.helm.version> | Helm chart version. |
| <+manifests.MANIFEST_ID.helm.apiVersion> | Chart.yaml API version. |
| <+manifests.MANIFEST_ID.helm.appVersion> | The app version. |
| <+manifests.MANIFEST_ID.helm.kubeVersion> | Kubernetes version constraint. |
| <+manifests.MANIFEST_ID.helm.metadata.url> | Helm chart repository URL. |
| <+manifests.MANIFEST_ID.helm.metadata.basePath> | Helm chart base path. Available only for OCI, GCS, and S3. |
| <+manifests.MANIFEST_ID.helm.metadata.bucketName> | Helm chart bucket name. Available only for GCS and S3. |
| <+manifests.MANIFEST_ID.helm.metadata.commitId> | Store commit ID. Available only when the manifest is stored in a Git repo and Harness is configured to use the latest commit. |
| <+manifests.MANIFEST_ID.helm.metadata.branch> | Store branch name. Available only when the manifest is stored in a Git repo and Harness is configured to use a branch. |

To enable these expressions, select Fetch Helm Chart Metadata in the Advanced section of the Manifest Details page.

The MANIFEST_ID is located in service.serviceDefinition.spec.manifests.manifest.identifier in the Harness service YAML. In the following example, it is nginx:

```yaml
service:
  name: Helm Chart
  identifier: Helm_Chart
  tags: {}
  serviceDefinition:
    spec:
      manifests:
        - manifest:
            identifier: nginx
            type: HelmChart
            spec:
              store:
                type: Http
                spec:
                  connectorRef: Bitnami
              chartName: nginx
              helmVersion: V3
              skipResourceVersioning: false
              fetchHelmChartMetadata: true
              commandFlags:
                - commandType: Template
                  flag: mychart -x templates/deployment.yaml
    type: Kubernetes
```

The manifest ID can also be fetched using the expression <+manifestConfig.primaryManifestId>. This expression is supported in configurations with multiple Helm chart manifests.
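As an illustration of using these expressions, a Shell Script step that runs after the deployment could check the resolved chart metadata. Harness resolves <+...> expressions before the script runs. In this sketch, the manifest ID nginx comes from the YAML above, while the function name require_chart_version and the expected version are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical Shell Script step body. Harness replaces the <+...> expression
# with the resolved value before the script runs (requires Fetch Helm Chart Metadata).
CHART_VERSION="<+manifests.nginx.helm.version>"

# require_chart_version ACTUAL EXPECTED: fail the step if the versions differ.
require_chart_version() {
  local actual="$1" expected="$2"
  if [ "$actual" != "$expected" ]; then
    echo "unexpected chart version: got '$actual', want '$expected'" >&2
    return 1
  fi
  echo "chart version $actual OK"
}

# Example call (expected version taken from the Manifest Details settings above):
# require_chart_version "$CHART_VERSION" "8.8.1"
```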

Define the target Native Helm infrastructure

Defining the target cluster infrastructure definition for a Native Helm chart deployment is the same process as a typical Harness Kubernetes deployment.

For more information, go to Define Your Kubernetes Target Infrastructure.

Pre-existing and dynamically provisioned infrastructure

There are two methods of specifying the deployment target infrastructure:

- Pre-existing: the target infrastructure already exists and you simply need to provide the required settings.

- Dynamically provisioned: the target infrastructure will be dynamically provisioned on-the-fly as part of the deployment process.

For details on Harness provisioning, go to Provisioning overview.

Define your pre-existing target cluster

- In Infrastructure, in Environment, select New Environment.

- In Name, enter quickstart, select Non-Production, and select Save.

- In Infrastructure Definition, select Kubernetes.

- In Cluster Details, select Select Connector. We'll create a new Kubernetes connector to your target platform. We'll use the same Delegate you installed earlier.

- Select New Connector.

- Enter a name for the Connector and select Continue.

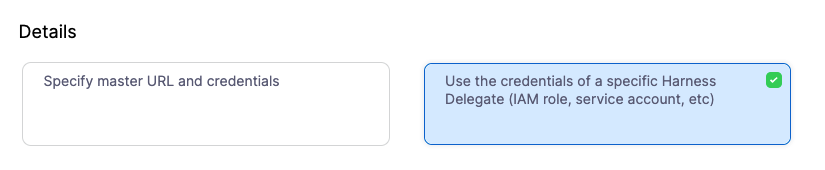

- In Details, select Use the credentials of a specific Harness Delegate, and then select Continue.

- In Set Up Delegates, select the Delegate you added earlier by entering one of its Tags.

- Select Save and Continue. The Connector is tested. Select Finish.

- Select the new Connector and select Apply Selector.

- In Namespace, enter default or the namespace you want to use in the target cluster.

- In Release Name, enter quickstart.

- Select Next. The deployment strategy options appear.

Enforcing Namespace Consistency

Helm deployments in Harness offer powerful packaging and templating capabilities, but with that flexibility comes a gap in namespace enforcement. By default, Helm allows users to deploy workloads into any namespace, regardless of what’s defined in the Infrastructure.

This can lead to security and governance issues, especially in shared clusters where namespace isolation is critical.

Helm does not perform strict validation against the namespace defined in the Infrastructure. Users can override the namespace in several ways—via chart templates, values files, or by passing the --namespace flag during Helm install or upgrade.

This feature requires delegate version 856xx or later.


To enable this setting, go to Account, Org, or Project Settings → Default Settings. Under Continuous Delivery, set Enable Namespace validation from different sources with infrastructure level namespace to True.

How it's handled

Helm doesn't inherently block namespace overrides, so Harness enforces validation during execution when this setting is enabled.

If a Helm chart tries to install resources into a namespace that doesn’t match the one defined in the Infrastructure, Harness will fail the deployment.

This includes scenarios where:

- The chart templates define a hardcoded namespace (metadata.namespace).

- The --namespace flag is passed in the install or upgrade arguments.

- Values passed into the chart result in a different namespace.

For example:

- The Infrastructure is set to deploy into secure-team-ns.

- A Helm chart includes a hardcoded namespace such as sandbox-test.

- During execution, the chart tries to install into sandbox-test.

- With namespace validation enabled, Harness detects the mismatch and blocks the deployment.

This prevents service owners or developers from bypassing namespace boundaries—ensuring that deployments stay confined to the approved environment.

Limitations

Currently, Helm Canary deployments are not supported with this namespace enforcement feature.
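Harness performs this validation itself during execution. To catch a mismatch earlier, for example in CI before a chart reaches a pipeline, you could scan the output of helm template for metadata.namespace values that differ from the infrastructure namespace. This bash and awk sketch is a simplified, hypothetical check: it assumes two-space YAML indentation, only inspects top-level metadata.namespace, and does not look at --namespace flags.

```bash
#!/usr/bin/env bash
# find_namespace_mismatches EXPECTED_NS < rendered.yaml
# Prints each metadata.namespace value that differs from EXPECTED_NS and
# returns 1 if any mismatch was found.
find_namespace_mismatches() {
  awk -v want="$1" '
    /^---/       { in_meta = 0; next }   # new YAML document
    /^metadata:/ { in_meta = 1; next }   # entering top-level metadata
    /^[^ #]/     { in_meta = 0 }         # any other top-level key ends metadata
    in_meta && /^  namespace:[ ]/ {
      ns = $2
      gsub(/"/, "", ns)                  # drop surrounding double quotes
      if (ns != want) { print ns; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }
  '
}

# Example with a hypothetical chart path:
# helm template quickstart ./my-chart --namespace secure-team-ns | find_namespace_mismatches secure-team-ns
```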

Add a Helm Deployment step

In this example, we're going to use a Rolling deployment strategy.

- Select Rolling, and select Apply.

- The Helm Deployment step is added to Execution.

Command Flags at Step Level

You can optionally override or add native Helm command parameters directly in the Helm Deploy step. This provides greater flexibility to customize Helm CLI behavior without modifying the Service definition.

Command Flags at the step level are supported for Helm v3 only.

How to Configure Command Flags

- In your pipeline, add or edit a Helm Deploy step.

- Navigate to the Advanced tab.

- Locate the Command Flags field.

- Add one or more Helm command flags as needed.

- Save the step.

During execution, Harness applies the specified flags to the corresponding Helm commands.

Behavior and Precedence

| Configuration Location | Behavior |
|---|---|
| Step and Service | Step-level flags take precedence. Service-level flags are used only for commands that have no step-level flags. |
| Step only | Step-level flags are used. |
| Service only | Service-level flags are used. |

There is no merging of flags between step and service levels. Step-level flags fully override service-level flags for the same command.

Example

To perform a server-side dry run without modifying the Service:

- In the Helm Deploy step, add:

```
--dry-run=server
```

Additional Resources

For a full list of supported Helm v3 command flags, go to the Helm CLI Command Reference.
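The precedence rules above amount to a per-command choice with no merging. This hypothetical bash function models that documented rule for a single Helm command; it is a sketch of the behavior described in the table, not Harness code.

```bash
#!/usr/bin/env bash
# effective_flags STEP_FLAGS SERVICE_FLAGS
# Models the documented precedence for one Helm command:
#   - if step-level flags are set, they are used as-is (service flags are ignored);
#   - otherwise the service-level flags are used.
# The two sets are never merged.
effective_flags() {
  local step_flags="$1" service_flags="$2"
  if [ -n "$step_flags" ]; then
    printf '%s\n' "$step_flags"
  else
    printf '%s\n' "$service_flags"
  fi
}

# Examples:
# effective_flags "--dry-run=server" "--timeout 10m"   # step wins
# effective_flags "" "--timeout 10m"                   # falls back to service
```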

Environment Variables

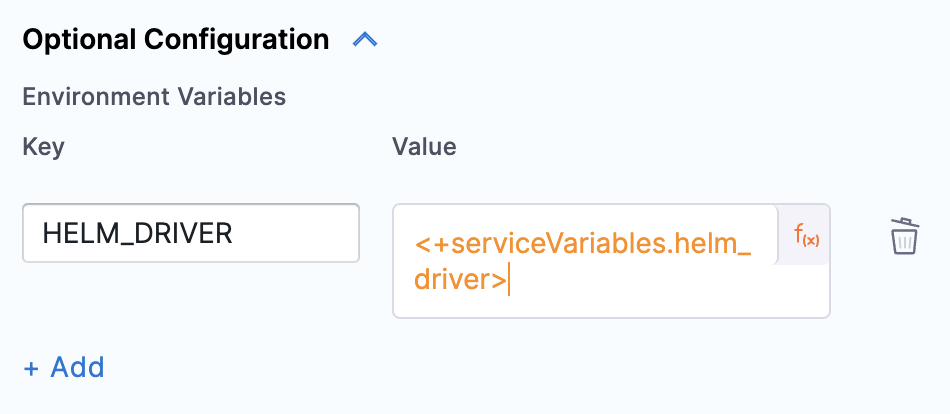

Native Helm Deploy and Native Helm Rollback steps support setting environment variables.

Under Optional Configuration for the step, add any environment variables that you would like to set during the deployment.

Deploy and review

-

Select Save to save your pipeline.

-

Select Run.

-

Select Run Pipeline.

Harness verifies the connections and then runs the Pipeline.

-

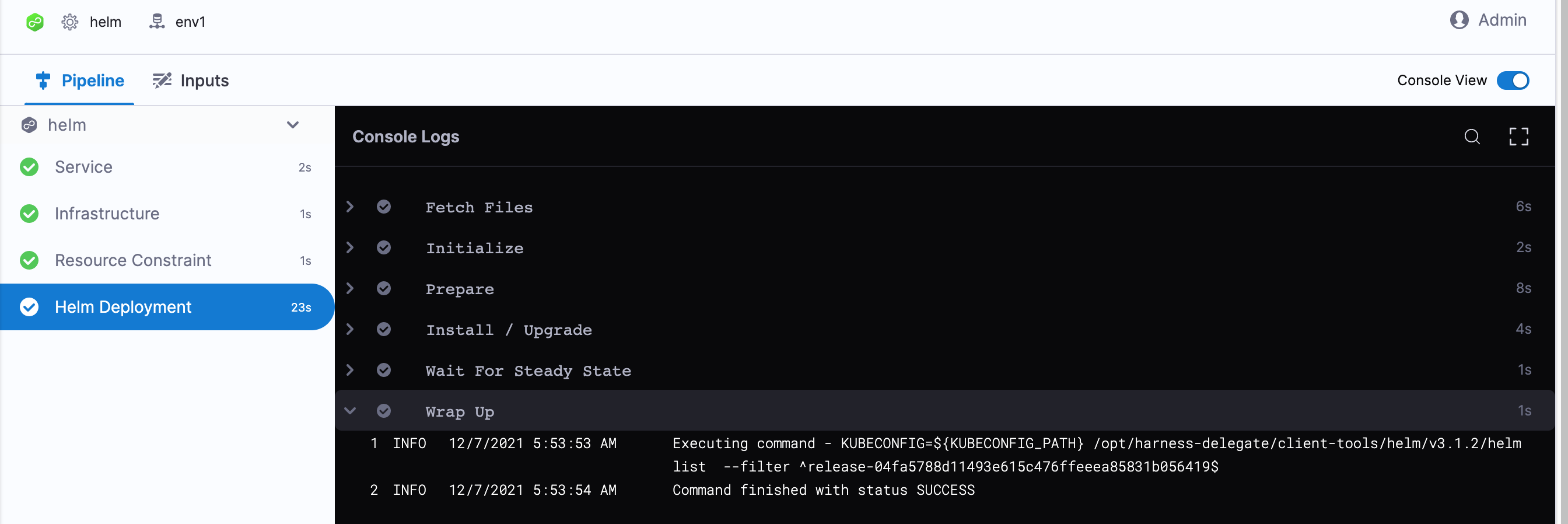

Toggle Console View to watch the deployment with more detailed logging.

-

Select the Helm Deployment step and expand Wait for Steady State.

Enable the feature flag CDS_HELM_STEADY_STATE_CHECK_1_16_V2_NG to enable steady state checks for Native Helm deployments on Kubernetes clusters running version 1.16 or later. This flag changes how Harness tracks managed workloads for rollback: Harness no longer uses a ConfigMap to match the deployed resources' release name, and instead uses helm get manifest to retrieve the workloads from a Helm release. Steady state checks for Kubernetes Jobs are controlled by a separate option in account, org, or project settings, which is not enabled by default. If you did not have this feature flag enabled before, you might now see that the Wait for Steady State check is no longer skipped; you don't need to configure anything for this.

You can see Status : quickstart-quickstart deployment "quickstart-quickstart" successfully rolled out.

Congratulations! The deployment was successful.

In your project's Deployments, you can see the deployment listed.
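As noted above, Harness retrieves a release's workloads with helm get manifest for steady state checks. To reproduce a similar check by hand, for example when troubleshooting a rollout, you can extract the workloads from that output and wait on each with kubectl rollout status. This is a simplified sketch: the function name list_workloads is hypothetical, the awk parsing assumes two-space indentation, and only Deployments, StatefulSets, and DaemonSets are considered.

```bash
#!/usr/bin/env bash
# list_workloads < manifest.yaml
# Prints kind/name (lowercase kind) for each Deployment, StatefulSet, or
# DaemonSet in a rendered manifest stream such as `helm get manifest` output.
list_workloads() {
  awk '
    function flush() {
      if (kind ~ /^(Deployment|StatefulSet|DaemonSet)$/ && name != "")
        print tolower(kind) "/" name
      kind = ""; name = ""; in_meta = 0
    }
    /^---/       { flush(); next }          # document separator
    /^kind:/     { kind = $2; next }
    /^metadata:/ { in_meta = 1; next }
    /^[^ #]/     { in_meta = 0 }            # other top-level key ends metadata
    in_meta && name == "" && /^  name:[ ]/ { name = $2; gsub(/"/, "", name) }
    END { flush() }
  '
}

# Example (release and namespace from this walkthrough):
# helm get manifest quickstart --namespace default | list_workloads |
#   while read -r workload; do
#     kubectl rollout status "$workload" --namespace default --timeout=10m
#   done
```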

Spec requirements for steady state check and versioning

Harness requires that the release label be used in every Kubernetes spec to ensure that Harness can identify a release, check its steady state, and perform verification and rollback on it.

Ensure that the release label is in every Kubernetes object's manifest. If you omit the release label from a manifest, Harness cannot track it.

The Helm built-in Release object describes the release and allows Harness to identify each release. For this reason, the harness.io/release: {{ .Release.Name }} label must be used in your Kubernetes spec.

See these Service and Deployment object examples:

```yaml
{{- if .Values.env.config}}
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ template "todolist.fullname" . }}
  labels:
    app: {{ template "todolist.name" . }}
    chart: {{ template "todolist.chart" . }}
    release: "{{ .Release.Name }}"
    harness.io/release: {{ .Release.Name }}
    heritage: {{ .Release.Service }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ template "todolist.name" . }}
      release: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ template "todolist.name" . }}
        release: {{ .Release.Name }}
        harness.io/release: {{ .Release.Name }}
    spec:
      {{- if .Values.dockercfg}}
      imagePullSecrets:
        - name: {{.Values.name}}-dockercfg
      {{- end}}
      containers:
        - name: {{ .Chart.Name }}
          image: {{.Values.image}}
          imagePullPolicy: {{ .Values.pullPolicy }}
          {{- if or .Values.env.config .Values.env.secrets}}
          envFrom:
            {{- if .Values.env.config}}
            - configMapRef:
                name: {{.Values.name}}
            {{- end}}
            {{- if .Values.env.secrets}}
            - secretRef:
                name: {{.Values.name}}
            {{- end}}
          {{- end}}
...
apiVersion: v1
kind: Service
metadata:
  name: {{ template "todolist.fullname" . }}
  labels:
    app: {{ template "todolist.name" . }}
    chart: {{ template "todolist.chart" . }}
    release: {{ .Release.Name }}
    heritage: {{ .Release.Service }}
spec:
  type: {{ .Values.service.type }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: http
      protocol: TCP
      name: http
  selector:
    app: {{ template "todolist.name" . }}
    release: {{ .Release.Name }}
```
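Because Harness cannot track objects that lack the release label, it can help to check a chart's rendered output before deploying. This hypothetical bash and awk sketch lists every rendered object whose top-level metadata.labels block has no harness.io/release key. It assumes two-space indentation and does not inspect pod template labels.

```bash
#!/usr/bin/env bash
# missing_release_label < rendered.yaml
# Prints Kind/name for each object whose top-level metadata.labels has no
# harness.io/release key, and returns 1 if any were found.
missing_release_label() {
  awk '
    function flush() {
      if (kind != "" && !has_label) { print kind "/" name; bad = 1 }
      kind = ""; name = ""; has_label = 0; in_meta = 0; in_labels = 0
    }
    /^---/                  { flush(); next }
    /^kind:/                { kind = $2; next }
    /^metadata:/            { in_meta = 1; in_labels = 0; next }
    /^[^ #]/                { in_meta = 0; in_labels = 0 }
    in_meta && /^  labels:/ { in_labels = 1; next }
    in_meta && /^  [^ ]/    { in_labels = 0 }   # sibling key under metadata
    in_meta && /^  name:[ ]/ { name = $2 }
    in_labels && /^    harness\.io\/release:/ { has_label = 1 }
    END { flush(); exit (bad ? 1 : 0) }
  '
}

# Example with a hypothetical chart path:
# helm template quickstart ./todolist | missing_release_label
```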

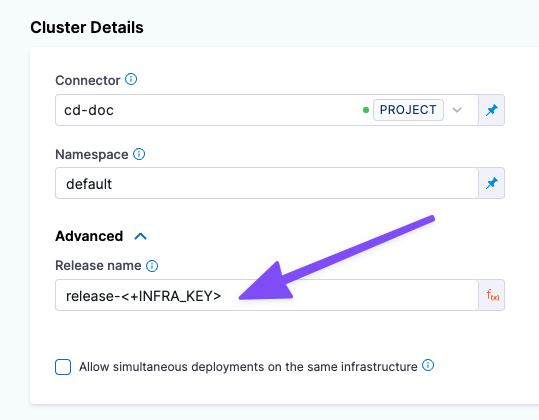

The Release name setting in the stage Infrastructure is used as the Helm Release Name to identify and track the deployment per namespace.

Autodetecting Helm Charts without configuring release names

When you want to deploy a commodity Helm Chart (ElasticSearch, Prometheus, etc.) or a pre-packaged Helm Chart, Harness now automatically applies tracking labels to the deployed Helm service. You do not need to add {{ .Release.Name }} to your Helm Chart.

Harness is able to track the deployed Helm Chart in the Services dashboard. All chart information is also available to view in the Services dashboard.

This feature requires delegate version 810xx or later. Ensure that your delegate is up to date before opting into this feature.

Deploy Helm Charts with CRDs

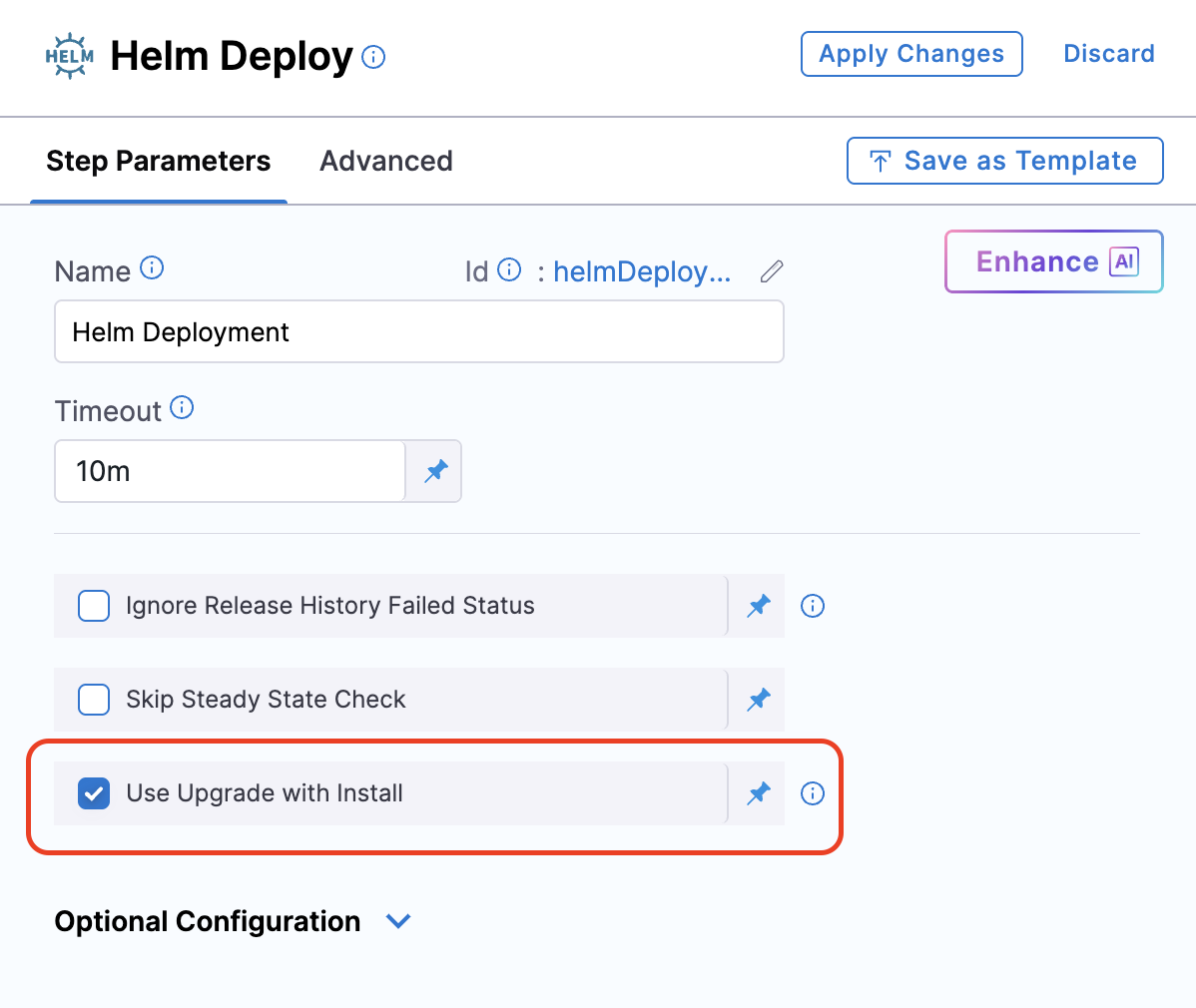

Harness now supports deploying Helm charts that include Custom Resource Definitions (CRDs) which may already exist outside the target namespace. This enhancement enables the use of the helm upgrade --install command to avoid common CRD-related installation errors.

This feature is currently behind the feature flag CDS_SKIP_HELM_INSTALL. Contact Harness Support to enable it.

Requires delegate version 856xx or later.

Once enabled, you’ll see a new checkbox titled Use Upgrade with Install in the Helm deployment step configuration.

This option:

- Supports fixed values, runtime inputs, and expressions.

- Works with both Helm v2 and Helm v3.

- Continues to support custom CLI flags.

This checkbox is available for Native Helm deployment steps, including Rolling Deploy, Canary, and Blue-Green deployments.
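For context, helm upgrade --install upgrades a release if it exists and installs it otherwise, in a single command. The hypothetical bash sketch below contrasts that with a separate install-or-upgrade decision. The branching in the else path is a generic pattern shown for comparison, not a description of Harness internals, and HELM can be overridden with a stub.

```bash
#!/usr/bin/env bash
HELM="${HELM:-helm}"

# deploy_release RELEASE CHART NAMESPACE [USE_UPGRADE_INSTALL]
deploy_release() {
  local release="$1" chart="$2" namespace="$3" use_upgrade_install="${4:-false}"
  if [ "$use_upgrade_install" = "true" ]; then
    # One command: install if the release is absent, upgrade if it exists.
    "$HELM" upgrade --install "$release" "$chart" --namespace "$namespace"
  elif "$HELM" status "$release" --namespace "$namespace" >/dev/null 2>&1; then
    # Generic pattern: the release exists, so upgrade it.
    "$HELM" upgrade "$release" "$chart" --namespace "$namespace"
  else
    # Generic pattern: no release yet, so install it.
    "$HELM" install "$release" "$chart" --namespace "$namespace"
  fi
}
```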

Native Helm notes

Please review the following notes.

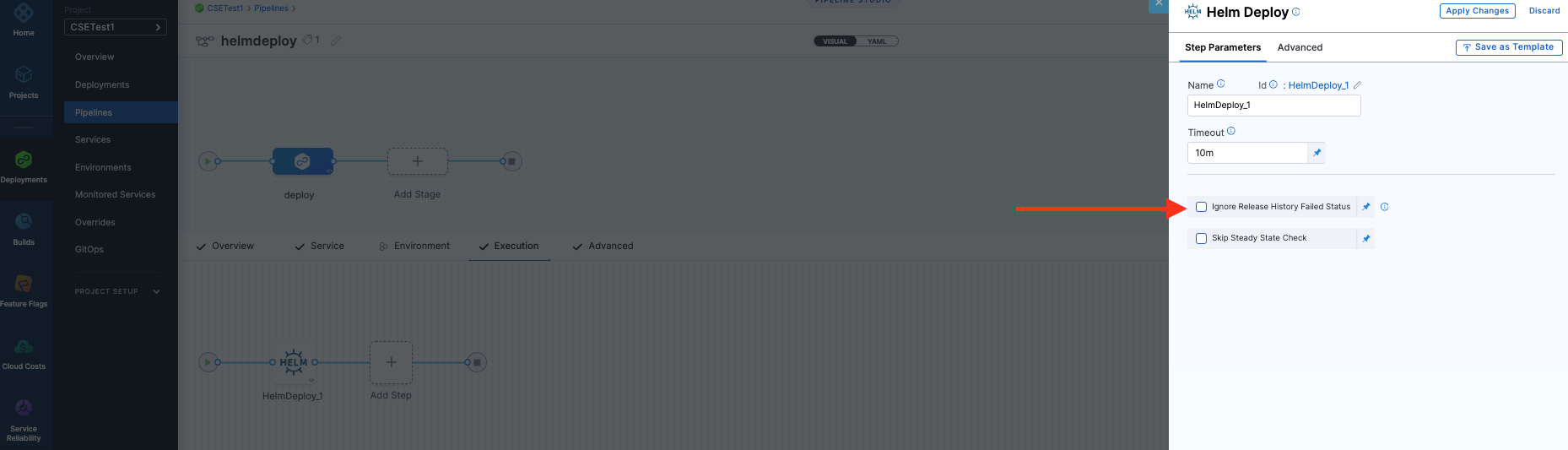

Ignore release history failed status

By default, if the latest Helm release failed, Harness does not proceed with the install/upgrade and throws an error.

For example, let's say you have a Pipeline that performs a Native Helm deployment and it fails during execution while running helm upgrade because of a timeout error from an etcd server.

You might have several retries configured in the Pipeline, but all of them will fail when Harness runs a helm history in the prepare stage with the message: there is an issue with latest release <latest release failure reason>.

Enable the Ignore Release History Failed Status option to have Harness ignore these errors and proceed with install/upgrade.
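The decision described above can be modeled in a few lines. This bash sketch is hypothetical: should_proceed is an illustrative name, it only treats a latest status of failed as blocking, and the commented helm history command assumes jq is installed.

```bash
#!/usr/bin/env bash
# should_proceed LATEST_STATUS [IGNORE_FAILED]
# Returns 1 if the latest release status is "failed" and the ignore option
# is off (IGNORE_FAILED other than "true"); otherwise returns 0.
should_proceed() {
  local status="$1" ignore_failed="${2:-false}"
  if [ "$status" = "failed" ] && [ "$ignore_failed" != "true" ]; then
    echo "latest release status is $status; not proceeding" >&2
    return 1
  fi
  return 0
}

# One way to read the latest status (release and namespace are examples):
# status=$(helm history quickstart --namespace default --max 1 -o json | jq -r '.[-1].status')
# should_proceed "$status" false
```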

Options for connecting to a Helm chart store

The options available for specifying a Helm chart store depend on whether specific feature flags are enabled on your account. The options described here are available either without any feature flags or with specific feature flags enabled:

- Direct Connection. Contains the OCI Helm Registry Connector option (shortened to OCI Helm), which you can use with any OCI-based registry.

- Via Cloud Provider. Contains the ECR connector option. This connector is specifically designed for AWS ECR to help you overcome the limitation of having to regenerate the ECR registry authentication token every 12 hours. The ECR connector option uses an AWS connector and regenerates the required authentication token if the token has expired.

- For details on using different authentication types (access key, delegate IAM, and IRSA), go to Add an AWS connector.
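For comparison, this is roughly what the ECR connector saves you from doing by hand: fetching a short-lived ECR token with the AWS CLI and logging Helm in to the registry. This is a hypothetical bash sketch. The account ID, region, and chart name are placeholders, and AWS and HELM can be overridden with stubs.

```bash
#!/usr/bin/env bash
AWS="${AWS:-aws}"
HELM="${HELM:-helm}"

# ecr_registry ACCOUNT_ID REGION: the standard ECR registry hostname.
ecr_registry() {
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$1" "$2"
}

# ecr_helm_login ACCOUNT_ID REGION: get a fresh token and log Helm in.
# The token expires after 12 hours, so a manual setup must repeat this.
ecr_helm_login() {
  local account_id="$1" region="$2"
  "$AWS" ecr get-login-password --region "$region" |
    "$HELM" registry login --username AWS --password-stdin "$(ecr_registry "$account_id" "$region")"
}

# Example (placeholders):
# ecr_helm_login 123456789012 us-east-1
# helm pull "oci://$(ecr_registry 123456789012 us-east-1)/my-chart" --version 1.0.0
```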

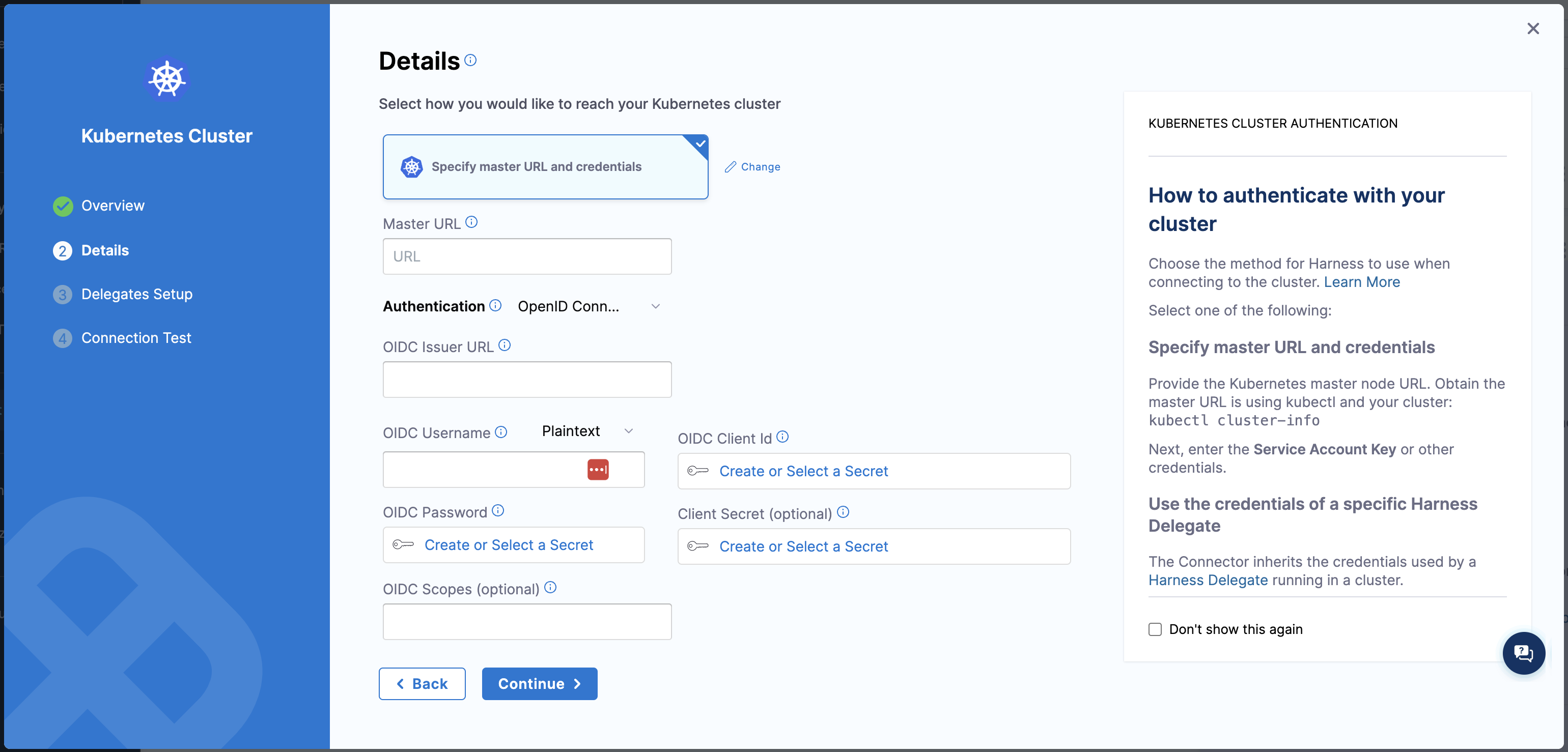

We now support OpenID Connect (OIDC) authentication in Native Helm, enabling seamless integration with OIDC-compliant identity providers for enhanced security and user management.

To configure OIDC authentication for a Kubernetes cluster:

- In account or project settings, select New Connector, and then select Kubernetes cluster.

- In the Details tab, select Specify master URL and credentials.

- Enter the Master URL.

- In Authentication, select OpenID Connect and fill in the required details.

Helm commands performance analysis

Harness interacts with Helm charts and repositories by running various helm commands. When these commands run in parallel against a large Helm repository, they can use a significant amount of CPU on the Harness Delegate. The following is a summary of the resource-intensive helm commands that Harness uses:

- helm repo add: Avoids redundant additions by checking whether the repository already exists, and uses locking to prevent conflicts during parallel deployments.

- helm repo update: Asynchronously fetches index.yaml for all repositories, then unmarshals and sorts them. Parallel runs do not use locking but still complete without failures.

- helm pull: Pulls a chart and writes it to local disk. To do this, it unmarshals the repository config, iterates through the repositories, loads the index.yaml file, and matches the requested chart entry for download.

Harness improvements

To improve the performance of concurrent helm commands, N parallel helm commands on the same Helm repository do not always spawn an equivalent number of processes. Instead, Harness tries to reuse the output of one command for other concurrent commands.

This reduces both CPU and memory usage on the Harness Delegate, because fewer processes run concurrently. These improvements are available in Harness Delegate version 81803 and later.
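Harness does not document the internals of this reuse. As a general illustration of the idea only, this bash sketch wraps a command so that callers with the same key share one run's output: the first caller runs the command under an exclusive flock lock, and callers that acquire the lock while the cached output is still fresh reuse it. Assumptions: Linux with util-linux flock and GNU stat; the function name, cache directory, and TTL are hypothetical.

```bash
#!/usr/bin/env bash
# single_flight KEY TTL_SECONDS COMMAND [ARGS...]
# Runs COMMAND at most once per TTL window per KEY; concurrent or later callers
# within the window print the cached output instead of spawning a new process.
single_flight() {
  local key="$1" ttl="$2"
  shift 2
  local dir="${SINGLE_FLIGHT_DIR:-/tmp/single-flight}"
  local out="$dir/$key.out"
  mkdir -p "$dir" || return 1
  (
    flock 9 || exit 1                     # wait for any in-flight run of KEY
    now=$(date +%s)
    if [ -f "$out" ] && [ $(( now - $(stat -c %Y "$out") )) -lt "$ttl" ]; then
      cat "$out"                          # fresh result: reuse it
    else
      "$@" > "$out.tmp" && mv "$out.tmp" "$out" && cat "$out"
    fi
  ) 9> "$dir/$key.lock"
}

# Example: share one `helm repo update` among parallel jobs for 60 seconds.
# single_flight helm-repo-update 60 helm repo update
```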

Harness Certified Limits

Delegate used for benchmarking: 2 vCPUs, 8 GB memory K8s delegate (1 replica, version 81803)

| Index.yaml size | Concurrent deployments |
|---|---|
| 20 MB | 15 |
| 75 MB | 5 |
| 150 MB | 3 |
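To compare your own repository against these benchmarks, you could measure the cached index.yaml and look up the nearest benchmarked size. In this hypothetical bash sketch, sizes between benchmark points are rounded up to the next benchmarked size, which is a conservative assumption rather than a Harness guarantee. The cache path in the comment is Helm's default on Linux, and the repository name bitnami is an example.

```bash
#!/usr/bin/env bash
# certified_concurrency INDEX_SIZE_MB
# Looks up the certified concurrent-deployment count from the table above
# (2 vCPU / 8 GB delegate). Sizes above 150 MB were not benchmarked.
certified_concurrency() {
  local size_mb="$1"
  if   [ "$size_mb" -le 20 ];  then echo 15
  elif [ "$size_mb" -le 75 ];  then echo 5
  elif [ "$size_mb" -le 150 ]; then echo 3
  else
    echo "not benchmarked"
    return 1
  fi
}

# Measure an index file, rounding up to whole MB:
# index="${XDG_CACHE_HOME:-$HOME/.cache}/helm/repository/bitnami-index.yaml"
# size_mb=$(( ($(stat -c %s "$index") + 1048575) / 1048576 ))
# certified_concurrency "$size_mb"
```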

Next Steps

See Kubernetes How-tos for other deployment features.