VM deployments using SSH

You can use a Secure Shell (SSH) deployment type to deploy your artifacts to VM or bare-metal hosts located in Microsoft Azure, AWS, or any platform-agnostic Physical Data Center (PDC).

Many traditional deployments use runtime environments such as Tomcat or JBoss. Your target hosts should have these installed before deployment. You can use the Harness Command step to install them in the same pipeline as your SSH deployment.

Deployment summary

An SSH deployment involves configuring the following:

- Create a Harness Secure Shell service.

- Set up a Harness connector to access your repository.

- Define the target infrastructure for deployment.

- Add the Harness connector for the target infrastructure.

- Add the credentials needed to connect to target hosts.

- Select the deployment strategy.

- Run the pipeline and review.

We support using SSH with an OIDC-enabled AWS connector, but it requires Delegate version 854xx or later. For more information, refer to AWS OIDC connector reference.

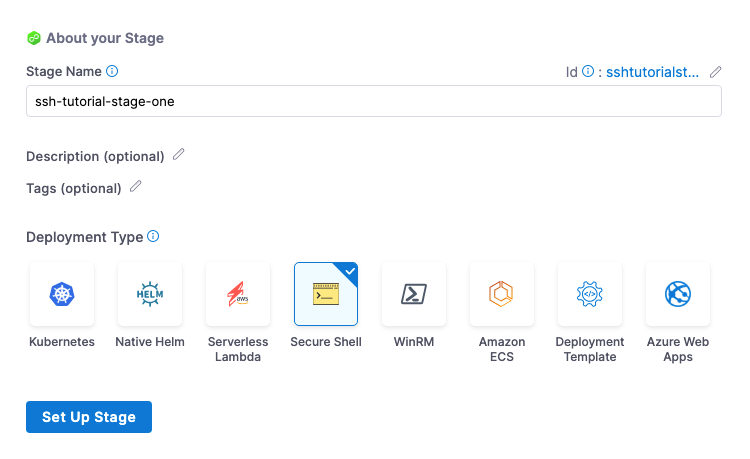

SSH stages

To start an SSH deployment, create a new pipeline and add a stage for Secure Shell deployments.

Next, you create the service, environment, and execution steps for the stage.

SSH Services

SSH Services define the artifact you want to deploy and any config files you want to use.

In the stage Service tab, you add the artifact metadata and the related config files to execute on the target hosts.

Create a Harness Secure Shell service

- For Select Service, select New Service and enter a name for the service.

- For Service Definition, in Deployment Type, select Secure Shell.

Add the artifact connector

For Secure Shell, you can access artifacts from the following sources:

- Harness Artifact Registry (Docker artifact type only)

- Jenkins

- Artifactory

- Bamboo

- Amazon S3

- Amazon ECR

- Nexus2

- Azure Artifacts

- GCR

  Warning: Google Container Registry (GCR) is being deprecated. For more details, refer to the Deprecation Notice.

- ACR

- Docker Registry (platform-agnostic)

- Custom. If you select Custom, you will need to provide a Bash script.

The supported artifact package types include Docker, JAR, TAR, WAR, RPM and ZIP.

Harness includes connectors for all the major artifact repositories. In this example, we will use Artifactory and a publicly available artifact.

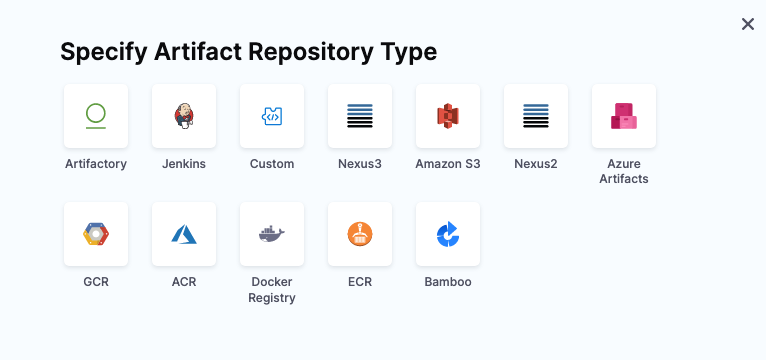

-

In Artifacts, select Add Primary Artifact.

-

In Specify Artifact Repository Type.

- As an example, select Artifactory and select Continue. You can use another artifact repo if you like.

-

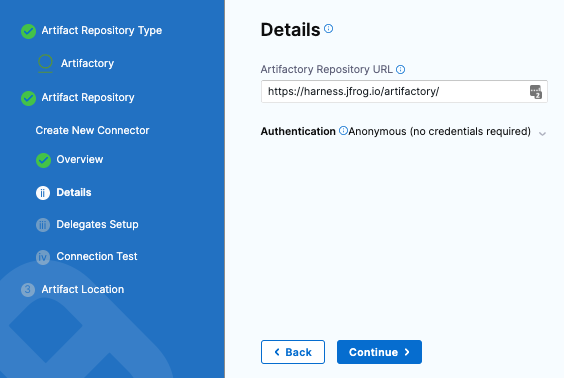

For the Artifactory Connector, select New Artifactory Connector.

-

In Name, enter a name for the connector and select Continue.

-

In Details, enter the the following URL path for Artifactory Repository URL:

https://harness.jfrog.io/artifactory. In this example, we will use the artifacts stored in that repository. -

For Authentication, select Anonymous and select Continue.

-

Select Continue to connect with Artifactory by using a Harness Delegate.

-

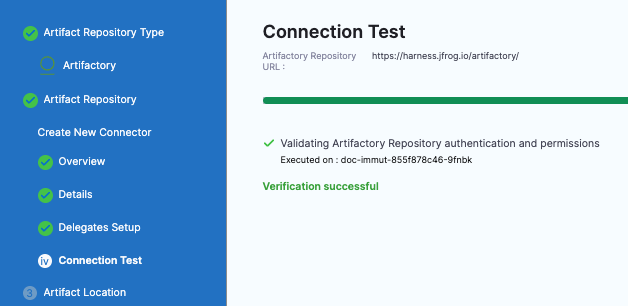

In Delegates Setup, select Connect through the Harness Platform.

-

Select Save and Continue.

-

In Connection Test, Harness validates authentication and permissions for the repo.

-

Select Continue.

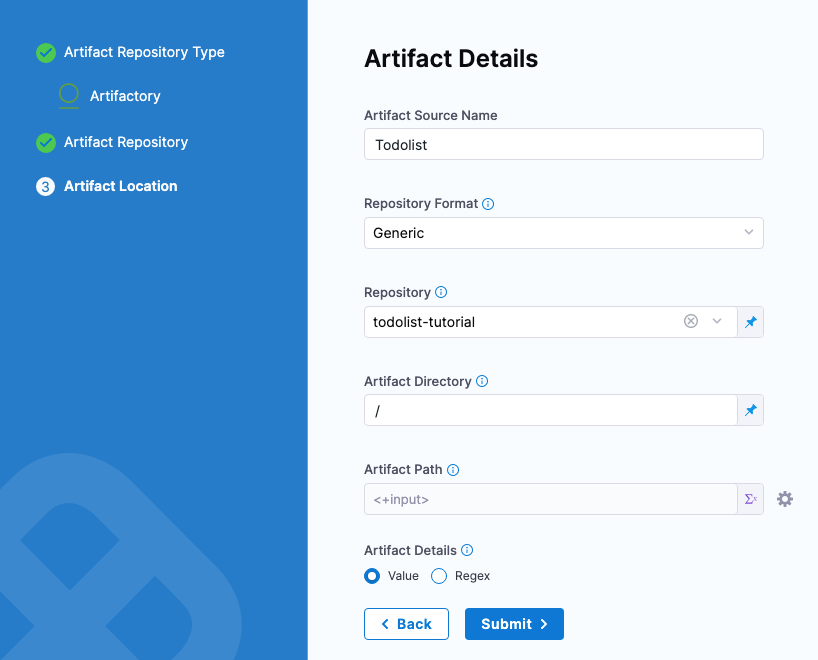

Set up artifact location and details

For this example, we'll use a publicly available ToDo List app artifact, todolist.war, available in a public Harness Artifactory repo.

In Artifact Details, enter the following:

- In Artifact Source Name, enter Todolist.

- In Repository Format, keep the default value Generic.

- For Repository, enter todolist-tutorial. If you select Repository, Harness loads any available repositories and displays them for selection.

- In Artifact Directory, enter a forward slash (/).

- In Artifact Details, keep the default Value.

- In Artifact Path, leave the default Runtime Input value <+input>.

- Select Submit.

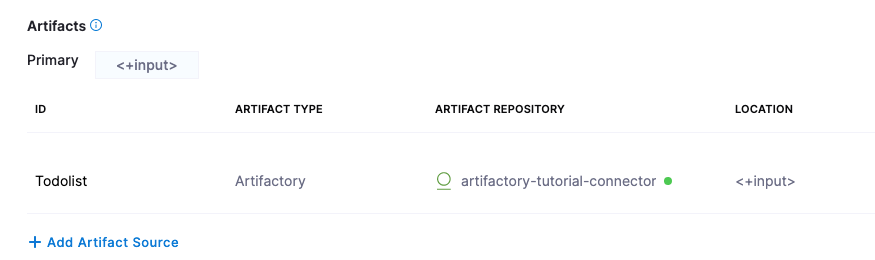

The artifact is added to your service.

- Select Save. The Service is added to your stage.

- Select Continue to set up the target Environment.

Define the target infrastructure

You define the target infrastructure for your deployment in the Environment settings of the pipeline stage. You can define an environment separately and select it in the stage, or create the environment within the stage Environment tab.

There are two methods of specifying the deployment target infrastructure:

- Pre-existing: the target infrastructure already exists and you simply need to provide the required settings.

- Dynamically provisioned: the target infrastructure will be dynamically provisioned on-the-fly as part of the deployment process.

For details on Harness provisioning, go to Provisioning overview.

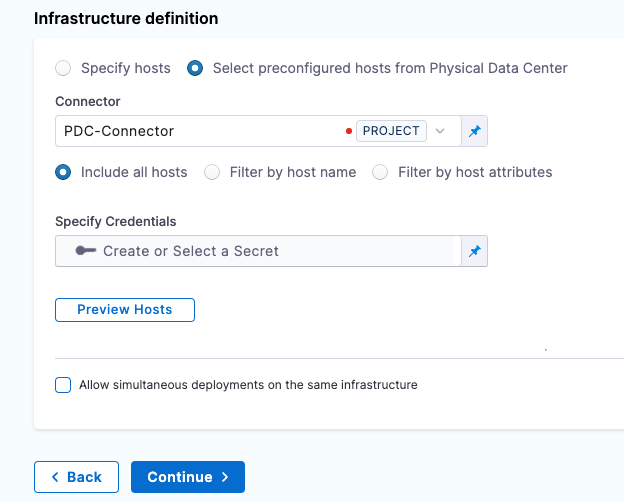

Selecting hosts

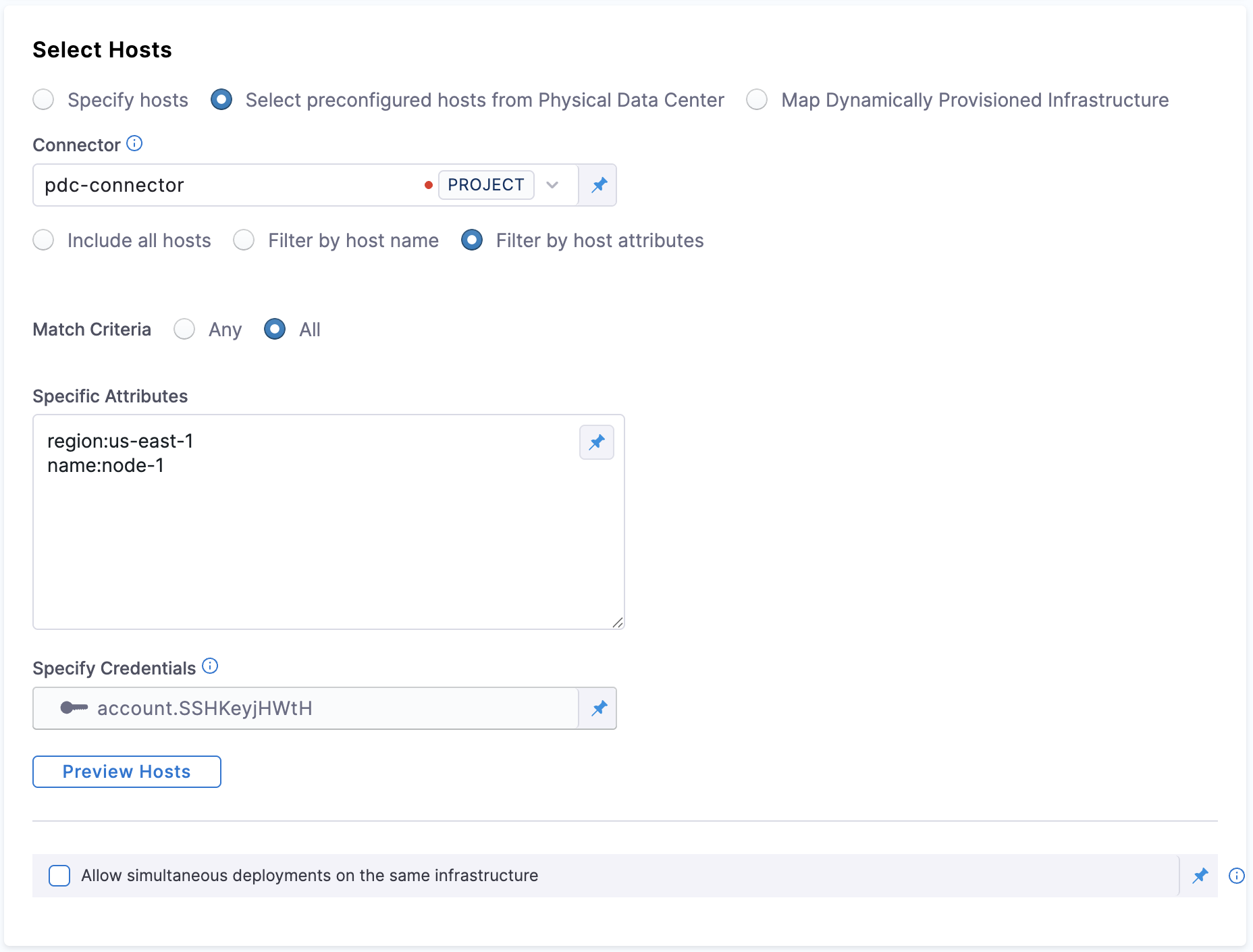

When you set up the Infrastructure Definition for the stage, there are three options for selecting the target hosts:

- Specify hosts

- Select preconfigured hosts from Physical Data Center

- Map Dynamically Provisioned Infrastructure

The Specify hosts or Select preconfigured hosts from Physical Data Center options are used when you are deploying to a pre-existing infrastructure.

The Map Dynamically Provisioned Infrastructure option is used when you are deploying to a dynamically provisioned infrastructure.

If you select Specify hosts, enter the hosts as a comma-separated list.

Filtering Hosts by Attributes

This setting is available when you choose Select preconfigured hosts from Physical Data Center under Select hosts and then select Filter by host attributes.

You can control whether multiple host‑attribute filters are combined with OR (match any) or AND (match all) logic:

- Any (default): a host is selected if at least one filter condition matches (existing behavior).

- All: a host is selected only if every filter condition matches.

Specific Attribute: the attribute conditions that hosts are matched against, such as region, type, or name.

Under Preview Hosts, you can see which hosts match the conditions.

Example of how filtering hosts by attributes works

Suppose you have two hosts specified in your physical data center:

```
// Host‑1
{
  "hostname": "ec2-00-00-01.compute-1.amazonaws.com",
  "hostAttribute": {
    "region": "us-east-1",
    "name": "node-1"
  }
}

// Host‑2
{
  "hostname": "ec2-00-00-02.compute-1.amazonaws.com",
  "hostAttribute": {
    "region": "us-west",
    "name": "node-5"
  }
}
```

Scenario 1: matchCriteria: ALL

YAML Example for matchCriteria: ALL

```yaml
infrastructureDefinition:
  name: pdc
  identifier: pdc
  orgIdentifier: default
  projectIdentifier: project_id
  environmentRef: env_id
  deploymentType: Ssh
  type: Pdc
  spec:
    connectorRef: abc
    credentialsRef: credentials_ref
    hostFilter:
      type: HostAttributes
      spec:
        value:
          region: us-east-1
          name: node-1
        matchCriteria: ALL
  allowSimultaneousDeployments: false
```

- matchCriteria: ALL means that both conditions must be true for the host to be selected (both region=us-east-1 and name=node-1).

Host‑1

- region: us-east-1 (matches)

- name: node-1 (matches)

Selected: Host‑1 meets both conditions, so it matches.

Host‑2

- region: us-west (does not match us-east-1)

- name: node-5 (does not match node-1)

Not Selected: Host‑2 does not meet both conditions, so it does not match.

Summary for ALL:

- Host‑1 is selected because both region and name match.

- Host‑2 is not selected because neither region nor name matches.

Scenario 2: matchCriteria: ANY

YAML Example for matchCriteria: ANY

```yaml
infrastructureDefinition:
  name: pdc
  identifier: pdc
  orgIdentifier: default
  projectIdentifier: project_id
  environmentRef: env_ref
  deploymentType: Ssh
  type: Pdc
  spec:
    connectorRef: abc
    credentialsRef: credentials_ref
    hostFilter:
      type: HostAttributes
      spec:
        value:
          region: us-east-1
          name: node-1
        matchCriteria: ANY
  allowSimultaneousDeployments: false
```

- matchCriteria: ANY means that at least one condition must be true for the host to be selected (either region=us-east-1 or name=node-1).

Host‑1

- region: us-east-1 (matches)

- name: node-1 (matches)

Selected: Host‑1 meets both conditions, so it matches.

Host‑2

- region: us-west (does not match us-east-1)

- name: node-5 (does not match node-1)

Not Selected: Host‑2 does not meet either condition, so it does not match.

Summary for ANY:

- Host‑1 is selected because both region and name match.

- Host‑2 is not selected because neither region nor name matches.

Key Takeaways:

- matchCriteria: ALL: The host is selected only if both conditions are true.

- matchCriteria: ANY: The host is selected if either of the conditions is true.
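To make the difference between ALL and ANY concrete, here is a small Python model of the selection rule applied to the two example hosts. It is an illustrative sketch of the documented semantics, not Harness's implementation: the function names `host_matches` and `filter_hosts` are hypothetical, and the sketch assumes each condition is an exact string match on a single attribute.

```python
from typing import Dict, List

# The two example hosts from the physical data center above.
HOSTS: List[Dict] = [
    {"hostname": "ec2-00-00-01.compute-1.amazonaws.com",
     "hostAttribute": {"region": "us-east-1", "name": "node-1"}},
    {"hostname": "ec2-00-00-02.compute-1.amazonaws.com",
     "hostAttribute": {"region": "us-west", "name": "node-5"}},
]


def host_matches(attributes: Dict[str, str], conditions: Dict[str, str],
                 match_criteria: str = "ANY") -> bool:
    """Check one host's attributes against the filter conditions.

    Each condition is treated as an exact match on one attribute (an assumption).
    """
    results = [attributes.get(key) == value for key, value in conditions.items()]
    if match_criteria == "ALL":
        return all(results)  # AND: every condition must match
    if match_criteria == "ANY":
        return any(results)  # OR: at least one condition must match
    raise ValueError(f"unknown matchCriteria: {match_criteria}")


def filter_hosts(hosts: List[Dict], conditions: Dict[str, str],
                 match_criteria: str = "ANY") -> List[str]:
    """Return the hostnames that pass the filter, in input order."""
    return [h["hostname"] for h in hosts
            if host_matches(h["hostAttribute"], conditions, match_criteria)]


if __name__ == "__main__":
    example = {"region": "us-east-1", "name": "node-1"}
    print(filter_hosts(HOSTS, example, "ALL"))  # Host-1 only
    print(filter_hosts(HOSTS, example, "ANY"))  # Host-1 only

    # Each host matches exactly one of these conditions, so ALL and ANY differ.
    mixed = {"region": "us-west", "name": "node-1"}
    print(filter_hosts(HOSTS, mixed, "ALL"))  # []
    print(filter_hosts(HOSTS, mixed, "ANY"))  # both hosts
```

With the example filter, both modes select only Host‑1. The second filter shows where they diverge: each host satisfies exactly one condition, so ALL selects nothing while ANY selects both hosts.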

Pre-existing infrastructure

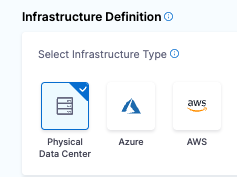

Let's look at an example of setting up an Infrastructure Definition for a pre-existing infrastructure.

- In Specify Environment, select/create an environment.

- In the environment, in Specify Infrastructure, select New infrastructure.

- Enter a name for the new infrastructure.

- For Select Infrastructure Type, select where you want to deploy. For this example, we'll use Physical Data Center.

- Keep the default selection, Physical Data Center.

Targeting Specific Hosts for Deployment

You can pass in specific host IPs to deploy to in the SSH Infrastructure Definition. When your deployment stage deploys a Secure Shell (SSH) or Windows Remote Management (WinRM) service using an Infrastructure Definition of deployment type SSH or WinRM, you can select target hosts that were not selected in the stage's Infrastructure Definition. Harness presents a drop-down list in the Pipeline Run form with the queried list of host names.


The following example Infrastructure Definition sets targetedHosts as a runtime input:

```yaml
infrastructureDefinition:
  name: aws-ssh-infra
  identifier: awssshinfra
  orgIdentifier: default
  projectIdentifier: alexctest
  environmentRef: qasetup
  deploymentType: Ssh
  type: SshWinRmAws
  spec:
    credentialsRef: qasetupcredentials
    connectorRef: qasetupconnector
    region: us-east-1
    awsInstanceFilter:
      vpcs:
        - vpc-c20f38b9
      tags:
        type: ssh
    hostConnectionType: PublicIP
    instanceType: Aws
    targetedHosts: <+input> ## This will be provided at runtime.
  allowSimultaneousDeployments: false
```

Limitations

- instance.name has the same value as instance.hostName. Both are available for backward compatibility.

- Targeting specific hosts is supported only with AWS and Azure infrastructure. It uses the same permissions as before, so no new API calls are required.

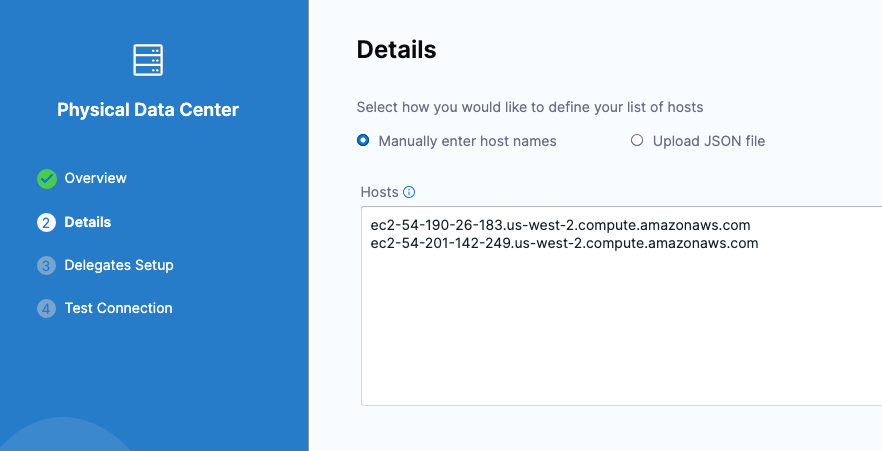

Create the PDC connector for the hosts

- In Infrastructure Definition, for Connector, select Select Connector to create the Connector for the PDC.

- In Create or Select an Existing Connector, select New Connector.

- In Physical Data Center, enter a name for this connector, such as PDC-Connector.

- Select Continue.

- In Details, keep the default for Manually enter host names and enter the hostname(s) for the target instance(s).

- Select Continue.

- In Delegates Setup, keep the default for Use any available Delegate.

- Select Save and Continue. Harness validates connectivity for the PDC connector.

- Select Finish. The Infrastructure Definition is updated with the PDC Connector.

Use an SSH credential for authenticating to the target hosts

You can use an SSH Key or Kerberos for authenticating to the target host(s). In this example, we will use an SSH Key.

- In Specify Credentials, select Create or Select a Secret.

- In Create or Select an Existing Secret, select New SSH Credential.

- In SSH Details, for Name, enter a name for this SSH Credential and select Continue.

- In Configuration and Authentication, there are three authentication options. In this example, we will use Username/SSH Key. Select the drop-down menu and select Username/SSH Key.

- Enter a username, such as ec2-user.

  Tip: For Amazon Linux EC2 instances, use ec2-user. Other AMIs use different default usernames (for example, ubuntu on Ubuntu AMIs).

- For Select or create a SSH Key, select Create or Select a Secret.

- In Create or Select an Existing Secret, select New Secret File.

- In Add new Encrypted File, enter a name for Secret Name, such as ssh-key-name. This is the name you will use to reference this file.

- For Select File, select Browse. On your machine, select the private key file for your instance (for EC2, use the .pem key pair file).

- Select Save.

- In Configuration and Authentication, keep the default values for Passphrase and SSH port. Select Save and Continue.

- In Verify Connection, enter the hostname for the instance in the Add a Host Name to start verification field and select Connection Test.

- The Secure Shell connection to the instance is tested. Select Finish, and then select Continue.

  You can use the Preview Hosts section to test the connection at any time.

- Select Save.

- Back in Environment, select Continue.

Next, you'll select the deployment strategy for this stage, the package type, and the number of instances to deploy on.

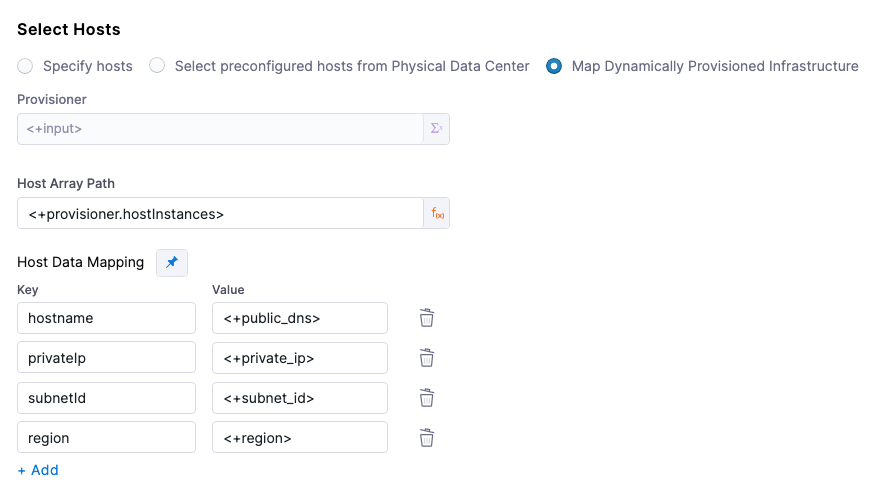

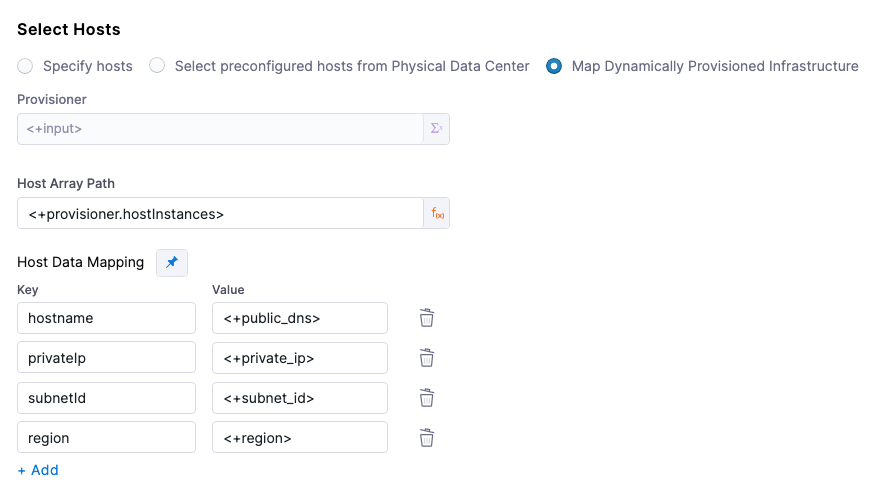

Dynamically provisioned infrastructure

Here is a summary of the steps to dynamically provision the target infrastructure for a deployment:

- Add dynamic provisioning to the CD stage:

  - In a Harness Deploy stage, in Environment, enable the option Provision your target infrastructure dynamically during the execution of your Pipeline.

  - Select the type of provisioner that you want to use.

    Harness automatically adds the provisioner steps for the provisioner type you selected.

  - Configure the provisioner steps to run your provisioning scripts.

  - Select or create a Harness infrastructure in Environment.

- Map the provisioner outputs to the Infrastructure Definition:

  - In the Harness infrastructure, enable the option Map Dynamically Provisioned Infrastructure.

  - Map the provisioning script/template outputs to the required infrastructure settings.

Supported provisioners

The following provisioners are supported for SSH deployments:

- Terraform

- Terragrunt

- Terraform Cloud

- CloudFormation

- Azure Resource Manager (ARM)

- Azure Blueprint

- Shell Script

Adding dynamic provisioning to the stage

To add dynamic provisioning to a Harness pipeline Deploy stage, do the following:

- In a Harness Deploy stage, in Environment, enable the option Provision your target infrastructure dynamically during the execution of your Pipeline.

- Select the type of provisioner that you want to use.

  Harness automatically adds the necessary provisioner steps.

- Set up the provisioner steps to run your provisioning scripts.

For documentation on each of the required steps for the provisioner you selected, go to the following topics:

- Terraform:
  - Terraform Plan
  - Terraform Apply
  - Terraform Rollback. To see the Terraform Rollback step, toggle the Rollback setting.

- Terragrunt

- Terraform Cloud

- CloudFormation:
  - Create Stack
  - Delete Stack
  - Rollback Stack. To see the Rollback Stack step, toggle the Rollback setting.

- Azure Resource Manager (ARM)

- Azure Blueprint

- Shell Script

Mapping provisioner output

Once you set up dynamic provisioning in the stage, you must map outputs from your provisioning script/template to specific settings in the Harness Infrastructure Definition used in the stage.

- In the same CD Deploy stage where you enabled dynamic provisioning, select or create (New Infrastructure) a Harness infrastructure.

- In the Harness infrastructure, in Select Infrastructure Type, select Physical Data Center, Azure, or AWS.

- In Select Hosts/Azure/Amazon Web Services Infrastructure Details, enable the option Map Dynamically Provisioned Infrastructure.

  A Provisioner setting is added and configured as a runtime input.

- Map the provisioning script/template outputs to the required infrastructure settings.

To provision the target deployment infrastructure, Harness needs specific infrastructure information from your provisioning script. You provide this information by mapping specific Infrastructure Definition settings in Harness to outputs from your template/script.

For SSH infrastructures, Harness needs the following settings mapped to outputs:

- Physical Data Center (PDC):
  - Host Array Path
  - Host Data Mapping: map outputs for all of the necessary connection information. For example, if the VMs are on a cloud platform:
    - hostname
    - privateIp
    - subnetId
    - region

- Azure:
  - Subscription Id
  - Resource Group
  - Tag (optional)

- AWS:
  - Region
  - Tag (optional)

Ensure the mapped settings are set to the Expression option.

Host Array Path

For SSH PDC deployments, you use the expression <+provisioner.OUTPUT_NAME> for the Host Array Path setting.

For the subsequent Host Data Mapping key-value pairs, you use the expression format <+HOST_PROPERTY>. For example, <+public_dns>.


Here's a snippet of a Terraform script that provisions the infrastructure for an AWS EC2 VM SSH deployment and includes the required outputs for Physical Data Center:

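```hcl
provider "aws" {
  region = "us-east-1" # Replace with your desired AWS region
}

# Looks up the region configured on the provider so it can be exported.
data "aws_region" "current" {}

resource "aws_vpc" "example" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_subnet" "example" {
  vpc_id     = aws_vpc.example.id
  cidr_block = "10.0.1.0/24"
}

resource "aws_security_group" "example" {
  name_prefix = "example-"
  vpc_id      = aws_vpc.example.id # Must be in the same VPC as the instance's subnet
}

resource "aws_instance" "example" {
  ami                    = "ami-0c55b159cbfafe1f0" # Replace with an AMI available in your region
  instance_type          = "t2.micro"
  subnet_id              = aws_subnet.example.id
  vpc_security_group_ids = [aws_security_group.example.id]

  tags = {
    Name = "ExampleInstance"
  }
}

output "hostname" {
  # Empty unless the instance gets a public IP and the VPC has DNS hostnames enabled.
  value = aws_instance.example.public_dns
}

output "privateIp" {
  value = aws_instance.example.private_ip
}

output "subnetId" {
  value = aws_subnet.example.id
}

output "region" {
  # Provider attributes cannot be referenced directly, so use the data source.
  value = data.aws_region.current.name
}
```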

In the Harness Infrastructure Definition, you map outputs to their corresponding settings using expressions in the format <+provisioner.OUTPUT_NAME>, such as <+provisioner.region>.

Reference mapped instance properties

Once you have mapped provisioning script outputs to the stage Infrastructure Definition, you can reference them in Execution of the stage.

To reference a mapped output, you use an expression in the format <+instance.properties.*>.

For example, here are some Host Data Mapping keys and expressions that reference them:

- hostname: <+instance.properties.hostname>

- privateIp: <+instance.properties.privateIp>

- subnetId: <+instance.properties.subnetId>

- region: <+instance.properties.region>
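The relationship between Host Array Path, Host Data Mapping, and `<+instance.properties.*>` can be pictured with a conceptual Python model. This is a sketch under loud assumptions: it assumes the Host Array Path output resolves to a list of host objects, and the output shape, the property names (`public_dns`, `private_ip`, `subnet_id`), and the `map_instances` function are all hypothetical. It illustrates the mapping only; it is not how Harness evaluates expressions.

```python
from typing import Any, Dict, List

# Hypothetical value of a provisioner output referenced by Host Array Path,
# for example <+provisioner.hosts>: one object per provisioned VM.
PROVISIONER_HOSTS: List[Dict[str, Any]] = [
    {"public_dns": "ec2-1-2-3-4.compute-1.amazonaws.com",
     "private_ip": "10.0.1.10", "subnet_id": "subnet-0abc", "region": "us-east-1"},
    {"public_dns": "ec2-1-2-3-5.compute-1.amazonaws.com",
     "private_ip": "10.0.1.11", "subnet_id": "subnet-0abc", "region": "us-east-1"},
]

# Host Data Mapping: Harness key -> host property (the <+HOST_PROPERTY> part).
HOST_DATA_MAPPING: Dict[str, str] = {
    "hostname": "public_dns",
    "privateIp": "private_ip",
    "subnetId": "subnet_id",
    "region": "region",
}


def map_instances(hosts: List[Dict[str, Any]],
                  mapping: Dict[str, str]) -> List[Dict[str, Any]]:
    """Build one properties dict per host, keyed by the Host Data Mapping keys."""
    instances = []
    for host in hosts:
        # A missing property raises KeyError, so mapping mistakes surface early.
        instances.append({key: host[prop] for key, prop in mapping.items()})
    return instances


if __name__ == "__main__":
    for props in map_instances(PROVISIONER_HOSTS, HOST_DATA_MAPPING):
        # Conceptually what <+instance.properties.hostname> and
        # <+instance.properties.privateIp> would resolve to for each instance.
        print(props["hostname"], props["privateIp"])
```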

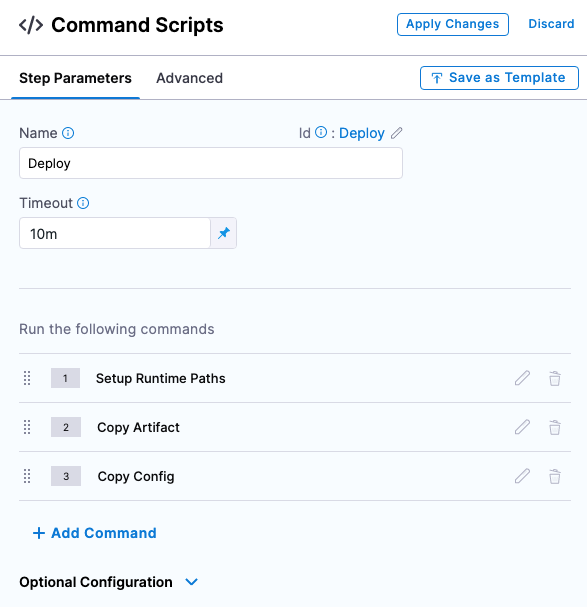

SSH Executions

In Execution, Harness automatically adds the steps required to deploy the service to the environment according to the deployment strategy you select.

The Execution Strategies supported for Secure Shell include Blank Canvas, Basic, Rolling, and Canary. Let's look at Basic.

Basic deployments

-

In Execution Strategies, select Basic. Typically, you use basic when deploying to one host and rolling or canary for multiple hosts.

-

For Package type, select WAR.

-

Select Use Strategy. Harness adds the Deploy step for execution.

-

Select the Deploy step. Here is where you add the scripts for your package. We'll use the defaults for this example. So, we'll simply copy the artifact to the target host.

-

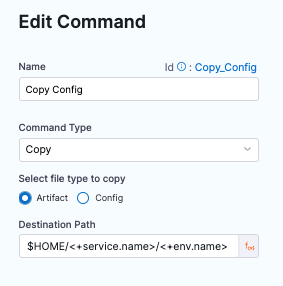

In Command Scripts, edit Copy Config.

-

In Edit Command, for Select file type to copy, select Artifact.

-

Select Save.

-

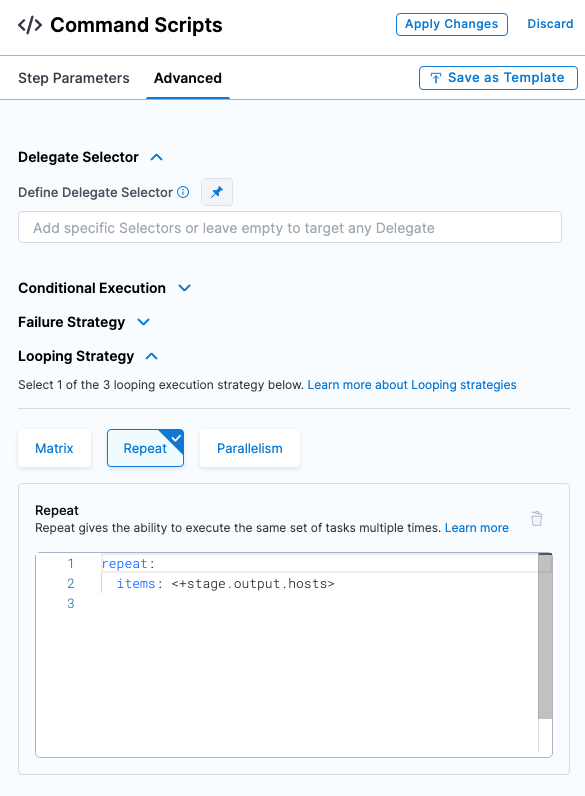

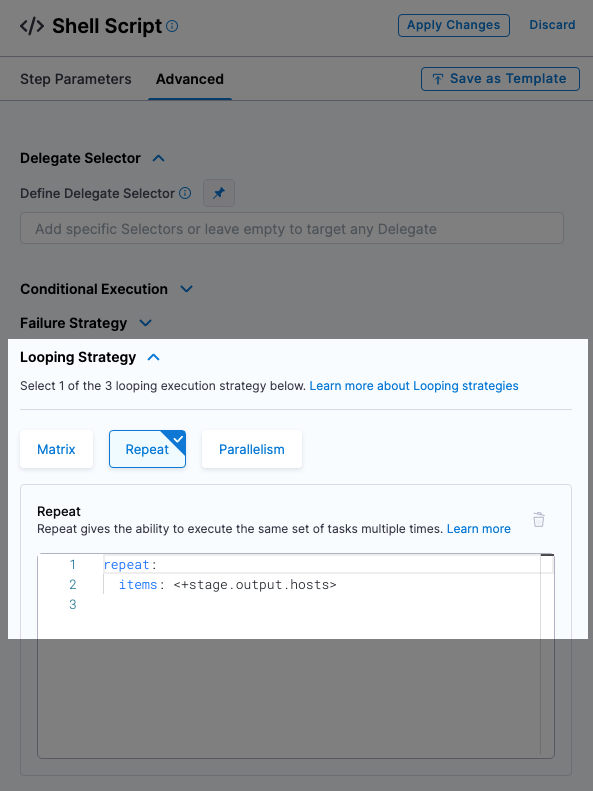

Review Looping Strategy: the looping strategy repeats deployments for multiple hosts and for different deployment strategies (Basic, Rolling, Canary).

- Select Advanced.

- Select Looping Strategy. You can see that the step will be repeated for all hosts using the <+stage.output.hosts> expression.

  For example, if you had two hosts, the step would be repeated for each host.

- Select Apply Changes.

- When you're done, select Save to publish the Pipeline.

Deploy and review

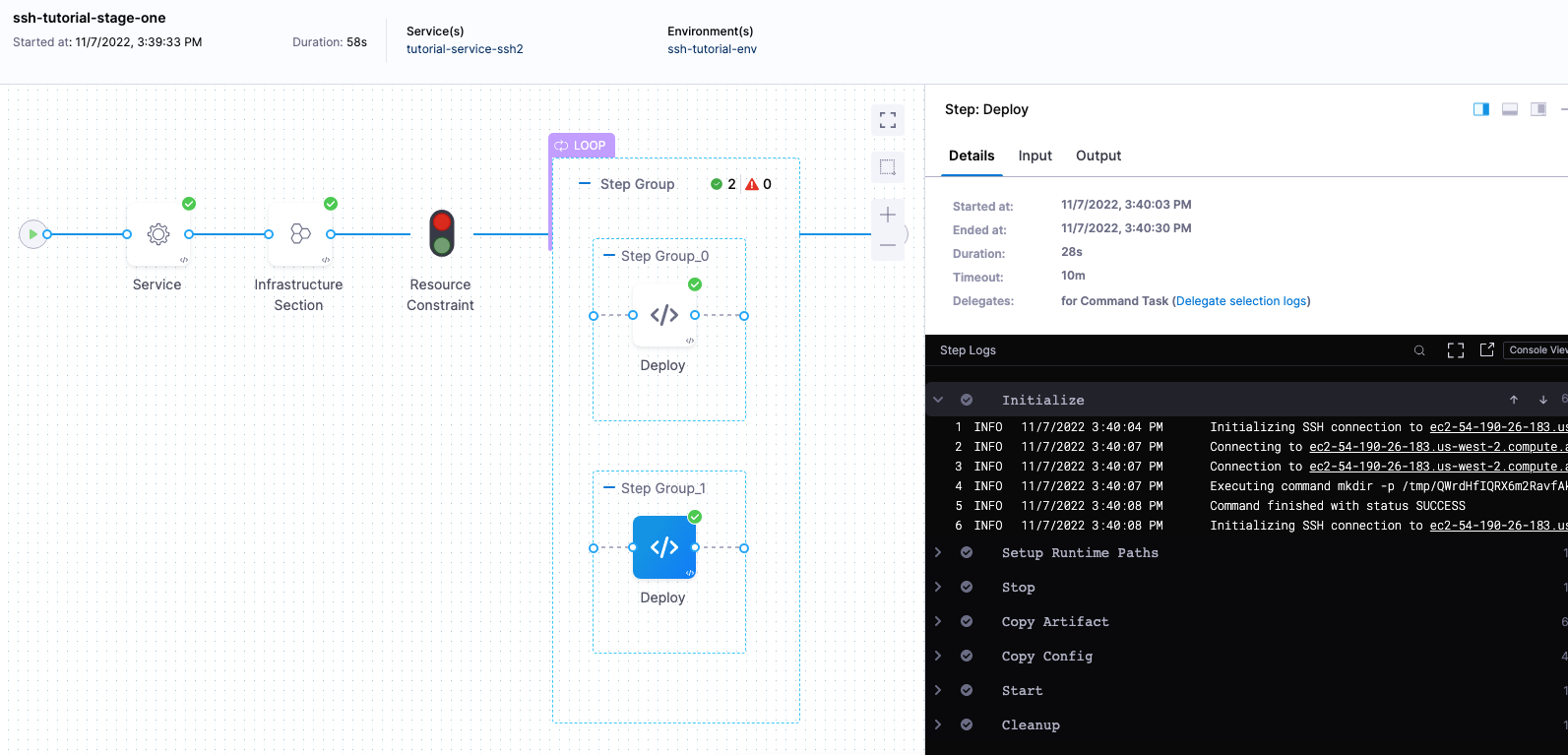

Let's look at an example SSH deployment.

-

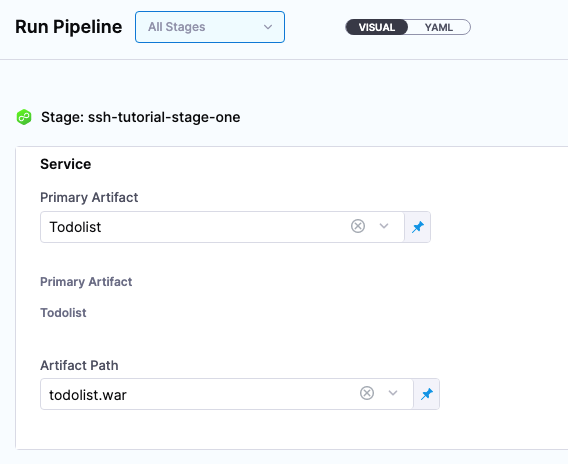

Select Run to run the pipeline.

-

In Run Pipeline, for Primary Artifact, select Todolist.

-

In Artifact Path, Harness displays a list of available artifact packages.

-

Select todolist.war.

-

Select Run Pipeline. Harness runs the pipeline and the Console View displays the tasks executed for each step.

Review deployment

Let's review what is happening in the Deploy step. Most sections correspond to the commands you can see in the Deploy step.

- Initialize: initialize the connection to the host(s) and create a temp directory for the deployment.

- Setup Runtime Paths: create folders for runtime, backup, and staging.

- Copy Artifact: copy the artifact to the host.

- Copy Config: copy the config files (if any) to the host.

- Cleanup: remove temp directories.

Initialize

```
Initializing SSH connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connecting to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com established
Executing command mkdir -p /tmp/aCy-RxnYQDSRmL8xqX4MZw ...
Command finished with status SUCCESS
Initializing SSH connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connecting to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
```

Setup Runtime Paths

```
Initializing SSH connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connecting to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com established
Executing command...
Command finished with status SUCCESS
```

Copy Artifact

```
Filename contains slashes. Stripping off the portion before last slash.
Got filename: todolist.war
Connecting to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com established
Begin file transfer todolist.war to ec2-54-201-142-249.us-west-2.compute.amazonaws.com:/home/ec2-user/tutorial-service-ssh2/ssh-tutorial-env
File successfully transferred to ec2-54-201-142-249.us-west-2.compute.amazonaws.com:/home/ec2-user/tutorial-service-ssh2/ssh-tutorial-env
Command finished with status SUCCESS
```

Copy Config

```
Filename contains slashes. Stripping off the portion before last slash.
Got filename: todolist.war
Connecting to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com established
Begin file transfer todolist.war to ec2-54-201-142-249.us-west-2.compute.amazonaws.com:/home/ec2-user/tutorial-service-ssh2/ssh-tutorial-env
File successfully transferred to ec2-54-201-142-249.us-west-2.compute.amazonaws.com:/home/ec2-user/tutorial-service-ssh2/ssh-tutorial-env
Command finished with status SUCCESS
```

Cleanup

```
Initializing SSH connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connecting to ec2-54-201-142-249.us-west-2.compute.amazonaws.com ....
Connection to ec2-54-201-142-249.us-west-2.compute.amazonaws.com established
Executing command rm -rf /tmp/aCy-RxnYQDSRmL8xqX4MZw ...
Command finished with status SUCCESS
```

Congratulations! You have now successfully created and completed the steps for running a pipeline by using Secure Shell.

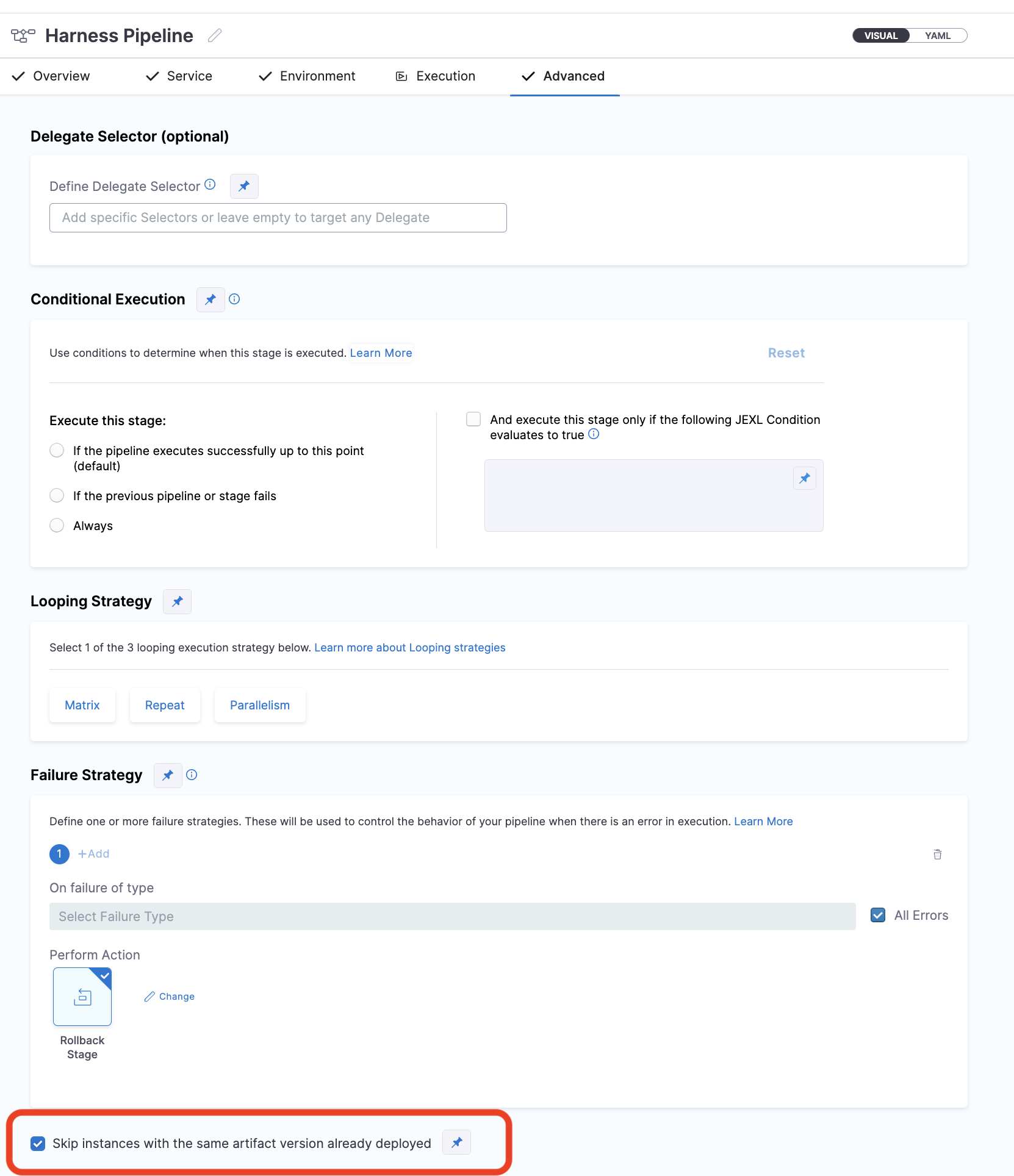

Selective Rerun and Skipping Hosts with Same Artifact

For traditional deployments, you can now skip hosts where the last deployment with the same artifact was successful. These improvements ensure:

- Efficient reruns: Redeploy only on failed hosts instead of all hosts.

- Expressions for failed hosts: Retrieve failed hosts dynamically for debugging, fixing and rerunning on only failed hosts.

To use this feature, navigate to the Advanced tab of the CD stage and enable the Skip instances with the same artifact version already deployed checkbox.

You can also set this option at runtime by making the checkbox a Runtime Input.

Currently, the Selective Failed Hosts Rerun feature is behind the feature flag CDS_SKIP_INSTANCES_V2. Contact Harness Support to enable the feature.

Change in Behavior with Feature Flag Activation

Enabling the CDS_SKIP_INSTANCES_V2 feature flag enhances the skip instances feature for improved reliability across deployment scenarios. The updated behavior includes:

- Org/Account-Level Service & Environment Handling: Ensures consistent application of skip instance logic across different organizational scopes.

- Partial Success Handling: Tracks and skips only successfully deployed hosts, preventing unnecessary re-deployments.

Success Criteria for Deployment on a Host

- Successfully Deployed Criteria: A host is considered successfully deployed only if all command steps in an execution complete successfully.

- Deployed Criteria: A host is considered deployed if any command step execution occurs on the host.

Key Features

1. Selective Retry for Failed Hosts

- Deployment retries now target only failed hosts instead of redeploying on all hosts when the Skip instances with the same artifact version already deployed checkbox is enabled.

2. Enhanced Skip Instances Feature

- Deployment is skipped on hosts where the last deployment was successful using the same artifact.

- Each host’s deployment success is tracked individually, ensuring that only failed hosts are retried.

- Infrastructure changes (e.g., connector updates, credential changes) are considered when determining the last deployment on a host.

3. Improved Rollback Behavior

- The Skip Instances feature now tracks rollbacks per host, ensuring that only the required hosts are updated.

- This guarantees that rollback logic correctly applies only to affected hosts, preventing unnecessary redeployments.

4. New Expressions Introduced

These expressions provide better tracking of deployment and skipped instances:

- <+stage.output.skippedHosts>: Fetches hosts skipped during the current deployment via the Skip Instances feature.

- <+stageFqn.deployedHosts.succeeded>: Fetches hosts that successfully deployed in a stage.

- <+stageFqn.deployedHosts.failed>: Fetches hosts that failed deployment in a stage.

Currently, the <+stageFqn.deployedHosts.succeeded> and <+stageFqn.deployedHosts.failed> expressions are resolved only after stage completion.

- The full stage FQN (Fully Qualified Name) must be used, e.g., <+pipeline.stages.ssh.deployedHosts.succeeded>.

- These expressions will include only the hosts that meet the Deployed criteria.

Example Workflow: Deployment with Partial Success

This example demonstrates how the Skip Instances feature allows rerunning a pipeline without redeploying successfully deployed hosts. By enabling this feature, only failed hosts are re-run, optimizing deployment efficiency and reducing unnecessary re-deployments.

Step 1: Deploy on Two Hosts Using Artifact Version 1

Deploy artifact version 1 on host1 and host2 using a command step.

Outcome:

- host1 successfully deployed version 1.

- Deployment on host2 failed.

Step 2: Fix the Issue on Host2 and Rerun the Pipeline with Skip Instances Enabled

After resolving the issue on host2, rerun the pipeline with the Skip Instances feature enabled.

Outcome:

- Deployment on host1 is skipped since it was previously successful.

- host2 successfully deploys version 1.
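The skip decision in this workflow can be modeled with a short Python sketch. It is an illustration under stated assumptions, not Harness's implementation: `HostRecord`, `classify`, and `hosts_to_deploy` are hypothetical names, and the sketch ignores infrastructure changes, org/account scoping, parallel stages, and rollback tracking. It applies the documented criteria: a host is tracked only if a command step ran on it, and counts as successfully deployed only if every command step succeeded.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class HostRecord:
    """Hypothetical record of the last tracked deployment on one host."""
    artifact: str
    succeeded: bool  # True only if every command step on the host succeeded


def classify(step_results: Dict[str, List[bool]]) -> Tuple[List[str], List[str]]:
    """Split hosts into (succeeded, failed) lists.

    step_results maps each host to the final outcome (after any retries) of
    each command step that ran on it. Hosts where no command step ran are not
    tracked, mirroring the "Deployed" criterion.
    """
    succeeded: List[str] = []
    failed: List[str] = []
    for host, results in step_results.items():
        if not results:
            continue  # no command step ran on this host
        (succeeded if all(results) else failed).append(host)
    return succeeded, failed


def hosts_to_deploy(hosts: List[str], artifact: str,
                    history: Dict[str, HostRecord]) -> Tuple[List[str], List[str]]:
    """Return (to_deploy, skipped) for a run with skip instances enabled."""
    to_deploy: List[str] = []
    skipped: List[str] = []
    for host in hosts:
        last: Optional[HostRecord] = history.get(host)
        if last is not None and last.succeeded and last.artifact == artifact:
            skipped.append(host)  # same artifact already deployed successfully
        else:
            to_deploy.append(host)
    return to_deploy, skipped


if __name__ == "__main__":
    # Run 1: artifact version 1 succeeds on host1 and fails on host2.
    ok, bad = classify({"host1": [True, True], "host2": [True, False]})
    history = {h: HostRecord("v1", True) for h in ok}
    history.update({h: HostRecord("v1", False) for h in bad})

    # Run 2, after fixing host2: host1 is skipped and only host2 is redeployed.
    print(hosts_to_deploy(["host1", "host2"], "v1", history))
    # (['host2'], ['host1'])
```

Deploying a different artifact version (for example, "v2") in this model would redeploy to both hosts, because the skip applies only when the last successful deployment used the same artifact.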

Use-Cases for Selective Rerun and Skipping Hosts

The improved retry and rollback mechanisms ensure that only necessary actions are taken, avoiding unnecessary redeployments and rollbacks. Below are some key scenarios and how they are handled:

- Pipeline Termination After Successful Deployment

- If the pipeline terminates because it expired, was aborted, or failed, but the host was successfully deployed via a command step before termination, the deployment on that host is still considered successful.

- This ensures that unexpected pipeline failures do not unnecessarily mark successful hosts as failed.

- Parallel Deployments on the Same Hosts

- When the same hosts are deployed in parallel using different stages, the stage with the most recent command step execution is considered the last deployment for the skip instances feature.

- Executions Without Command Steps

- If a pipeline execution does not contain command steps, it is ignored in tracking.

- Such deployments are not considered for the skip instances feature.

- Partial Success Without Rollback

- If a deployment succeeds on some hosts but fails on others, only failed hosts are deployed on rerun.

- Successfully deployed hosts are skipped.

- Execution Failure Followed by a Partial Rollback

- If a rollback is partially successful, only successfully rolled-back hosts are marked as completed.

- The system ensures these hosts are correctly updated for future deployments.

- Handling Command Step Retries

- If a command step fails initially but succeeds after retry, the host is marked as successfully deployed.

- Ensures hosts are not mistakenly retried in future deployments.

- Command Steps within Step Groups

- If a command step inside a step group fails but succeeds on retry, the host is considered successfully deployed.

- This prevents unnecessary redeployments on already successful hosts.

- Pipeline Rollback Considerations

- If a pipeline rollback is triggered, only hosts that were rolled back successfully are marked as completed.

- The system ensures these hosts are correctly updated for future deployments.

Permission to perform SSH Deployments in AWS

We use the SSH Credentials to connect to hosts to perform deployment.

We use the AWS Connector to retrieve instances from the AWS Account. The specific calls we make:

- Retrieve the instances at runtime during the infrastructure step - DescribeInstanceRequest

- Retrieve the instances during instance sync to show service instances in the service - DescribeInstanceRequest

To call the DescribeInstances API, the AWS connector needs permission for the ec2:DescribeInstances action.

For sample policies, refer to the AWS documentation: Example policies for working with the AWS CLI or an AWS SDK - Amazon Elastic Compute Cloud.
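For reference, here is a minimal IAM policy document that grants only this action, built in Python so it can be generated or checked programmatically. This is a sketch, assuming the connector needs nothing beyond DescribeInstances for SSH host discovery; other Harness features you use may require additional permissions. EC2 Describe actions do not support resource-level permissions, so `Resource` is `"*"`.

```python
import json

# Minimal identity-based policy that allows only the DescribeInstances call.
# EC2 Describe* actions do not support resource-level permissions, so the
# Resource element must be "*".
POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["ec2:DescribeInstances"],
            "Resource": "*",
        }
    ],
}

if __name__ == "__main__":
    # Print the JSON document to attach to the connector's IAM user or role.
    print(json.dumps(POLICY, indent=2))
```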

Notes

Selecting multiple hosts

You can add multiple hosts in the Physical Data Center Connector.

During deployment, you'll see each host listed in the loop.

Looping strategies for each deployment strategy

Only the Repeat Looping strategy is supported by the Command step. Also, only the items parameter is supported when running the Command step on multiple target hosts:

```yaml
repeat:
  items: <+stage.output.hosts>
```

The Repeat looping strategy is used differently for the basic, rolling, and canary deployment types.

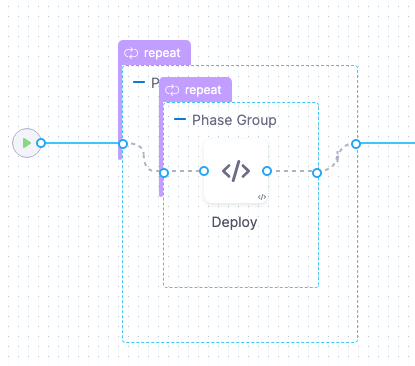

The looping strategy is automatically added to the Deploy step and configured for the deployment type you selected.

Let's look at how it's used for different deployment types.

Basic

The looping strategy for the basic deployment simply repeats the deployment on all the target hosts.

```yaml
repeat:
  items: <+stage.output.hosts>
```

Rolling

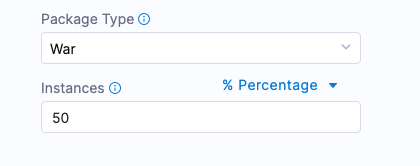

For a rolling strategy, you specify how many instances you want to deploy per phase.

Let’s say you have 10 target hosts in the stage Infrastructure Definition and you want to have 3 instances per phase.

In Instances, you would enter 3.

As a result, when execution starts there will be 4 phases: 3, 3, 3, 1. The number of instances per phase can be provided as a count or a percentage.

This is an example of the rolling strategy using 2 hosts with 50% in Instances.

This means that Harness rolls out to 50% of the target hosts first, and then to the remaining 50% if the first 50% were successful.

Harness creates 2 phases.

You can add any Approval steps inside the Phase Group. For more information, go to Approvals.

The looping strategy for the first phase deploys to 50% of the hosts (partitions):

```yaml
repeat:
  items: <+stage.output.hosts>
  maxConcurrency: 1
  partitionSize: 50
  unit: Percentage
```

The looping strategy for the second phase repeats the partition count:

```yaml
repeat:
  items: <+repeat.partition>
```

The <+repeat.partition> expression resolves how many instances (items) to iterate over per partition (phase).

Let’s say we have 10 hosts and 4 partitions organized as 3, 3, 3, 1. The first partition includes 3 hosts, the second and third each have 3, and the last one has 1 host.

So partition1 = host1, host2, host3; partition2 = host4, host5, host6; partition3 = host7, host8, host9; and partition4 = host10.
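To see what `<+repeat.partition>` holds in each phase, here is a sketch that groups an ordered host list into consecutive partitions. `partition_hosts` and the host names are hypothetical, and the sketch assumes hosts are assigned to partitions in the order they appear in `<+stage.output.hosts>`.

```python
def partition_hosts(hosts: list[str], size: int) -> dict[str, list[str]]:
    """Group hosts into consecutive partitions of `size` hosts each.

    Each value approximates what <+repeat.partition> holds for one phase.
    """
    if size <= 0:
        raise ValueError("partition size must be positive")
    return {
        f"partition{i // size + 1}": hosts[i:i + size]
        for i in range(0, len(hosts), size)
    }


hosts = [f"host{n}" for n in range(1, 11)]  # host1 .. host10
for name, members in partition_hosts(hosts, 3).items():
    print(name, members)
```

With 10 hosts and 3 hosts per partition, this prints four partitions, the last containing only host10.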

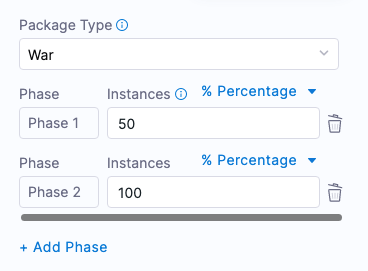

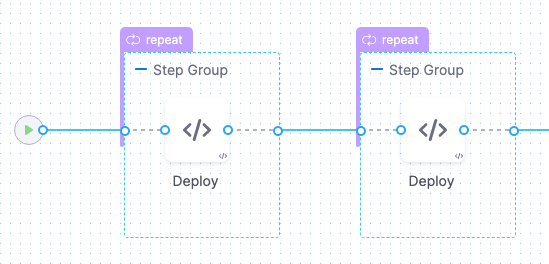

Canary

For canary strategies, Harness calculates phase instances based on the number of hosts and the number of requested instances per phase.

Let’s say you have 10 hosts and you add 2 phases with 50% and 100%. This means Harness deploys on 5 instances in the first phase and on the rest of the instances in the second phase.

Here is an example of the Canary strategy using 2 hosts and 2 phases. The first phase deploys to 50% and the second phase deploys to 100%.

This means that Harness rolls out to 50% of the target hosts first, and then to the remaining 50% if the first 50% succeed.

Harness creates 2 phases as step groups.

You can add any Approval steps between the step groups. See Adding ServiceNow Approval Steps and Stages and Adding Jira Approval Stages and Steps.

The looping strategy for the first phase selects the first 50% of the target hosts:

```yaml
repeat:
  items: <+stage.output.hosts>
  start: 0
  end: 50
  unit: Percentage
```

The looping strategy for the second phase starts where the first phase ended (50%) and continues to 100%:

```yaml
repeat:
  items: <+stage.output.hosts>
  start: 50
  end: 100
  unit: Percentage
```
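The `start` and `end` percentages can be read as slice boundaries over the host list. This sketch uses a hypothetical `canary_slice` helper and assumes boundaries are rounded down to a whole host index; the exact rounding Harness applies is not stated on this page.

```python
def canary_slice(hosts: list[str], start: int, end: int) -> list[str]:
    """Return the hosts between start% and end% of the list."""
    if not 0 <= start <= end <= 100:
        raise ValueError("expected 0 <= start <= end <= 100")
    n = len(hosts)
    # Assumption: both boundaries are rounded down to a whole index.
    return hosts[n * start // 100 : n * end // 100]


hosts = [f"host{i}" for i in range(1, 11)]
phase1 = canary_slice(hosts, 0, 50)    # host1 .. host5
phase2 = canary_slice(hosts, 50, 100)  # host6 .. host10
```

Because the second phase's `start` equals the first phase's `end` and both use the same boundary formula, the phases never overlap and together cover every host.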

Reference hosts in steps using expressions

You can use the following instance expressions to reference your hosts:

For Microsoft Azure or Physical Data Center (PDC):

- <+instance.hostName>
- <+instance.host.hostName>
- <+instance.name>

For Microsoft Azure and AWS:

- <+instance.host.privateIp>
- <+instance.host.publicIp>

- For AWS with WinRM deployments: hostname-based expressions such as <+instance.hostName> and <+instance.host.hostName> are not supported. Use <+instance.host.privateIp> or <+instance.host.publicIp> instead.

- For Azure with WinRM deployments: hostname-based expressions are supported if the hostname is available from the VM metadata.

<+instance.name> has the same value as <+instance.hostName>. Both are available for backward compatibility.

Rollback

Harness restores the deployment to the state of the previous successful stage execution, based on the service, environment, and infrastructure details.

Harness records the artifact version that was successfully deployed during previous successful executions. When using the Rollback step's Copy Artifact command unit, Harness copies the last successful version of the artifact deployed via Harness to the remote host.

Rollback Behavior Enhancement for SSH/WinRM

Harness has improved rollback behavior for SSH/WinRM deployments. This improvement is controlled by the feature flag CDS_FIX_ROLLBACK_IN_SSH_WINRM. When this feature flag is enabled, the following changes apply:

- Rollback works correctly even when multiple Command steps are configured within a stage.

- Identifier matching: For rollback to work correctly, each Command step in the rollback section must have the same identifier as the corresponding step in the execution section. Step names can differ, but identifiers must match.

- For the Canary, Rolling, and Basic deployment strategies, the auto-generated YAML follows this pattern when the feature flag is enabled, so identifiers in the execution and rollback sections are aligned.
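A pre-flight check for the identifier-matching rule could look like the sketch below. It works on a simplified, hypothetical representation of steps as Python dictionaries with `type`, `identifier`, and `name` keys rather than the full Harness pipeline YAML, and it ignores step groups. `command_ids` and `unmatched_rollback_steps` are invented names.

```python
def command_ids(steps: list[dict]) -> set[str]:
    # Collect identifiers of Command steps only; names are ignored.
    return {s["identifier"] for s in steps if s.get("type") == "Command"}


def unmatched_rollback_steps(execution_steps: list[dict],
                             rollback_steps: list[dict]) -> list[str]:
    """Rollback Command step identifiers with no execution counterpart."""
    return sorted(command_ids(rollback_steps) - command_ids(execution_steps))


execution = [
    {"type": "Command", "identifier": "Deploy", "name": "Deploy"},
    {"type": "Command", "identifier": "Setup_Runtime", "name": "Setup runtime"},
]
rollback = [
    # Same identifier, different name: allowed.
    {"type": "Command", "identifier": "Deploy", "name": "Rollback"},
    # Identifier differs in case from the execution step: flagged.
    {"type": "Command", "identifier": "setup_runtime", "name": "Undo setup"},
]
print(unmatched_rollback_steps(execution, rollback))  # ['setup_runtime']
```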

First time deployment

If the first pipeline execution fails (regardless of stage), Harness skips the rollback since there is no record of any successful pipeline execution.

N+1 time deployment

If a stage fails in a subsequent execution (assuming the service, environment, and infrastructure didn't change in the corresponding stage), Harness initiates rollback to the previous successful pipeline. The previous pipeline execution must be successful for all stages. The successful stage is matched regardless of the pipeline execution status.

- If any of the service, environment, or infrastructure details were changed in the stage, Harness won't consider any previous successful pipeline executions for rollback. It is treated as a completely different deployment.

- If multiple stages reference the same service, environment, and infrastructure details, Harness rolls back the deployment to the last successful pipeline with a stage execution that shared the same service, environment, and infrastructure.
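The selection rules above (first-time deployment, N+1 deployment, and matching on service, environment, and infrastructure) can be modeled as a backward search through stage execution history. This is a hypothetical model for illustration, not Harness's implementation: `StageExecution` and `rollback_target` are invented names, the history is assumed to be ordered oldest to newest, and only stage-level matching is modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StageExecution:
    service: str
    environment: str
    infrastructure: str
    succeeded: bool
    artifact_version: str  # what Copy Artifact would restore


def rollback_target(history: list[StageExecution], service: str,
                    environment: str, infrastructure: str) -> Optional[StageExecution]:
    """Return the latest successful execution with the same service,
    environment, and infrastructure, or None if rollback should be skipped."""
    key = (service, environment, infrastructure)
    for record in reversed(history):
        if record.succeeded and (record.service, record.environment,
                                 record.infrastructure) == key:
            return record
    return None  # first-time deployment or changed details: nothing to restore


history = [
    StageExecution("svc", "dev", "pdc-1", True, "1.0.0"),
    StageExecution("svc", "dev", "pdc-1", True, "1.1.0"),
    StageExecution("svc", "dev", "pdc-2", True, "2.0.0"),
    StageExecution("svc", "dev", "pdc-1", False, "1.2.0"),
]
target = rollback_target(history, "svc", "dev", "pdc-1")
print(target.artifact_version if target else "skip rollback")  # 1.1.0
```

An empty history (first-time deployment) or a changed infrastructure such as `pdc-3` yields `None`, which corresponds to Harness skipping the rollback.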

For more details, go to Use Harness expressions.

FAQs

For frequently asked questions about traditional deployments in Harness, go to SSH and WinRM deployment FAQs.