Save and Restore Cache from GCS

Modern continuous integration systems execute pipelines inside ephemeral environments that are provisioned solely for pipeline execution and are not reused from prior pipeline runs. As builds often require downloading and installing many library and software dependencies, caching these dependencies for quick retrieval at runtime can save a significant amount of time.

In addition to loading dependencies faster, you can also use caching to share data across stages in your Harness CI pipelines. You need to use caching to share data across stages because each stage in a Harness CI pipeline has its own build infrastructure.

Consider using Cache Intelligence, a Harness CI Intelligence feature, to automatically cache and restore software dependencies.

This topic explains how you can use the Save Cache to GCS and Restore Cache from GCS steps in your CI pipelines to save and retrieve cached data from Google Cloud Storage (GCS) buckets. For more information about caching in GCS, go to the Google Cloud documentation on caching. In your pipelines, you can also save and restore cached data from S3 or use Harness Cache Intelligence.

You can't share access credentials or other Text Secrets across stages.

This topic assumes you have created a pipeline and that you are familiar with the following:

GCP connector and GCS bucket requirements

You need a dedicated GCS bucket for your Harness cache operations. Don't save files to the bucket manually. The Restore Cache from GCS step fails if the bucket includes any files that don't have a Harness cache key.

You need a GCP connector that authenticates through a GCP service account key. To do this:

- In GCP, create an IAM service account. Note the email address generated for the IAM service account; you can use this to identify the service account when assigning roles.

- Assign the required GCS roles to the service account, as described in the GCP connector settings reference.

- Generate a JSON-formatted service account key.

- In the GCP connector's Details, select Specify credentials here, and then provide the service account key for authentication. For more information, refer to Store service account keys as Harness secrets in the GCP connector settings reference.
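Before you store the key as a Harness secret, you might want to confirm that the downloaded file really is a service account key. The following Python sketch uses a hypothetical helper, `check_service_account_key`, which is not part of Harness. It checks only the standard fields that GCP writes into a JSON service account key.

```python
import json

# Fields that GCP writes into every JSON-formatted service account key.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(raw: str) -> str:
    """Return the service account email if `raw` looks like a JSON service
    account key; raise ValueError otherwise (hypothetical helper)."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(key, dict):
        raise ValueError("key file must contain a JSON object")
    missing = [field for field in REQUIRED_FIELDS if field not in key]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError(f"unexpected key type: {key['type']!r}")
    return key["client_email"]
```

When you run it on the contents of the downloaded key file, it should return the email address you noted when you created the service account.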

Add save and restore cache steps

To add the steps in the Visual editor:

- Go to the pipeline and stage where you want to add the Save Cache to GCS step.

- Select Add Step, select Add Step again, and then select Save Cache to GCS in the Step Library.

- Configure the Save Cache to GCS step settings.

- Select Apply changes to save the step.

- Go to the stage where you want to add the Restore Cache from GCS step.

- Select Add Step, select Add Step again, and then select Restore Cache from GCS in the Step Library.

- Configure the Restore Cache from GCS step settings. The bucket and key must correspond with the bucket and key settings in the Save Cache to GCS step.

- Select Apply changes to save the step, and then select Save to save the pipeline.

To add a Save Cache to GCS step in the YAML editor, add a type: SaveCacheGCS step, then define the Save Cache to GCS step settings. The following are required:

- connectorRef: The GCP connector ID.

- bucket: The GCS cache bucket name.

- key: The GCS cache key to identify the cache.

- sourcePaths: Files and folders to cache. Specify each file or folder separately.

- archiveFormat: The archive format. The default format is Tar.

Here is an example of the YAML for a Save Cache to GCS step.

- step:
    type: SaveCacheGCS
    name: Save Cache to GCS_1
    identifier: SaveCachetoGCS_1
    spec:
      connectorRef: account.gcp
      bucket: ci_cache
      key: gcs-{{ checksum "filePath1" }} # example cache key based on file checksum
      sourcePaths:
        - directory1 # example first directory to cache
        - directory2 # example second directory to cache
      archiveFormat: Tar

To add a Restore Cache from GCS step in the YAML editor, add a type: RestoreCacheGCS step, and then define the Restore Cache from GCS step settings. The following settings are required:

- connectorRef: The GCP connector ID.

- bucket: The GCS cache bucket name. This must match the Save Cache to GCS bucket.

- key: The GCS cache key to identify the cache. This must match the Save Cache to GCS key.

- archiveFormat: The archive format. This must match the Save Cache to GCS archiveFormat.

Here is an example of the YAML for a Restore Cache from GCS step.

- step:
    type: RestoreCacheGCS
    name: Restore Cache From GCS_1
    identifier: RestoreCacheFromGCS_1
    spec:
      connectorRef: account.gcp
      bucket: ci_cache
      key: gcs-{{ checksum "filePath1" }} # example cache key based on file checksum
      archiveFormat: Tar

Avoid prefix collisions during restore

To prevent prefix collisions and ensure that caches restore correctly, use the PLUGIN_STRICT_KEY_MATCHING feature flag (default: true).

- Strict Mode (default): Restores only from exact key matches. This prevents unexpected collisions and ensures accurate cache restoration.

- Flexible Mode (PLUGIN_STRICT_KEY_MATCHING=false): Processes all entries that start with the specified prefix, which can cause multiple paths to be restored.

Flexible Mode can cause unexpected behavior because multiple paths might be restored. Harness recommends keeping PLUGIN_STRICT_KEY_MATCHING set to true.
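To see why strict mode matters, consider what happens when one key is a prefix of another. The following Python sketch models the bucket as a list of object names. The naming scheme (key, then `/`, then the archived path) and the function `entries_to_restore` are illustrative assumptions and do not reflect the plugin's actual storage layout.

```python
def entries_to_restore(objects: list[str], key: str, strict: bool = True) -> list[str]:
    """Pick the cache objects a restore would use for `key`.

    Assumed layout (illustrative only): each object name is the cache key,
    then "/", then the archived path.
    """
    if strict:
        # Strict mode: only objects stored under exactly this key.
        return [name for name in objects if name.split("/", 1)[0] == key]
    # Flexible mode: every object whose name starts with the key string.
    return [name for name in objects if name.startswith(key)]


bucket = ["cache-tar/node_modules.tar", "cache-tar-v2/node_modules.tar"]
print(entries_to_restore(bucket, "cache-tar"))
# ['cache-tar/node_modules.tar']
print(entries_to_restore(bucket, "cache-tar", strict=False))
# ['cache-tar/node_modules.tar', 'cache-tar-v2/node_modules.tar']
```

In flexible mode, the unrelated `cache-tar-v2` archive is picked up as well. This is the "multiple paths restored" behavior described above.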

Additional Recommendations:

Key Naming: Avoid using cache keys where one key is a prefix of another. Ensure cache key templates are distinct to prevent collisions.

Bucket Cleanup: If collisions occur, clean up the problematic entries in the GCS bucket by removing folders with the duplicated key structure.
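You can run a quick check like the one below before you commit a pipeline to flag keys where one is a prefix of another. `prefix_collisions` is a hypothetical helper. It works on rendered key strings only and can't evaluate `{{ checksum }}` templates.

```python
def prefix_collisions(keys: list[str]) -> list[tuple[str, str]]:
    """Return (shorter, longer) pairs where one cache key is a prefix of another."""
    ordered = sorted(set(keys))  # a prefix always sorts before its extensions
    pairs = []
    for i, shorter in enumerate(ordered):
        for longer in ordered[i + 1:]:
            if longer.startswith(shorter):
                pairs.append((shorter, longer))
    return pairs


print(prefix_collisions(["cache-tar", "gradle-cache", "cache-tar-v2"]))
# [('cache-tar', 'cache-tar-v2')]
```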

GCS save and restore cache step settings

The Save Cache to GCS and Restore Cache from GCS steps have the following settings. Depending on the stage's build infrastructure, some settings might be unavailable or optional. Settings specific to containers, such as Set Container Resources, are not applicable when using these steps in a stage with VM or Harness Cloud build infrastructure.

Name

Enter a name summarizing the step's purpose. Harness automatically assigns an Id (Entity Identifier Reference) based on the Name. You can change the Id.

GCP Connector

The Harness connector for the GCP account where you want to save or retrieve a cache. For more information, go to Google Cloud Platform (GCP) connector settings reference.

This step supports GCP connectors that use service account key authentication. It does not support GCP connectors that inherit delegate credentials.

Bucket

The name of the target GCS bucket. In the Save Cache to GCS step, this is the bucket where you want to save the cache. In the Restore Cache from GCS step, this is the bucket containing the cache you want to retrieve.

Key

The key identifying the cache.

You can use the checksum macro to create a key based on a file's checksum, for example: myApp-{{ checksum "path/to/file" }} or gcp-{{ checksum "package.json" }}. The result of the checksum macro is concatenated to the leading string.

In the Save Cache to GCS step, Harness checks if the key exists and compares the checksum. If the checksum matches, then Harness doesn't save the cache. If the checksum is different, then Harness saves the cache.
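The following Python sketch approximates how the key is rendered and how the save step decides whether to write. It rests on several assumptions. SHA-256 stands in for the plugin's checksum function, which may differ, so these keys won't match real Harness keys. The in-memory `bucket` dict stands in for GCS. The `override` parameter plays the role of the Override Cache setting. `render_key` and `save_cache` are hypothetical names.

```python
import hashlib
import tempfile
from pathlib import Path


def render_key(prefix: str, file_path: str) -> str:
    """Approximate `prefix{{ checksum "file_path" }}`.

    SHA-256 is an assumption; the plugin's checksum function may differ.
    """
    digest = hashlib.sha256(Path(file_path).read_bytes()).hexdigest()
    return prefix + digest


def save_cache(bucket: dict[str, bytes], key: str, archive: bytes,
               override: bool = False) -> bool:
    """Store `archive` under `key`; return True if anything was written.

    `override` plays the role of the Override Cache setting.
    """
    if key in bucket and not override:
        return False  # key embeds the checksum, so an existing key means no change
    bucket[key] = archive
    return True


with tempfile.TemporaryDirectory() as tmp:
    lockfile = Path(tmp, "package-lock.json")
    lockfile.write_text('{"lockfileVersion": 3}')
    bucket: dict[str, bytes] = {}
    key = render_key("gcs-", str(lockfile))
    print(save_cache(bucket, key, b"archive-1"))  # True: first save
    print(save_cache(bucket, key, b"archive-2"))  # False: key already present
    lockfile.write_text('{"lockfileVersion": 3, "changed": true}')
    print(key != render_key("gcs-", str(lockfile)))  # True: new checksum, new key
```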

You can't use the backslash character in the file path that you pass to the checksum macro. This is a limitation of Go (golang) templates, where backslashes in a quoted string start escape sequences. Use forward slashes instead.

- Incorrect format: cache-{{ checksum ".\src\common\myproj.csproj" }}

- Correct format: cache-{{ checksum "./src/common/myproj.csproj" }}
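If your paths come from a Windows-style source, you can normalize them before you build the key string. The following Python sketch uses a hypothetical helper, `checksum_key`, that converts backslashes to forward slashes and emits the macro text.

```python
def checksum_key(prefix: str, path: str) -> str:
    """Build a `{{ checksum }}` key template using a forward-slash path.

    Backslashes start escape sequences in Go template string literals,
    so they are replaced with forward slashes first.
    """
    posix_path = path.replace("\\", "/")
    return f'{prefix}{{{{ checksum "{posix_path}" }}}}'


print(checksum_key("cache-", r".\src\common\myproj.csproj"))
# cache-{{ checksum "./src/common/myproj.csproj" }}
```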

Archive Format

Select the archive format. The default archive format is Tar.

Source Paths

Only for the Save Cache to GCS step. Provide a list of the files/folders to cache. Add each file/folder separately.

Override Cache

Only for the Save Cache to GCS step. Select this option if you want to override the cache if a cache with a matching Key already exists. The default is false (unselected).

Fail if Key Doesn't Exist

Only for the Restore Cache from GCS step. Select this option if you want the restore step to fail if the specified Key doesn't exist. The default is false (unselected).

Run as User

Specify the user ID to use to run all processes in the pod if running in containers. For more information, go to Set the security context for a pod.

Set Container Resources

Maximum resource limits for the container at runtime:

- Limit Memory: Maximum memory that the container can use. You can express memory as a plain integer or as a fixed-point number with the suffixes G or M. You can also use the power-of-two equivalents, Gi or Mi. Do not include spaces when entering a fixed value. The default is 500Mi.

- Limit CPU: The maximum number of cores that the container can use. CPU limits are measured in CPU units. Fractional requests are allowed. For example, you can specify one hundred millicpu as 0.1 or 100m. The default is 400m. For more information, go to Resource units in Kubernetes.
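To compare limits written in different units, it helps to convert the quantity strings into plain numbers. The following Python sketch handles only the suffixes mentioned above: m for CPU, and M, G, Mi, and Gi for memory. It is not a full Kubernetes quantity parser. `memory_bytes` and `cpu_cores` are hypothetical names.

```python
MEMORY_SUFFIXES = {
    "Gi": 1024 ** 3,  # power-of-two units
    "Mi": 1024 ** 2,
    "G": 1000 ** 3,   # decimal units
    "M": 1000 ** 2,
}


def memory_bytes(quantity: str) -> int:
    """Convert a memory limit such as "500Mi" or "1G" to bytes."""
    if " " in quantity:
        raise ValueError("do not include spaces in the value")
    for suffix, factor in MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)  # plain integer means bytes


def cpu_cores(quantity: str) -> float:
    """Convert a CPU limit such as "400m" or "0.1" to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000  # millicpu
    return float(quantity)


print(memory_bytes("500Mi"))  # 524288000
print(cpu_cores("100m"), cpu_cores("0.1"))  # 0.1 0.1
```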

Timeout

Set the timeout limit for the step. Once the timeout limit is reached, the step fails and pipeline execution continues. To set skip conditions or failure handling for steps, go to:

Preserve File Metadata

By default, Harness cache steps don’t preserve inode metadata, which means restored files can appear "new" on every build. This can cause cache growth over time, also called cache snowballing.

To address this, Harness supports a preserveMetadata flag, which can be configured as a stage variable PLUGIN_PRESERVE_METADATA set to true.

How it works

- preserveMetadata: true. Cache archives include inode metadata (timestamps, ownership, permissions). Restored files keep their original metadata, so tools like Gradle pruning or find -atime work correctly.

- preserveMetadata: false (default). Metadata is not preserved. Restores are slightly faster, but tools that rely on timestamps treat all files as new.
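You can illustrate the effect of this flag with Python's tarfile module. This is not Harness's implementation. It is a sketch that archives a file either with its original modification time or with the mtime reset to the archive time, and then checks what a restore sees. Only mtime is modeled; ownership and permissions are ignored. The function name `restored_mtime` is hypothetical.

```python
import os
import tarfile
import tempfile
import time
from pathlib import Path


def restored_mtime(preserve_metadata: bool) -> float:
    """Archive a 30-day-old file, extract it, and return the restored mtime."""
    with tempfile.TemporaryDirectory() as tmp:
        cached = Path(tmp, "dep.jar")
        cached.write_bytes(b"dependency")
        old = time.time() - 30 * 86400
        os.utime(cached, (old, old))  # pretend the file was last touched 30 days ago

        def reset_mtime(info: tarfile.TarInfo) -> tarfile.TarInfo:
            info.mtime = int(time.time())  # metadata dropped: file looks new
            return info

        archive = Path(tmp, "cache.tar")
        with tarfile.open(archive, "w") as tar:
            tar.add(cached, arcname="dep.jar",
                    filter=None if preserve_metadata else reset_mtime)

        dest = Path(tmp, "restored")
        dest.mkdir()
        # Use the safe "data" extraction filter where this Python version has it.
        extra = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        with tarfile.open(archive) as tar:
            tar.extractall(dest, **extra)
        return (dest / "dep.jar").stat().st_mtime


for preserve in (True, False):
    age_days = (time.time() - restored_mtime(preserve)) / 86400
    print(f"preserveMetadata={preserve}: restored file looks {age_days:.0f} days old")
```

The first line should report about 30 days and the second about 0. The second case is why tools that prune by age never see old entries when metadata isn't preserved.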

YAML examples

Here’s an example of saving and restoring a Gradle cache with metadata preserved:

steps:
  - step:
      type: SaveCache
      name: save-gradle-cache
      spec:
        key: gradle-cache
        paths:
          - ~/.gradle
        preserveMetadata: true
  - step:
      type: RestoreCache
      name: restore-gradle-cache
      spec:
        key: gradle-cache
        preserveMetadata: true

Backward compatibility

- The flag is optional and defaults to false.

- Pipelines without this setting continue to work as before.

- Old cache archives can still be restored even if preserveMetadata is enabled.

Benefits

- Prevents cache snowballing by keeping Gradle cache size stable across builds.

- Accurate pruning of unused dependencies in Gradle and similar tools.

- Supports other tools that rely on inode metadata (for example, find and cleanup scripts).

Troubleshooting

If your Gradle cache grows unexpectedly or pruning doesn’t work:

- Enable preserveMetadata: true in both the Save Cache and Restore Cache steps.

- Make sure the key values match across your Save and Restore steps.

Set shared paths for cache locations outside the stage workspace

Steps in the same stage share the same workspace, which is /harness. If your steps need to use data in locations outside the stage workspace, you must specify these as shared paths. This is required if you want to cache directories outside /harness. For example:

stages:
  - stage:
      spec:
        sharedPaths:
          - /example/path # directory outside workspace to share between steps

Go, Node, and Maven cache key and path requirements

There are specific requirements for cache keys and paths for Go, Node.js, and Maven.


Go pipelines must reference go.sum for spec.key in Save Cache to GCS and Restore Cache From GCS steps, for example:

spec:
  key: cache-{{ checksum "go.sum" }}

spec.sourcePaths must include /go/pkg/mod and /root/.cache/go-build in the Save Cache to GCS step, for example:

spec:
  sourcePaths:
    - /go/pkg/mod
    - /root/.cache/go-build

npm pipelines must reference package-lock.json for spec.key in Save Cache to GCS and Restore Cache From GCS steps, for example:

spec:
  key: cache-{{ checksum "package-lock.json" }}

Yarn pipelines must reference yarn.lock for spec.key in Save Cache to GCS and Restore Cache From GCS steps, for example:

spec:
  key: cache-{{ checksum "yarn.lock" }}

spec.sourcePaths must include node_modules in the Save Cache to GCS step, for example:

spec:
  sourcePaths:
    - node_modules

Maven pipelines must reference pom.xml for spec.key in Save Cache to GCS and Restore Cache From GCS steps, for example:

spec:
  key: cache-{{ checksum "pom.xml" }}

spec.sourcePaths must include /root/.m2 in the Save Cache to GCS step, for example:

spec:
  sourcePaths:
    - /root/.m2
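If you generate pipeline YAML from a script, you can keep these requirements in one table. The following Python sketch uses a hypothetical helper, `save_cache_spec`, that builds the spec mapping for a SaveCacheGCS step from the key file and source paths listed above. The bucket and connector values in the example are placeholders taken from this topic's earlier examples.

```python
# Requirements from the section above, keyed by package manager.
CACHE_REQUIREMENTS = {
    "go": ("go.sum", ["/go/pkg/mod", "/root/.cache/go-build"]),
    "npm": ("package-lock.json", ["node_modules"]),
    "yarn": ("yarn.lock", ["node_modules"]),
    "maven": ("pom.xml", ["/root/.m2"]),
}


def save_cache_spec(tool: str, bucket: str, connector_ref: str) -> dict:
    """Build the `spec` mapping for a SaveCacheGCS step (hypothetical helper)."""
    key_file, paths = CACHE_REQUIREMENTS[tool]
    return {
        "connectorRef": connector_ref,
        "bucket": bucket,
        "key": f'cache-{{{{ checksum "{key_file}" }}}}',
        "sourcePaths": list(paths),
        "archiveFormat": "Tar",
    }


print(save_cache_spec("go", "ci_cache", "account.gcp")["key"])
# cache-{{ checksum "go.sum" }}
```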

Cache step placement in single or multiple stages

The placement and sequence of the save and restore cache steps depends on how you're using caching in a pipeline.

Single-stage caching

If you use caching to optimize a single stage, the Restore Cache from GCS step occurs before the Save Cache to GCS step.

This YAML example demonstrates caching within one stage. At the beginning of the stage, the cache is restored so the cached data can be used for the build steps. At the end of the stage, if the cached files changed, updated files are saved to the cache bucket.

stages:
  - stage:
      name: Build
      identifier: Build
      type: CI
      spec:
        cloneCodebase: true
        execution:
          steps:
            - step:
                type: RestoreCacheGCS
                name: Restore Cache From GCS_1
                identifier: RestoreCacheFromGCS_1
                spec:
                  connectorRef: account.gcp
                  bucket: ci_cache
                  key: gcp-{{ checksum "package.json" }}
                  archiveFormat: Tar
            # ... build steps that use the restored cache
            - step:
                type: SaveCacheGCS
                name: Save Cache to GCS_1
                identifier: SaveCachetoGCS_1
                spec:
                  connectorRef: account.gcp
                  bucket: ci_cache
                  key: gcp-{{ checksum "package.json" }}
                  sourcePaths:
                    - /harness/node_modules
                  archiveFormat: Tar

Multi-stage caching

Stages run in isolated workspaces, so you can use caching to pass data from one stage to the next.

If you use caching to share data across stages, the Save Cache to GCS step occurs in the stage where you create the data you want to cache, and the Restore Cache from GCS step occurs in the stage where you want to load the previously-cached data.

The following diagram illustrates caching across two stages.

This YAML example demonstrates how to use caching across two stages. The first stage creates a cache bucket and saves the cache to the bucket, and the second stage retrieves the previously-saved cache.

stages:
  - stage:
      identifier: GCS_Save_Cache
      name: GCS Save Cache
      type: CI
      variables:
        - name: GCP_Access_Key
          type: String
          value: <+input>
        - name: GCP_Secret_Key
          type: Secret
          value: <+input>
      spec:
        sharedPaths:
          - /.config
          - /.gsutil
        execution:
          steps:
            - step:
                identifier: createBucket
                name: create bucket
                type: Run
                spec:
                  connectorRef: <+input>
                  image: google/cloud-sdk:alpine
                  command: |+
                    # Write the key to a file without printing it to the build log.
                    printf '%s' "$GCP_SECRET_KEY" > secret.json
                    gcloud auth -q activate-service-account --key-file=secret.json
                    gsutil rm -r gs://harness-gcs-cache-tar || true
                    gsutil mb -p ci-play gs://harness-gcs-cache-tar
                  privileged: false
            - step:
                identifier: saveCacheTar
                name: Save Cache
                type: SaveCacheGCS
                spec:
                  connectorRef: <+input>
                  bucket: harness-gcs-cache-tar
                  key: cache-tar
                  sourcePaths:
                    - <+input>
                  archiveFormat: Tar
            # ...
  - stage:
      identifier: gcs_restore_cache
      name: GCS Restore Cache
      type: CI
      variables:
        - name: GCP_Access_Key
          type: String
          value: <+input>
        - name: GCP_Secret_Key
          type: Secret
          value: <+input>
      spec:
        sharedPaths:
          - /.config
          - /.gsutil
        execution:
          steps:
            - step:
                identifier: restoreCacheTar
                name: Restore Cache
                type: RestoreCacheGCS
                spec:
                  connectorRef: <+input>
                  bucket: harness-gcs-cache-tar
                  key: cache-tar
                  archiveFormat: Tar
                  failIfKeyNotFound: true

Caching in parallel or concurrent stages

If you have multiple stages that run in parallel, Save Cache steps might encounter errors when they attempt to save to the same cache location concurrently. To prevent conflicts with saving caches from parallel runs, you need to skip the Save Cache step in all except one of the parallel stages.

This is necessary for any looping strategy that causes stages to run in parallel, either literal parallel stages or matrix/repeat strategies that generate multiple instances of a stage.

To skip the Save Cache step in all except one parallel stage, add the following conditional execution to the Save Cache step(s):

In the Visual editor:

- Edit the Save Cache step, and select the Advanced tab.

- Expand the Conditional Execution section.

- Select Execute this step if the stage execution is successful thus far.

- Select And execute this step only if the following JEXL condition evaluates to True.

- For the JEXL condition, enter <+strategy.iteration> == 0.

In the YAML editor, add the following when definition to the end of your Save Cache step:

- step:
    # ...
    when:
      stageStatus: Success ## Execute this step if the stage execution is successful thus far.
      condition: <+strategy.iteration> == 0 ## And execute this step if this JEXL condition evaluates to true

This when definition causes the step to run only if both of the following conditions are met:

- stageStatus: Success: Execute this step if the stage execution is successful thus far.

- condition: <+strategy.iteration> == 0: Execute this step if the JEXL expression evaluates to true.

The JEXL expression <+strategy.iteration> == 0 references the iteration index that the looping strategy assigns to each stage instance. The iteration index is a zero-based value appended to a step or stage's identifier when it runs in a looping strategy. Although the stages run concurrently, each instance has a different index, starting from 0. Because the Save Cache step runs only in the instance with index 0, exactly one of the concurrent instances saves the cache.
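You can simulate the effect of this condition locally. In the following Python sketch, threads stand in for the concurrent stage instances and a lock-protected list stands in for the cache bucket. `strategy_iteration` is a hypothetical name for the value that <+strategy.iteration> resolves to, and `run_stage` is also illustrative.

```python
import threading

saves: list[int] = []
saves_lock = threading.Lock()


def run_stage(strategy_iteration: int, stage_succeeded: bool = True) -> None:
    """One parallel stage instance; only iteration 0 runs Save Cache."""
    # ... build steps would run here ...
    # Mirrors: stageStatus: Success and condition: <+strategy.iteration> == 0
    if stage_succeeded and strategy_iteration == 0:
        with saves_lock:
            saves.append(strategy_iteration)


threads = [threading.Thread(target=run_stage, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(saves)  # [0]: only one instance saved the cache
```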

Caching in cloned pipelines

When you clone a pipeline that has Save/Restore Cache steps, cache keys generated by the cloned pipeline use the original pipeline's cache key as a prefix. For example, if the original pipeline's cache key is some-cache-key, the cloned pipeline's cache key is some-cache-key2. This can cause problems if the Restore Cache step in the original pipeline looks for caches with the matching cache key prefix and pulls the caches for both pipelines.

To prevent this issue, Harness can add separators (/) to your GCS cache keys to prevent accidental prefix matching and pulling incorrect caches from cloned pipelines. To enable the separator, add this stage variable: PLUGIN_ENABLE_SEPARATOR: true.

If you don't enable the separator, make sure your cloned pipelines generate unique cache keys to avoid the prefix matching issue.

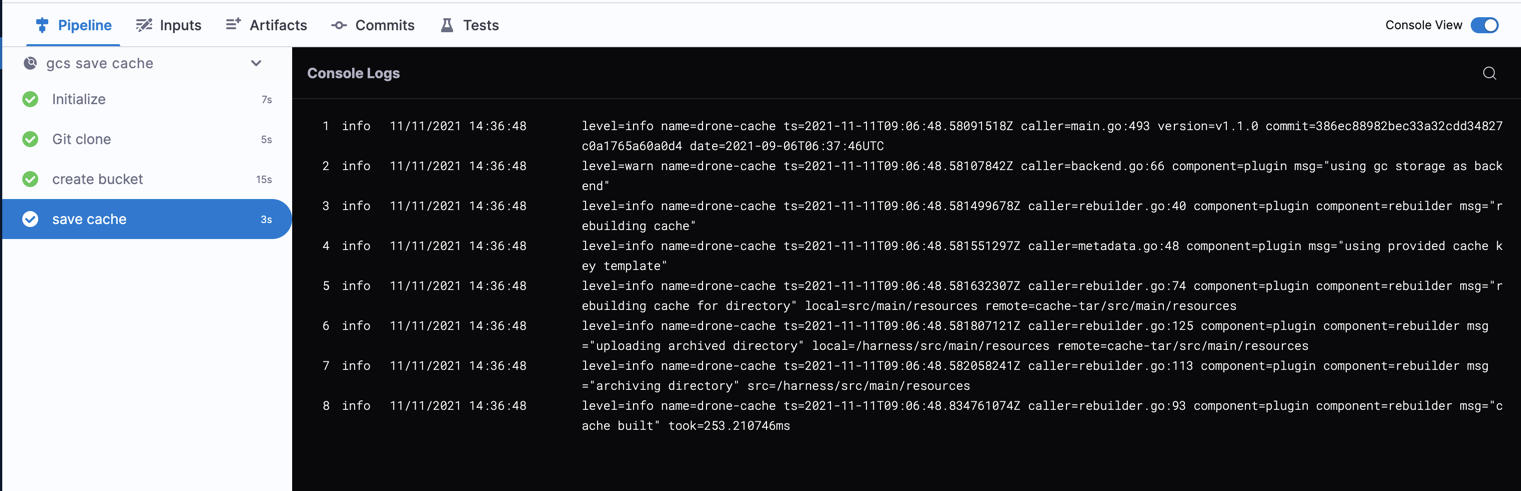

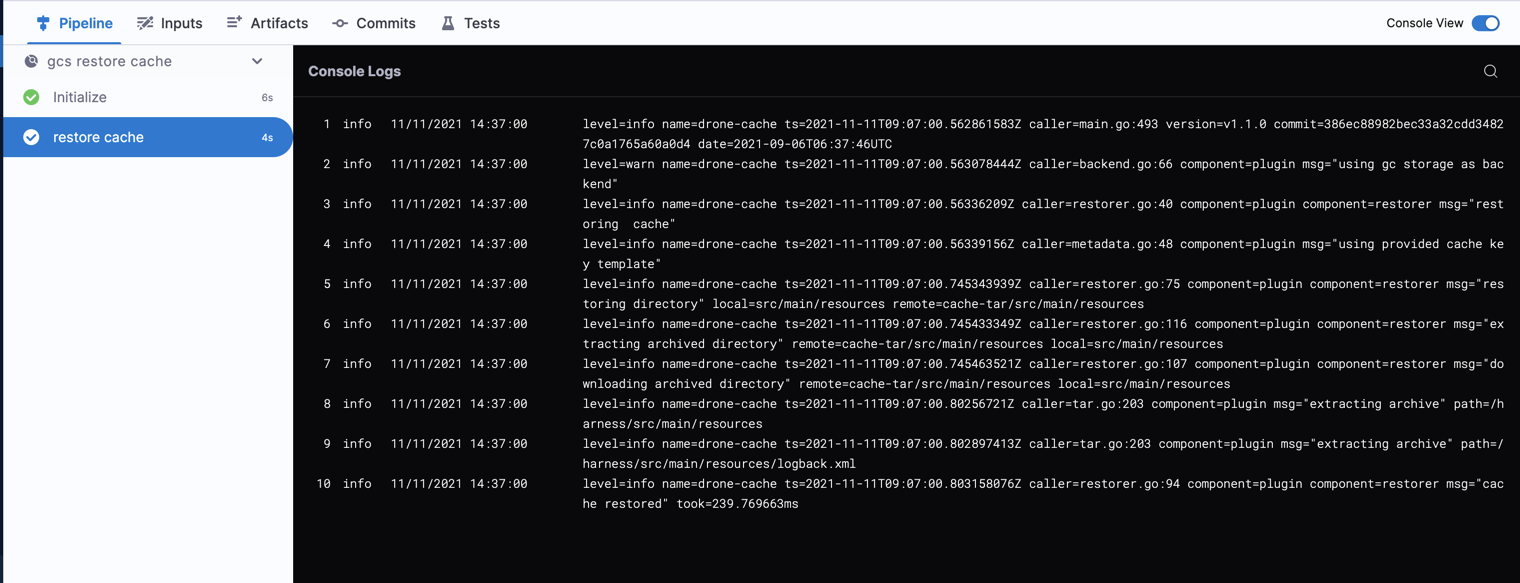

Cache step logs

You can observe and review build logs on the Build details page.

Save Cache to GCS logs

level=info name=drone-cache ts=2021-11-11T09:06:48.834761074Z caller=rebuilder.go:93 component=plugin component=rebuilder msg="cache built" took=253.210746ms

Restore Cache from GCS logs

level=info name=drone-cache ts=2021-11-11T09:07:00.803158076Z caller=restorer.go:94 component=plugin component=restorer msg="cache restored" took=239.769663ms
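To check from a script whether a cache step succeeded, for example after you download the step log, you can parse these logfmt-style lines. The following Python sketch uses a hypothetical helper, `parse_logfmt`, and relies on `shlex` to handle the quoted msg value. It assumes that values don't contain escaped quotes.

```python
import shlex


def parse_logfmt(line: str) -> dict[str, str]:
    """Split a logfmt line into a dict. Repeated keys (such as `component`)
    keep their last value."""
    fields = {}
    for token in shlex.split(line):
        key, _, value = token.partition("=")
        fields[key] = value
    return fields


restore_line = ('level=info name=drone-cache ts=2021-11-11T09:07:00.803158076Z '
                'caller=restorer.go:94 component=plugin component=restorer '
                'msg="cache restored" took=239.769663ms')
entry = parse_logfmt(restore_line)
print(entry["msg"], entry["took"])  # cache restored 239.769663ms
```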

Troubleshoot caching

Go to the CI Knowledge Base for questions and issues related to caching, data sharing, dependency management, workspaces, shared paths, and more. For example:

- Why are changes made to a container image filesystem in a CI step not available in a subsequent step that uses the same container image?

- How can I use an artifact in a different stage from where it was created?

- How can I check if the cache was restored?

- How can I share cache between different OS types (Linux/macOS)?