Configure audit streaming

Audit logs help you track and analyze your system and user events within your Harness account. You can stream these audit logs to external destinations and integrate them with Security Information and Event Management (SIEM) tools to:

- Trigger alerts for specific events

- Create custom views of audit data

- Perform anomaly detection

- Store audit data beyond Harness's 2-year retention limit

- Maintain security compliance and regulatory requirements

All audit event data is sent to your streaming destination and may include sensitive information such as user emails, account identifiers, project details, and resource information. Ensure you only configure trusted and secure destinations.

Add a streaming destination

You can only add streaming destinations at the Account scope. Follow these steps to create a new streaming destination:


- In your Harness account, select Account Settings.

- Navigate to Security and Governance > Audit Trail > Audit Log Streaming.

- Select New Streaming Destination to open the configuration settings.

- Enter a name for the streaming destination.

- (Optional) Enter a description and tags for the streaming destination.

- Select Continue to proceed.

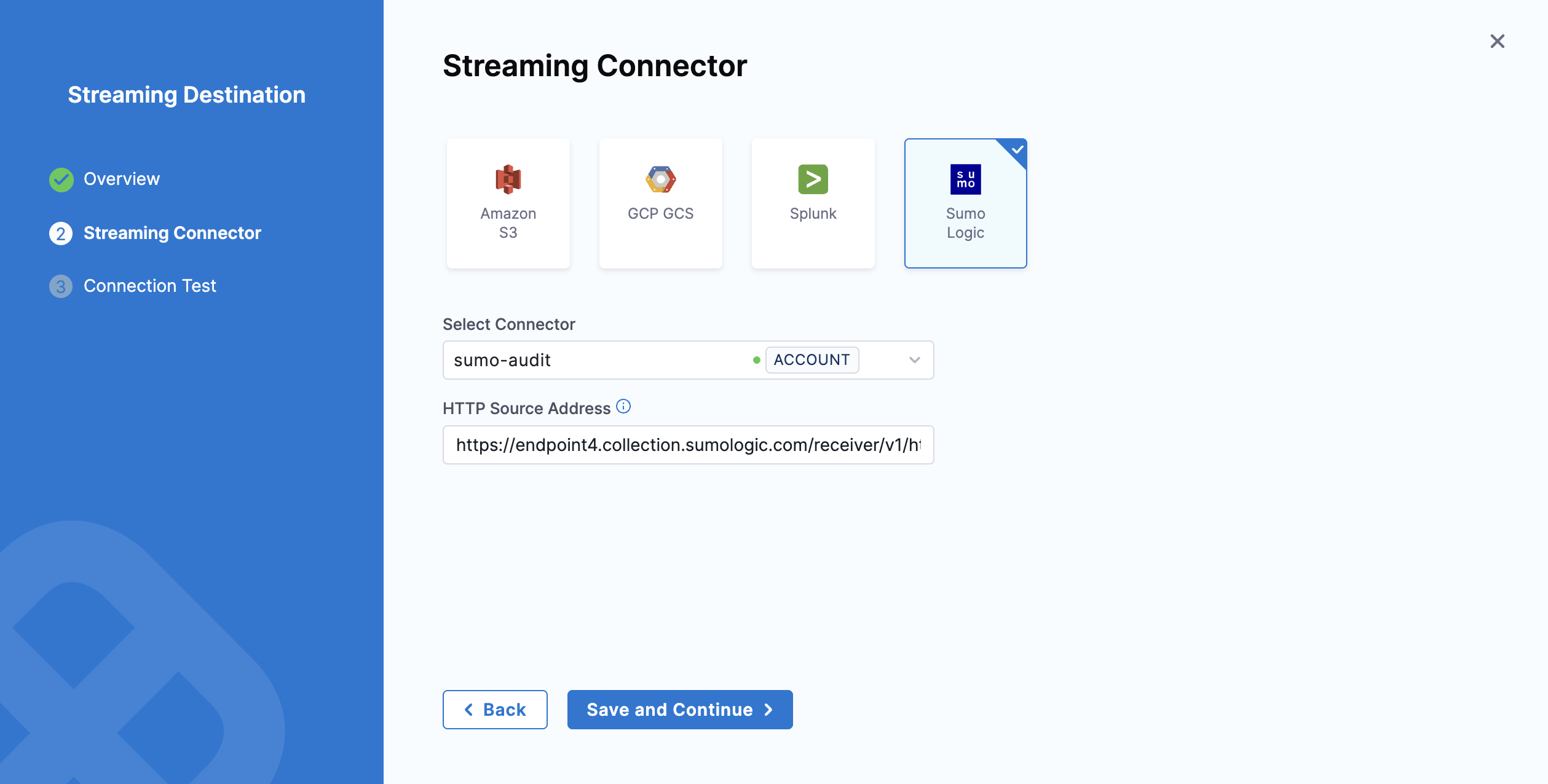

Configure the streaming connector

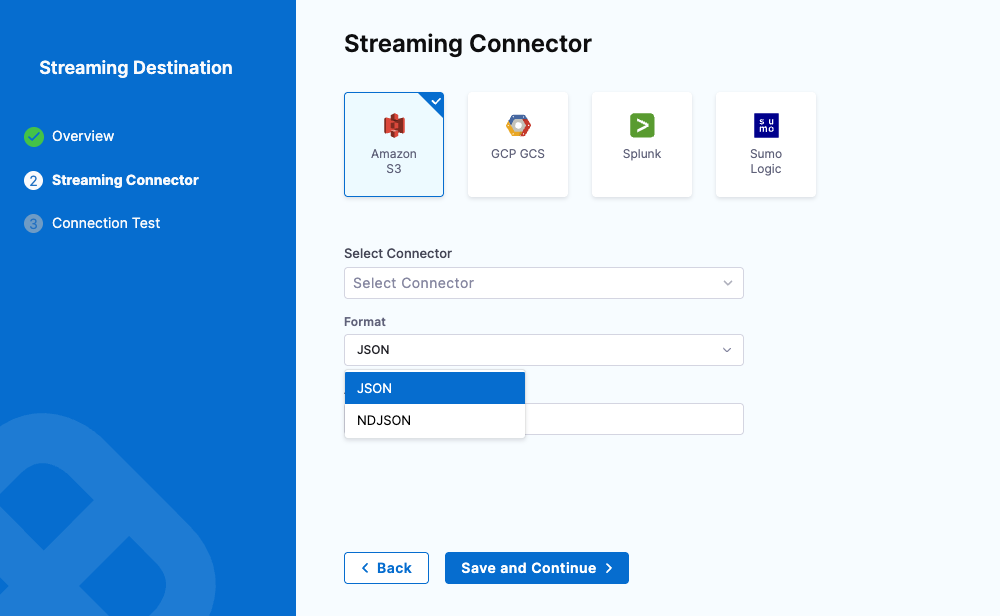

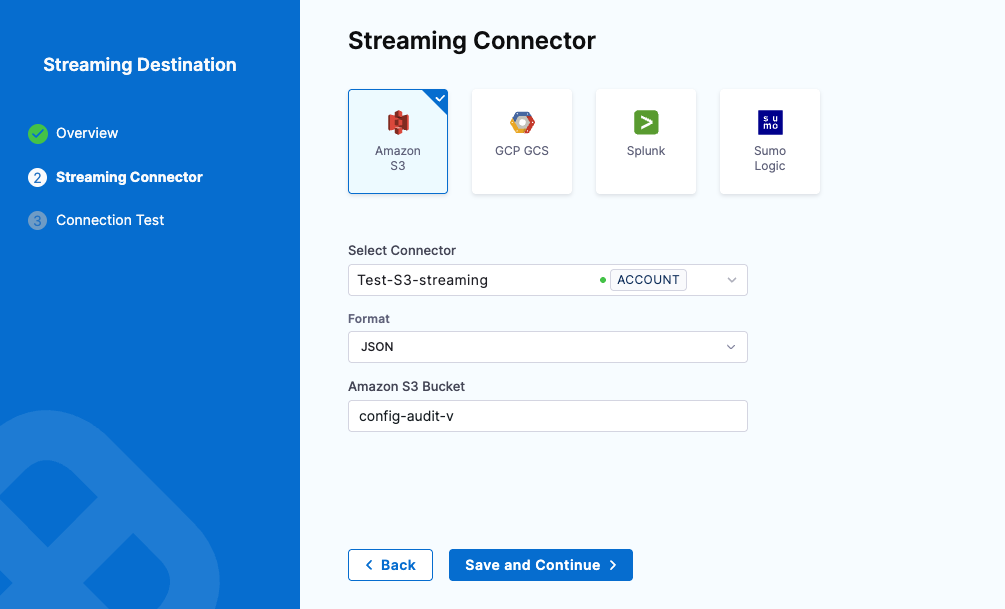

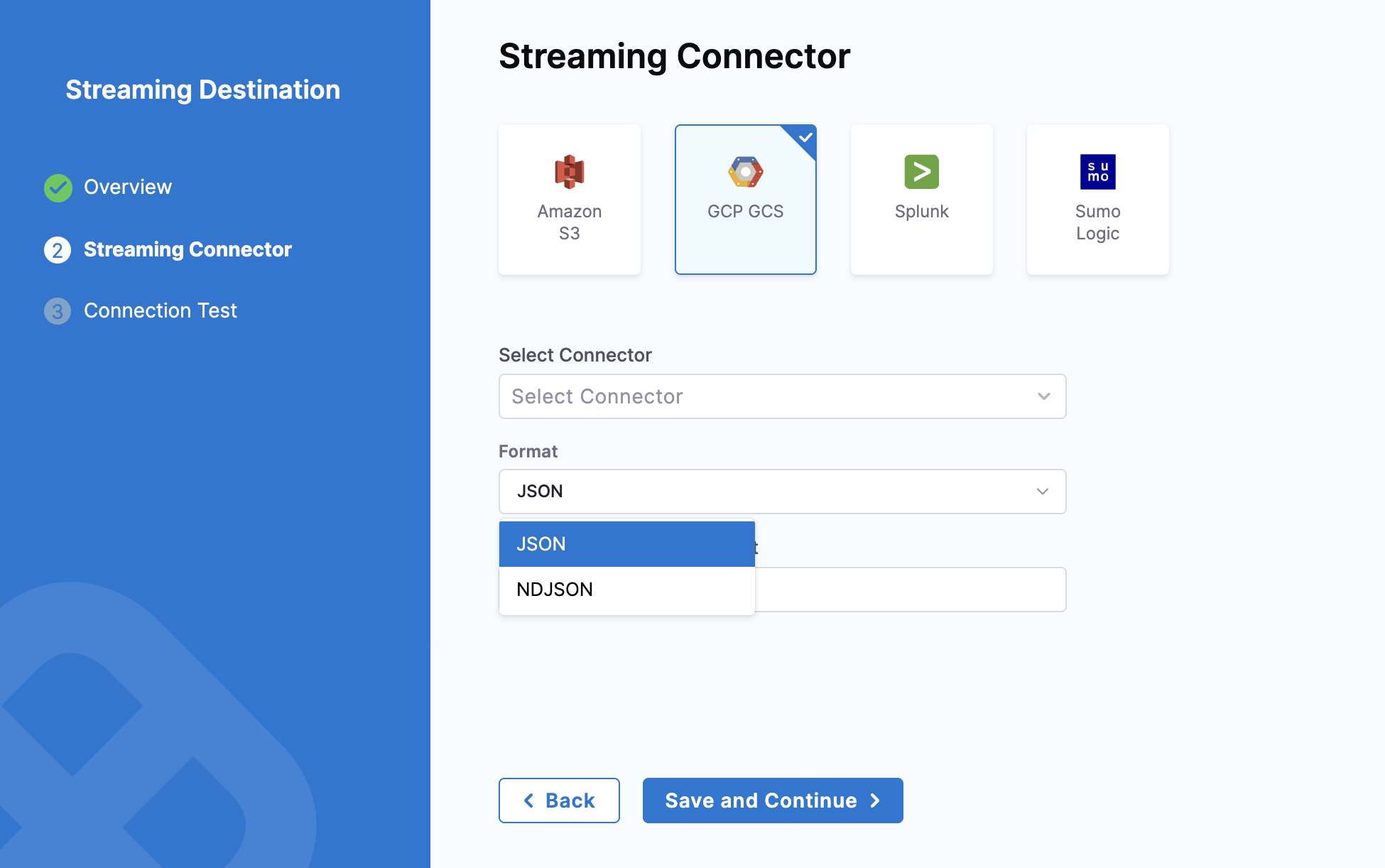

After you add a streaming destination, you can configure the streaming connector. For object storage services (Amazon S3 and GCP GCS), you can stream audit events in either JSON or NDJSON format.

- Amazon S3

- GCP GCS

- Splunk

- Sumo Logic

To configure the Amazon S3 streaming connector:

- Select Amazon S3.

- In Select Connector, select an existing AWS Cloud Provider connector or create a new one.

  You must use the Connect through a Harness Delegate connectivity mode when you set up your AWS Cloud Provider connector. Audit streaming does not support the Connect through Harness Platform option.

  Go to Add an AWS connector for steps to create a new AWS Cloud Provider connector.

- Select Apply Selected.

- Select the Format of the data, either JSON or NDJSON.

  Note: NDJSON format is supported in Harness Delegate version 25.10.87100 or later.

- In Amazon S3 Bucket, enter the bucket name.

  Harness writes all the streaming records to this destination.

- Select Save and Continue.

- After the connection test is successful, select Finish.

The streaming destination is configured and appears in the list of destinations under Audit Log Streaming. By default, the destination is inactive.

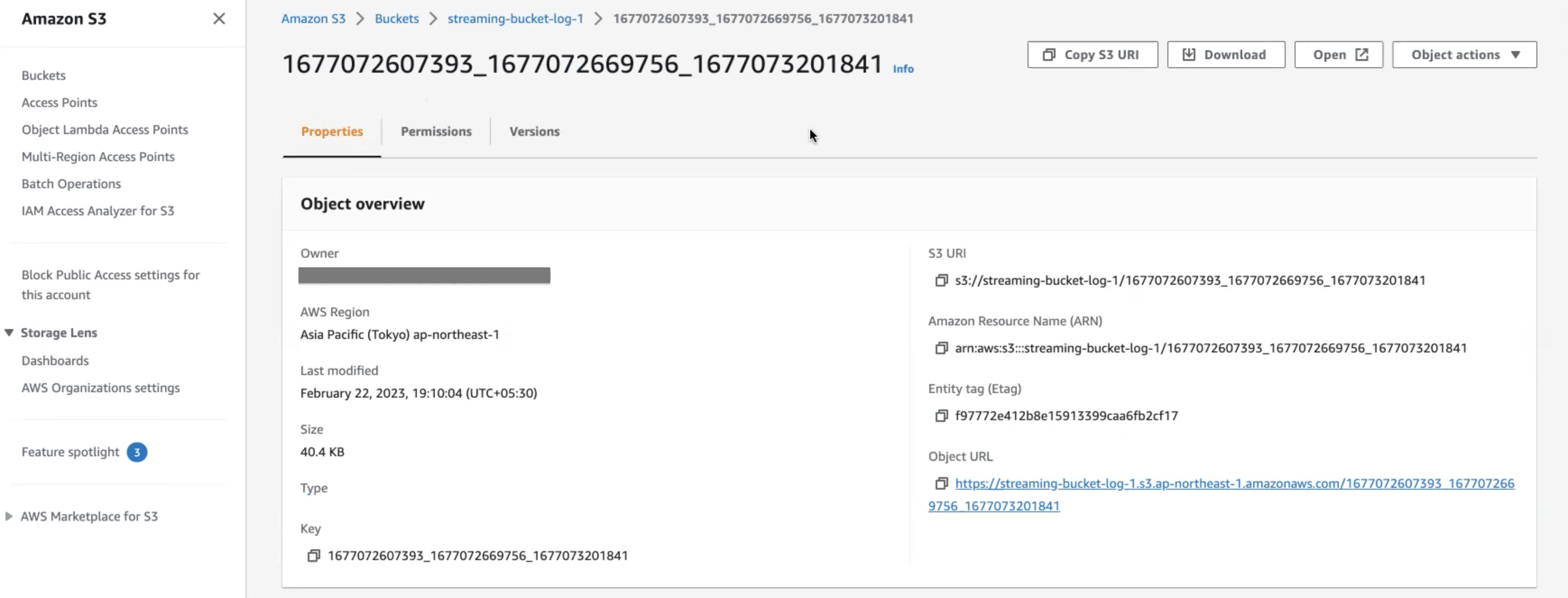

Amazon S3 audit file details

Here is an example of an audit stream file in one of the Amazon S3 buckets.

Currently, this feature is behind the feature flag PL_GCP_GCS_STREAMING_DESTINATION_ENABLED. Contact Harness Support to enable the feature.

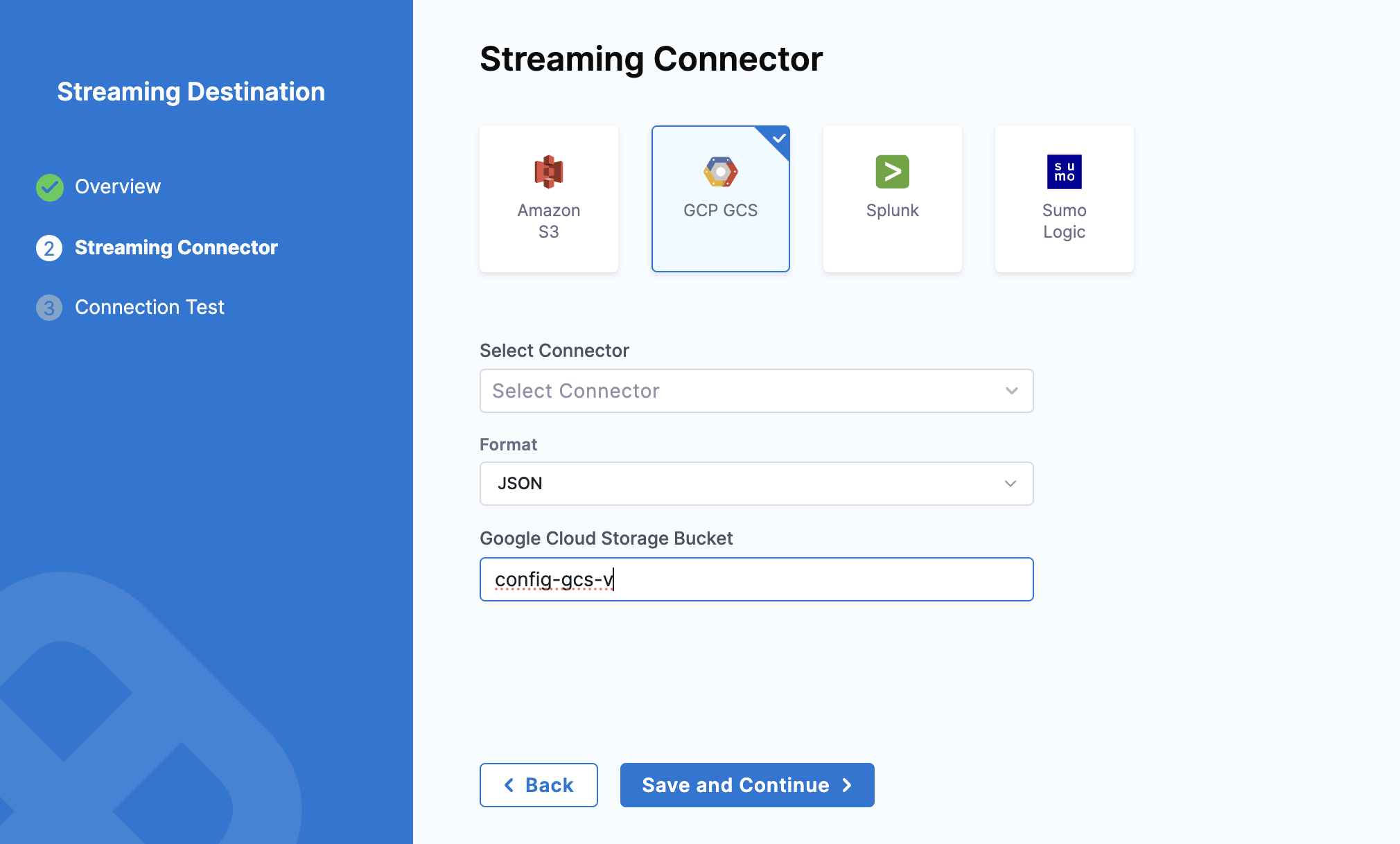

To configure the GCP GCS streaming connector:

- Select GCP GCS.

- In Select Connector, select an existing GCP connector or create a new one.

  You can use either the Connect through a Harness Delegate or the Connect through Harness Platform connectivity mode when you set up your GCP connector. Audit streaming supports both options.

  Go to Add a GCP connector for steps to create a new GCP connector.

- Select Apply Selected.

- Select the Format of the data, either JSON or NDJSON.

  Note: NDJSON format is supported in Harness Delegate version 25.11.87200 or later.

- In Google Cloud Storage Bucket, enter the bucket name. Harness writes all the streaming records to this destination.

- Select Save and Continue.

- After the connection test is successful, select Finish.

The streaming destination is configured and appears in the list of destinations under Audit Log Streaming. By default, the destination is inactive.

Currently, Splunk streaming is behind the feature flag PL_AUDIT_STREAMING_USING_SPLUNK_HEC_ENABLE and requires Harness Delegate version 82500 or later. Contact Harness Support to enable the feature.

Splunk audit log streaming is compatible with Splunk Enterprise and SaaS.

To configure the Splunk streaming connector:

- Select Splunk.

- In Select Connector, select an existing Splunk connector or create a new one.

  Important: When you set up your Splunk connector, you must:

  - Select HEC Authentication.

  - Set up and use your Splunk HEC token.

  - Use the Connect through a Harness Delegate connectivity mode. Audit streaming does not support the Connect through Harness Platform option.

- Select Apply Selected, then select Save and Continue.

- Select the Format of the data, either JSON or NDJSON.

- After the connection test is successful, select Finish.

The streaming destination is configured and appears in the list of destinations under Audit Log Streaming. By default, the destination is inactive.

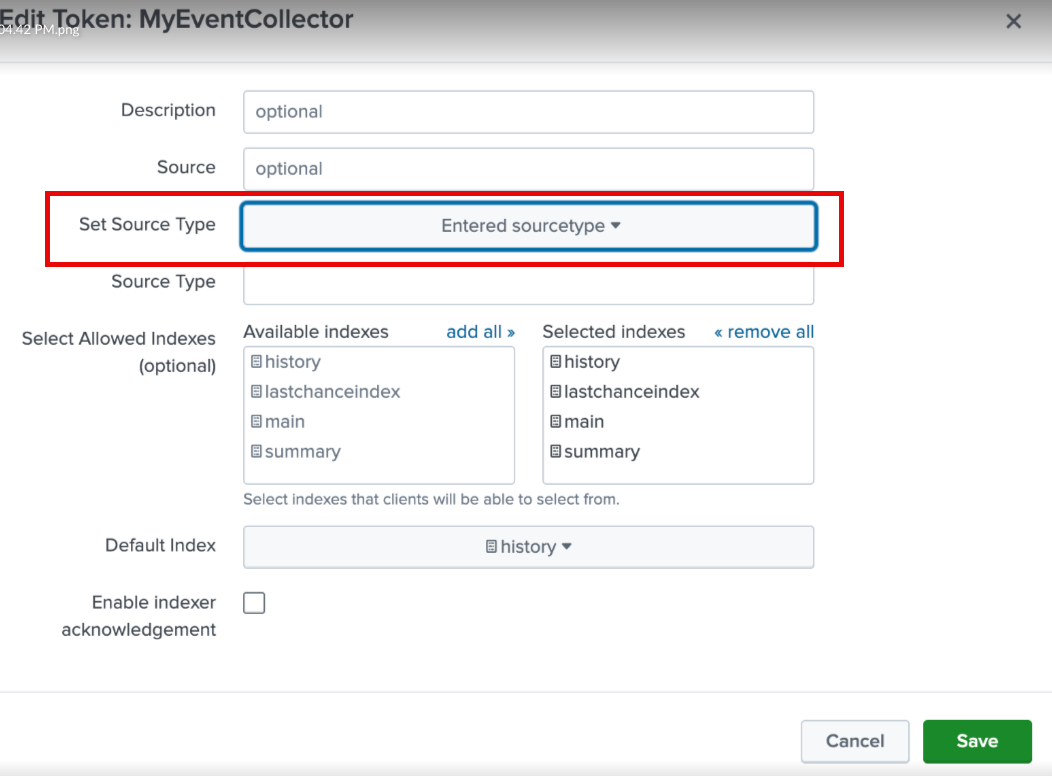

At times, you might experience issues with the HEC connector. Here are some troubleshooting steps you can take to resolve common issues.

- Configure HEC connectors without a declared SourceType. Although the data is JSON-formatted, it is not declared as such. Setting a SourceType of JSON might filter data from the stream.

- Harness uses the following standard endpoints and appends them automatically to the customer's URL. They are not customizable.

  - /services/collector/event

  - /services/collector/health

  - /services/server/info
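To make the endpoint handling concrete, the following minimal sketch joins each fixed path onto a connector URL. Only the three paths come from the text above. The base URL `https://splunk.example.com:8088` and the helper name `hec_endpoints` are hypothetical, and this is not how Harness itself builds the requests.

```python
# Fixed paths that Harness appends to the Splunk URL (taken from the text above).
HEC_PATHS = (
    "/services/collector/event",
    "/services/collector/health",
    "/services/server/info",
)


def hec_endpoints(base_url: str) -> list[str]:
    """Return the full URL for each fixed path, given a connector base URL."""
    base = base_url.rstrip("/")  # avoid a double slash when joining
    return [base + path for path in HEC_PATHS]


if __name__ == "__main__":
    # Hypothetical base URL, used only for illustration.
    for url in hec_endpoints("https://splunk.example.com:8088/"):
        print(url)
```

Listing the resulting URLs this way can help when you check that a firewall or proxy between the delegate and Splunk allows all three paths.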

Currently, Sumo Logic streaming is behind the feature flag PL_ENABLE_SUMOLOGIC_AUDIT_STREAMING and requires Harness Delegate version 85500 or later. Contact Harness Support to enable the feature.

Starting with Harness Delegate version 25.10.87100, each audit event is streamed as a separate log line in Sumo Logic.

Example event streamed to Sumo Logic

{
  "event": "Harness-Audit-Event-68ffa287f277183fb388a560",
  "fields": {
    "auditResource.identifier": "string",
    "auditResourceScope.accountIdentifier": "string",
    "auditEventAuthor.principal.type": "string",
    "auditEventAuthor.principal.identifier": "string",
    "auditAction": "string",
    "auditEventMetadata.batchId": "string",
    "auditHttpRequestInfo.requestMethod": "string",
    "auditResource.type": "string",
    "auditModule": "string",
    "auditEventId": "string",
    "auditEventTime": "number",
    "auditEventAuthor.principal.email": "string",
    "auditHttpRequestInfo.clientIP": "string"
  }
}
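The Sumo Logic event above carries its data as flat, dot-separated keys under `fields`. If a downstream tool expects the nested shape used by the other destinations, a small helper can rebuild it. The sketch below is one way to do that. The function name `unflatten` is hypothetical, and it assumes no key is both a value and a prefix of another key.

```python
def unflatten(fields: dict) -> dict:
    """Rebuild nested objects from dotted keys such as 'auditResource.type'."""
    nested: dict = {}
    for dotted_key, value in fields.items():
        parts = dotted_key.split(".")
        node = nested
        # Walk (or create) one nested dict per intermediate key segment.
        for part in parts[:-1]:
            node = node.setdefault(part, {})
        node[parts[-1]] = value
    return nested


if __name__ == "__main__":
    # A subset of the fields from the example event above.
    sample_fields = {
        "auditResource.identifier": "string",
        "auditResource.type": "string",
        "auditEventAuthor.principal.email": "string",
        "auditAction": "string",
    }
    print(unflatten(sample_fields))
```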

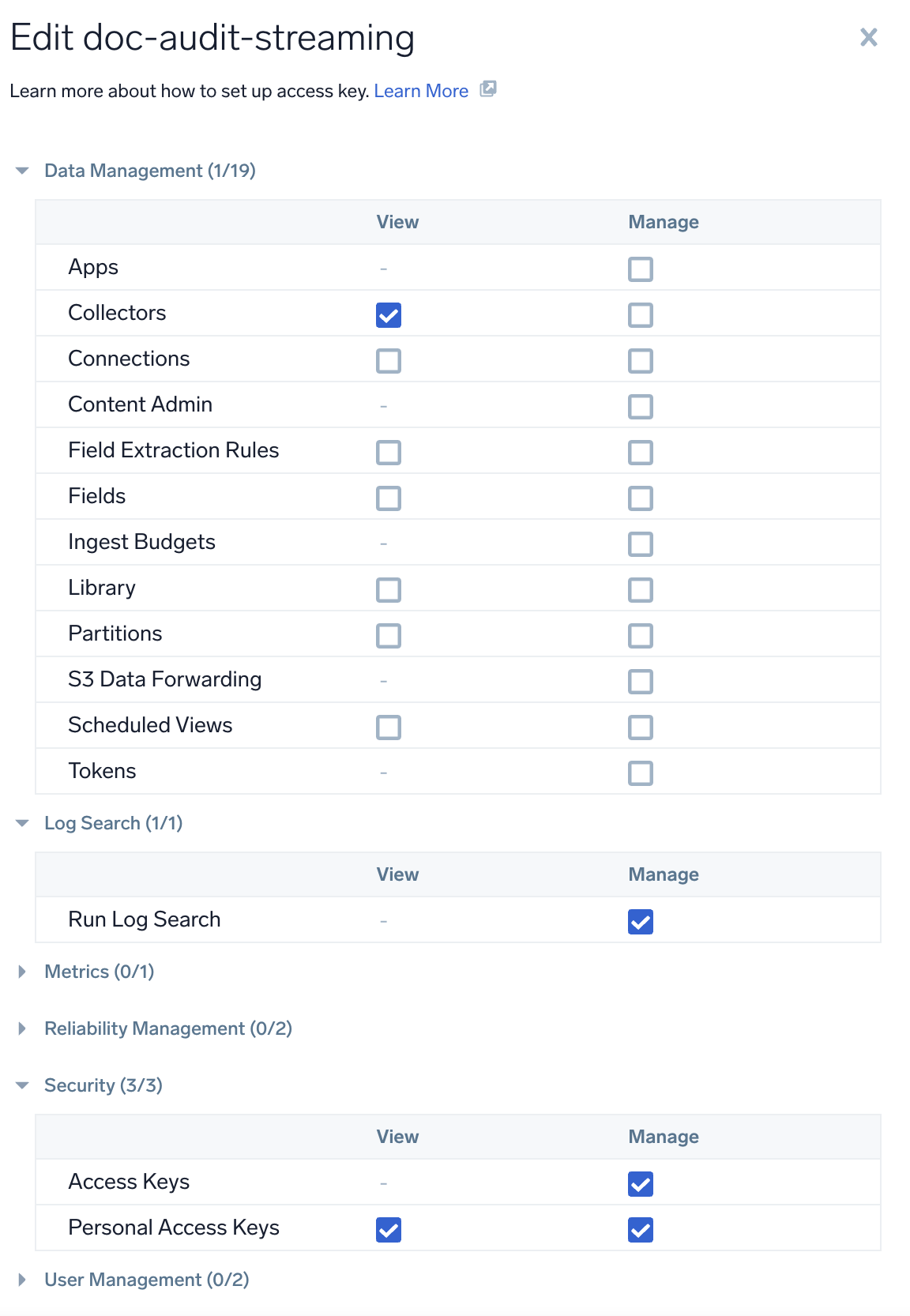

To stream audit logs to Sumo Logic, you need the following:

- A Sumo Logic Access ID and Access Key. An Access Key can have one of two scope types: Default or Custom. If you select Custom, make sure you enable the permissions shown in the image below.

- An HTTP Source Address to receive logs.

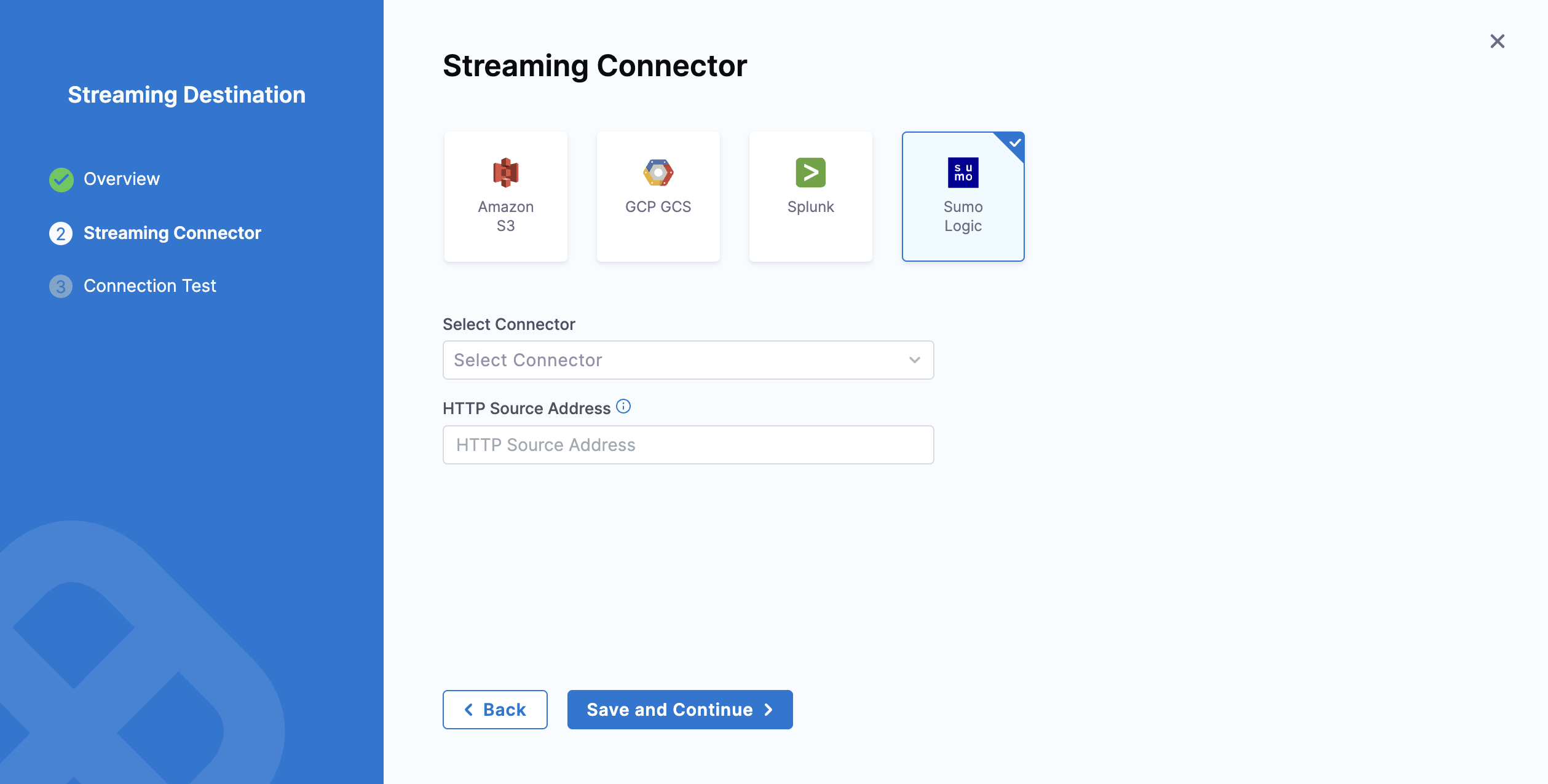

To configure Sumo Logic as a New Streaming Destination:

- Select Sumo Logic.

- In Select Connector, select an existing connector or create a new one. The following steps add a new connector:

  - Select New Connector, then add a name, description, and tags.

  - Add the Sumo Logic API server URL. To find your Sumo Logic API endpoint, refer to the Sumo Logic documentation.

  - Add the Sumo Logic Access ID and Access Key. You can either add new secrets or use existing ones.

  - Complete the Delegate setup, then select Save and Continue.

  - Verify the connection and select Finish to complete the setup.

- Add the HTTP Source Address, then select Save and Continue.

- Verify the connection and select Finish.

If logs do not appear in Sumo Logic, check the following:

- Verify that the correct HTTP Source Address is provided. If an incorrect address is entered, logs are not streamed, and an error appears in the Harness UI.

- Ensure that the access keys are valid and have the required permissions. If an invalid Access ID or Access Key is provided, or if the key does not have the required permissions, Sumo Logic does not generate an error message, and no error is shown in the Harness UI.

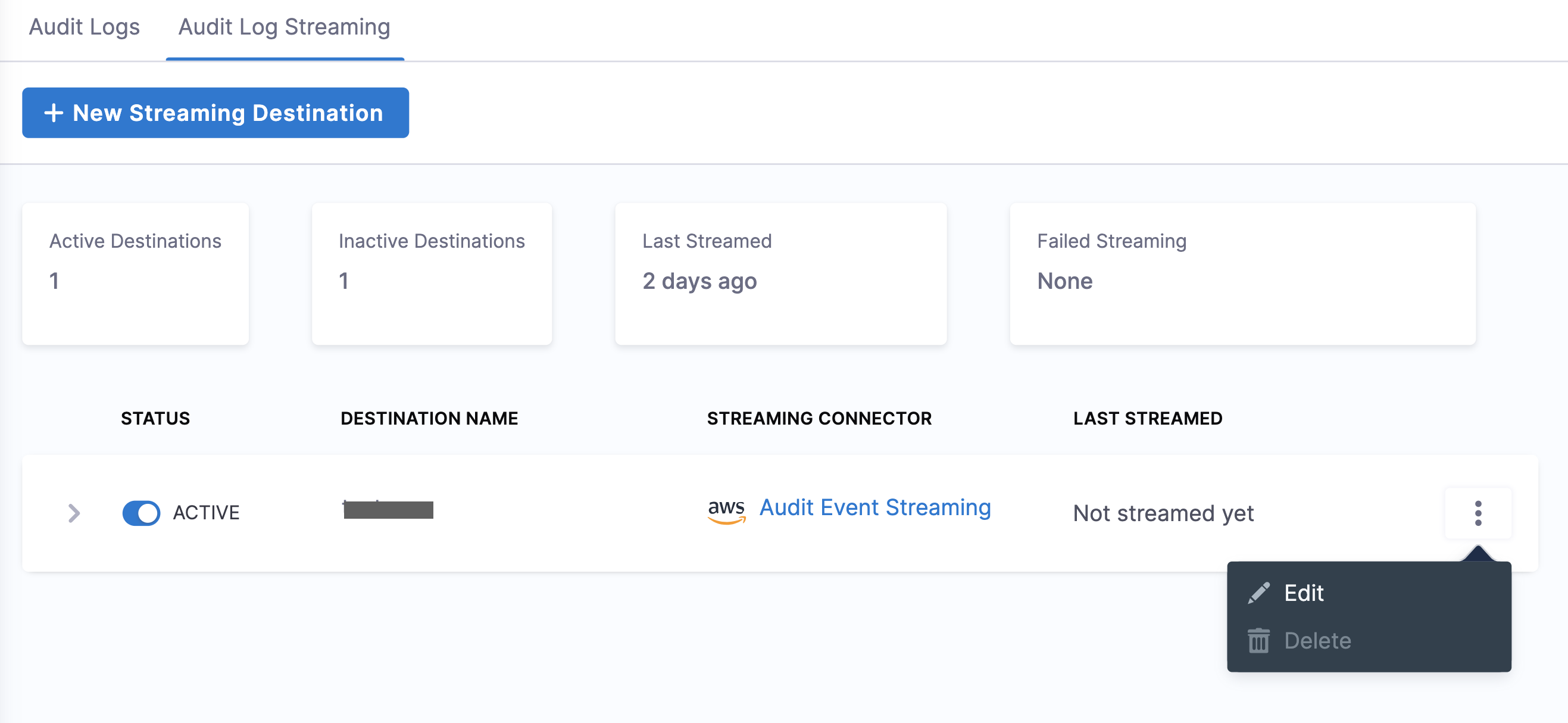

Activate or deactivate streaming

- To start streaming to this destination, toggle the status to Active. Harness starts writing audit logs once the destination is active and streams them every 30 minutes.

- To pause audit streaming, set the status to Inactive. This prevents any new audit events from being streamed to the configured endpoint.

  When you reactivate the streaming destination, Harness resumes streaming the audit logs from the point where it was paused.

Update audit stream

You can change the audit stream configuration by selecting the three dots beside the streaming destination. This opens a pop-up menu with the following options:

- Edit: Select a different streaming destination or make changes to the existing destination.

- Delete: Delete the audit stream destination. You must set the audit stream destination to inactive before you can delete it.

Payload schema

Streamed audit events have a predictable schema in the body of the response.

| Field | Description | Required or optional |
|---|---|---|
| auditEventId | Unique ID for the audit event. | Required |
| auditEventAuthor | Principal attached with audit event. | Required |
| auditModule | Module for which the audit event is generated. | Required |
| auditResource | Resource audited. | Required |
| auditResourceScope | Scope of the audited resource. | Required |
| auditAction | Action on the audited resource. | Required |
| auditEventTime | Date and time of the event. | Required |
| auditHttpRequestInfo | Details of the HTTP request. | Optional |
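A consumer of the stream may want to confirm that each event carries the required fields before processing it. The following minimal sketch checks only the top-level field names listed in the table. The helper name `missing_required_fields` is hypothetical, and it does not validate nested properties or value types.

```python
# Top-level fields marked as required in the payload schema table.
REQUIRED_FIELDS = (
    "auditEventId",
    "auditEventAuthor",
    "auditModule",
    "auditResource",
    "auditResourceScope",
    "auditAction",
    "auditEventTime",
)


def missing_required_fields(event: dict) -> list[str]:
    """Return the required top-level fields that are absent from an event."""
    return [name for name in REQUIRED_FIELDS if name not in event]


if __name__ == "__main__":
    # Hypothetical, deliberately incomplete event used for illustration.
    incomplete_event = {"auditEventId": "abc", "auditAction": "CREATE"}
    print(missing_required_fields(incomplete_event))
```

Because auditHttpRequestInfo is optional, its absence is not reported.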

Example audit event

Each audit stream file contains a list of audit events in JSON format. Key points about the audit stream file naming convention:

- The file name includes three timestamps: <t1>_<t2>_<t3>.

- <t1> and <t2> indicate the time range of the audit events in the file. This range is provided for reference only and may not always be accurate. Timestamps can also be out of range if there is a delay in capturing events.

- <t3> represents the time when the file was written.
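The naming convention above can be split into its three parts with a few lines of code. This sketch makes several assumptions that the text does not confirm: the timestamps contain no underscores or dots, the file name has no extra prefix, and any extension follows the last dot. The timestamps are kept as strings because their format is not specified here, and the names `AuditFileName` and `parse_audit_file_name` are hypothetical.

```python
from typing import NamedTuple


class AuditFileName(NamedTuple):
    range_start: str  # <t1>: start of the event time range (reference only)
    range_end: str    # <t2>: end of the event time range (reference only)
    written_at: str   # <t3>: time when the file was written


def parse_audit_file_name(name: str) -> AuditFileName:
    """Split '<t1>_<t2>_<t3>' (with an optional extension) into its parts."""
    # Assumption: anything after the last dot is a file extension.
    stem = name.rsplit(".", 1)[0] if "." in name else name
    parts = stem.split("_")
    if len(parts) != 3:
        raise ValueError(f"expected <t1>_<t2>_<t3>, got {name!r}")
    return AuditFileName(*parts)


if __name__ == "__main__":
    # Placeholder values, not a real Harness file name.
    print(parse_audit_file_name("t1_t2_t3.json"))
```

Since <t1> and <t2> are provided for reference only, treat the parsed range as a hint and rely on each event's auditEventTime for exact ordering.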

- JSON

- NDJSON

In JSON format, an array of objects is streamed per batch. Each object in the array represents an audit event and follows this schema:

{
  "$schema": "http://json-schema.org/draft-04/schema#",
  "type": "object",
  "properties": {
    "auditEventId": {
      "type": "string",
      "description": "Unique ID for each audit event"
    },
    "auditEventAuthor": {
      "type": "object",
      "properties": {
        "principal": {
          "type": "object",
          "properties": {
            "type": {
              "type": "string"
            },
            "identifier": {
              "type": "string"
            },
            "email": {
              "type": "string"
            }
          },
          "required": ["type", "identifier"]
        }
      },
      "required": ["principal"],
      "description": "Information about Author of the audit event"
    },
    "auditModule": {
      "type": "string",
      "description": "Information about Module of audit event origin"
    },
    "auditResource": {
      "type": "object",
      "properties": {
        "type": {
          "type": "string"
        },
        "identifier": {
          "type": "string"
        }
      },
      "required": ["type", "identifier"],
      "description": "Information about resource for which Audit event was generated"
    },
    "auditResourceScope": {
      "type": "object",
      "properties": {
        "accountIdentifier": {
          "type": "string"
        },
        "orgIdentifier": {
          "type": "string"
        },
        "projectIdentifier": {
          "type": "string"
        }
      },
      "required": ["accountIdentifier"],
      "description": "Information about scope of the resource in Harness"
    },
    "auditAction": {
      "type": "string",
      "description": "Action, for example CREATE, UPDATE, DELETE, TRIGGERED, ABORTED, or FAILED (not an exhaustive list)"
    },
    "auditHttpRequestInfo": {
      "type": "object",
      "properties": {
        "requestMethod": {
          "type": "string"
        },
        "clientIP": {
          "type": "string"
        }
      },
      "required": ["requestMethod", "clientIP"],
      "description": "Information about HTTP Request"
    },
    "auditEventTime": {
      "type": "string",
      "description": "Time of auditEvent in milliseconds"
    }
  },
  "required": [
    "auditEventId",
    "auditEventAuthor",
    "auditModule",
    "auditResource",
    "auditResourceScope",
    "auditAction",
    "auditEventTime"
  ]
}
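Because a JSON batch is a single array of event objects, a consumer can load the whole batch and then work through the events. The sketch below counts events per auditAction as a simple example of a custom view. The function name `count_actions` and the sample batch are hypothetical, and the sample includes only the field that the sketch reads.

```python
import json
from collections import Counter


def count_actions(batch_text: str) -> Counter:
    """Count audit events per auditAction in one JSON batch (an array)."""
    events = json.loads(batch_text)
    if not isinstance(events, list):
        raise ValueError("expected a JSON array of audit events")
    return Counter(event["auditAction"] for event in events)


if __name__ == "__main__":
    # Hypothetical batch with only the field this sketch reads.
    sample = '[{"auditAction": "CREATE"}, {"auditAction": "DELETE"}]'
    print(count_actions(sample))
```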

In NDJSON format, each audit event is streamed as a single log line with the following structure:

{
  "auditEventId": "string",
  "auditEventAuthor": {
    "principal": {
      "type": "string",
      "identifier": "string"
    }
  },
  "auditModule": "string",
  "auditResource": {
    "type": "string",
    "identifier": "string"
  },
  "auditResourceScope": {
    "accountIdentifier": "string"
  },
  "auditAction": "string",
  "auditHttpRequestInfo": {
    "requestMethod": "string",
    "clientIP": "string"
  },
  "auditEventTime": "number",
  "auditEventMetadata": {
    "batchId": "string"
  }
}
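An NDJSON file holds one JSON object per line, so it can be parsed line by line instead of as one array. The following minimal sketch does that and also converts auditEventTime to a UTC datetime. It assumes the value is a Unix epoch timestamp in milliseconds. The schema describes the time as being in milliseconds but does not state the epoch, so treat that as an assumption. The function names are hypothetical.

```python
import json
from datetime import datetime, timezone


def parse_ndjson(text: str) -> list[dict]:
    """Parse NDJSON: one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def event_time_utc(event: dict) -> datetime:
    """Convert auditEventTime to a UTC datetime, assuming epoch milliseconds."""
    return datetime.fromtimestamp(event["auditEventTime"] / 1000, tz=timezone.utc)


if __name__ == "__main__":
    # Hypothetical two-line payload with only the fields this sketch reads.
    payload = (
        '{"auditEventId": "a", "auditEventTime": 1700000000000}\n'
        '{"auditEventId": "b", "auditEventTime": 1700000001000}\n'
    )
    for event in parse_ndjson(payload):
        print(event["auditEventId"], event_time_utc(event).isoformat())
```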