Continuous Integration (CI) FAQs

Build infrastructure

What is build infrastructure and why do I need it for Harness CI?

A build stage's infrastructure definition, the build infrastructure, defines "where" your stage runs. It can be a Kubernetes cluster, a VM, or even your own local machine. While individual steps can run in their own containers, your stage itself requires a build infrastructure to define a common workspace for the entire stage. For more information about build infrastructure and CI pipeline components go to:

What kind of build infrastructure can I use? Which operating systems are supported?

For support operating systems, architectures, and cloud providers, go to Which build infrastructure is right for me.

Can I use multiple build infrastructures in one pipeline?

Yes, each stage can have a different build infrastructure. Additionally, depending on your stage's build infrastructure, you can also run individual steps on containers rather than the host. This flexibility allows you to choose the most suitable infrastructure for each part of your CI pipeline.

I have a MacOS build, do I have to use homebrew as the installer?

No. Your build infrastructure can be configured to use whichever tools you like. For example, Harness Cloud build infrastructure includes pre-installed versions of xcode and other tools, and you can install other tools or versions of tools that you prefer to use. For more information, go to the CI macOS and iOS development guide.

What's the difference between CI_MOUNT_VOLUMES, ADDITIONAL_CERTS_PATH, and DESTINATION_CA_PATH?

CI_MOUNT_VOLUMES - An environment variable used for CI Build Stages. This variable should be set to a comma-separated list of source:destination mappings for certificates where source is the certificate path on the delegate, and destination is the path where you want to expose the certificates on the build containers. For example,

- name: CI_MOUNT_VOLUMES

value: "/tmp/ca.bundle:/etc/ssl/certs/ca-bundle.crt,/tmp/ca.bundle:/kaniko/ssl/certs/additional-ca-cert-bundle.crt"

ADDITIONAL_CERTS_PATH - An environment variable used for CI Build Stages. This variable should be set to the path where the certificates exist in the delegate. For example,

- name: ADDITIONAL_CERTS_PATH

value: "/tmp/ca.bundle"

DESTINATION_CA_PATH - An environment variable used for CI Build Stages. This variable should be set to a comma-separated list of files where the certificate should be mounted. For example,

- name: DESTINATION_CA_PATH

value: "/etc/ssl/certs/ca-bundle.crt,/kaniko/ssl/certs/additional-ca-cert-bundle.crt"

ADDTIONAL_CERTS_PATH and CI_MOUNT_VOLUMES work in tandem to ensure certificates are mounted on the Kubernetes Build Infrastructure, whereas DESTINATION_CA_PATH does not require other environment variables to mount certificates. Instead, DESTINATION_CA_PATH relies on the certificate being mounted at /opt/harness-delegate/ca-bundle in order to copy the certificate to the provided comma-separated list of file paths.

DESTINATION_CA_PATH and ADDTIONAL_CERTS_PATH/CI_MOUNT_VOLUMES both perform the same operation of mounting certificates to Kubernetes Build Infrastructure. Harness recommends DESTINATION_CA_PATH over ADDTIONAL_CERTS_PATH/CI_MOUNT_VOLUMES however, if both are defined, DESTINATION_CA_PATH will be consumed over ADDTIONAL_CERTS_PATH/CI_MOUNT_VOLUMES.

For more information and instructions on how to mount certificates, please visit the Configure a Kubernetes build farm to use self-signed certificates documentation.

Local runner build infrastructure

Can I run builds locally? Can I run builds directly on my computer?

Yes. For instructions, go to Set up a local runner build infrastructure.

How do I check the runner status for a local runner build infrastructure?

To confirm that the runner is running, send a cURL request like curl http://localhost:3000/healthz.

If the running is running, you should get a valid response, such as:

{

"version": "0.1.2",

"docker_installed": true,

"git_installed": true,

"lite_engine_log": "no log file",

"ok": true

}

How do I check the delegate status for a local runner build infrastructure?

The delegate should connect to your instance after you finish the installation workflow above. If the delegate does not connect after a few minutes, run the following commands to check the status:

docker ps

docker logs --follow <docker-delegate-container-id>

The container ID should be the container with image name harness/delegate:latest.

Successful setup is indicated by a message such as Finished downloading delegate jar version 1.0.77221-000 in 168 seconds.

Runner can't find an available, non-overlapping IPv4 address pool.

The following runner error can occur during stage setup (the Initialize step in build logs):

Could not find an available, non-overlapping IPv4 address pool among the defaults to assign to the network.

This error means the number of Docker networks has exceeded the limit. To resolve this, you need to clean up unused Docker networks. To get a list of existing networks, run docker network ls, and then remove unused networks with docker network rm or docker network prune.

Docker daemon fails with invalid working directory path on Windows local runner build infrastructure

The following error can occur in Windows local runner build infrastructures:

Error response from daemon: the working directory 'C:\harness-DIRECTORY_ID' is invalid, it needs to be an absolute path

This error indicates there may be a problem with the Docker installation on the host machine.

-

Run the following command (or a similar command) to check if the same error occurs:

docker run -w C:\blah -it -d mcr.microsoft.com/windows/servercore:ltsc2022 -

If you get the

working directory is invaliderror again, uninstall Docker and follow the instructions in the Windows documentation to Prepare Windows OS containers for Windows Server. -

Restart the host machine.

How do I check if the Docker daemon is running in a local runner build infrastructure?

To check if the Docker daemon is running, use the docker info command. An error response indicates the daemon is not running. For more information, go to the Docker documentation on Troubleshooting the Docker daemon

Runner process quits after terminating SSH connection for local runner build infrastructure

If you launch the Harness Docker Runner binary within an SSH session, the runner process can quit when you terminate the SSH session.

To avoid this with macOS runners, use this command when you start the runner binary:

./harness-docker-runner-darwin-amd64 server >log.txt 2>&1 &

disown

For Linux runners, you can use a tool such as nohup when you start the runner, for example:

nohup ./harness-docker-runner-darwin-amd64 server >log.txt 2>&1 &

Where does the harness-docker-runner create the hostpath volume directories on macOS?

The harness-docker-runner creates the host volumes under /tmp/harness-* on macOS platforms.

Why do I get a "failed to create directory" error when trying to run a build on local build infra?

failed to create directory for host volume path: /addon: mkdir /addon: read-only file system

This error could occur when there's a mismatch between the OS type of the local build infrastructure and the OS type selected in the pipeline's infrastructure settings. For example, if your local runner is on a macOS platform, but the pipeline's infrastructure is set to Linux, this error can occur.

Is there an auto-upgrade feature for the Harness Docker runner?

No. You must upgrade the Harness Docker runner manually.

Self-managed VM build infrastructure

Can I use the same build VM for multiple CI stages?

No. The build VM terminates at the end of the stage and a new VM is used for the next stage.

Why are build VMs running when there are no active builds?

With self-managed VM build infrastructure, the pool value in your pool.yml specifies the number of "warm" VMs. These VMs are kept in a ready state so they can pick up build requests immediately.

If there are no warm VMs available, the runner can launch additional VMs up to the limit in your pool.yml.

If you don't want any VMs to sit in a ready state, set your pool to 0. Note that having no ready VMs can increase build time.

For AWS VMs, you can set hibernate in your pool.yml to hibernate warm VMs when there are no active builds. For more information, go to Configure the Drone pool on the AWS VM.

Do I need to install Docker on the VM that runs the Harness Delegate and Runner?

Yes. Docker is required for self-managed VM build infrastructure.

AWS build VM creation fails with no default VPC

When you run the pipeline, if VM creation in the runner fails with the error no default VPC, then you need to set subnet_id in pool.yml.

AWS VM builds stuck at the initialize step on health check

If your CI build gets stuck at the initialize step on the health check for connectivity with lite engine, either lite engine is not running on your build VMs or there is a connectivity issue between the runner and lite engine.

- Verify that lite-engine is running on your build VMs.

- SSH/RDP into a VM from your VM pool that is in a running state.

- Check whether the lite-engine process is running on the VM.

- Check the cloud init output logs to debug issues related to startup of the lite engine process. The lite engine process starts at VM startup through a cloud init script.

- If lite-engine is running, verify that the runner can communicate with lite-engine from the delegate VM.

- Run

nc -vz <build-vm-ip> 9079from the runner. - If the status is not successful, make sure the security group settings in

runner/pool.ymlare correct, and make sure your security group setup in AWS allows the runner to communicate with the build VMs. - Make sure there are no firewall or anti-malware restrictions on your AMI that are interfering with the cloud init script's ability to download necessary dependencies. For details about these dependencies, go to Set up an AWS VM Build Infrastructure - Start the runner.

- Run

AWS VM delegate connected but builds fail

If the delegate is connected but your AWS VM builds are failing, check the following:

- Make sure your the AMIs, specified in

pool.yml, are still available.- Amazon reprovisions their AMIs every two months.

- For a Windows pool, search for an AMI called

Microsoft Windows Server 2022 Base with Containersand updateamiinpool.yml.

- Confirm your security group setup and security group settings in

runner/pool.yml.

Use internal or custom AMIs with self-managed AWS VM build infrastructure

If you are using an internal or custom AMI, make sure it has Docker installed.

Additionally, make sure there are no firewall or anti-malware restrictions interfering with initialization, as described in CI builds stuck at the initialize step on health check.

Where can I find logs for self-managed AWS VM lite engine and cloud init output?

- Linux

- Lite engine logs:

/var/log/lite-engine.log - Cloud init output logs:

/var/log/cloud-init-output.log

- Lite engine logs:

- Windows

- Lite engine logs:

C:\Program Files\lite-engine\log.out - Cloud init output logs:

C:\ProgramData\Amazon\EC2-Windows\Launch\Log\UserdataExecution.log

- Lite engine logs:

What does it mean if delegate.task throws a "ConnectException failed to connect" error?

Before submitting a task to a delegate, Harness runs a capability check to confirm that the delegate is connected to the runner. If the delegate can't connect, then the capability check fails and that delegate is ignored for the task. This can cause failed to connect errors on delegate task assignment, such as:

INFO io.harness.delegate.task.citasks.vm.helper.HttpHelper - [Retrying failed to check pool owner; attempt: 18 [taskId=1234-DEL] \

java.net.ConnectException: Failed to connect to /127.0.0.1:3000\

To debug this issue, investigate delegate connectivity in your VM build infrastructure configuration:

- Verify connectivity for AWS VM build infra

- Verify connectivity for Microsoft Azure VM build infra

- Verify connectivity for GCP VM build infra

- Verify connectivity for Anka macOS VM build infra

How to establish a VPN connection within a CI pipeline?

One way to establish a secure connection between our platform’s servers and a customer’s on-premises infrastructure is through a Virtual Private Network (VPN).

- The user must publish a public IP address (or have a domain).

- The VPN connection must be established before any step that relies on VPN traffic for functionality.

Here is an example of using an OpenVPN server, but you can apply the same approach to Strongswan, Cisco, or any other VPN server.

Steps to configure OpenVPN

- Download an OpenVPN file, "config.ovpn".

- Encode the file to Base64 and save it.

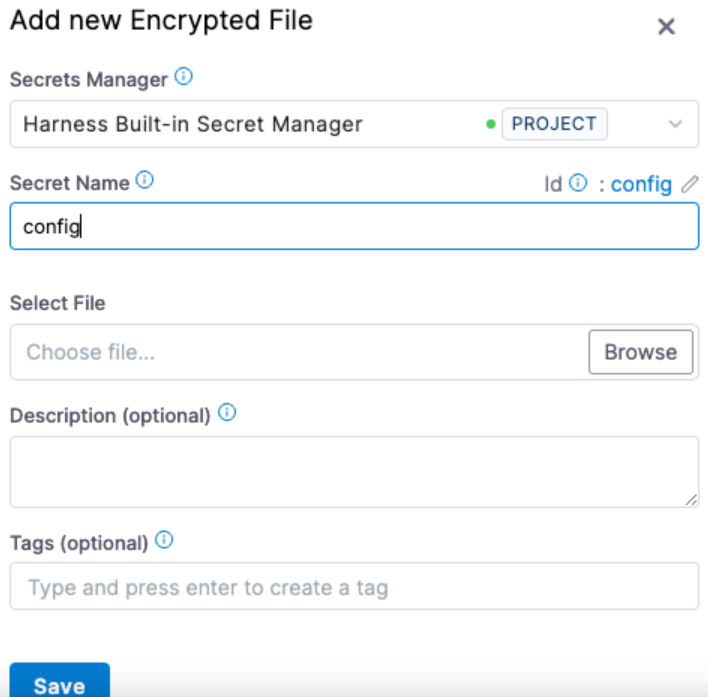

- Add the file as a secret.

-

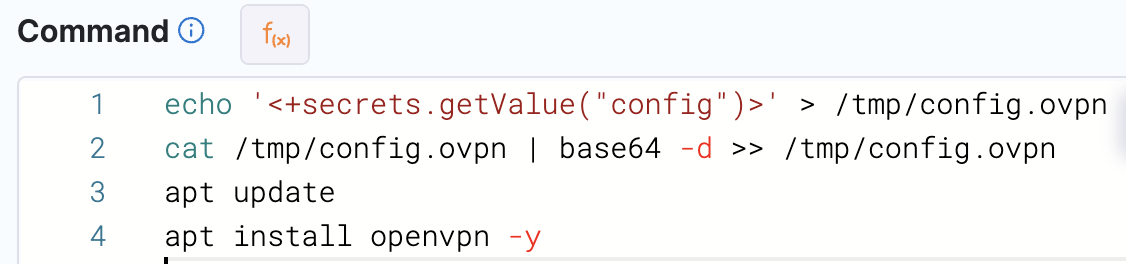

Decode the file, save it as a config file, and install OpenVPN.

-

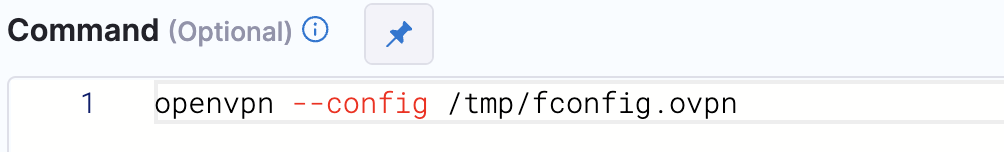

Run OpenVPN with the new file as a Background Step.

-

Continue with the rest of the pipeline steps.

Harness Cloud

What is Harness Cloud?

Harness Cloud lets you run builds on Harness-managed runners that are preconfigured with tools, packages, and settings commonly used in CI pipelines. It is one of several build infrastructure options offered by Harness. For more information, go to Which build infrastructure is right for me.

How do I use Harness Cloud build infrastructure?

Configuring your pipeline to use Harness Cloud takes just a few minutes. Make sure you meet the requirements for connectors and secrets, then follow the quick steps to use Harness Cloud.

Account verification error with Harness Cloud on Free plan

Recently Harness has been the victim of several Crypto attacks that use our Harness-managed build infrastructure (Harness Cloud) to mine cryptocurrencies. Harness Cloud is available to accounts on the Free tier of Harness CI. Unfortunately, to protect our infrastructure, Harness now limits the use of the Harness Cloud build infrastructure to business domains and block general-use domains, like Gmail, Hotmail, Yahoo, and other unverified domains.

To address these issues, you can do one of the following:

- Use the local runner build infrastructure option, or upgrade to a paid plan to use the self-managed VM or Kubernetes cluster build infrastructure options. There are no limitations on builds using your own infrastructure.

- Create a Harness account with your work email and not a generic email address, like a Gmail address.

What is the Harness Cloud build credit limit for the Free plan?

The Free plan allows 2,000 build credits per month. For more information, go to Harness Cloud billing and build credits.

Can I use xcode for a MacOS build with Harness Cloud?

Yes. Harness Cloud macOS runners include several versions of xcode as well as homebrew. For details, go to Harness Cloud image specifications. You can also install additional tools at runtime.

What Linux distribution does Harness Cloud use?

For Harness CI Cloud machine specs, go to Harness Cloud image specifications.

Can I use my own secrets manager with Harness Cloud build infrastructure?

Yes, Harness supports secret managers from various cloud providers, including HashiCorp Vault.

Connector errors with Harness Cloud build infrastructure

To use Harness Cloud build infrastructure, all connectors used in the stage must connect through the Harness Platform. This means that:

- GCP connectors can't inherit credentials from the delegate. They must be configured to connect through the Harness Platform.

- Azure connectors can't inherit credentials from the delegate. They must be configured to connect through the Harness Platform.

- AWS connectors can't use IRSA, AssumeRole, or delegate connectivity mode. They must connect through the Harness Platform with access key authentication.

For more information, go to Use Harness Cloud build infrastructure - Requirements for connectors and secrets.

To change the connector's connectivity mode:

- Go to the Connectors page at the account, organization, or project scope. For example, to edit account-level connectors, go to Account Settings, select Account Resources, and then select Connectors.

- Select the connector that you want to edit.

- Select Edit Details.

- Select Continue until you reach Select Connectivity Mode.

- Select Change and select Connect through Harness Platform.

- Select Save and Continue and select Finish.

Built-in Harness Docker Connector doesn't work with Harness Cloud build infrastructure

Depending on when your account was created, the built-in Harness Docker Connector (account.harnessImage) might be configured to connect through a Harness Delegate instead of the Harness Platform. In this case, attempting to use this connector with Harness Cloud build infrastructure generates the following error:

While using hosted infrastructure, all connectors should be configured to go via the Harness platform instead of via the delegate. \

Please update the connectors: [harnessImage] to connect via the Harness platform instead. \

This can be done by editing the connector and updating the connectivity to go via the Harness platform.

To resolve this error, you can either modify the Harness Docker Connector or use another Docker connector that you have already configured to connect through the Harness Platform.

To change the connector's connectivity settings:

- Go to Account Settings and select Account Resources.

- Select Connectors and select the Harness Docker Connector (ID:

harnessImage). - Select Edit Details.

- Select Continue until you reach Select Connectivity Mode.

- Select Change and select Connect through Harness Platform.

- Select Save and Continue and select Finish.

Can I change the CPU/memory allocation for steps running on Harness cloud?

Unlike with other build infrastructures, you can't change the CPU/memory allocation for steps running on Harness Cloud. Step containers running on Harness Cloud build VMs automatically use as much as CPU/memory as required up to the available resource limit in the build VM.

Does gsutil work with Harness Cloud?

No, gsutil is deprecated. You should use gcloud-equivalent commands instead, such as gcloud storage cp instead of gsutil cp.

However, neither gsutil nor gcloud are recommended with Harness Cloud build infrastructure. Harness Cloud sources build VMs from a variety of cloud providers, and it is impossible to predict which specific cloud provider hosts the Harness Cloud VM that your build uses for any single execution. Therefore, avoid using tools (such as gsutil or gcloud) that require a specific cloud provider's environment.

Can't use STO steps with Harness Cloud macOS runners

Currently, STO scan steps aren't compatible with Harness Cloud macOS runners, because Apple's M1 CPU doesn't support nested virtualization. You can use STO scan steps with Harness Cloud Linux and Windows runners.

How do I configure OIDC with GCP WIF for Harness Cloud builds?

Go to Configure OIDC with GCP WIF for Harness Cloud builds.

GCP OIDC Connector keeps saying "OIDC Configuration Error: Error encountered while obtaining OIDC Access Token from STS" configuration is correct

Harness obsfucates various information from GCP OIDC connections due to security and information concerns. In order to keep customers safe even in the case of connection error, Harness obasfucates information that may help troubleshoot this kind of issue.

To start, please ensure that your Harness Environment and Google Cloud Environment are configured according to our documentation about Configure OIDC with GCP WIF for Harness Cloud builds

Next, please ensure that you have an API key for an Harness account with at least CREATE_OIDC_ID_TOKEN_PERMISSION permissions in your Harness Environment.

Attain Your Harness JSON Web Token (JWT)

In order to perform some of the tasks, you will need to attain your JSON Web Token (JWT), you will need to cURL against the Harness API endpoint with the following command:

curl --location 'https://app.harness.io/ng/api/oidc/id-token/gcp' \

--header 'accept: application/json' \

--header 'Content-Type: application/json' \

--header 'x-api-key: YOUR_API_KEY' \

--data-raw '{

"accountId": "YOUR_HARNESS_ACCOUNT_ID",

"workloadPoolId": "YOUR_CONNECTOR_WORKLOAD_POOL_ID",

"providerId": "YOUR_CONNECTOR_PROVIDER_ID",

"gcpProjectId": "YOUR_CONNECTOR_PROJECT#_ID",

"serviceAccountEmail": "YOUR_CONNECTOR_SVC_ACCOUNT_EMAIL_ID"

}'

You'll need to replace the values in capital letters with your information from the OIDC Connector/WIF Account and your Harness Environment.

Once you run the command, Harness will return a successful JWT

{

"status": "SUCCESS",

"data": "aaa9aAAaAaAAAV9AaAAAhAaAaAaAAAAaA9AaAaAaAaAaAAaA9AaAAAAAAa9AAAaAaAaAaAaAAbAaAbAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAa999AaAaAaAaAaAa-aAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaA.aAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaAaA",

"metaData": null,

"correlationId": "00a000aa-a0000-0a00-000a-0a00a0a0a000"

}

The data portion is the JWT

Compare the issuer in the JSON Web Token with what is being supplied by Harness

You can now decode your JWT token and ensure its values are correct. You can decode the JWT using a tool of your choice (such as with Python) or visit a site like https://jwt.io/, and decode the values for the payload (please note that Harness does not endorse https://jwt.io). The values should look somewhat like the following:

{

"sub": "YOUR_HARNESS_ACCOUNT_ID",

"iss": "https://app.harness.io/ng/api/oidc/account/<YOUR_HARNESS_ACCOUNT_ID>",

"aud": "https://iam.googleapis.com/projects/<YOUR_CONNECTOR_PROJECT#_ID>/locations/global/workloadIdentityPools/<YOUR_CONNECTOR_WORKLOAD_POOL_ID>/providers/<YOUR_CONNECTOR_PROVIDER_ID>",

"exp": 1750713017,

"iat": 1750711017,

"account_id": "YOUR_HARNESS_ACCOUNT_ID"

}

Now perform a cURL from a local system to Harness, using the appropriate Hostname for your Harness Cluster. Note that the source IP must be part of the allow-listed for the account.

| Cluster | HostName |

|---|---|

| Prod1/Prod2 | app.harness.io |

| Prod3 | app3.harness.io |

| Prod0/Prod4 | accounts.harness.io |

| EU clusters | accounts.eu.harness.io |

curl https://<HOSTNAME>/ng/api/oidc/account/<YOUR_HARNESS_ACCOUNT_ID>/.well-known/openid-configuration

Compare the value for iss with the issuer value from the curl command. They should match.

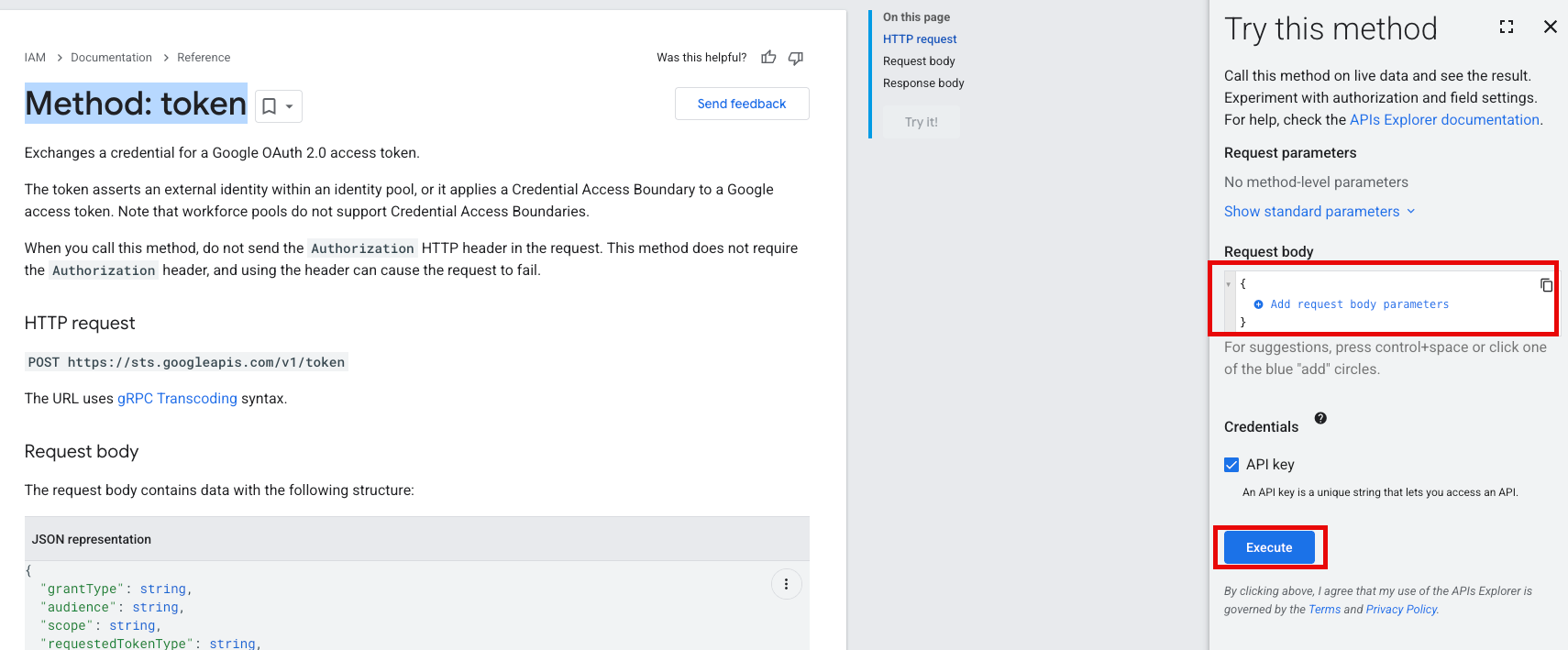

Test your JSON Web Token for issues using GCP's STS Method: Token test

GCP has a method to test a token's external identity within a pool.

Customers will need to visit the GCP site, and prepare a payload for testing.

Using the Try this Method section, add the following request body, that follows the example outlined in the GCP documentation. Take special note that the audience value for the test does not have the https: portion of the url. Including it will result in an error.

{

"grantType": "urn:ietf:params:oauth:grant-type:token-exchange",

"audience": "//iam.googleapis.com/projects/<YOUR_CONNECTOR_PROJECT#_ID>/locations/global/workloadIdentityPools/<YOUR_CONNECTOR_WORKLOAD_POOL_ID>/providers/<YOUR_CONNECTOR_PROVIDER_ID>",

"scope": "https://www.googleapis.com/auth/cloud-platform",

"requestedTokenType": "urn:ietf:params:oauth:token-type:access_token",

"subjectToken": "<YOUR_JWT_FROM_ABOVE_STEPS>",

"subjectTokenType": "urn:ietf:params:oauth:token-type:id_token"

}

Click on the Execute button. If you receive a 200 response, then your token should be set up correctly, and there should not be any issues. If there is another response, this means there is an issue with how the GCP WIF was set up, and we recommend reviewing the information in the error to help troubleshoot the issue.

When I run a build on Harness cloud, which delegate is used? Do I need to install a delegate to use Harness Cloud?

Harness Cloud builds use a delegate hosted in the Harness Cloud runner. You don't need to install a delegate in your local infrastructure to use Harness Cloud.

Can I use Harness Cloud run CD steps/stages?

No. Currently, you can't use Harness Cloud build infrastructure to run CD steps or stages. Currently, Harness Cloud is specific to Harness CI.

Can I connect to services running in a private corporate network when using Harness Cloud?

Yes. You can use Secure Connect for Harness Cloud.

With Harness Cloud build infrastructure, do I need to run DinD in a Background step to run Docker builds in a Run step?

No. Harness CI Cloud uses Harness-managed VM images that already have Docker installed. You can access these binaries by directly running Docker commands in your Run steps.

With Harness Cloud, can I cache images pulled from my internal container registry?

Currently, caching build images with Harness CI Cloud isn't supported.

When running a build in Harness cloud, does a built-in step run within a container or does it run as a VM process?

By default, a built-in step runs inside a container within the build VM.

How to fix the docker rate limiting errors while pulling the Harness internal images when the build is running on Harness cloud?

You could update the deafult docker connector harnessImage and point it to the Harness internal GAR/ECR as mentioned in the doc

Kubernetes clusters

What is the difference between a Kubernetes cluster build infrastructure and other build infrastructures?

For a comparison of build infrastructures go to Which build infrastructure is right for me.

For requirements, recommendations, and settings for using a Kubernetes cluster build infrastructure, go to:

- Set up a Kubernetes cluster build infrastructure

- Build and push artifacts and images - Kubernetes cluster build infrastructures require root access

- CI Build stage settings - Infrastructure - Kubernetes tab

Can I run Docker commands on a Kubernetes cluster build infrastructure?

If you want to run Docker commands when using a Kubernetes cluster build infrastructure, Docker-in-Docker (DinD) with privileged mode is required. For instructions, go to Run DinD in a Build stage.

If your cluster doesn't support privileged mode, you must use a different build infrastructure option, such as Harness Cloud, where you can run Docker commands directly on the host without the need for Privileged mode. For more information, go to Set up a Kubernetes cluster build infrastructure - Privileged mode is required for Docker-in-Docker.

Can I use Istio MTLS STRICT mode with Harness CI?

Yes, but you must create a headless service for Istio MTLS STRICT mode.

How can you execute Docker commands in a CI pipeline that runs on a Kubernetes cluster that lacks a Docker runtime?

You can run Docker-in-Docker (DinD) as a service with the sharedPaths set to /var/run. Following that, the steps can be executed as Docker commands. This works regardless of the Kubernetes container runtime.

The DinD service does not connect to the Kubernetes node daemon. It launches a new Docker daemon on the pod, and then other containers use that Docker daemon to run their commands.

For details, go to Run Docker-in-Docker in a Build stage.

Resource allocation for Kubernetes cluster build infrastructure

You can adjust CPU and memory allocation for individual steps running on a Kubernetes cluster build infrastructure or container. For information about how resource allocation is calculated, go to Resource allocation.

What is the default CPU and memory limit for a step container?

For default resource request and limit values, go to Build pod resource allocation.

Why do steps request less memory and CPU than the maximum limit? Why do step containers request fewer resources than the limit I set in the step settings?

By default, resource requests are always set to the minimum, and additional resources (up to the specified maximum limit) are requested only as needed during build execution. For more information, go to Build pod resource allocation.

How do I configure the build pod to communicate with the Kubernetes API server?

By default, the namespace's default service account is auto-mounted on the build pod, through which it can communicate with API server. To use a non-default service account, specify the Service Account Name in the Kubernetes cluster build infrastructure settings.

Do I have to mount a service account on the build pod?

No. Mounting a service account isn't required if the pod doesn't need to communicate with the Kubernetes API server during pipeline execution. To disable service account mounting, deselect Automount Service Account Token in the Kubernetes cluster build infrastructure settings.

What types of volumes can be mounted on a CI build pod?

You can mount many types of volumes, such as empty directories, host paths, and persistent volumes, onto the build pod. Use the Volumes in the Kubernetes cluster build infrastructure settings to do this.

How can I run the build pod on a specific node?

Use the Node Selector setting to do this.

It is possible to make a toleration configuration at the project, org, or account level?

Tolerations in a Kubernetes cluster build infrastructure can only be set at the stage level.

I want to use an EKS build infrastructure with an AWS connector that uses IRSA

You need to set the Service Account Name in the Kubernetes cluster build infrastructure settings.

If you get error checking push permissions or similar, go to the Build and Push to ECR error article.

Why are build pods being evicted?

Harness CI pods shouldn't be evicted due to autoscaling of Kubernetes nodes because Kubernetes doesn't evict pods that aren't backed by a controller object. However, build pods can be evicted due to CPU or memory issues in the pod or using spot instances as worker nodes.

If you notice either sporadic pod evictions or failures in the Initialize step in your Build logs, add the following Annotation to your Kubernetes cluster build infrastructure settings:

"cluster-autoscaler.kubernetes.io/safe-to-evict": "false"

I can't use Kubernetes autoscaling to distribute the pipeline workload.

In a Build stage, Harness creates a pod and launches each step in a container within the pod.

Harness reserves node resources based on the pipeline configuration.

Even if you enable autoscaling on your cluster, the pipeline uses resources from one node only.

AKS builds timeout

Azure Kubernetes Service (AKS) security group restrictions can cause builds running on an AKS build infrastructure to timeout.

If you have a custom network security group, it must allow inbound traffic on port 8080, which the delegate service uses.

For more information, refer to the following Microsoft Azure troubleshooting documentation: A custom network security group blocks traffic.

How do I set the priority class level? Can I prioritize my build pod if there are resource shortages on the host node?

Use the Priority Class setting to ensure that the build pod is prioritized in cases of resource shortages on the host node.

What's the default priority class level?

If you leave the Priority Class field blank, the PriorityClass is set to the globalDefault, if your infrastructure has one defined, or 0, which is the lowest priority.

Can I transfer files into my build pod?

To do this, use a script in a Run step.

Can I mount an existing Kubernetes secret into the build pod?

Currently, Harness doesn't offer built-in support for mounting existing Kubernetes secrets into the build pod.

How are step containers named within the build pod?

Step containers are named sequentially starting with step-1.

When I run a build, Harness creates a new pod and doesn't run the build on the delegate

This is the expected behavior. When you run a Build (CI) stage, each step runs on a new build farm pod that isn't connected to the delegate.

What permissions are required to run CI builds in an OpenShift cluster?

For information about building on OpenShift clusters, go to Permissions Required and OpenShift Support in the Kubernetes Cluster Connector Settings Reference.

What are the minimum permissions required for the service account role for a Kubernetes Cluster connector?

For information about permissions required to build on Kubernetes clusters, go to Permissions Required in the Kubernetes Cluster Connector Settings Reference.

How does the build pod communicate with the delegate? What port does the lite-engine listen on?

The delegate communicates to the temp pod created by the container step through the build pod IP. Build pods have a lite engine running on port 20001.

Experiencing OOM on java heap for the delegate

Check CPU utilization and try increasing the CPU request and limit amounts.

Your Java options must use UseContainerSupport instead of UseCGroupMemoryLimitForHeap, which was removed in JDK 11.

I have multiple delegates in multiple instances. How can I ensure the same instance is used for each step?

Use single replica delegates for tasks that require the same instance, and use a delegate selector by delegate name. The tradeoff is that you might have to compromise on your delegates' high availability.

Delegate is unable to connect to the created build farm

If you get this error when using a Kubernetes cluster build infrastructure, and you have confirmed that the delegate is installed in the same cluster where the build is running, you may need to allow port 20001 in your network policy to allow pod-to-pod communication.

If the delegate is unable to connect to the created build farm with Istio MTLS STRICT mode, and you are seeing that the pod is removed after a few seconds, you might need to add Istio ProxyConfig with "holdApplicationUntilProxyStarts": true. This setting delays application start until the pod is ready to accept traffic so that the delegate doesn't attempt to connect before the pod is ready.

For more delegate and Kubernetes troubleshooting guidance, go to Troubleshooting Harness.

Why aren't Harness CI Steps like Git clone inheriting my proxy settings from the Delegate, and how can I configure a proxy for these steps?

CI steps such as Git Clone don't automatically inherit the proxy settings configured on the Harness Delegate. This is because these steps run inside separate build pods, and proxy-related environment variables need to be explicitly passed down to those pods.

To ensure your CI steps work with your proxy setup, you must configure the following environment variables on the Delegate:

PROXY_HOST

PROXY_PORT

PROXY_SCHEME

These variables allow Harness to propagate the proxy configuration to build pods so that operations like cloning a Git repository can route through the correct proxy.

For a complete list of variables and instructions on setting up proxy configurations for your Delegate, refer to the Configure delegate proxy settings documentation

If my pipeline consists of multiple CI stages, are all the steps across different stages executed within the same build pod?

No. Each CI stage execution triggers the creation of a new build pod. The steps within a stage are then carried out within the stage's dedicated pod. If your pipeline has multiple CI stages, distinct build pods are generated for each individual stage.

When does the cleanup of build pods occur? Does it happen after the entire pipeline execution is finished?

Build pod cleanup takes place immediately after the completion of a stage's execution. This is true even if there are multiple CI stages in the same pipeline; as each build stage ends, the pod for that stage is cleaned up.

Is the build pod cleaned up in the event of a failed stage execution?

Yes, the build pod is cleaned up after stage execution, regardless of whether the stage succeeds or fails.

How do I know if the pod cleanup task fails?

To help identify pods that aren't cleaned up after a build, pod deletion logs include details such as the cluster endpoint targeted for deletion. If a pod can't be located for cleanup, then the logs include the pod identifier, namespace, and API endpoint response from the pod deletion API. You can find logs in the Build details.

Can I clean up container images already present on the nodes where build pods are scheduled?

To clean up cached images, you can execute commands like docker image prune or docker system prune -a, depending on the container runtime used on the Kubernetes nodes.

Perform this cleanup task outside of Harness, following the usual processes for clearing cached or unused container images from worker nodes.

Can I use an ECS cluster for my Kubernetes cluster build infrastructure?

Currently, Harness CI doesn't support running CI builds on ECS clusters.

Can I use a Docker delegate with a Kubernetes cluster build infrastructure?

Yes, if the Kubernetes connector is configured correctly. For more information, go to Use delegate selectors with Kubernetes cluster build infrastructure.

How can I configure the kaniko flag --skip-unused-stages in the built-in Build and Push step?

You can set the kaniko flags as an environment variable in the build and push step. For more information, go to Environment Variables (plugin runtime flags)

Does the built in Build and Push step now support all the kainko flags?

Yes, all the kaniko flags are supported and they can be added as the environment variables to the build and push step

Can I add additional docker options with the container being started via background step in k8s build, such as the option to mount a volume or attach the container to a specific docker network?

Adding additional Docker options when starting the container via a background step is not supported

Why Harness internal container lite-engine is requesting a huge cpu/memory within the build pod?

Lite-engine consumes very minimal compute resource however it reserves the resource for the other step containers. More details about how the resources are allocated within the build pod can be reffered in the doc

Why the build pod status is showing "not ready" in k8s cluster while the build is running?

Each step container will be terminated as soon the step execution has been completed. Since there could be containers in terminated state while the build is running, k8s would show the pod state as "not ready" which can be ignored.

We have a run step configured with an image that has few scripts in the container filesystem. Why don’t we see these files when Harness is starting this container during the execution?

This could happen if you are configuring the shared path in the CI stage with the same path or mount any other type of volumes in the build pod at the same path.

Can we add barriers in CI stage?

No, barrier is not currently supported in CI stage

How to set the kaniko flag "--reproducible" in the build and push step?

Kaniko flags can be configured as environment variables in build and push step. More details about the same can be referred in the doc

How can we configure the build and push to ECR step to pull the base image configured in dockerfile from a private container registry?

You can configure the base connector in the build and push step and Harness will use the creds configured in this connector to pull the private image during runtime

Does the output variable configured in the run step get exported even if the step execution fails?

No, the output variable configured in the run step does not get exported if the step execution fails

Why is the Exporting of output variable from a run step is failing with the error "* stat /tmp/engine/xxxxxxx-output.env: no such file or directory" even after the step executed successfully ?

When an output variable is configured in a run step, Harness adds a command at the end of the script to write the variable's value to a temp file, from which the output variable is processed. The error occurs if you manually exit the script with 'exit 0' before all commands are executed. Avoid calling 'exit 0' in the script if you are exporting an output variable.

Can we use docker compose to start multiple containers when we run the build in k8s cluster?

You can use docker compose while running the build in k8s cluster however you need to run the dind as a background step as detailed in the doc

When we start a container by running docker run command from a run step, does the new container get the environment variables configured in the run step?

No, ENV variables configured in the run step will not be available within the new container, you need to manually pass the required ENV variable while starting the container

How can we trigger a CI pipeline from a specific commit?

We can not execute the CI pipeline from a specific commit however you could create a branch or tag based on the required commit and then run the pipeline from the new branch or tag

How can we use the jfrog docker registry in the build and push step to docker step?

You can configure the docker connector using the jfrog docker registry URL and then use this connector in the build and push to docker step.

Why is the Build and Push to Docker step configured with the JFrog Docker connector not using the JFrog endpoint while pushing the image, instead defaulting to the Docker endpoint?

This could happen when the docker repository in the build and push step is not configured with the FQN. The repository should be configured with the FQN including the jfrog endpoint.

Why the execution is getting aborted without any reason and the "applied by" field is showing trigger?

This could happen when the PR/push trigger is configured with the 'Auto-abort Previous Execution' option, which will automatically cancel active builds started by the same trigger when a branch or PR is updated

Can we add the config "topologySpreadConstraints" in the build pod which will help CI pod to spread across different AZs?

Harness CI now includes a new property, podSpecOverlay, in the Kubernetes infrastructure properties of the CI stage. This allows users to apply additional settings to the build pod. Currently, we support specifying topologySpreadConstraint in this field. Please see doc

Why the docker commands in the run step is failing with the error "Cannot connect to the Docker daemon at unix:///var/run/docker.sock?" even after the dind background step logs shows that the docker daemon has been initialized?

This could happen if the folders /var/lib/docker and /var/run are not added under the shared path in the CI stage. More details about the dind config can be referred in the doc

Why the build using dind is failing with out of memory error even after enough memory is configured in the run step where the docker commands are running?

When dind is used, the build will run on the dind container instead of the step container where Docker commands are executed. Therefore, if an out-of-memory error occurs during the build on dind, the resources on the dind container need to be updated

Why the docker commands are failing on the run step with the error "command not found: docker" even if we have the dind running as background step?

This happens when the step container configured in the run step doesnt have docker cli installed

How can we use buildx while running the build in k8s build infra?

The built-in build and push step use kaniko to perform the build in k8s build infra. We can configure dind build to use buildx while running the build in k8s infra More details about the dind config can be referred in the doc

Do we need to have both ARM and AMD build infrastructure to build multiarch images using built-in build and push step in k8s infra?

Yes, we need to have one stage running on ARM and another stage running on AMD to build both ARM and AMD images using the built-in build and push step in k8s infra. More details including a sample pipeline can be referred in the doc

Does Harness CI support AKS 1.28.3 version?

Yes

Self-signed certificates

Can I mount internal CA certs on the CI build pod?

Yes. To do this with a Kubernetes cluster build infrastructure, go to Configure a Kubernetes build farm to use self-signed certificates.

Can I use self-signed certs with local runner build infrastructure?

With a local runner build infrastructure, you can use CI_MOUNT_VOLUMES to use self-signed certificates. For more information, go to Set up a local runner build infrastructure.

How do I make internal CA certs available to the delegate pod?

There are multiple ways you can do this:

- Build the delegate image with the certs baked into it, if you are custom building the delegate image.

- Create a secret/configmap with the certs data, and then mount it on the delegate pod.

- Run commands in the

INIT_SCRIPTto download the certs while the delegate launches and make them available to the delegate pod file system.

Where should I mount internal CA certs on the build pod?

The usage of the mounted CA certificates depends on the specific container image used for the step. The default certificate location depends on the base image you use. The location where the certs need to be mounted depends on the container image being used for the steps that you intend to run on the build pod.

Git connector SCM connection errors when using self-signed certificates

If you have configured your build infrastructure to use self-signed certificates, your builds may fail when the code repo connector attempts to connect to the SCM service. Build logs may contain the following error messages:

Connectivity Error while communicating with the scm service

Unable to connect to Git Provider, error while connecting to scm service

To resolve this issue, add SCM_SKIP_SSL=true to the environment section of the delegate YAML. For example, here is the environment section of a docker-compose.yml file with the SCM_SKIP_SSL variable:

environment:

- ACCOUNT_ID=XXXX

- DELEGATE_TOKEN=XXXX

- MANAGER_HOST_AND_PORT=https://app.harness.io

- DELEGATE_NAME=test

- NEXT_GEN=true

- DELEGATE_TYPE=DOCKER

- SCM_SKIP_SSL=true

For more information about self-signed certificates, delegates, and delegate environment variables, go to:

- Delegate environment variables

- Docker delegate environment variables

- Install delegates

- Set up a local runner build infrastructure

- Configure a Kubernetes build farm to use self-signed certificates

Certificate volumes aren't mounted to the build pod

If the volumes are not getting mounted to the build containers, or you see other certificate errors in your pipeline, try the following:

-

Add a Run step that prints the contents of the destination path. For example, you can include a command such as:

cat /kaniko/ssl/certs/additional-ca-cert-bundle.crt -

Double-check that the base image used in the step reads certificates from the same path given in the destination path on the Delegate.

pnpm enters infinite loop without logs

If your pipeline runs pnpm or npm commands that cause it to enter an infinite loop or wait indefinitely without producing logs, try adding the following command to your script to see if this allows the build to proceed:

npm config set strict-ssl false

If your pnpm commands are waiting for user input. Try using the append-only flag.

How do I use a self-signed certificate for a private Docker registry in the Build and Push to Docker Registry step?

To use a self-signed certificate for a private Docker registry in the Build and Push to Docker Registry step, you need to ensure that the runner (or build infrastructure) trusts the certificate. Since the Build and Push to Docker Registry step does not provide a direct way to configure self-signed certificates, you can use a Run step before it to manually add the certificate.

Example: Using a Run step to configure the self-signed certificate

Before running the Build and Push to Docker Registry step, add a Run step in your pipeline and use the following example to set up the self-signed certificate:

- step:

type: Run

name: Run_1

identifier: Run_1

spec:

shell: Sh

command: |-

# Write the certificate content to a file

echo '<+secrets.getValue("crt")>' > ca.crt

# Create the Docker certificates directory for the private registry

mkdir -p /etc/docker/certs.d/PRIVATE_DOCKER_REGISTRY_SERVER:PRIVATE_DOCKER_REGISTRY_PORT

# Copy the certificate to the Docker certificates directory

cp ca.crt /etc/docker/certs.d/PRIVATE_DOCKER_REGISTRY_SERVER:PRIVATE_DOCKER_REGISTRY_PORT

# Update the system's trusted certificate store (for Ubuntu/Debian)

update-ca-certificates

# Update the system's trusted certificate store (for CentOS/RHEL/Fedora)

update-ca-trust

Use another Run step to test the connection:

- step:

type: Run

name: Run_2

identifier: Run_2

spec:

shell: Sh

command: |-

docker login PRIVATE_DOCKER_REGISTRY_SERVER:PRIVATE_DOCKER_REGISTRY_PORT --username SOMEUSERNAME --password SOMEPASSWORD

Windows builds

Error when running Docker commands on Windows build servers

Make sure that the build server has the Windows Subsystem for Linux installed. This error can occur if the container can't start on the build system.

Docker commands aren't supported for Windows builds on Kubernetes cluster build infrastructures.

Is rootless configuration supported for builds on Windows-based build infrastructures?

No, currently this is not supported for Windows builds.

What is the default user set on the Windows Lite-Engine and Addon image? Can I change it?

The default user for these images is ContainerAdministrator. For more information, go to Run Windows builds in a Kubernetes build infrastructure - Default user for Windows builds.

Can I use custom cache paths on a Windows platform with Cache Intelligence?

Yes, you can use custom cache paths with Cache Intelligence on Windows platforms.

How do I specify the disk size for a Windows instance in pool.yml?

With self-managed VM build infrastructure, the disk configuration in your pool.yml specifies the disk size (in GB) and type.

For example, here is a Windows pool configuration for an AWS VM build infrastructure:

version: "1"

instances:

- name: windows-ci-pool

default: true

type: amazon

pool: 1

limit: 4

platform:

os: windows

spec:

account:

region: us-east-2

availability_zone: us-east-2c

access_key_id:

access_key_secret:

key_pair_name: XXXXX

ami: ami-088d5094c0da312c0

size: t3.large ## VM machine size.

hibernate: true

network:

security_groups:

- sg-XXXXXXXXXXXXXX

disk:

size: 100 ## Disk size in GB.

type: "pd-balanced"

Step continues running for a long time after the command is complete

In Windows builds, if the primary command in a Powershell script starts a long-running subprocess, the step continues to run as long as the subprocess exits (or until it reaches the step timeout limit). To resolve this:

- Check if your command launches a subprocess.

- If it does, check whether the process is exiting, and how long it runs before exiting.

- If the run time is unacceptable, you might need to add commands to sleep or force exit the subprocess.

Example: Subprocess with two-minute life

Here's a sample pipeline that includes a Powershell script that starts a subprocess. The subprocess runs for no more than two minutes.

pipeline:

identifier: subprocess_demo

name: subprocess_demo

projectIdentifier: default

orgIdentifier: default

tags: {}

stages:

- stage:

identifier: BUild

type: CI

name: Build

spec:

cloneCodebase: true

execution:

steps:

- step:

identifier: Run_1

type: Run

name: Run_1

spec:

connectorRef: YOUR_DOCKER_CONNECTOR_ID

image: jtapsgroup/javafx-njs:latest

shell: Powershell

command: |-

cd folder

gradle --version

Start-Process -NoNewWindow -FilePath "powershell" -ArgumentList "Start-Sleep -Seconds 120"

Write-Host "Done!"

resources:

limits:

memory: 3Gi

cpu: "1"

infrastructure:

type: KubernetesDirect

spec:

connectorRef: YOUR_KUBERNETES_CLUSTER_CONNECTOR_ID

namespace: YOUR_KUBERNETES_NAMESPACE

initTimeout: 900s

automountServiceAccountToken: true

nodeSelector:

kubernetes.io/os: windows

os: Windows

caching:

enabled: false

paths: []

properties:

ci:

codebase:

connectorRef: YOUR_CODEBASE_CONNECTOR_ID

build:

type: branch

spec:

branch: main

Concatenated variable values in PowerShell scripts print to multiple lines

If your PowerShell script (in a Run step) echoes a stage variable that has a concatenated value that includes a ToString representation of a PowerShell object (such as the result of Get-Date), this output might unexpectedly print to multiple lines in the build logs.

To resolve this, exclude the ToString portion from the stage variable's concatenated value, and then, in your PowerShell script, call ToString separately and "manually concatenate" the values. Expand the sections below to learn more about the cause and solution for this issue.

What causes unexpected multiline output from PowerShell scripts?

For example, the following two stage variables include one variable that has a ToString value and another variable that concatenates three expressions into a single expression, including the ToString value.

variables:

- name: DATE_FORMATTED ## This variable's value is 'ToString' output.

type: String

description: ""

required: false

value: (Get-Date).ToString("yyyy.MMdd")

- name: BUILD_VAR ## This variable's value concatenates the execution ID, the sequence ID, and the value of DATE_FORMATTED.

type: String

description: ""

required: false

value: <+<+pipeline.executionId>+"-"+<+pipeline.sequenceId>+"-"+<+stage.variables.DATE_FORMATTED>>

When a PowerShell script calls the concatenated variable, such as echo <+pipeline.stages.test.variables.BUILD_VAR>, the ToString portion of the output prints on a separate line from the rest of the value, despite being part of one concatenated expression.

How do I fix unexpected multiline output from PowerShell scripts?

To resolve this, exclude the ToString portion from the stage variable's concatenated value, and then, in your PowerShell script, call ToString separately and "manually concatenate" the values.

For example, here are the two stage variables from the previous example without the ToString value in the concatenated expression.

variables:

- name: DATE_FORMATTED ## This variable is unchanged.

type: String

description: ""

required: false

value: (Get-Date).ToString("yyyy.MMdd")

- name: BUILD_VAR ## This variable's value concatenates only the execution ID and sequence ID. It no longer includes DATE_FORMATTED.

type: String

description: ""

required: false

value: <+<+pipeline.executionId>+"-"+<+pipeline.sequenceId>>

In the Run step's PowerShell script, call the ToString value separately and then "manually concatenate" it onto the concatenated expression. For example:

- step:

identifier: echo

type: Run

name: echo

spec:

shell: Powershell

command:

|- ## DATE_FORMATTED is resolved separately and then appended to BUILD_VAR.

$val = <+stage.variables.DATE_FORMATTED>

echo <+pipeline.stages.test.variables.BUILD_VAR>-$val

User data isn't running on AWS Windows Server 2022 VM Pool

Windows only runs User Data during initialization. To fix this, go to C:\ProgramData\Amazon\EC2Launch\state and delete the .run-once file. This file is generated after the Windows VM initializes. On startup Windows will check for this file and decide whether or not to run the User Data script. If this file is not present, Windows will run the User Data script.

How do I install Docker on Windows?

To install Docker on Windows, run:

Invoke-WebRequest -UseBasicParsing "https://raw.githubusercontent.com/microsoft/Windows-Containers/Main/helpful_tools/Install-DockerCE/install-docker-ce.ps1" -o install-docker-ce.ps1

.\install-docker-ce.ps1

More information on this can be found in the Microsoft Documentation.

Do I need to enable Hyper V for AWS Windows VM Pool?

Hyper V is not required to run Harness Builds in a Windows VM Pool. Hyper V is a requirement for Docker Desktop and Docker Desktop is not required for self-managed Windows VM build infrastructure.

Do I need to install WSL for AWS Windows VM Pool?

WSL is not required to run Harness Builds in a Windows VM Pool. WSL is a requirement for Docker Desktop and Docker Desktop is not required for Windows Self-Managed Build Infrastructure.

How do I check the logs for Windows Server 2022 when using EC2Launchv2

Logs are generated in the C:\ProgramData\Amazon\EC2Launch\log directory for EC2Launchv2. To view startup logs, check the C:\ProgramData\Amazon\EC2Launch\log\agent file. For any errors check the C:\ProgramData\Amazon\EC2Launch\log\err file.

Default user, root access, and run as non-root

Which user does Harness use to run steps like Git Clone, Run, and so on? What is the default user ID for step containers?

Harness uses user 1000 by default. You can use a step's Run as User setting to use a different user for a specific step.

Can I enable root access for a single step?

If your build runs as non-root (meaning you have set runAsNonRoot: true in your build infrastructure settings), you can run a specific step as root by setting Run as User to 0 in the step's settings. This setting uses the root user for this specific step while preserving the non-root user configuration for the rest of the build. This setting is not available for all build infrastructures, as it is not applicable to all build infrastructures.

When I try to run as non-root, the build fails with "container has runAsNonRoot and image has non-numeric user (harness), cannot verify user is non-root"

This error occurs if you enable Run as Non-Root without configuring the default user ID in Run as User. For more information, go to CI Build stage settings - Run as non-root or a specific user.

Codebases

What is a codebase in a Harness pipeline?

The codebase is the Git repository where your code is stored. Pipelines usually have one primary or default codebase. If you need files from multiple repos, you can clone additional repos.

How do I connect my code repo to my Harness pipeline?

For instructions on configuring your pipeline's codebase, go to Configure codebase.

What permissions are required for GitHub Personal Access Tokens in Harness GitHub connectors?

For information about configuring GitHub connectors, including required permissions for personal access tokens, go to the GitHub connector settings reference.

Can I skip the built-in clone codebase step in my CI pipeline?

Yes, you can disable the built-in clone codebase step for any Build stage. For instructions, go to Disable Clone Codebase for specific stages.

Can I configure a failure strategy for a built-in clone codebase step?

No, you can't configure a failure strategy for the built-in clone codebase step. If you have concerns about clone failures, you can disable Clone Codebase, and then add a Git Clone step with a step failure strategy at the beginning of each stage where you need to clone your codebase.

Can I recursively clone a repo?

Yes. You can use Include Submodules option under Configure Codebase or Git Clone step to clone submodules recursively.

Can I clone a specific subdirectory rather than an entire repo?

Yes. For instructions, go to Clone a subdirectory.

Does the built-in clone codebase step fetch all branches? How can I fetch all branches?

You can use Fetch Tags option under Configure Codebase or Git Clone step to fetch all the new commits, branches, and tags from the remote repository. Setting this to true by checking the box is equivalent to adding the --tags flag.

Can I clone a different branch in different Build stages throughout the pipeline?

Yes. Refer to the Build Type, Branch Name, and Tag Name configuration options for the Git Clone step to specify a Branch Name or Tag Name in the stage's settings.

Can I clone the default codebase to a different folder than the root?

Yes. Refer to the Clone Directory options under the Configure Codebase or Git Clone step documentation to enter an optional target path in the stage workspace where you want to clone the repo.

What is the default clone depth setting for CI builds?

For information about the default clone depth setting, go to Configure codebase - Depth.

Can I change the depth of the built-in clone codebase step?

Yes. Use the Depth setting to do this.

How can I reduce clone codebase time?

There are several strategies you can use to improve codebase clone time:

- Depending on your build infrastructure, you can set Limit Memory to

1Giin your codebase configuration. - For builds triggered by PRs, set the Pull Request Clone Strategy to Source Branch and set Depth to

1. - If you don't need the entire repo contents for your build, you can disable the built-in clone codebase step and use a Run step to execute specific

git clonearguments, such as to clone a subdirectory.

What codebase environment or payload variables/expressions are available to use in triggers, commands, output variables, or otherwise?

For a list of <+codebase.*> and similar expressions you can use in your build triggers and otherwise, go to the CI codebase variables reference.

What expression can I use to get the repository name and the project/organization name for a trigger?

You can use the expressions <+eventPayload.repository.name> or <+trigger.payload.repository.name> to reference the repository name from the incoming trigger payload.

If you want both the repo and project name, and your Git provider's webhook payload doesn't include a single payload value with both names, you can concatenate two expressions together, such as <+trigger.payload.repository.project.key>/<+trigger.payload.repository.name>.

For more information about available codebase expressions, go to the CI codebase variables reference.

The expression eventPayload.repository.name causes the clone step to fail when used with a Bitbucket account connector.

Try using the expression <+trigger.payload.repository.name> instead.

Codebase expressions aren't resolved or resolve to null.

Empty or null values primarily occur due to the build type (tag, branch, or PR) and start conditions (manual or automated trigger). For example, <+codebase.branch> is always null for tag builds, and <+trigger.*> expressions are always null for manual builds.

Other possible causes for null values are that the connector doesn't have API access enabled in the connector's settings or that your pipeline doesn't use the built-in clone codebase step.

For more information about when codebase expressions are resolved, go to CI codebase variables reference.

How can I share the codebase configuration between stages in a CI pipeline?

The pipeline's default codebase is automatically available to each subsequent Build stage in the pipeline. When you add additional Build stages to a pipeline, Clone Codebase is enabled by default, which means the stage clones the default codebase declared in the first Build stage.

If you don't want a stage to clone the default codebase, you can disable Clone Codebase for specific stages.

The same Git commit is not used in all stages

If your pipeline has multiple stages, each stage that has Clone Codebase enabled clones the codebase during stage initialization. If your pipeline uses the generic Git connector and a commit is made to the codebase after a pipeline run has started, it is possible for later stages to clone the newer commit, rather than the same commit that the pipeline started with.

If you want to force all stages to use the same commit ID, even if there are changes in the repository while the pipeline is running, you must use a code repo connector for a specific SCM provider, rather than the generic Git connector.

Git fetch fails with invalid index-pack output when cloning large repos

- Error: During the Initialize step, when cloning the default codebase,

git fetchthrowsfetch-pack: invalid index-pack output. - Cause: This can occur with large code repos and indicates that the build machine might have insufficient resources to clone the repo.

- Solution: To resolve this, edit the pipeline's YAML and allocate

memoryandcpuresources in thecodebaseconfiguration. For example:

properties:

ci:

codebase:

connectorRef: YOUR_CODEBASE_CONNECTOR_ID

repoName: YOUR_CODE_REPO_NAME

build:

type: branch

spec:

branch: <+input>

sslVerify: false

resources:

limits:

memory: 4G ## Set the maximum memory to use. You can express memory as a plain integer or as a fixed-point number using the suffixes `G` or `M`. You can also use the power-of-two equivalents `Gi` and `Mi`. The default is `500Mi`.

cpu: "2" ## Set the maximum number of cores to use. CPU limits are measured in CPU units. Fractional requests are allowed; for example, you can specify one hundred millicpu as `0.1` or `100m`.

Clone codebase fails due to missing plugin

- Error: Git clone fails during stage initialization, and the runner's logs contain

Error response from daemon: plugin \"<plugin>\" not found - Platform: This error can occur in build infrastructures that use a Harness Docker Runner, such as the local runner build infrastructure or the VM build infrastructures.

- Cause: A required plugin is missing from your build infrastructure container's Docker installation. The plugin is required to configure Docker networks.

- Solution:

- On the machine where the runner is running, stop the runner.

- Set the

NETWORK_DRIVERenvironment variable to your preferred network driver plugin (such asexport NETWORK_DRIVER="nat"orexport NETWORK_DRIVER="bridge"). For Windows, use PowerShell variable syntax, such as$Env:NETWORK_DRIVER="nat"or$Env:NETWORK_DRIVER="bridge". - Restart the runner.

How do I configure the Git Clone step? What is the Clone Directory setting?

For details about Git Clone step settings, go to:

Does Harness CI support Git Large File Storage (git-lfs)?

Yes. Under Configure Codebase or Git Clone step, Set Download LFS Files to true to download Git-LFS files.

Can I run git commands in a CI Run step?

Yes. You can run any commands in a Run step. With respect to Git, for example, you can use a Run step to clone multiple code repos in one pipeline, or clone a subdirectory.

How do I handle authentication for git commands in a Run step?

You can store authentication credentials as secrets and use expressions, such as <+secrets.getValue("YOUR_TOKEN_SECRET")>, to call them in your git commands.

You could also pull credentials from a git connector used elsewhere in the pipeline.

Can I use codebase variables when cloning a codebase in a Run step?

No. Codebase variables are resolved only for the built-in Clone Codebase functionality. These variables are not resolved for git commands in Run steps or Git Clone steps.

Git connector fails to connect to the SCM service. SCM request fails with UNKNOWN

This error may occur if your code repo connector uses SSH authentication. To resolve this error, make sure HTTPS is enabled on port 443. This is the protocol and port used by the Harness connection test for Git connectors.

Also, SCM service connection failures can occur when using self-signed certificates.

How can I see which files I have cloned in the codebase?

You can add a Run step to the beginning of your Build stage that runs ls -ltr. This returns all content cloned by the Clone Codebase step.

Why is the codebase connector config not saved?

Changes to a pipeline's codebase configuration won't save if all CI stages in the pipeline have Clone Codebase disabled in the Build stage's settings.

Can I get a list of all branches available for a manual branch build?

This is not available in Harness.

Can I configure a trigger or manual tag build that pulls the second-to-last Git tag?

There is no built-in functionality for this.

Depending on your tag naming convention, if it is possible to write a regex that could resolve correctly for your repo, then you could configure a trigger to do this.

For manual tag builds, you need to enter the tag manually at runtime.

How can we set ENV variable for the git clone step as there is no option available in the UI to set the ENV variable for this step?

Yes. Refer to the Pre Fetch Command under the Configure Codebase or Git Clone step documentation to specify any additional Git commands to run before fetching the code.

SCM status updates and PR checks

Does Harness supports Pull Request status updates?

Yes. Your PRs can use the build status as a PR status check. For more information, go to SCM status checks.

How do I configure my pipelines to send PR build validations?

For instructions, go to SCM status checks - Pipeline links in PRs.

What connector does Harness uses to send build status updates to PRs?

Harness uses the pipeline's codebase connector, specified in the pipeline's default codebase configuration to send status updates to PRs in your Git provider.

Can I use the Git Clone step, instead of the built-in clone codebase step, to get build statues on my PRs?

No. You must use the built-in clone codebase step (meaning, you must configure a default codebase) to get pipeline links in PRs.

Pipeline status updates aren't sent to PRs

Harness uses the pipeline's codebase connector to send status updates to PRs in your Git provider. If status updates aren't being sent, make sure that you have configured a default codebase and that it is using the correct code repo connector. Also make sure the build that ran was a PR build and not a branch or tag build.

Build statuses don't show on my PRs, even though the code base connector's token has all repo permissions.

If the user account used to generate the token doesn't have repository write permissions, the resulting token won't have sufficient permissions to post the build status update to the PR. Specific permissions vary by connector. For example, GitHub connector credentials require that personal access tokens have all repo, user, and admin:repo_hook scopes, and the user account used to generate the token must have admin permissions on the repo.

For repos under organizations or projects, check the role/permissions assigned to the user in the target repository. For example, a user in a GitHub organization can have some permissions at the organization level, but they might not have those permissions at the individual repository level.

Why does the status check for my PR redirect to a different PR's build Harness?

This issue occurs when two PRs are created for the same commit ID. Harness CI associates builds with commits rather than specific PRs. If multiple PRs share the same commit, the latest build will replace the previous one, causing the build check on the earlier PR to redirect to the newer PR’s build. To resolve this, push a new commit to the affected PR and rebuild it. This creates a unique commit, ensuring the build check redirects to the correct PR.

Can I export a failed step's output to a pull request comment?

To do this, you could:

- Modify the failed step's command to save output to a file, such as

your_command 2>&1 | tee output_file.log. - After the failed step, add a Run step that reads the file's content and uses your Git provider's API to export the file's contents to a pull request comment.

- Configure the subsequent step's conditional execution settings to Always execute this step.

Does my pipeline have to have a Build stage to get the build status on the PR?

Yes, the build status is updated on a PR only if a Build (CI) stage runs.

My pipeline has multiple Build stages. Is the build status updated for each stage or for the entire pipeline?

The build status on the PR is updated for each individual Build stage.

How is the build status updated for parallel chained pipelines?

For chained pipelines, if you use a looping strategy to execute the same chained pipeline in parallel, the build status is overwritten due to the parallel chained pipelines having identical pipeline and stage IDs.

To prevent parallel chained pipelines from overwriting one another, you can try these strategies:

- Create a pipeline template for your chained pipeline, create multiple pipelines from this template that have unique pipeline identifiers, and then add those separate pipelines in parallel within the parent pipeline.

- Add a custom SCM status check at the end of the chained pipeline's Build stage that manually updates the PR with the build status. Make sure this step alway runs, regardless of the outcome of previous steps or stages.

My pipeline has multiple Build stages, and I disabled Clone Codebase for some of them. Why is the PR status being updated for the stages that don't clone my codebase?

Currently, Harness CI updates the build status on a PR even if you disabled Clone Codebase for some build stages. We are investigating enhancements that could change this behavior.

Is there any character limit for the PR build status message?

Yes. For GitHub, the limit is 140 characters. If the message is too long, the request fails with description is too long (maximum is 140 characters).

What identifiers are included in the PR build status message?

The pipeline identifier and stage identifier are included in the build status message.

What is the format and content of the PR build status message?

The PR build status message format is PIPELINE_ID-STAGE_ID — Execution status of Pipeline - PIPELINE_ID (EXECUTION_ID) Stage - STAGE_ID was STATUS

I don't want to send build statuses to my PRs. I want to disable PR status updates.

Because the build status updates operate through the default codebase connector, the easiest way to prevent sending PR status updates would be to disable Clone Codebase for all Build stages in your pipeline, and then use a Git Clone or Run step to clone your codebase.

You could try modifying the permissions of the code repo connector's token so that it can't write to the repo, but this could interfere with other connector functionality.

Removing API access from the connector is not recommended because API access is required for other connector functions, such as cloning codebases from PRs, auto-populating branch names when you manually run builds, and so on.

Why wasn't PR build status updated for an Approval stage? Can I mark the build failed if any non-Build stage fails?

Build status updates occur for Build stages only.

Failed pipelines don't block PR merges

Harness only sends pipeline statuses to your PRs. You must configure branch protection rules (such as status check requirements) and other checks in your SCM provider's configuration.

Troubleshoot Git event (webhook) triggers

For troubleshooting information for Git event (webhook) triggers, go to Troubleshoot Git event triggers.

Can we configure the CI pipeline to send the status check for the entire pipeline instead of sending it for individual stage?

Currently, the status check is sent for each CI stage execution and it can not be configured to send one status check for the entire pipeline when you have multiple CI stages within the pipeline.

How to create a pull request in github using a CI pipeline?

There isn't a built-in step available to create a PR in Git Hub from a Harness pipeline. We could run a custom script in a shell script step to invoke the GitHub API to create PR. A sample command is given below

curl -X POST -H "Authorization: token YOUR_ACCESS_TOKEN" \

-H "Accept: application/vnd.github.v3+json" \

-d '{

"title": "Pull Request Title",

"head": "branch-to-merge-from",

"base": "branch-to-merge-into",

"body": "Description of the pull request"

}' https://api.github.com/repos/OWNER/REPOSITORY/pulls

Pipeline initialization and Harness CI images

Initialize step fails with a "Null value" error or timeout