Publish anything to the Artifacts tab

You can use the Artifact Metadata Publisher plugin to publish any URL on the Artifacts tab of the Build details page.

If you use AWS S3, you can use either the Artifact Metadata Publisher plugin or the S3 Upload and Publish plugin, which combines the upload artifact and publish URL steps in one plugin.

Configure the Artifact Metadata Publisher plugin

To use the Artifact Metadata Publisher plugin, add a Plugin step to your CI pipeline.

For artifacts generated in the same pipeline, the Plugin step is usually placed after the step that uploads the artifact to cloud storage.

- step:
    type: Plugin
    name: publish artifact metadata
    identifier: publish_artifact_metadata
    spec:
      connectorRef: account.harnessImage
      image: plugins/artifact-metadata-publisher
      settings:
        file_urls: https://domain.com/path/to/artifact
        artifact_file: artifact.txt

Plugin step specifications

Use these specifications to configure the Plugin step to use the Artifact Metadata Publisher plugin.

connectorRef

Use the built-in Docker connector (account.harnessImage) or specify your own Docker connector. Harness uses this connector to pull the plugin Docker image.

This setting is labeled Container Registry in the Visual editor.
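As a rough illustration, a Plugin step that pulls the image through your own Docker connector might look like the following. YOUR_DOCKER_CONNECTOR_ID is a placeholder introduced here, not a value from this page; the other fields mirror the example step shown earlier.

```yaml
# Sketch only: YOUR_DOCKER_CONNECTOR_ID is a placeholder for the identifier
# of a Docker connector that you have already created in Harness.
- step:
    type: Plugin
    name: publish artifact metadata
    identifier: publish_artifact_metadata
    spec:
      connectorRef: YOUR_DOCKER_CONNECTOR_ID
      image: plugins/artifact-metadata-publisher
      settings:
        file_urls: https://domain.com/path/to/artifact
        artifact_file: artifact.txt
```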

image

Set to plugins/artifact-metadata-publisher.

file_urls

Provide the URL for the artifact you want to link on the Artifacts tab. In the Visual editor, set this as a key-value pair under Settings.

For artifacts in cloud storage, use the appropriate URL format for your cloud storage provider, for example:

- GCS: https://storage.googleapis.com/GCS_BUCKET_NAME/TARGET_PATH/ARTIFACT_NAME_WITH_EXTENSION

- S3: https://BUCKET.s3.REGION.amazonaws.com/TARGET/ARTIFACT_NAME_WITH_EXTENSION

For artifacts uploaded through Upload Artifacts steps, use the Bucket, Target, and artifact name specified in the Upload Artifacts step.

For private S3 buckets, use the console view URL, such as https://s3.console.aws.amazon.com/s3/object/BUCKET?region=REGION&prefix=TARGET/ARTIFACT_NAME_WITH_EXTENSION.
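The following sketch shows how the bucket, target, and artifact name from an S3 upload step could map onto file_urls. The S3Upload step type and its field names (region, bucket, sourcePath, target) are assumptions based on the Upload Artifacts to S3 step and are not shown on this page; report.html and the YOUR_... values are placeholders.

```yaml
# Sketch only. The S3Upload field names are an assumption; verify them
# against the Upload Artifacts to S3 step in your own pipeline.
- step:
    type: S3Upload
    name: upload report
    identifier: upload_report
    spec:
      connectorRef: YOUR_AWS_CONNECTOR_ID
      region: YOUR_AWS_REGION
      bucket: YOUR_S3_BUCKET
      sourcePath: path/to/report.html
      target: <+pipeline.sequenceId>
- step:
    type: Plugin
    name: publish artifact metadata
    identifier: publish_artifact_metadata
    spec:
      connectorRef: account.harnessImage
      image: plugins/artifact-metadata-publisher
      settings:
        # Public bucket: object URL built from the bucket, region, target, and file name.
        file_urls: https://YOUR_S3_BUCKET.s3.YOUR_AWS_REGION.amazonaws.com/<+pipeline.sequenceId>/report.html
        # Private bucket: swap in the console view URL instead
        # (quoted defensively because of the ? and & characters).
        # file_urls: "https://s3.console.aws.amazon.com/s3/object/YOUR_S3_BUCKET?region=YOUR_AWS_REGION&prefix=<+pipeline.sequenceId>/report.html"
        artifact_file: artifact.txt
```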

If you uploaded multiple artifacts, you can provide a list of URLs, such as:

file_urls:
  - https://BUCKET.s3.REGION.amazonaws.com/TARGET/artifact1.html
  - https://BUCKET.s3.REGION.amazonaws.com/TARGET/artifact2.pdf
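In the context of a complete Plugin step, a list of URLs might be laid out as in this sketch. The file names report.html and coverage.html are hypothetical, and YOUR_GCS_BUCKET is a placeholder.

```yaml
# Sketch only: report.html and coverage.html are hypothetical file names.
- step:
    type: Plugin
    name: publish artifact metadata
    identifier: publish_artifact_metadata
    spec:
      connectorRef: account.harnessImage
      image: plugins/artifact-metadata-publisher
      settings:
        # One list entry per artifact link to show on the Artifacts tab.
        file_urls:
          - https://storage.googleapis.com/YOUR_GCS_BUCKET/<+pipeline.sequenceId>/report.html
          - https://storage.googleapis.com/YOUR_GCS_BUCKET/<+pipeline.sequenceId>/coverage.html
        artifact_file: artifact.txt
```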

For information about using Harness CI to upload artifacts, go to Build and push artifacts and images.

artifact_file

Provide any .txt file name, such as artifact.txt or url.txt. In the Visual editor, set this as a key-value pair under Settings.

This is a required setting that Harness uses to store the artifact URL and display it on the Artifacts tab. This value is not the name of your uploaded artifact, and it has no relationship to the artifact object itself.

Build logs and artifact files

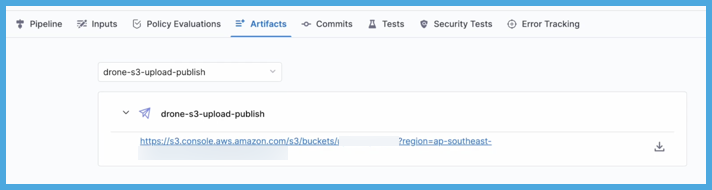

When you run the pipeline, you can observe the step logs on the Build details page, and you can find the artifact URL on the Artifacts tab.

On the Artifacts tab, select the step name to expand the list of artifact links associated with that step.

If your pipeline has multiple steps that upload artifacts, use the dropdown menu on the Artifacts tab to switch between lists of artifacts uploaded by different steps.

Tutorial: Upload an Allure report to the Artifacts tab

This tutorial demonstrates how to use the Artifact Metadata Publisher plugin by generating and uploading an Allure report.

Part 1: Prepare cloud storage

- You need access to a cloud storage provider. This tutorial uses GCS. If you use another option, you'll need to modify some of the steps according to your chosen cloud storage provider.

- If you use S3, GCS, or JFrog, you need a Harness connector to use with the Upload Artifact step:

  - S3: AWS connector

  - GCS: GCP connector

  - JFrog: Artifactory connector

  Tip: Store authentication and access keys for connectors as Harness secrets.

- In your cloud storage, create a bucket or repo where you can upload your artifact.

  To access the artifact directly from the Artifacts tab, the upload location must be publicly available. If the location is not publicly available, you might need to log in to view the artifact or use a different artifact URL (such as a console view URL). This tutorial uses a publicly available GCS bucket to store the report.

Part 2: Prepare artifacts to upload

Add steps to your pipeline that generate and prepare artifacts to upload, such as Run steps. The steps you use depend on what artifacts you ultimately want to upload.

For example, this tutorial uses three Run steps to generate and prepare an artifact:

- The first step runs tests with Maven.

- The second step generates an Allure report. To ensure the build environment has the Allure tool, the step uses a Docker image that has this tool: solutis/allure:2.9.0.

- The third step combines the Allure report into a single HTML file.

  - To view an Allure report in a browser, you must run a web server with the allure open command; however, this command won't persist after the CI pipeline ends. Instead, use the allure-combine tool to convert the Allure report into a single HTML file.

  - Running the allure-combine . command inside allure-report generates the complete.html file.

  - To ensure the build environment has access to the allure-combine tool, you can include steps to install it or use a Docker image that has the tool, such as shubham149/allure-combine:latest.

- step:
    type: Run
    name: run maven tests
    identifier: run_maven_tests
    spec:
      connectorRef: account.harnessImage
      image: openjdk:11
      shell: Sh
      command: ./mvnw clean test site
- step:
    type: Run
    name: generate allure report
    identifier: generate_allure_report
    spec:
      connectorRef: account.harnessImage
      image: solutis/allure:2.9.0
      command: |
        cd target
        allure generate allure-results --clean -o allure-report
- step:
    type: Run
    name: combine report
    identifier: combine_report
    spec:
      connectorRef: account.harnessImage
      image: shubham149/allure-combine:latest
      command: |
        cd target/allure-report
        allure-combine .
        cd ../..
        cp target/allure-report/complete.html .

For connectorRef, you can use the built-in Docker connector, account.harnessImage, or use your own Docker Hub connector.
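If you would rather install allure-combine during the build than rely on a prebuilt image, a Run step along these lines is one possibility. This is a sketch under assumptions: it assumes allure-combine can be installed from PyPI with pip, and the python:3.11 image tag is illustrative and not taken from this tutorial.

```yaml
# Sketch only: assumes allure-combine is installable with pip and that a
# generic Python image is acceptable for this step. The image tag is illustrative.
- step:
    type: Run
    name: combine report
    identifier: combine_report
    spec:
      connectorRef: account.harnessImage
      image: python:3.11
      shell: Sh
      command: |
        # Install the tool, then combine the report in place.
        pip install allure-combine
        cd target/allure-report
        allure-combine .
```

Installing at build time adds a download to every run, so a prebuilt image is likely the faster choice for frequently run pipelines.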

Part 3: Upload to cloud storage

Add a step to upload your artifact to cloud storage:

- Upload Artifacts to JFrog

- Upload Artifacts to GCS

- Upload Artifacts to S3

- Upload Artifacts to Sonatype Nexus

For example, this tutorial uploads the combined Allure report to GCS:

- step:
    type: GCSUpload
    name: upload report
    identifier: upload_report
    spec:
      connectorRef: YOUR_GCP_CONNECTOR_ID
      bucket: YOUR_GCS_BUCKET
      sourcePath: target/allure-report/complete.html
      target: <+pipeline.sequenceId>

The target value uses a Harness expression, <+pipeline.sequenceId>, to ensure that artifacts uploaded by this pipeline are stored in unique directories and don't overwrite one another.
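To illustrate how the target value carries through to the artifact URL, the sketch below adds a hypothetical reports/ prefix in front of the expression. It assumes the file is stored under the target path with its original file name, as the URL used later in this tutorial suggests.

```yaml
# Sketch only: the reports/ prefix is a hypothetical addition.
- step:
    type: GCSUpload
    name: upload report
    identifier: upload_report
    spec:
      connectorRef: YOUR_GCP_CONNECTOR_ID
      bucket: YOUR_GCS_BUCKET
      sourcePath: target/allure-report/complete.html
      # Each execution writes to its own directory under reports/.
      target: reports/<+pipeline.sequenceId>
# Matching URL for the Artifact Metadata Publisher plugin's file_urls setting:
#   https://storage.googleapis.com/YOUR_GCS_BUCKET/reports/<+pipeline.sequenceId>/complete.html
```

Whatever path you choose for target, the same path segment must appear in the published URL, or the link on the Artifacts tab will not resolve.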

Part 4: Add URLs to the Artifacts tab

At this point, you can run the pipeline and then manually find the uploaded artifact in your cloud storage bucket or repo. Alternatively, you can use the Artifact Metadata Publisher plugin, which links each artifact to the build that produced it so you can open it directly from the Artifacts tab.

For example, this step publishes the URL for the combined Allure report on the Artifacts tab:

- step:
    type: Plugin
    name: publish artifact metadata
    identifier: publish_artifact_metadata
    spec:
      connectorRef: account.harnessImage
      image: plugins/artifact-metadata-publisher
      settings:
        file_urls: https://storage.googleapis.com/YOUR_GCS_BUCKET/<+pipeline.sequenceId>/complete.html
        artifact_file: artifact.txt

For details about these settings, go to Configure the Artifact Metadata Publisher plugin.

Tutorial YAML examples

These YAML examples demonstrate the pipeline created in the preceding tutorial. This pipeline:

- Builds a Java Maven application.

- Generates and compiles an Allure report.

- Uploads the report to cloud storage.

- Provides a URL to access the report from the Artifacts tab in Harness.

This example uses Harness Cloud build infrastructure and uploads the Allure report artifact to GCS.

pipeline:
  name: allure-report-upload
  identifier: allurereportupload
  projectIdentifier: YOUR_HARNESS_PROJECT_ID
  orgIdentifier: default
  tags: {}
  properties:
    ci:
      codebase:
        connectorRef: YOUR_CODEBASE_CONNECTOR_ID
        repoName: YOUR_CODE_REPO_NAME
        build: <+input>
  stages:
    - stage:
        name: test and upload artifact
        identifier: test_and_upload_artifact
        description: ""
        type: CI
        spec:
          cloneCodebase: true
          platform:
            os: Linux
            arch: Amd64
          runtime:
            type: Cloud
            spec: {}
          execution:
            steps:
              - step:
                  type: Run
                  name: run maven tests
                  identifier: run_maven_tests
                  spec:
                    connectorRef: account.harnessImage
                    image: openjdk:11
                    shell: Sh
                    command: ./mvnw clean test site
              - step:
                  type: Run
                  name: generate allure report
                  identifier: generate_allure_report
                  spec:
                    connectorRef: account.harnessImage
                    image: solutis/allure:2.9.0
                    command: |
                      cd target
                      allure generate allure-results --clean -o allure-report
              - step:
                  type: Run
                  name: combine report
                  identifier: combine_report
                  spec:
                    connectorRef: account.harnessImage
                    image: shubham149/allure-combine:latest
                    command: |
                      cd target/allure-report
                      allure-combine .
                      cd ../..
                      cp target/allure-report/complete.html .
              - step:
                  type: GCSUpload
                  name: upload report
                  identifier: upload_report
                  spec:
                    connectorRef: YOUR_GCP_CONNECTOR_ID
                    bucket: YOUR_GCS_BUCKET
                    sourcePath: target/allure-report/complete.html
                    target: <+pipeline.sequenceId>
              - step:
                  type: Plugin
                  name: publish artifact metadata
                  identifier: publish_artifact_metadata
                  spec:
                    connectorRef: account.harnessImage
                    image: plugins/artifact-metadata-publisher
                    settings:
                      file_urls: https://storage.googleapis.com/YOUR_GCS_BUCKET/<+pipeline.sequenceId>/complete.html
                      artifact_file: artifact.txt

This example uses a Kubernetes cluster build infrastructure and uploads the Allure report artifact to GCS.

pipeline:
  name: allure-report-upload
  identifier: allurereportupload
  projectIdentifier: YOUR_HARNESS_PROJECT_ID
  orgIdentifier: default
  tags: {}
  properties:
    ci:
      codebase:
        connectorRef: YOUR_CODEBASE_CONNECTOR_ID
        repoName: YOUR_CODE_REPO_NAME
        build: <+input>
  stages:
    - stage:
        name: build
        identifier: build
        description: ""
        type: CI
        spec:
          cloneCodebase: true
          infrastructure:
            type: KubernetesDirect
            spec:
              connectorRef: YOUR_KUBERNETES_CLUSTER_CONNECTOR_ID
              namespace: YOUR_KUBERNETES_NAMESPACE
              automountServiceAccountToken: true
              nodeSelector: {}
              os: Linux
          execution:
            steps:
              - step:
                  type: Run
                  name: run maven tests
                  identifier: run_maven_tests
                  spec:
                    connectorRef: account.harnessImage
                    image: openjdk:11
                    shell: Sh
                    command: ./mvnw clean test site
              - step:
                  type: Run
                  name: generate allure report
                  identifier: generate_allure_report
                  spec:
                    connectorRef: account.harnessImage
                    image: solutis/allure:2.9.0
                    command: |
                      cd target
                      allure generate allure-results --clean -o allure-report
              - step:
                  type: Run
                  name: combine report
                  identifier: combine_report
                  spec:
                    connectorRef: account.harnessImage
                    image: shubham149/allure-combine:latest
                    command: |
                      cd target/allure-report
                      allure-combine .
                      cd ../..
                      cp target/allure-report/complete.html .
              - step:
                  type: GCSUpload
                  name: upload report
                  identifier: upload_report
                  spec:
                    connectorRef: YOUR_GCP_CONNECTOR_ID
                    bucket: YOUR_GCS_BUCKET
                    sourcePath: target/allure-report/complete.html
                    target: <+pipeline.sequenceId>
              - step:
                  type: Plugin
                  name: publish artifact metadata
                  identifier: publish_artifact_metadata
                  spec:
                    connectorRef: account.harnessImage
                    image: plugins/artifact-metadata-publisher
                    settings:
                      file_urls: https://storage.googleapis.com/YOUR_GCS_BUCKET/<+pipeline.sequenceId>/complete.html
                      artifact_file: artifact.txt

See also

- View test reports on the Artifacts tab

- View code coverage reports on the Artifacts tab

- View GCS artifacts on the Artifacts tab

- View JFrog artifacts on the Artifacts tab

- View Sonatype Nexus artifacts on the Artifacts tab

- View S3 artifacts on the Artifacts tab

Troubleshoot the Artifacts tab

Go to the CI Knowledge Base for questions and issues related to the Artifacts tab, such as: