Deploy using Helm Chart

This tutorial is designed to help you get started with Harness Continuous Delivery (CD). We will guide you through creating a CD pipeline or a GitOps workflow that deploys a Guestbook application using a Helm Chart.

Kubernetes is required to complete these steps. Run the following to check your system resources and (optionally) install a local cluster.

bash <(curl -fsSL https://raw.githubusercontent.com/harness-community/scripts/main/delegate-preflight-checks/cluster-preflight-checks.sh)
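If you want a quick sanity check before running the preflight script, the sketch below reports which command-line tools are missing from your PATH. It is not part of the Harness script. The `missing_tools` helper and the tool list (`kubectl`, `helm`, `git`, and `curl`, chosen because later steps in this tutorial call them) are assumptions made for illustration.

```shell
# Print the name of every tool in the argument list that is not on PATH.
missing_tools() {
  for tool in "$@"; do
    # `command -v` is the POSIX way to test whether a command exists.
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Tool list assumed from the commands used later in this tutorial.
missing_tools kubectl helm git curl
```

An empty result means that all four tools were found. You can then run `kubectl get nodes` to confirm that kubectl can reach your cluster.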

This tutorial covers two approaches: a GitOps workflow and a CD pipeline. The GitOps workflow is described first.

GitOps Workflow

Whether you're new to GitOps or already have an Argo CD instance, this guide will assist you in getting started with Harness GitOps, both with and without Argo CD.

Harness also offers a Hosted GitOps solution. A tutorial for it will be available soon.

Before you begin

Make sure that you have met the following requirements:

- You have set up a Kubernetes cluster. We recommend using K3D for installing Harness Delegates and deploying a sample application in a local development environment. For more information, go to Delegate system and network requirements.

- You have forked the harnesscd-example-apps repository through the GitHub web interface. For more details, go to Forking a GitHub repository.

Deploy your applications using Harness GitOps

- Log in to the Harness App.

- Select Projects in the top left corner of the UI, and then select Default Project.

- In Deployments, select GitOps.

Install a Harness GitOps Agent

What is a GitOps Agent?

A Harness GitOps Agent is a worker process that runs in your environment, makes secure, outbound connections to Harness, and performs all the GitOps tasks you request in Harness.

- Select Settings in the top right corner of the UI.

- Select GitOps Agents, and then select New GitOps Agent.

- In Do you have any existing Argo CD instances?, select Yes if you already have an Argo CD instance. Otherwise, select No to install a new Harness GitOps Agent. Then follow the matching set of steps below.

Harness GitOps Agent Fresh Install

- In Do you have any existing Argo CD instances?, select No, and then select Start.

- In Name, enter the name for the new Agent.

- In Namespace, enter the namespace where you want to install the Harness GitOps Agent. Typically, this is the target namespace for your deployment. For this tutorial, use the default namespace to install the Agent and deploy applications.

- Select Continue. The Review YAML settings appear. This is the manifest YAML for the Harness GitOps Agent. Download this YAML file and apply it to your Harness GitOps Agent cluster:

kubectl apply -f gitops-agent.yml -n default

- Select Continue and verify that the Agent is successfully installed and can connect to Harness Manager.

Harness GitOps Agent with existing Argo CD instance

- In Do you have any existing Argo CD instances?, select Yes, and then select Start.

- In Name, enter the name of the existing Argo CD project.

- In Namespace, enter the namespace where you want to install the Harness GitOps Agent. Typically, this is the target namespace for your deployment. For this tutorial, use the default namespace to install the Agent and deploy applications.

- Select Continue. The Review YAML settings appear. This is the manifest YAML for the Harness GitOps Agent. Download this YAML file and apply it to your Harness GitOps Agent cluster:

kubectl apply -f gitops-agent.yml -n default

- Select Continue and verify that the Agent is successfully installed and can connect to Harness Manager.

Once you have installed the Agent, Harness will start importing all the entities from the existing Argo CD Project.

You can complete the remaining steps with either the Harness CLI or the Harness UI. The CLI steps are described first, followed by the UI steps.

CLI

- Download and configure the Harness CLI.

MacOS

curl -LO https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/harness-v0.0.24-Preview-darwin-amd64.tar.gz

tar -xvf harness-v0.0.24-Preview-darwin-amd64.tar.gz

export PATH="$(pwd):$PATH"

echo 'export PATH="'$(pwd)':$PATH"' >> ~/.bash_profile

source ~/.bash_profile

harness --version

Linux (ARM)

curl -LO https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/harness-v0.0.24-Preview-linux-arm64.tar.gz

tar -xvf harness-v0.0.24-Preview-linux-arm64.tar.gz

Linux (AMD)

curl -LO https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/harness-v0.0.24-Preview-linux-amd64.tar.gz

tar -xvf harness-v0.0.24-Preview-linux-amd64.tar.gz

Windows

a. Open Windows PowerShell and run the command below to download the Harness CLI.

Invoke-WebRequest -Uri https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/harness-v0.0.24-Preview-windows-amd64.zip -OutFile ./harness.zip

b. Extract the downloaded zip file and change directory to the extracted file location.

c. Run the following commands to make the CLI accessible from the terminal.

$currentPath = Get-Location

[Environment]::SetEnvironmentVariable("PATH", "$env:PATH;$currentPath", [EnvironmentVariableTarget]::Machine)

d. Restart the terminal.
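The macOS and Linux download commands above differ only in the operating system and CPU architecture embedded in the file name. As a rough sketch, the shell functions below derive that file name from `uname` output. The function names `harness_cli_asset` and `harness_cli_url` are hypothetical, and the mapping covers only the builds listed in this tutorial.

```shell
# Map `uname -s` and `uname -m` values to a release archive name.
harness_cli_asset() {
  os="$1"
  arch="$2"
  case "$os" in
    Darwin)
      # This tutorial lists only the amd64 build for macOS.
      target=darwin-amd64 ;;
    Linux)
      case "$arch" in
        x86_64|amd64)  target=linux-amd64 ;;
        aarch64|arm64) target=linux-arm64 ;;
        *) echo "unsupported architecture: $arch" >&2; return 1 ;;
      esac ;;
    *)
      echo "unsupported OS: $os" >&2; return 1 ;;
  esac
  printf 'harness-v0.0.24-Preview-%s.tar.gz\n' "$target"
}

# Print the download URL for the machine this function runs on.
harness_cli_url() {
  asset=$(harness_cli_asset "$(uname -s)" "$(uname -m)") || return 1
  printf 'https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/%s\n' "$asset"
}
```

For example, `curl -LO "$(harness_cli_url)"` downloads the archive for the current machine, after which the `tar` and `PATH` steps above apply unchanged.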

- Clone the forked harnesscd-example-apps repository and change into its directory.

git clone https://github.com/GITHUB_ACCOUNTNAME/harnesscd-example-apps.git

cd harnesscd-example-apps

Note: Replace GITHUB_ACCOUNTNAME with your GitHub account name.

- Log in to Harness from the CLI.

harness login --api-key HARNESS_API_TOKEN --account-id ACCOUNT_ID

Note: Replace HARNESS_API_TOKEN with the Harness API token that you obtained in the prerequisites section of this tutorial, and ACCOUNT_ID with your Harness account ID.

For the pipeline to run successfully, follow all of the steps below exactly as written, including the naming conventions.

Add a Harness GitOps repository

What is a GitOps Repository?

A Harness GitOps Repository is a repository containing the declarative description of a desired state. The declarative description can be in Kubernetes manifests, Helm Chart, Kustomize manifests, etc.

Use the following command to add a Harness GitOps repository.

harness gitops-repository --file helm-guestbook/harness-gitops/repository.yml apply --agent-identifier $AGENT_NAME

Add a Harness GitOps cluster

What is a GitOps Cluster?

A Harness GitOps Cluster is the target deployment cluster that is compared to the desired state. Clusters are synced with the source manifests you add as GitOps Repositories.

Use the following command to add a Harness GitOps cluster.

harness gitops-cluster --file helm-guestbook/harness-gitops/cluster.yml apply --agent-identifier $AGENT_NAME

Add a Harness GitOps application

What is a GitOps Application?

GitOps Applications are how you manage GitOps operations for a given desired state and its live instantiation.

A GitOps Application collects the Repository (what you want to deploy), Cluster (where you want to deploy), and Agent (how you want to deploy). You select these entities when you set up your Application.

Use the following command to create a GitOps application.

harness gitops-application --file helm-guestbook/harness-gitops/application.yml apply --agent-identifier $AGENT_NAME
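The three commands in this section all read `$AGENT_NAME`, which the tutorial does not set for you. The sketch below assumes that it should hold the identifier of the GitOps Agent you installed earlier, and prints the three `apply` commands in the order used above so that you can review them before running them. The `gitops_apply_plan` function name is hypothetical.

```shell
# Print the three `apply` commands in the order used above:
# repository, then cluster, then application. Nothing is executed.
gitops_apply_plan() {
  agent="$1"
  if [ -z "$agent" ]; then
    echo "usage: gitops_apply_plan AGENT_IDENTIFIER" >&2
    return 1
  fi
  for kind in repository cluster application; do
    printf 'harness gitops-%s --file helm-guestbook/harness-gitops/%s.yml apply --agent-identifier %s\n' \
      "$kind" "$kind" "$agent"
  done
}
```

For example, `gitops_apply_plan my-agent` prints the commands for an Agent whose identifier is `my-agent` (a placeholder). To execute them, run the function from the root of the cloned repository and pipe its output to `sh`.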

UI

Add a Harness GitOps repository

What is a GitOps Repository?

A Harness GitOps Repository is a repository containing the declarative description of a desired state. The declarative description can be in Kubernetes manifests, Helm Chart, Kustomize manifests, etc.

- Select Settings in the top right corner of the UI.

- Select Repositories, and then select New Repository.

- Select Git and enter the following details:

- In Repository Name, enter the Git repository name.

- In GitOps Agent, choose the Agent that you installed in your cluster and select Apply.

- In Git Repository URL, enter https://github.com/GITHUB_USERNAME/harnesscd-example-apps.git and replace GITHUB_USERNAME with your GitHub username.

- Select Continue and choose Specify Credentials For Repository.

- In Connection Type, select HTTPS.

- In Authentication, select Anonymous (no credentials required).

- Select Save & Continue and wait for Harness to verify the connection.

- Select Finish.

Add a Harness GitOps cluster

What is a GitOps Cluster?

A Harness GitOps Cluster is the target deployment cluster that is compared to the desired state. Clusters are synced with the source manifests you add as GitOps Repositories.

- Select Settings in the top right corner of the UI.

- Select Clusters, and then select New Cluster.

- In the cluster Overview dialog, enter a name for the cluster.

- In GitOps Agent, select the Agent that you installed in your cluster and select Apply.

- Select Continue and select Use the credentials of a specific Harness GitOps Agent.

- Select Save & Continue and wait for Harness to verify the connection.

- Select Finish.

Add a Harness GitOps application

What is a GitOps Application?

GitOps Applications are how you manage GitOps operations for a given desired state and its live instantiation.

A GitOps Application collects the Repository (what you want to deploy), Cluster (where you want to deploy), and Agent (how you want to deploy). You select these entities when you set up your Application.

- Select Applications in the top right corner of the UI.

- Select New Application.

- In Application Name, enter the name guestbook.

- In GitOps Agent, select the Agent that you installed in your cluster and select Apply.

- Select New Service and toggle to the YAML view (next to VISUAL).

- Select Edit YAML, paste the following, and then select Save.

service:
  name: gitopsguestbook
  identifier: gitopsguestbook
  serviceDefinition:
    type: Kubernetes
    spec: {}
  gitOpsEnabled: true

- Select New Environment and toggle to the YAML view (next to VISUAL).

- Select Edit YAML, paste the following, and then select Save.

environment:
  name: gitopsenv
  identifier: gitopsenv
  description: ""
  tags: {}
  type: PreProduction
  orgIdentifier: default
  projectIdentifier: default_project
  variables: []

- Select Continue, keep the Sync Policy settings as is, and then select Continue.

- In Repository URL, select the Repository you created earlier and select Apply.

- Select master as the target Revision, enter kustomize-guestbook in the Path, and press Enter.

- Select Continue, and then select the Cluster created in the above steps.

- Enter the target Namespace for Harness GitOps to sync the application. Enter default and select Finish.

Sync the application

Finally, synchronize the application state.

- Select Sync in the top right corner of the UI.

- Check the application details, and then select Synchronize to initiate the deployment.

-

After a successful execution, you can check the deployment on your Kubernetes cluster using the following command:

kubectl get pods -n default -

To access the Guestbook application deployed via the Harness pipeline, port forward the service and access it at

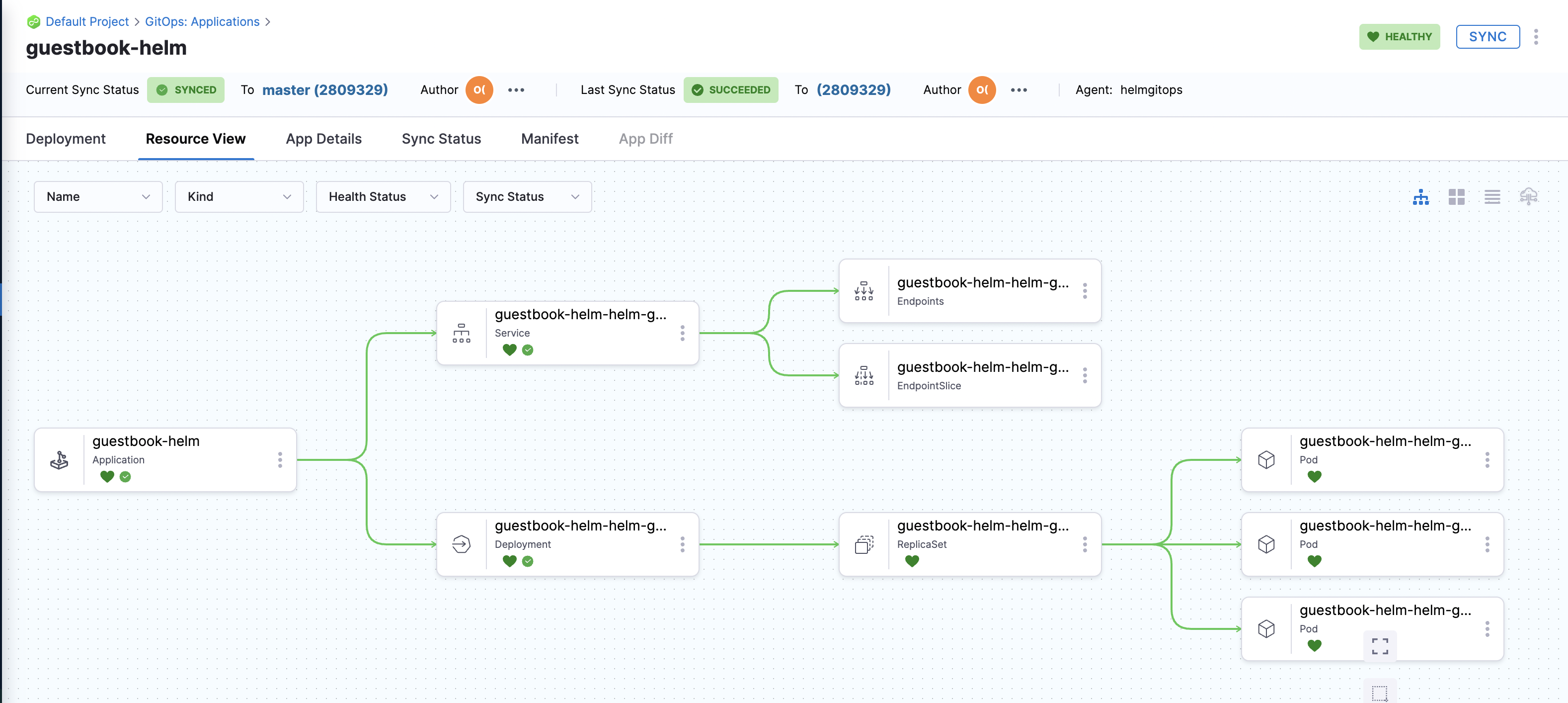

http://localhost:8080:kubectl port-forward svc/<service-name> 8080:80On successful application sync, you'll see the status tree under Resource View as shown below:

-
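While `kubectl port-forward` is running in one terminal, you can check from a second terminal that the Guestbook responds on the forwarded port. The following is a minimal sketch, assuming that `curl` is installed; the `retry` helper is a hypothetical name, not a Harness or kubectl command.

```shell
# Run a command up to N times, pausing one second between attempts.
# Returns 0 as soon as the command succeeds, or 1 if every attempt fails.
retry() {
  attempts="$1"
  shift
  n=1
  while :; do
    "$@" && return 0
    [ "$n" -ge "$attempts" ] && return 1
    n=$((n + 1))
    sleep 1
  done
}
```

For example, `retry 10 curl -fsS -o /dev/null http://localhost:8080 && echo "Guestbook is reachable"` tries the forwarded port up to ten times before giving up.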

CD Pipeline

You can proceed with this part of the tutorial using either the command-line interface (Harness CLI) or the user interface (Harness UI). The CLI steps are described first, followed by the UI steps.

CLI

Before you begin

Complete the following prerequisites:

- Obtain a Harness API token. For steps, go to the Harness documentation on creating a personal API token.

- Obtain a GitHub personal access token with repo permissions. For steps, go to the GitHub documentation on creating a personal access token.

- Set up a Kubernetes cluster. You can use your own Kubernetes cluster, but we recommend using K3D for installing Harness Delegates and deploying a sample application in a local development environment.

- Install the Helm CLI.

- Fork the harnesscd-example-apps repository through the GitHub web interface. For details on forking a GitHub repository, go to GitHub docs.

Getting Started with Harness CD

- Download and configure the Harness CLI.

MacOS

curl -LO https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/harness-v0.0.24-Preview-darwin-amd64.tar.gz

tar -xvf harness-v0.0.24-Preview-darwin-amd64.tar.gz

export PATH="$(pwd):$PATH"

echo 'export PATH="'$(pwd)':$PATH"' >> ~/.bash_profile

source ~/.bash_profile

harness --version

Linux (ARM)

curl -LO https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/harness-v0.0.24-Preview-linux-arm64.tar.gz

tar -xvf harness-v0.0.24-Preview-linux-arm64.tar.gz

Linux (AMD)

curl -LO https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/harness-v0.0.24-Preview-linux-amd64.tar.gz

tar -xvf harness-v0.0.24-Preview-linux-amd64.tar.gz

Windows

a. Open Windows PowerShell and run the command below to download the Harness CLI.

Invoke-WebRequest -Uri https://github.com/harness/harness-cli/releases/download/v0.0.24-Preview/harness-v0.0.24-Preview-windows-amd64.zip -OutFile ./harness.zip

b. Extract the downloaded zip file and change directory to the extracted file location.

c. Run the following commands to make the CLI accessible from the terminal.

$currentPath = Get-Location

[Environment]::SetEnvironmentVariable("PATH", "$env:PATH;$currentPath", [EnvironmentVariableTarget]::Machine)

d. Restart the terminal.

- Clone the forked harnesscd-example-apps repository and change into its directory.

git clone https://github.com/GITHUB_ACCOUNTNAME/harnesscd-example-apps.git

cd harnesscd-example-apps

Note: Replace GITHUB_ACCOUNTNAME with your GitHub account name.

- Log in to Harness from the CLI.

harness login --api-key HARNESS_API_TOKEN --account-id ACCOUNT_ID

Note: Replace HARNESS_API_TOKEN with the Harness API token that you obtained in the prerequisites section of this tutorial, and ACCOUNT_ID with your Harness account ID.

For the pipeline to run successfully, follow all of the steps below exactly as written, including the naming conventions.

Delegate

The Harness Delegate is a service that runs in your local network or VPC to establish connections between the Harness Manager and various providers such as artifact registries, cloud platforms, etc. The delegate is installed in the target infrastructure (Kubernetes cluster) and performs operations including deployment and integration. To learn more about the delegate, go to delegate Overview.

- Log in to the Harness UI. In Project Setup, select Delegates.

- Select Delegates.

- Select Install delegate. For this tutorial, let's explore how to install the delegate using Helm.

- Add the Harness Helm chart repo to your local Helm registry.

helm repo add harness-delegate https://app.harness.io/storage/harness-download/delegate-helm-chart/

helm repo update harness-delegate

- In the command provided, ACCOUNT_ID, MANAGER_ENDPOINT, and DELEGATE_TOKEN are auto-populated values that you can obtain from the delegate Installation wizard.

helm upgrade -i helm-delegate --namespace harness-delegate-ng --create-namespace \
  harness-delegate/harness-delegate-ng \
  --set delegateName=helm-delegate \
  --set accountId=ACCOUNT_ID \
  --set managerEndpoint=MANAGER_ENDPOINT \
  --set delegateDockerImage=harness/delegate:23.03.78904 \
  --set replicas=1 --set upgrader.enabled=false \
  --set delegateToken=DELEGATE_TOKEN

- Verify that the delegate is installed successfully and can connect to the Harness Manager.

- You can also go to Install Harness Delegate on Kubernetes or Docker for instructions on installing the delegate using the Terraform Helm Provider or Kubernetes manifest.
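One way to check the delegate from the command line is to list its pods and look for any that are not fully ready. The sketch below filters the output of `kubectl get pods --no-headers`, where the second column shows ready and total containers (for example `1/1`). The `not_ready_pods` function name is an assumption made for this example.

```shell
# Read `kubectl get pods --no-headers` output on stdin and print the name
# of every pod whose READY column (ready/total) shows fewer ready
# containers than the total.
not_ready_pods() {
  awk '{ split($2, ready, "/"); if (ready[1] != ready[2]) print $1 }'
}
```

For example, `kubectl get pods -n harness-delegate-ng --no-headers | not_ready_pods` checks the namespace used by the Helm command above. No output means that every pod in the namespace reports all of its containers as ready.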

Secrets

What are Harness secrets?

Harness offers built-in secret management for encrypted storage of sensitive information. Secrets are decrypted when needed, and only the private network-connected Harness Delegate has access to the key management system. You can also integrate your own secret manager. To learn more about secrets in Harness, go to Harness Secret Manager Overview.

- Use the following command to store the GitHub PAT that you created previously as a Harness secret.

harness secret --token <YOUR GITHUB PAT>

Connectors

What are connectors?

Connectors in Harness enable integration with third-party tools, providing authentication and operations during pipeline runtime. For instance, a GitHub connector facilitates authentication and fetching files from a GitHub repository within pipeline stages. For more information, go to the connector how-tos.

- In github-connector.yml, replace GITHUB_USERNAME with your GitHub account username.

- In projectIdentifier, verify that the project identifier is correct. You can see the Id in the browser URL (after account). If it is incorrect, the Harness YAML editor will suggest the correct Id.

- Create the GitHub connector using the following CLI command:

harness connector --file "helm-guestbook/harnesscd-pipeline/github-connector.yml" apply --git-user <YOUR GITHUB USERNAME>

- In kubernetes-connector.yml, check that the delegate name is helm-delegate.

- Create the Kubernetes connector using the following CLI command:

harness connector --file "helm-guestbook/harnesscd-pipeline/kubernetes-connector.yml" apply --delegate-name helm-delegate

Deployment Strategies

Helm is primarily focused on managing the release and versioning of application packages. Helm supports rollback through its release management features. When you deploy an application using Helm, it creates a release that represents a specific version of the application with a unique release name.
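Each `helm upgrade` of a release records a new revision, and `helm rollback` returns the release to an earlier one. As an illustration only, the sketch below prints the Helm commands for inspecting and rolling back a release. The `helm_rollback_plan` function is hypothetical. In the usage example, `helm-delegate` and `harness-delegate-ng` are the release name and namespace from the delegate installation earlier in this tutorial, and revision `1` is a placeholder.

```shell
# Print the Helm commands for reviewing a release's history and rolling it
# back to a given revision. Nothing is executed.
helm_rollback_plan() {
  release="$1"
  namespace="$2"
  revision="$3"
  case "$revision" in
    ''|*[!0-9]*)
      echo "revision must be a whole number" >&2
      return 1 ;;
  esac
  printf 'helm history %s -n %s\n' "$release" "$namespace"
  printf 'helm rollback %s %s -n %s\n' "$release" "$revision" "$namespace"
}
```

For example, `helm_rollback_plan helm-delegate harness-delegate-ng 1` prints a `helm history` command followed by a `helm rollback` command for that release. Review the history output before choosing a revision to roll back to.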

How Harness performs canary and blue-green deployments with Helm

- Harness integrates with Helm by utilizing Helm charts and releases. Helm charts define the application package and its dependencies, and Helm releases represent specific versions of the application.

- Harness allows you to define the application configuration, including Helm charts, values files, and any custom configurations required for your application.

- In Harness, you can specify the percentage of traffic to route to the new version in a canary deployment or define the conditions to switch traffic between the blue and green environments in a blue-green deployment.

- Harness orchestrates the deployment workflow, including the deployment of Helm charts, by interacting with the Helm API. It manages the release lifecycle, tracks revisions, and controls the rollout process based on the defined canary or blue-green strategy.

Harness adds an additional layer of functionality on top of Helm, providing a streamlined and automated approach to canary and blue-green deployments. By leveraging Helm's package management capabilities and integrating with its release management features, Harness extends Helm's capabilities to support canary and blue-green deployment strategies.

The following sections cover four deployment strategies: Canary, Blue Green, K8s Rolling, and Native Helm Rolling. Follow the section for the strategy that you want to use.

Canary

What are Canary deployments?

A Canary deployment updates nodes in a single environment gradually, allowing you to use gates between increments. Canary deployments allow incremental updates and ensure a controlled rollout process. For more information, go to When to use Canary deployments.

Create an environment

What are Harness environments?

Environments define the deployment location, categorized as Production or Pre-Production. Each environment includes infrastructure definitions for VMs, Kubernetes clusters, or other target infrastructures. To learn more about environments, go to Environments overview.

- Use the following CLI command to create an environment in your Harness project:

harness environment --file "helm-guestbook/harnesscd-pipeline/k8s-environment.yml" apply

- In your new environment, add an infrastructure definition using the following CLI command:

harness infrastructure --file "helm-guestbook/harnesscd-pipeline/k8s-infrastructure-definition.yml" apply

Create a service

What are Harness services?

In Harness, services represent what you deploy to environments. You use services to configure variables, manifests, and artifacts. The Services dashboard provides service statistics like deployment frequency and failure rate. To learn more about services, go to Services overview.

- Use the following CLI command to create a service in your Harness project:

harness service --file "helm-guestbook/harnesscd-pipeline/k8s-service.yml" apply

Create a pipeline

What are Harness pipelines?

A pipeline is a comprehensive process encompassing integration, delivery, operations, testing, deployment, and monitoring. It can utilize CI for code building and testing, followed by CD for artifact deployment in production. A CD Pipeline is a series of stages where each stage deploys a service to an environment. To learn more about CD pipeline basics, go to CD pipeline basics.

-

CLI Command for canary deployment:

harness pipeline --file "helm-guestbook/harnesscd-pipeline/k8s-canary-pipeline.yml" apply -

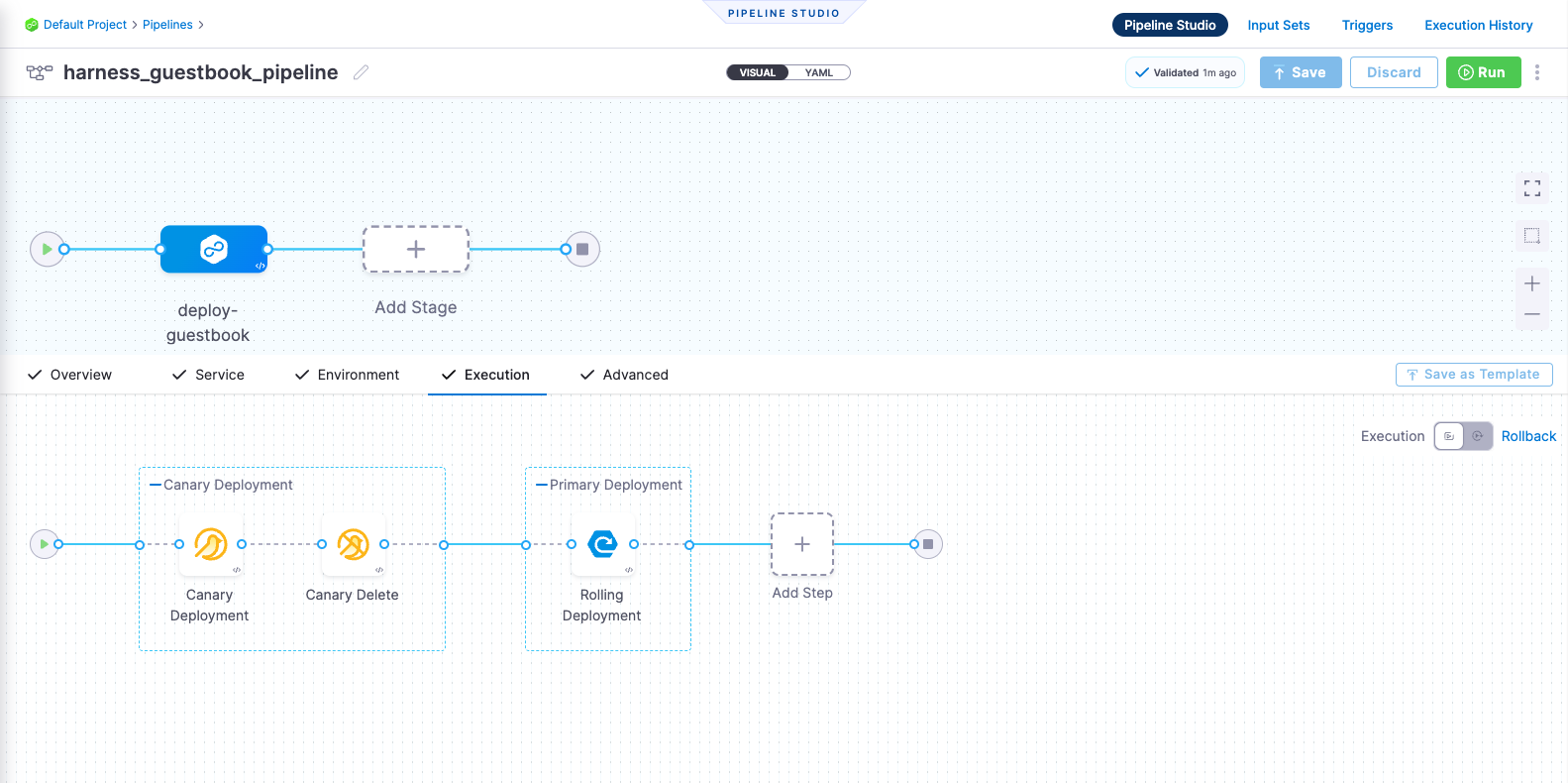

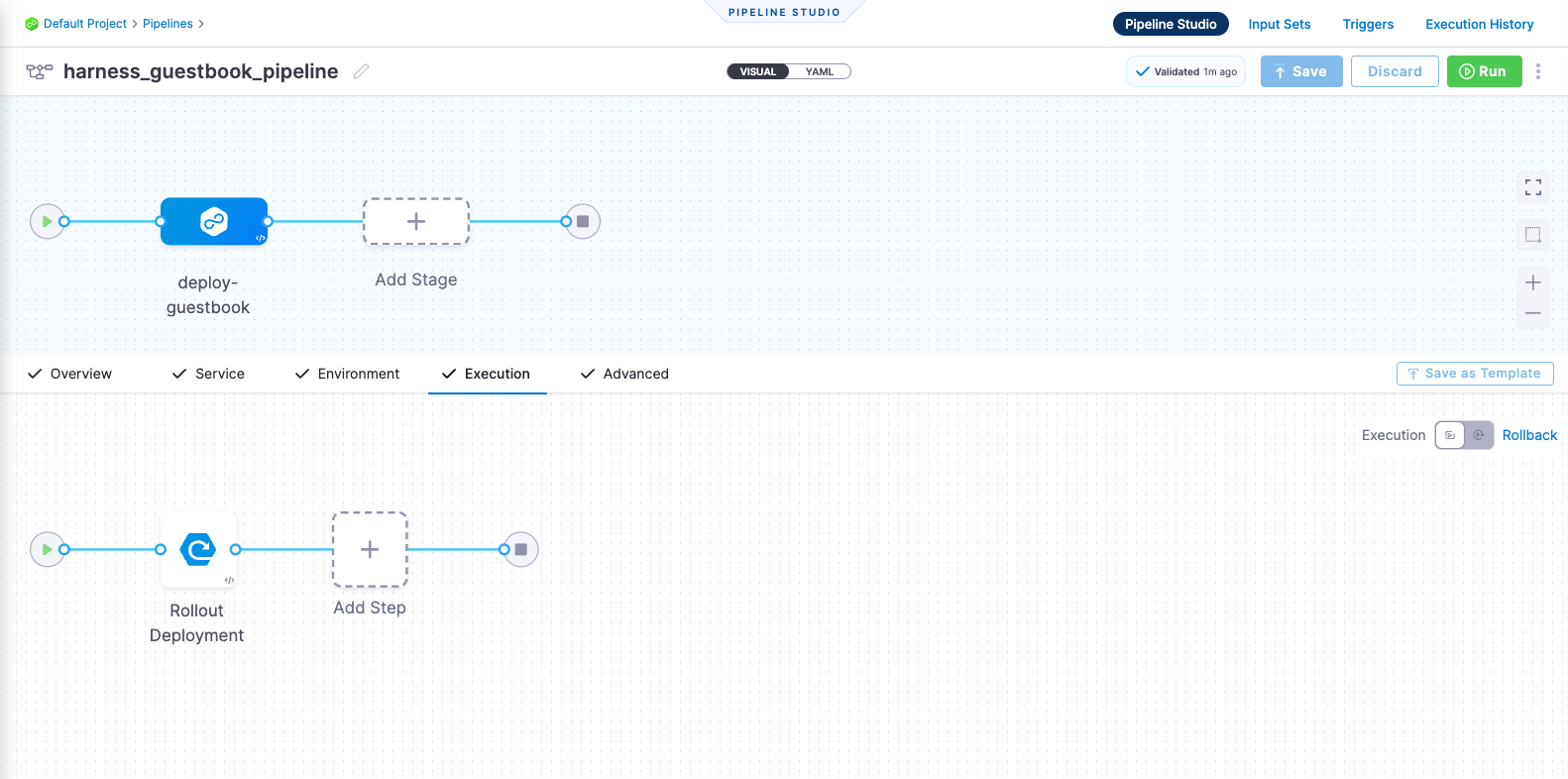

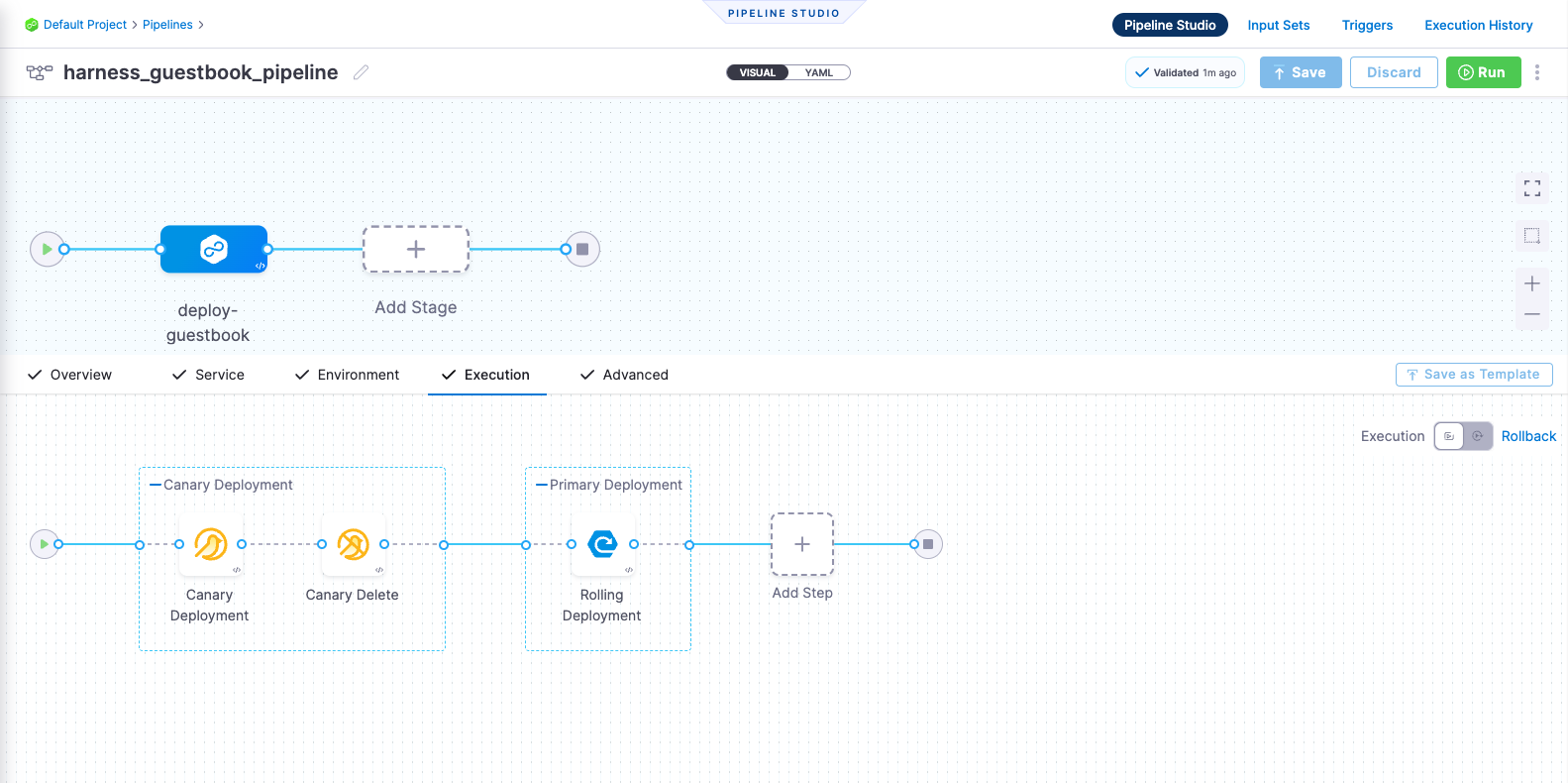

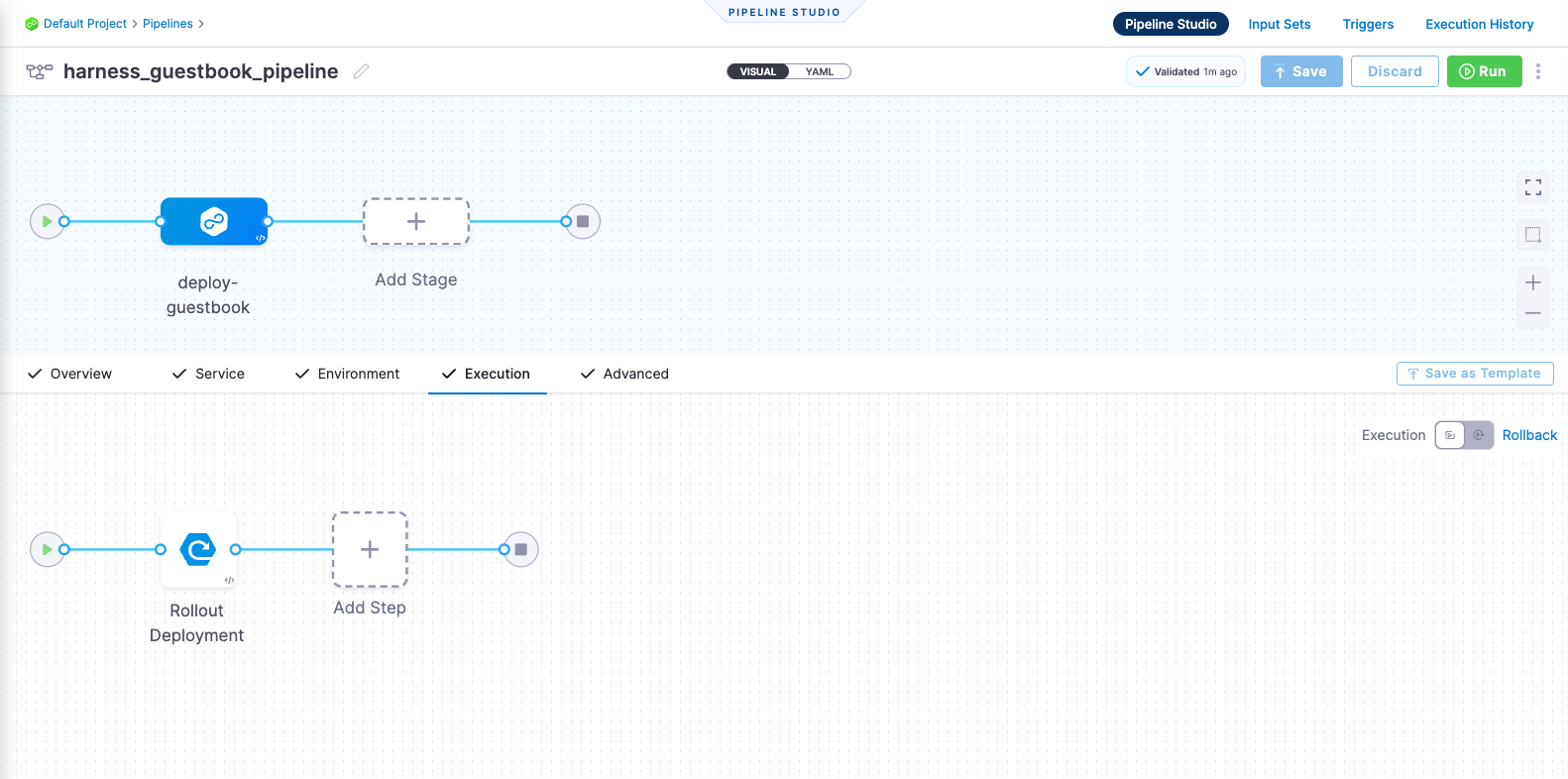

You can switch to the VISUAL view, and verify that the pipeline stage and execution steps appear as shown below.

Blue Green

What are Blue Green deployments?

Blue Green deployments involve running two identical environments (stage and prod) simultaneously with different service versions. QA and UAT are performed on a new service version in the stage environment first. Next, traffic is shifted from the prod environment to stage, and the previous service version running on prod is scaled down. Some vendors refer to Blue Green deployments as red/black deployments. For more information, go to When to use Blue Green deployments.

Create an environment

What are Harness environments?

Environments define the deployment location, categorized as Production or Pre-Production. Each environment includes infrastructure definitions for VMs, Kubernetes clusters, or other target infrastructures. To learn more about environments, go to Environments overview.

- Use the following CLI command to create an environment in your Harness project:

harness environment --file "helm-guestbook/harnesscd-pipeline/k8s-environment.yml" apply

- In your new environment, add an infrastructure definition using the following CLI command:

harness infrastructure --file "helm-guestbook/harnesscd-pipeline/k8s-infrastructure-definition.yml" apply

Create a service

What are Harness services?

In Harness, services represent what you deploy to environments. You use services to configure variables, manifests, and artifacts. The Services dashboard provides service statistics like deployment frequency and failure rate. To learn more about services, go to Services overview.

- Use the following CLI command to create a service in your Harness project:

harness service --file "helm-guestbook/harnesscd-pipeline/k8s-service.yml" apply

Create a pipeline

What are Harness pipelines?

A pipeline is a comprehensive process encompassing integration, delivery, operations, testing, deployment, and monitoring. It can utilize CI for code building and testing, followed by CD for artifact deployment in production. A CD pipeline is a series of stages where each stage deploys a service to an environment. To learn more about CD pipeline basics, go to CD pipeline basics.

- CLI Command for blue-green deployment:

harness pipeline --file "helm-guestbook/harnesscd-pipeline/k8s-bluegreen-pipeline.yml" apply

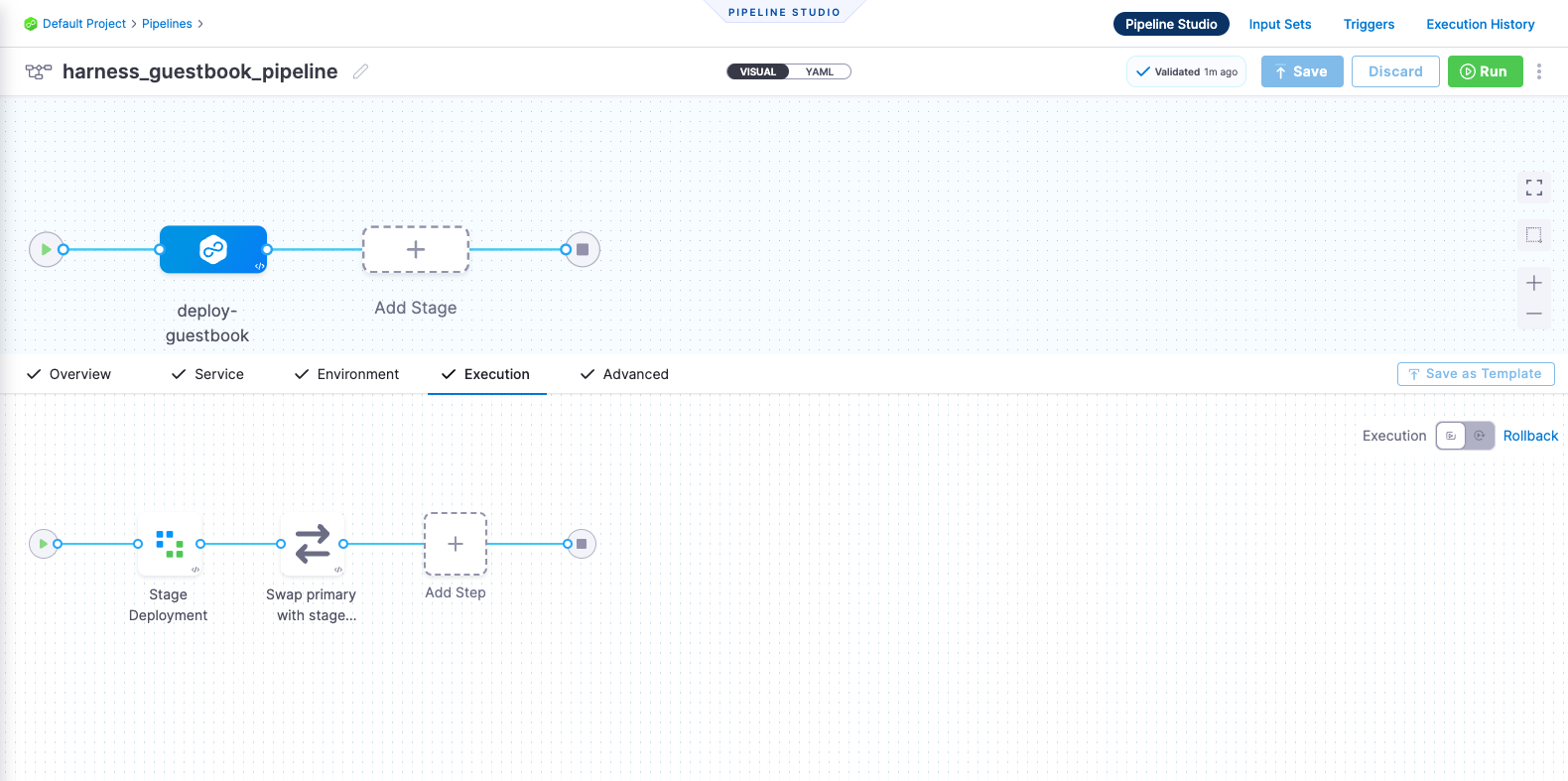

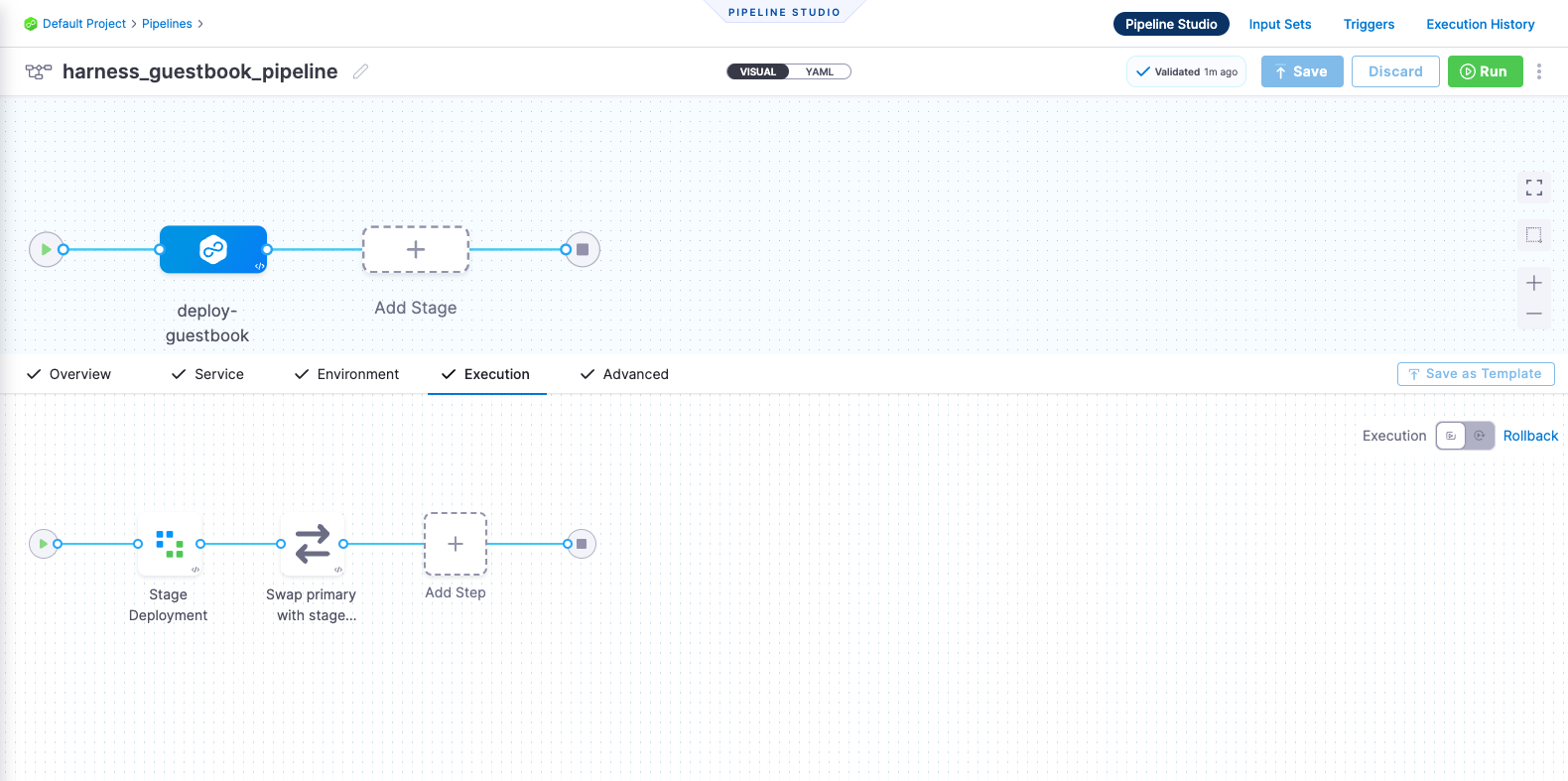

- You can switch to the VISUAL view, and verify that the pipeline stage and execution steps appear as shown below.

K8s Rolling

What are Rolling deployments?

Rolling deployments incrementally add nodes with a new service version to a single environment, either one by one or in batches defined by a window size. Rolling deployments allow a controlled and gradual update process for the new service version. For more information, go to When to use rolling deployments.

Create an environment

What are Harness environments?

Environments define the deployment location and are categorized as Production or Pre-Production. Each environment includes infrastructure definitions for VMs, Kubernetes clusters, or other target infrastructures. To learn more about environments, go to Environments overview.

- Use the following CLI command to create an environment in your Harness project:

harness environment --file "helm-guestbook/harnesscd-pipeline/k8s-environment.yml" apply

- In your new environment, add an infrastructure definition using the following CLI command:

harness infrastructure --file "helm-guestbook/harnesscd-pipeline/k8s-infrastructure-definition.yml" apply

Create a service

What are Harness services?

In Harness, services represent what you deploy to environments. You use services to configure variables, manifests, and artifacts. The Services dashboard provides service statistics like deployment frequency and failure rate. To learn more about services, go to Services overview.

- Use the following CLI command to create a service in your Harness project:

harness service --file "helm-guestbook/harnesscd-pipeline/k8s-service.yml" apply

Create a pipeline

What are Harness pipelines?

A pipeline is a comprehensive process encompassing integration, delivery, operations, testing, deployment, and monitoring. A pipeline can utilize CI for code building and testing and CD for artifact deployment in production. A CD pipeline is a series of stages where each stage deploys a service to an environment. To learn more about CD pipeline basics, go to CD pipeline basics.

-

CLI Command for Rolling deployment:

harness pipeline --file "helm-guestbook/harnesscd-pipeline/k8s-rolling-pipeline.yml" apply -

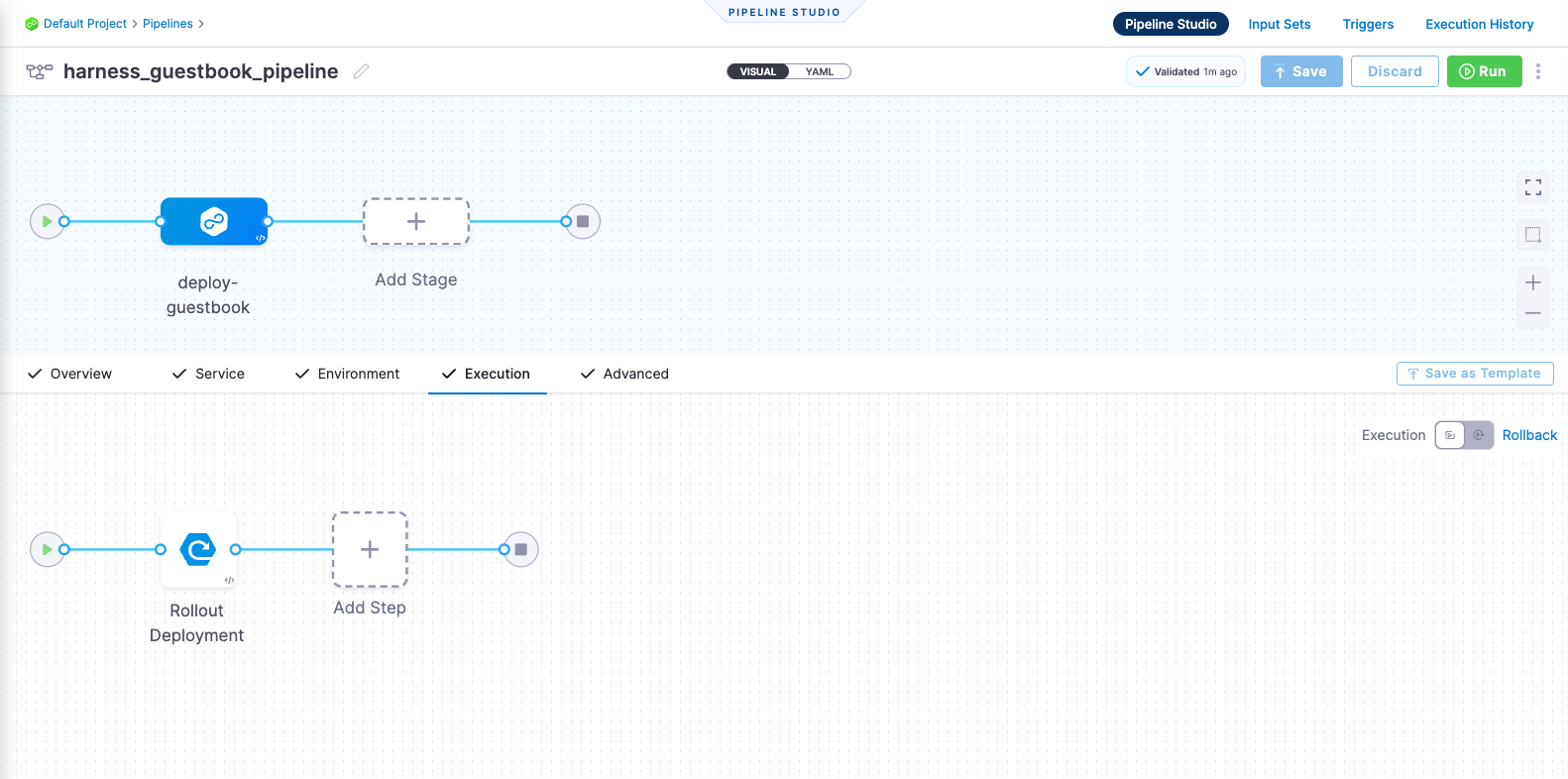

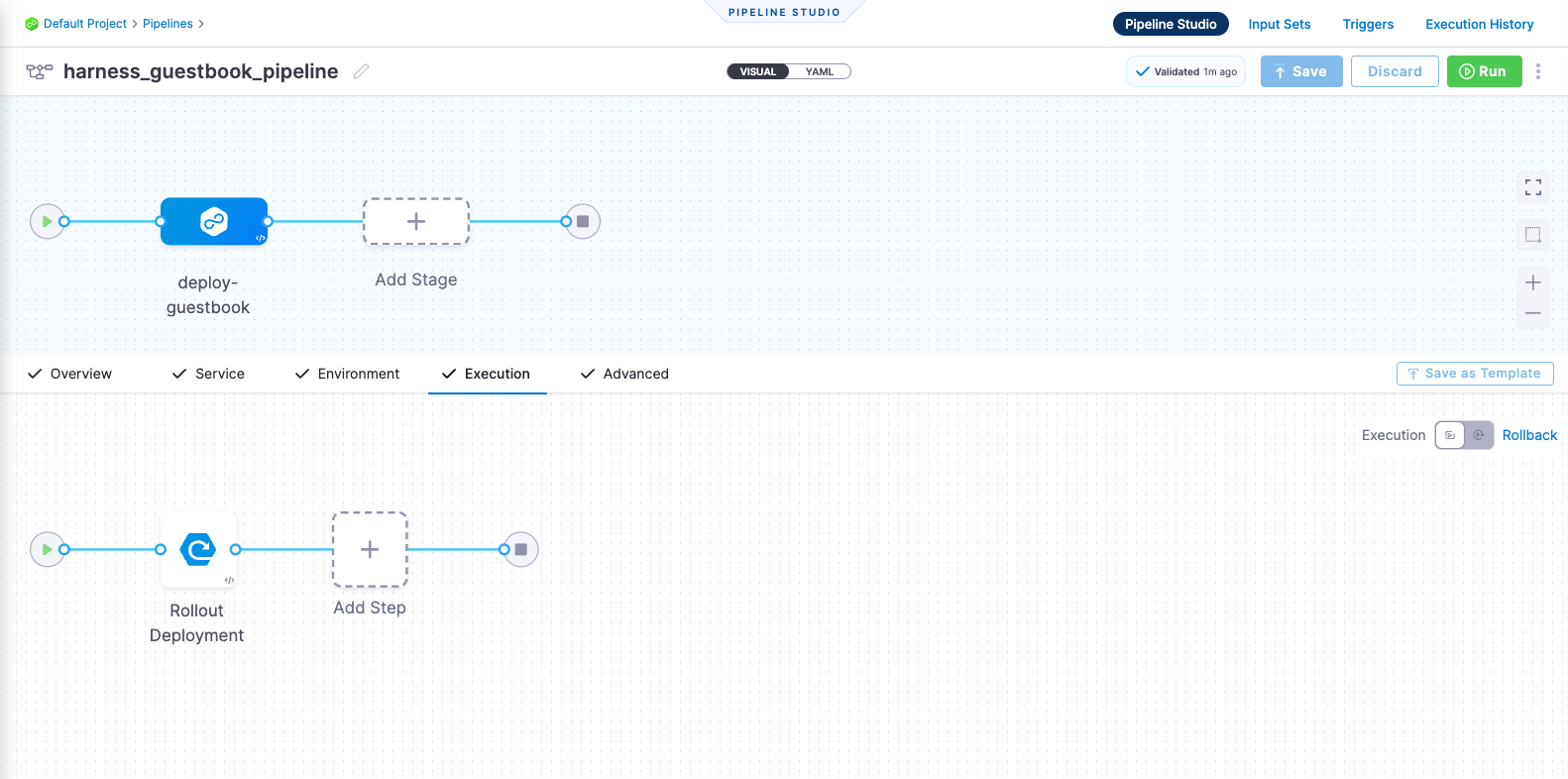

You can switch to the VISUAL view, and verify that the pipeline stage and execution steps appear as shown below.

Native Helm Rolling

What are Rolling deployments?

Rolling deployments incrementally add nodes in a single environment with a new service version, either one-by-one or in batches defined by a window size. Rolling deployments allow a controlled and gradual update process for the new service version. For more information, go to When to use rolling deployments.

Create an environment

What are Harness environments?

Environments define the deployment location, categorized as Production or Pre-Production. Each environment includes infrastructure definitions for VMs, Kubernetes clusters, or other target infrastructures. To learn more about environments, go to Environments overview.

- Use the following CLI command to create an environment in your Harness project:

harness environment --file "helm-guestbook/harnesscd-pipeline/nativehelm-environment.yml" apply

- In your new environment, add an infrastructure definition using the following CLI command:

harness infrastructure --file "helm-guestbook/harnesscd-pipeline/nativehelm-infrastructure-definition.yml" apply

Create a service

What are Harness services?

In Harness, services represent what you deploy to environments. You use services to configure variables, manifests, and artifacts. The Services dashboard provides service statistics like deployment frequency and failure rate. To learn more about services, go to Services overview.

- Use the following CLI command to create a service in your Harness project:

harness service --file "helm-guestbook/harnesscd-pipeline/nativehelm-service.yml" apply

Create a pipeline

What are Harness pipelines?

A pipeline is a comprehensive process encompassing integration, delivery, operations, testing, deployment, and monitoring. It can utilize CI for code building and testing, followed by CD for artifact deployment in production. A CD pipeline is a series of stages where each stage deploys a service to an environment. To learn more about CD pipeline basics, go to CD pipeline basics.

- Use the following CLI command to create the Native Helm rolling deployment pipeline:

harness pipeline --file "helm-guestbook/harnesscd-pipeline/nativehelm-rolling-pipeline.yml" apply

- You can switch to the VISUAL view, and verify that the pipeline stage and execution steps appear.
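Each strategy above follows the same four steps: create the environment, the infrastructure definition, the service, and the pipeline. The sketch below prints those four commands for a chosen strategy so that you can review them in one place. The `cd_apply_plan` function and its strategy keywords (`canary`, `bluegreen`, `rolling`, `nativehelm-rolling`) are hypothetical; the file paths are the ones used in the steps above.

```shell
# Print the four `apply` commands for one deployment strategy, in order:
# environment, infrastructure definition, service, pipeline.
# Nothing is executed.
cd_apply_plan() {
  dir="helm-guestbook/harnesscd-pipeline"
  case "$1" in
    canary|bluegreen|rolling)
      prefix=k8s
      pipeline="k8s-$1-pipeline.yml" ;;
    nativehelm-rolling)
      prefix=nativehelm
      pipeline="nativehelm-rolling-pipeline.yml" ;;
    *)
      echo "unknown strategy: $1" >&2
      return 1 ;;
  esac
  printf 'harness environment --file "%s/%s-environment.yml" apply\n' "$dir" "$prefix"
  printf 'harness infrastructure --file "%s/%s-infrastructure-definition.yml" apply\n' "$dir" "$prefix"
  printf 'harness service --file "%s/%s-service.yml" apply\n' "$dir" "$prefix"
  printf 'harness pipeline --file "%s/%s" apply\n' "$dir" "$pipeline"
}
```

For example, `cd_apply_plan canary` prints the commands for the canary pipeline. To execute them, run the function from the root of the cloned repository after logging in with the Harness CLI, and pipe its output to `sh`.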

UI

Before you begin

Make sure that you have met the following requirements:

- You have a GitHub Personal Access Token (PAT) with proper repository permissions. For more information, go to Managing your personal access token.

- You have set up a Kubernetes cluster. You can use your own Kubernetes cluster or K3D (recommended) for installing Harness Delegates and deploying a sample application in a local development environment. For more information, go to Delegate system and network requirements.

- You have installed Helm CLI.

- You have forked the harnesscd-example-apps repository through the GitHub web interface. For more details, go to Forking a GitHub repository.

Deploy your applications using a Helm template

- Log in to the Harness App.

- Select Projects in the top left corner of the UI, and then select Default Project.

Follow the steps below exactly as written, including the naming conventions, for the pipeline to run successfully.

Set up a delegate

What is the Harness Delegate?

The Harness Delegate is a service that runs in your local network or VPC to establish connections between the Harness Manager and various providers such as artifact registries, cloud platforms, etc. The delegate is installed in the target infrastructure, for example, a Kubernetes cluster, and performs operations including deployment and integration. Learn more about the delegate in the Delegate Overview.

- In PROJECT SETUP, select Delegates, and then select Delegates in the top right corner of the UI.

- Select New Delegate. For this tutorial, let's explore how to install the delegate using Helm.

- In Select where you want to install your Delegate, select Kubernetes.

- In Install your Delegate, select Helm Chart.

- Add the Harness Helm Chart repository to your local Helm registry using the following commands:

helm repo add harness-delegate https://app.harness.io/storage/harness-download/delegate-helm-chart/

helm repo update harness-delegate

- Copy the following command from the Delegate Installation wizard. DELEGATE_TOKEN, ACCOUNT_ID, and MANAGER_ENDPOINT are auto-populated values that you can obtain from the Delegate Installation wizard.

helm upgrade -i helm-delegate --namespace harness-delegate-ng --create-namespace \
  harness-delegate/harness-delegate-ng \
  --set delegateName=helm-delegate \
  --set accountId=ACCOUNT_ID \
  --set managerEndpoint=MANAGER_ENDPOINT \
  --set delegateDockerImage=harness/delegate:23.03.78904 \
  --set replicas=1 --set upgrader.enabled=false \
  --set delegateToken=DELEGATE_TOKEN

- Verify that the delegate is installed successfully and can connect to the Harness Manager.

- You can also go to Install Harness Delegate on Kubernetes or Docker for instructions on using a Terraform Helm Provider or Kubernetes Manifest.

Create a secret

What are Harness secrets?

Harness offers built-in secret management for encrypted storage of sensitive information. Secrets are decrypted when needed, and only the private network-connected Harness Delegate has access to the key management system. You can also integrate your own secret manager. To learn more about secrets in Harness, go to Harness Secret Manager Overview.

- In PROJECT SETUP, select Secrets.

- Select New Secret, and then select Text.

- In the Add new Encrypted Text dialog:

- In Secret Name, enter harness_gitpat.

- In Secret Value, enter your GitHub PAT.

- Select Save.

Create a connector

What are connectors?

Connectors in Harness enable integration with third-party tools, providing authentication and operations during pipeline runtime. For instance, a GitHub connector facilitates authentication and fetching files from a GitHub repository within pipeline stages. For more information, go to the connector how-tos.

- Create a GitHub connector.

- In PROJECT SETUP, select Connectors, and then select Create via YAML Builder.

- Copy and paste the contents of github-connector.yml.

- Replace GITHUB_USERNAME with your GitHub account username in the YAML wherever required. We assume that you have already forked the harnesscd-example-apps repository as mentioned in the Before you begin section.

- Select Save Changes, and verify that the new connector named harness_gitconnector is successfully created.

- Select Connection Test under Connectivity Status to ensure that the connection is successful.

- Create a Kubernetes connector.

- In PROJECT SETUP, select Connectors, and then select Create via YAML Builder.

- Copy and paste the contents of kubernetes-connector.yml.

- Replace DELEGATE_NAME with the installed delegate name. To obtain the delegate name, navigate to Default Project > PROJECT SETUP > Delegates.

- Select Save Changes, and verify that the new connector named harness_k8sconnector is successfully created.

- Select Connection Test under Connectivity Status to ensure that the connection is successful.
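If you keep local copies of the connector YAML files, the placeholder replacement described above can be scripted instead of edited by hand. The following is a minimal sketch: the function name fill_placeholder and the example values (octocat, helm-delegate) are hypothetical, and only the file names and placeholder tokens come from this tutorial.

```shell
#!/usr/bin/env bash
# Sketch: replace a placeholder token in a local copy of a connector YAML file.
# The result is printed to stdout, so the original file is left untouched.
fill_placeholder() {
  local file="$1" placeholder="$2" value="$3"
  # "|" is the sed delimiter so that values containing "/" do not break the expression.
  # Values containing "|" or "&" would need escaping first.
  sed "s|${placeholder}|${value}|g" "$file"
}

# Usage (example values are hypothetical):
#   fill_placeholder github-connector.yml GITHUB_USERNAME octocat
#   fill_placeholder kubernetes-connector.yml DELEGATE_NAME helm-delegate
```

Paste the printed output into the YAML Builder in place of the original file contents.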

Deployment Strategies

Helm is primarily focused on managing the release and versioning of application packages. Helm supports rollback through its release management features. When you deploy an application using Helm, it creates a release that represents a specific version of the application with a unique release name.
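To make the release and rollback model concrete, here is a minimal sketch that uses the Helm CLI directly, outside of Harness. The function names and the release name in the usage comment are hypothetical; the namespace defaults to default purely for illustration.

```shell
#!/usr/bin/env bash
# Sketch: inspect a Helm release's revisions and roll back to one of them.
# Release names and namespaces are placeholders, not names from this tutorial.

show_release_history() {
  local release="$1" namespace="${2:-default}"
  # Lists each revision of the release with its status and chart version.
  helm history "$release" -n "$namespace"
}

rollback_release() {
  local release="$1" revision="$2" namespace="${3:-default}"
  # --wait blocks until the rolled-back resources are ready.
  helm rollback "$release" "$revision" -n "$namespace" --wait
}

# Usage:
#   show_release_history my-release
#   rollback_release my-release 2
```

Each upgrade or rollback creates a new revision, which is why the history command is useful for choosing a rollback target.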

How Harness performs canary and blue-green deployments with Helm

- Harness integrates with Helm by utilizing Helm charts and releases. Helm charts define the application package and its dependencies, and Helm releases represent specific versions of the application.

- Harness allows you to define the application configuration, including Helm charts, values files, and any custom configurations required for your application.

- In Harness, you can specify the percentage of traffic to route to the new version in a canary deployment or define the conditions to switch traffic between the blue and green environments in a blue-green deployment.

- Harness orchestrates the deployment workflow, including the deployment of Helm charts, by interacting with the Helm API. It manages the release lifecycle, tracks revisions, and controls the rollout process based on the defined canary or blue-green strategy.

Harness adds a layer of functionality on top of Helm, providing a streamlined and automated approach to canary and blue-green deployments. By building on Helm's package management and release management features, Harness extends Helm to support canary and blue-green deployment strategies.

- Canary

- Blue Green

- K8s Rolling

- Native Helm Rolling

What are Canary deployments?

A Canary deployment updates nodes in a single environment gradually, allowing you to use gates between increments. Canary deployments allow incremental updates and ensure a controlled rollout process. For more information, go to When to use Canary deployments.

Create an environment

What are Harness environments?

Environments define the deployment location, categorized as Production or Pre-Production. Each environment includes infrastructure definitions for VMs, Kubernetes clusters, or other target infrastructures. To learn more about environments, go to Environments overview.

- In Default Project, select Environments.

- Select New Environment and toggle to the YAML view (next to VISUAL).

- Copy the contents of k8s-environment.yml and paste it in the YAML editor, and then select Save.

- In the Infrastructure Definitions tab, select Infrastructure Definition, and then select Edit YAML.

- Copy the contents of k8s-infrastructure-definition.yml and paste it in the YAML editor.

- Select Save and verify that the environment and infrastructure definition are created successfully.

Create a service

What are Harness services?

In Harness, services represent what you deploy to environments. You use services to configure variables, manifests, and artifacts. The Services dashboard provides service statistics like deployment frequency and failure rate. To learn more about services, go to Services overview.

- In Default Project, select Services.

- Select New Service, enter the name harnessguestbookdep, and then select Save.

- Toggle to the YAML view (next to VISUAL) under the Configuration tab, and then select Edit YAML.

- Copy the contents of k8s-service.yml and paste it in the YAML editor.

- Select Save and verify that the service harness_guestbook is successfully created.

Create a pipeline

What are Harness pipelines?

A pipeline is a comprehensive process encompassing integration, delivery, operations, testing, deployment, and monitoring. It can utilize CI for code building and testing, followed by CD for artifact deployment in production. A CD Pipeline is a series of stages where each stage deploys a service to an environment. To learn more about CD pipeline basics, go to CD pipeline basics.

- In Default Project, select Pipelines.

- Select New Pipeline.

- Enter the name guestbook_canary_pipeline.

- Select Inline to store the pipeline in Harness.

- Select Start and, in the Pipeline Studio, toggle to YAML to use the YAML editor.

- Select Edit YAML to enable edit mode, and copy the contents of k8s-canary-pipeline.yml and paste it in the YAML editor.

- Select Save to save the pipeline.

- You can switch to the VISUAL view, and verify that the pipeline stage and execution steps appear as shown below.

What are Blue Green deployments?

Blue Green deployments involve running two identical environments (stage and prod) simultaneously with different service versions. QA and UAT are performed on a new service version in the stage environment first. Next, traffic is shifted from the prod environment to stage, and the previous service version running on prod is scaled down. Some vendors also refer to Blue Green deployments as red/black deployments. For more information, go to When to use Blue Green deployments.

Create an environment

What are Harness environments?

Environments define the deployment location, categorized as Production or Pre-Production. Each environment includes infrastructure definitions for VMs, Kubernetes clusters, or other target infrastructures. To learn more about environments, go to Environments overview.

- In Default Project, select Environments.

- Select New Environment and toggle to the YAML view (next to VISUAL).

- Copy the contents of k8s-environment.yml and paste it in the YAML editor, and then select Save.

- In the Infrastructure Definitions tab, select Infrastructure Definition, and then select Edit YAML.

- Copy the contents of k8s-infrastructure-definition.yml and paste it in the YAML editor.

- Select Save and verify that the environment and infrastructure definition are created successfully.

Create a service

What are Harness services?

In Harness, services represent what you deploy to environments. You use services to configure variables, manifests, and artifacts. The Services dashboard provides service statistics like deployment frequency and failure rate. To learn more about services, go to Services overview.

- In Default Project, select Services.

- Select New Service, enter the name harnessguestbookdep, and then select Save.

- Toggle to the YAML view (next to VISUAL) under the Configuration tab, and then select Edit YAML.

- Copy the contents of k8s-service.yml and paste it in the YAML editor.

- Select Save and verify that the service harness_guestbook is successfully created.

Create a pipeline

What are Harness pipelines?

A pipeline is a comprehensive process encompassing integration, delivery, operations, testing, deployment, and monitoring. It can utilize CI for code building and testing, followed by CD for artifact deployment in production. A CD pipeline is a series of stages where each stage deploys a service to an environment. To learn more about CD pipeline basics, go to CD pipeline basics.

- In Default Project, select Pipelines.

- Select New Pipeline.

- Enter the name guestbook_bluegreen_pipeline.

- Select Inline to store the pipeline in Harness.

- Select Start and, in the Pipeline Studio, toggle to YAML to use the YAML editor.

- Select Edit YAML to enable edit mode, and copy the contents of k8s-bluegreen-pipeline.yml and paste it in the YAML editor.

- Select Save to save the pipeline.

- You can switch to the VISUAL view, and verify that the pipeline stage and execution steps appear as shown below.

What are Rolling deployments?

Rolling deployments incrementally add nodes with a new service version to a single environment, either one by one or in batches defined by a window size. Rolling deployments allow a controlled and gradual update process for the new service version. For more information, go to When to use rolling deployments.

Create an environment

What are Harness environments?

Environments define the deployment location and are categorized as Production or Pre-Production. Each environment includes infrastructure definitions for VMs, Kubernetes clusters, or other target infrastructures. To learn more about environments, go to Environments overview.

- In Default Project, select Environments.

- Select New Environment and toggle to the YAML view (next to VISUAL).

- Copy the contents of k8s-environment.yml, paste it in the YAML editor, and then select Save.

- On the Infrastructure Definitions tab, select Infrastructure Definition, and then select Edit YAML.

- Copy the contents of k8s-infrastructure-definition.yml and paste it in the YAML editor.

- Select Save and verify that the environment and infrastructure definition were created successfully.

Create a service

What are Harness services?

In Harness, services represent what you deploy to environments. You use services to configure variables, manifests, and artifacts. The Services dashboard provides service statistics like deployment frequency and failure rate. To learn more about services, go to Services overview.

- In Default Project, select Services.

- Select New Service, enter the name harnessguestbookdep, and then select Save.

- On the Configuration tab, toggle to the YAML view (next to VISUAL), and then select Edit YAML.

- Copy the contents of k8s-service.yml and paste it in the YAML editor.

- Select Save and verify that the service harness_guestbook was successfully created.

Create a pipeline

What are Harness pipelines?

A pipeline is a comprehensive process encompassing integration, delivery, operations, testing, deployment, and monitoring. A pipeline can utilize CI for code building and testing and CD for artifact deployment in production. A CD pipeline is a series of stages where each stage deploys a service to an environment. To learn more about CD pipeline basics, go to CD pipeline basics.

- In Default Project, select Pipelines.

- Select New Pipeline.

- Enter the name guestbook_k8s_rolling_pipeline.

- Select Inline to store the pipeline in Harness.

- Select Start and, in the Pipeline Studio, toggle to YAML to use the YAML editor.

- Select Edit YAML to enable edit mode, and copy the contents of k8s-rolling-pipeline.yml and paste it in the YAML editor.

- Select Save to save the pipeline.

- You can switch to the VISUAL view, and verify that the pipeline stage and execution steps appear as shown below.

What are Rolling deployments?

Rolling deployments incrementally add nodes in a single environment with a new service version, either one-by-one or in batches defined by a window size. Rolling deployments allow a controlled and gradual update process for the new service version. For more information, go to When to use rolling deployments.

Create an environment

What are Harness environments?

Environments define the deployment location, categorized as Production or Pre-Production. Each environment includes infrastructure definitions for VMs, Kubernetes clusters, or other target infrastructures. To learn more about environments, go to Environments overview.

- In Default Project, select Environments.

- Select New Environment and toggle to the YAML view (next to VISUAL).

- Copy the contents of nativehelm-environment.yml and paste it in the YAML editor, and then select Save.

- In the Infrastructure Definitions tab, select Infrastructure Definition, and then select Edit YAML.

- Copy the contents of nativehelm-infrastructure-definition.yml and paste it in the YAML editor.

- Select Save and verify that the environment and infrastructure definition are created successfully.

Create a service

What are Harness services?

In Harness, services represent what you deploy to environments. You use services to configure variables, manifests, and artifacts. The Services dashboard provides service statistics like deployment frequency and failure rate. To learn more about services, go to Services overview.

- In Default Project, select Services.

- Select New Service, enter the name harnessguestbook, and then select Save.

- Toggle to the YAML view (next to VISUAL) under the Configuration tab, and then select Edit YAML.

- Copy the contents of nativehelm-service.yml and paste it in the YAML editor.

- Select Save and verify that the service harness_guestbook is successfully created.

Create a pipeline

What are Harness pipelines?

A pipeline is a comprehensive process encompassing integration, delivery, operations, testing, deployment, and monitoring. It can utilize CI for code building and testing, followed by CD for artifact deployment in production. A CD pipeline is a series of stages where each stage deploys a service to an environment. To learn more about CD pipeline basics, go to CD pipeline basics.

- In Default Project, select Pipelines.

- Select New Pipeline.

- Enter the name guestbook_rolling_pipeline.

- Select Inline to store the pipeline in Harness.

- Select Start and, in the Pipeline Studio, toggle to YAML to use the YAML editor.

- Select Edit YAML to enable edit mode, and copy the contents of nativehelm-rolling-pipeline.yml and paste it in the YAML editor.

- Select Save to save the pipeline.

- You can switch to the VISUAL view, and verify that the pipeline stage and execution steps appear as shown below.

Run the pipeline

Finally, it's time to execute the pipeline.

-

Select Run, and then select Run Pipeline to initiate the deployment.

-

Observe the execution logs as Harness deploys the workload and checks for steady state.

-

After a successful execution, you can check the deployment on your Kubernetes cluster using the following command:

kubectl get pods -n default

-

To access the Guestbook application deployed using the Harness pipeline, port forward the service and access it at http://localhost:8080:

kubectl port-forward svc/<service-name> 8080:80
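The service-name placeholder in the port-forward command has to be replaced with the name of the deployed service. A minimal sketch for looking it up and then forwarding the port follows. The function names are hypothetical, and the default namespace and service port 80 are taken from the commands in this tutorial.

```shell
#!/usr/bin/env bash
# Sketch: find the Guestbook service, then forward a local port to it.
# ASSUMPTION: the service is in the "default" namespace and listens on port 80,
# matching the commands used in this tutorial.

list_services() {
  local namespace="${1:-default}"
  # Lists services so you can pick the name to forward to.
  kubectl get svc -n "$namespace"
}

forward_service() {
  local service="$1" namespace="${2:-default}" local_port="${3:-8080}"
  # Runs in the foreground; press Ctrl+C to stop forwarding.
  kubectl port-forward "svc/${service}" "${local_port}:80" -n "$namespace"
}

# Usage:
#   list_services
#   forward_service <service-name>
```

While forward_service is running, the application should be reachable at http://localhost:8080.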

How to deploy your own app by using Harness

You can integrate your own microservice application into this tutorial by following the steps outlined below:

-

Use the same delegate that you deployed as part of this tutorial. Alternatively, deploy a new delegate, but remember to use the new delegate's identifier when creating connectors.

-

If you intend to use a private Git repository that hosts your Helm chart, create a Harness secret containing the Git personal access token (PAT). Subsequently, create a new Git connector using this secret.

-

Create a Kubernetes connector if you plan to deploy your applications in a new Kubernetes environment. Make sure to update the infrastructure definition to reference this newly created Kubernetes connector.

-

Once you complete all the aforementioned steps, create a new Harness service that leverages the Helm chart for deploying applications.

-

Lastly, establish a new deployment pipeline and select the newly created infrastructure definition and service. Choose a deployment strategy that aligns with your microservice application's deployment needs.

-

Voila! You're now ready to deploy your own application by using Harness.

Congratulations!🎉

You've just learned how to use Harness CD to deploy an application using a Helm chart.

Keep learning about Harness CD. Add triggers to your pipeline that respond to Git events. For more information, go to Triggering pipelines.