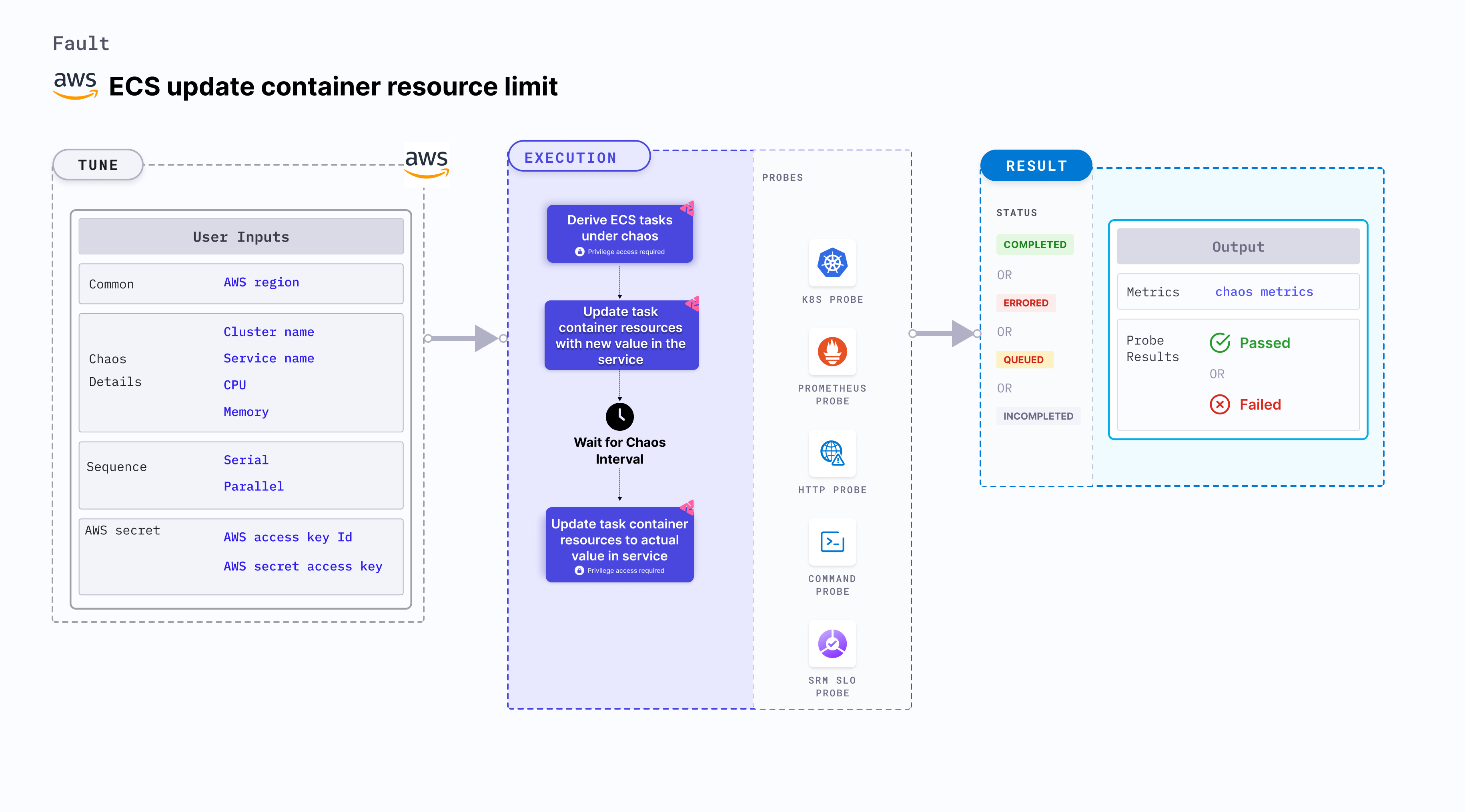

ECS update container resource limit

ECS update container resource limit allows you to modify the CPU and memory resources of containers in an Amazon ECS (Elastic Container Service) task. This experiment primarily targets ECS Fargate and doesn't depend on EC2 instances. It focuses on altering the state or resources of ECS containers without interacting with the containers directly.

Use cases

ECS update container resource limit:

- Determines the behavior of your ECS tasks when their resource limits are changed.

- Verifies the scalability and resilience of your ECS tasks under different resource configurations.

- Modifies the resource limits of a container by updating the task definition associated with the ECS service or task.

- Simulates scenarios where containers experience changes in their allocated resources, which may affect their performance or availability. For example, you can increase or decrease the CPU or memory limits of a container to test how your application adapts to changes in resource availability.

- Validates the behavior of your application and infrastructure during simulated resource limit changes, such as:

  - Testing how your application scales up or down in response to changes in CPU or memory limits.

  - Verifying the resilience of your system when containers are running with lower resource limits.

  - Evaluating the impact of changes in resource limits on the performance and availability of your application.

Modifying container resource limits with the ECS update container resource limit fault is an intentional disruption. Use it carefully in controlled environments, such as testing or staging, to avoid any negative impact on production workloads.

Prerequisites

- Kubernetes >= 1.17

- ECS cluster running with the desired tasks and containers and familiarity with ECS service update and deployment concepts.

- Create a Kubernetes secret that has the AWS access configuration (key) in the CHAOS_NAMESPACE. Below is a sample secret file:

apiVersion: v1
kind: Secret
metadata:
  name: cloud-secret
type: Opaque
stringData:
  cloud_config.yml: |-
    # Add the cloud AWS credentials respectively
    [default]
    aws_access_key_id = XXXXXXXXXXXXXXXXXXX
    aws_secret_access_key = XXXXXXXXXXXXXXX

HCE recommends that you use the same secret name, that is, cloud-secret. Otherwise, you need to update the AWS_SHARED_CREDENTIALS_FILE environment variable in the fault template with the new secret name, and you won't be able to use the default health check probes.

Below is an example AWS policy to execute the fault.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ecs:DescribeTasks",
        "ecs:DescribeServices",
        "ecs:DescribeTaskDefinition",
        "ecs:RegisterTaskDefinition",
        "ecs:UpdateService",
        "ecs:ListTasks",
        "ecs:DeregisterTaskDefinition"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "iam:PassRole"
      ],
      "Resource": "*"
    }
  ]
}

- Refer to AWS named profile for chaos to use a different profile for AWS faults.

- The ECS containers should be in a healthy state before and after introducing chaos.

- Refer to the common attributes and AWS-specific tunables to tune the tunables shared by all faults and the AWS-specific tunables.

- Refer to the superset permission/policy to execute all AWS faults.

Mandatory tunables

| Tunable | Description | Notes |
|---|---|---|
| CLUSTER_NAME | Name of the target ECS cluster. | For example, cluster-1. |
| SERVICE_NAME | Name of the ECS service under chaos. | For example, nginx-svc. |
| REGION | Region name of the target ECS cluster. | For example, us-east-1. |
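As a rough illustration, the mandatory tunables can be passed as environment variables in the ChaosEngine, following the same layout as the CPU and memory example in this document. The values are the placeholder examples from the table above. The engine name aws-nginx and the service account litmus-admin are assumptions that must match your own setup.

```yaml
# Sketch only: replace the placeholder values with those of your environment
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: aws-nginx                    # assumed engine name
spec:
  engineState: "active"
  chaosServiceAccount: litmus-admin  # assumed service account
  experiments:
  - name: ecs-update-container-resource-limit
    spec:
      components:
        env:
        # Target ECS cluster
        - name: CLUSTER_NAME
          value: 'cluster-1'
        # ECS service under chaos
        - name: SERVICE_NAME
          value: 'nginx-svc'
        # AWS region of the target cluster
        - name: REGION
          value: 'us-east-1'
```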

Optional tunables

| Tunable | Description | Notes |
|---|---|---|
| TOTAL_CHAOS_DURATION | Duration for which chaos is injected into the target resource (in seconds). | Default: 30 s. For more information, go to duration of the chaos. |
| CHAOS_INTERVAL | Interval between successive chaos iterations (in seconds). | Default: 30 s. For more information, go to chaos interval. |
| AWS_SHARED_CREDENTIALS_FILE | Path to the AWS secret credentials. | Default: /tmp/cloud_config.yml. |
| CPU | CPU resource limit to set on the target ECS container. | Default: 256. For more information, go to CPU limit. |
| MEMORY | Memory resource limit to set on the target ECS container. | Default: 256. For more information, go to memory limit. |
| RAMP_TIME | Period to wait before and after injecting chaos (in seconds). | For example, 30 s. For more information, go to ramp time. |
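The optional tunables are set the same way, as entries in the env list of the fault. The fragment below is a minimal sketch. It assumes that time-based values are given as plain numbers of seconds, as in the TOTAL_CHAOS_DURATION example in this document, and the specific values are illustrative only.

```yaml
# Fragment of the env list under spec.experiments[].spec.components in a ChaosEngine
env:
# Inject chaos for 60 seconds in total
- name: TOTAL_CHAOS_DURATION
  value: '60'
# Interval between chaos iterations, in seconds
- name: CHAOS_INTERVAL
  value: '30'
# Wait 30 seconds before and after injecting chaos
- name: RAMP_TIME
  value: '30'
# Resource limits to apply to the target container (the table defaults)
- name: CPU
  value: '256'
- name: MEMORY
  value: '256'
```

Any tunable left out of the list falls back to the default given in the table.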

CPU and memory resource limit

The CPU and memory limits to apply to the task containers. Tune them by using the CPU and MEMORY environment variables.

The following YAML snippet illustrates the use of these environment variables:

# Set the CPU and memory resource limits for the target container
apiVersion: litmuschaos.io/v1alpha1
kind: ChaosEngine
metadata:
  name: aws-nginx
spec:
  engineState: "active"
  annotationCheck: "false"
  chaosServiceAccount: litmus-admin
  experiments:
  - name: ecs-update-container-resource-limit
    spec:
      components:
        env:
        - name: CPU
          value: '256'
        - name: MEMORY
          value: '256'
        - name: REGION
          value: 'us-east-2'
        - name: TOTAL_CHAOS_DURATION
          value: '60'