Set up a macOS VM build infrastructure with Anka Registry

Harness recommends Harness Cloud for macOS builds.

This recommendation is due to licensing requirements and the complexity of managing macOS VMs with Anka virtualization.

With Harness Cloud, your builds run on Harness-managed runners, and you can start running builds in minutes.

This topic describes how to use AWS EC2 instances to run a macOS build farm with Anka's virtualization platform for macOS. This configuration uses AWS EC2 instances and dedicated hosts to host a Harness Delegate and Runner, as well as the Anka Controller, Registry, and Virtualization. Working through the Anka controller and registry, the Harness Runner creates VMs dynamically in response to CI build requests.

For more information about Anka and Mac on EC2, go to the Anka documentation on What is the Anka Build Cloud, Setting up the Controller and Registry on Linux/Docker, and Anka on AWS EC2 Macs - Community AMIs.

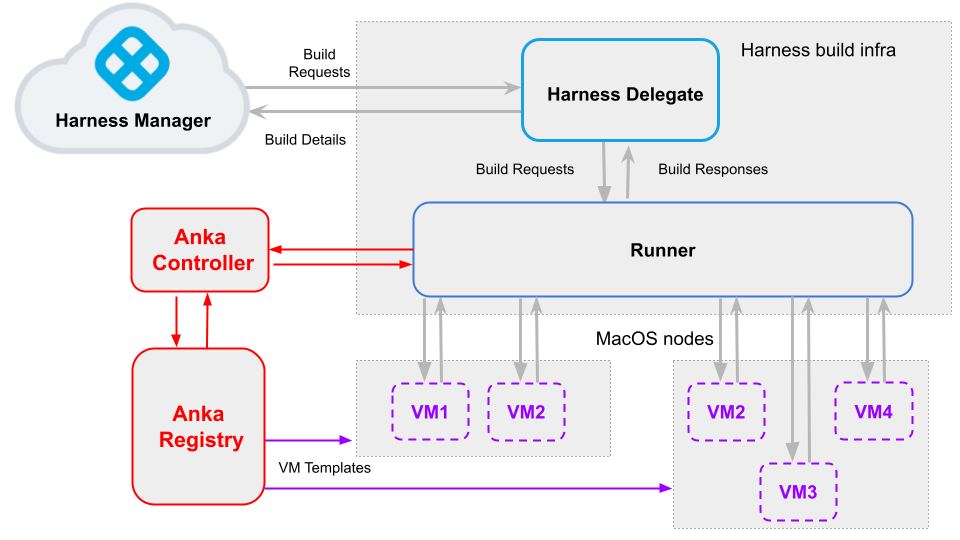

The following diagram illustrates how Harness CI and Anka work together. The Harness Delegate communicates directly with your Harness instance. The VM runner maintains a pool of VMs for running builds. When the delegate receives a build request, it forwards the request to the runner, which runs the build on an available VM. The Anka registry and controller orchestrate and maintain the Mac VMs. Once you set up the Harness and Anka components, you can scale up your build farm with additional templates, build nodes, and VMs.

This is one of several build infrastructure options. For example, you can also run Mac builds on Harness Cloud build infrastructure.

Requirements

This configuration requires:

- An Anka Build license.

- Familiarity with AWS EC2, Anka, and the macOS ecosystem.

- Familiarity with the Harness Platform and CI pipeline creation.

- Familiarity with Harness Delegates, VM runners, and pools.

Set up the Anka Controller and Registry

Install the Anka Controller and Registry on your Linux-based EC2 instance. For more information about this setup and getting acquainted with the Anka Controller and Registry, go to the Anka documentation on Setting up the Controller and Registry on Linux/Docker.

- In the AWS EC2 Console, launch a Linux-based instance. Make sure this instance has enough resources to support the Anka Controller and Registry, at least 10GB. Harness recommends a size of t2.large or greater or storage of 100 GiB.

- SSH into your Linux-based EC2 instance.

- Install Docker.

- Install Docker Compose.

- Follow the Docker Compose post-installation setup so you can run `docker-compose` without `sudo`.

- Download and extract the Anka Docker Package.

- Configure Anka settings in docker-compose.yml.

- Run `docker-compose up -d` to start the containers.

- Run `docker ps -a` and verify the `anka-controller` and `anka-registry` containers are running.

- Optionally, you can enable token authentication for the Controller and Registry.
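The container check in the last steps can also be scripted. The following is a minimal sketch, not part of the Anka or Harness tooling: the function names are hypothetical, and it assumes the container names contain `anka-controller` and `anka-registry` as substrings (Docker Compose usually adds a project prefix and an index suffix).

```python
import subprocess

# Name fragments expected among the running containers (taken from the steps above).
REQUIRED = ("anka-controller", "anka-registry")


def missing_containers(names_output, required=REQUIRED):
    """Return the required name fragments not found in a list of container names.

    names_output is text with one running container name per line.
    """
    running = [line.strip() for line in names_output.splitlines() if line.strip()]
    return [req for req in required if not any(req in name for name in running)]


def running_container_names():
    """Ask Docker for the names of running containers, one per line."""
    result = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


# On the EC2 instance you could call:
#   missing_containers(running_container_names())
# An empty list means both containers are running.
```

Unlike `docker ps -a`, this sketch lists only running containers, so a container that exited after startup is reported as missing.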

Set up Anka Virtualization

After setting up the Anka Controller and Registry, you can set up Anka Virtualization on a Mac-based EC2 instance. For more information, go to Anka on AWS EC2 Macs. These instructions use an Anka community AMI.

- In AWS EC2, allocate a dedicated host. For Instance family, select mac-m2.

- Launch an instance on the dedicated host, configured as follows:

  - Application and OS Images: Select Browse AMIs and select an Anka community AMI. If you don't want to use a preconfigured AMI, you can use a different macOS AMI, but you'll need to take additional steps to install Anka Virtualization on the instance.

  - Instance type: Select a mac-m2 instance.

  - Key pair: Select an existing key pair or create one.

  - Advanced details - Tenancy: Select Dedicated host - Launch this instance on a dedicated host.

  - Advanced details - Target host by: Select Host ID.

  - Advanced details - Tenancy host ID: Select your mac-m2 dedicated host.

  - User data: Refer to the Anka documentation on User data.

  - Network settings: Allow HTTP Traffic from the Internet might be required to use virtualization platforms, such as VNC viewer.

  The first three minutes of this Veertu YouTube video briefly demonstrate the Anka community AMI setup process, including details about User data.

- Open a terminal and run `anka version` to check if Anka Virtualization is installed. If it is not, install Anka Virtualization.

- Make sure the environment is updated and necessary packages are installed. For example, you can run `softwareupdate -i -a`.

Create Anka VM templates

- In your Anka Virtualization EC2 instance, create one or more Anka VMs.

  Anka VMs created with `anka create` are VM templates. You can use these to create VMs on other Anka Virtualization nodes you launch in EC2.

- Set up port forwarding on each VM template to enable connectivity between the Harness Runner and your Anka VMs. For each VM template, stop the VM and run the following command:

  `anka modify $VM_NAME add port-forwarding service -g 9079`

  For more information about `anka modify`, go to the Anka documentation on Modifying your VM.
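If you maintain several VM templates, you can generate the port-forwarding command for each one instead of typing it repeatedly. This is a small illustrative sketch: the helper name and the template names are hypothetical, and it only prints the commands so you can review them before running them on the Anka node.

```python
import shlex


def port_forward_commands(vm_names, guest_port=9079):
    """Build one `anka modify` argument list per template, mirroring the command above."""
    return [
        ["anka", "modify", name, "add", "port-forwarding", "service", "-g", str(guest_port)]
        for name in vm_names
    ]


# Hypothetical template names; replace them with the names of your own templates.
for argv in port_forward_commands(["macos-base", "macos-xcode"]):
    print(shlex.join(argv))
```

Remember that each VM template must be stopped before you run its command.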

Install the Harness Delegate and Runner

Install a Harness Docker Delegate and Runner on the Linux-based AWS EC2 instance where the Anka Controller and Registry are running.

Configure pool.yml

The pool.yml file defines the VM pool size, the Anka Registry host location, and other information used to run your builds on Anka VMs. A pool is a group of instantiated VMs that are immediately available to run CI pipelines.

- SSH into your Linux-based EC2 instance where your Anka Controller and Registry are running.

- Create a `/runner` folder and `cd` into it.

      mkdir /runner
      cd /runner

- In the `/runner` folder, create a `pool.yml` file.

- Modify `pool.yml` as described in the following example and the Pool settings reference. You can configure multiple pools in `pool.yml`, but you can only specify one pool (by `name`) in each Build (CI) stage in Harness.

    version: "1"
    instances:
      - name: anka-build
        default: true
        type: ankabuild
        pool: 2
        limit: 10
        platform:
          os: darwin
          arch: amd64
        spec:
          account:
            username: anka
            password: admin
          vm_id: xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxx
          registry_url: https://anka-controller.myorg.com:8089
          tag: 1.0.6
          auth_token: sometoken
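Before starting the runner, it can help to sanity-check the values you put in pool.yml. The sketch below is illustrative only: it works on a plain Python dictionary that mirrors one entry under `instances` in the example above, and the set of keys it treats as required is an assumption for this example, not a rule enforced by the runner.

```python
def check_pool(instance):
    """Return a list of problems found in one pool definition (empty if none)."""
    problems = []
    # Assumed minimum set of top-level settings for this sketch.
    for key in ("name", "type", "pool", "limit", "spec"):
        if key not in instance:
            problems.append(f"missing setting: {key}")
    pool, limit = instance.get("pool"), instance.get("limit")
    # The warm pool cannot usefully be larger than the maximum number of VMs.
    if isinstance(pool, int) and isinstance(limit, int) and pool > limit:
        problems.append(f"pool ({pool}) exceeds limit ({limit})")
    spec = instance.get("spec") or {}
    # Assumed minimum set of Anka settings for this sketch.
    for key in ("vm_id", "registry_url"):
        if not spec.get(key):
            problems.append(f"missing spec setting: {key}")
    return problems


# Dictionary form of the example pool above.
example = {
    "name": "anka-build",
    "default": True,
    "type": "ankabuild",
    "pool": 2,
    "limit": 10,
    "platform": {"os": "darwin", "arch": "amd64"},
    "spec": {
        "account": {"username": "anka", "password": "admin"},
        "vm_id": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxx",
        "registry_url": "https://anka-controller.myorg.com:8089",
        "tag": "1.0.6",
        "auth_token": "sometoken",
    },
}

print(check_pool(example))  # []
```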

Pool settings reference

You can configure the following settings in your pool.yml file. You can also learn more in the Drone documentation for the Pool File and Anka drivers.

User Data Example

Provide cloud-init data in either user_data_path or user_data if you need custom configuration. Refer to the user data examples for supported runtime environments.

Below is a sample pool.yml for GCP with user_data configuration:

    version: "1"
    instances:
      - name: linux-amd64
        type: google
        pool: 1
        limit: 10
        platform:
          os: linux
          arch: amd64
        spec:
          account:
            project_id: YOUR_PROJECT_ID
            json_path: PATH_TO_SERVICE_ACCOUNT_JSON
          image: IMAGE_NAME_OR_PATH
          machine_type: e2-medium
          zones:
            - YOUR_GCP_ZONE # e.g., us-central1-a
          disk:
            size: 100
          user_data: |
            #cloud-config
            {{ if and (.IsHosted) (eq .Platform.Arch "amd64") }}
            packages: []
            {{ else }}
            apt:
              sources:
                docker.list:
                  source: deb [arch={{ .Platform.Arch }}] https://download.docker.com/linux/ubuntu $RELEASE stable
                  keyid: 9DC858229FC7DD38854AE2D88D81803C0EBFCD88
            packages: []
            {{ end }}
            write_files:
              - path: {{ .CaCertPath }}
                path: {{ .CertPath }}
                permissions: '0600'
                encoding: b64
                content: {{ .TLSCert | base64 }}
              - path: {{ .KeyPath }}
            runcmd:
              - 'set -x'
              - |
                if .ShouldUseGoogleDNS; then
                  echo "DNS=8.8.8.8 8.8.4.4\nFallbackDNS=1.1.1.1 1.0.0.1\nDomains=~." | sudo tee -a /etc/systemd/resolved.conf
                  systemctl restart systemd-resolved
                fi
              - ufw allow 9079

| Setting | Type | Description |
|---|---|---|
| name | String | Unique identifier of the pool. You will need to specify this pool name in Harness when you set up the CI stage build infrastructure. |
| pool | Integer | Warm pool size number. Denotes the number of VMs in ready state to be used by the runner. |
| limit | Integer | Maximum number of VMs the runner can create at any time. pool indicates the number of warm VMs, and the runner can create more VMs on demand up to the limit. For example, assume pool: 3 and limit: 10. If the runner gets a request for 5 VMs, it immediately provisions the 3 warm VMs (from pool) and provisions 2 more, which are not warm and take time to initialize. |
| username, password | Strings | User name and password of the Anka VM in the Anka Virtualization machine (the mac-m2 EC2 machine). These are set when you run anka create. |
| vm_id | String - ID | ID of the Anka VM. |
| registry_url | String - URL | Registry/Controller URL and port. This URL must be reachable by your Anka nodes. You can configure ANKA_ANKA_REGISTRY in your Controller's docker-compose.yml. |
| tag | String - Tag | Anka VM template tag. |
| auth_token | String - Token | Required if you enabled token authentication for the Controller and Registry. |

File System Access

In harness-docker-runner, the following default mount paths are used on Mac. Harness requires write access to these locations because it creates directories inside them.

If write access is blocked, runs will fail with a 500 error similar to:

failed with code: 500, message: { "error_msg": "failed to create directory for host volume path: /addon: mkdir /addon: read-only file system" }

Default mount paths:

/tmp/addon/tmp/harness/private/tmp/harness (only when the `CI_MOUNT_PATH_ENABLED_MAC` feature flag is enabled)

Harness also needs write access to any declared shared paths that your pipelines or CI Stages use.
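To find out ahead of time whether a location is writable, you can try creating a temporary file in it. This is a rough sketch with a hypothetical helper name; pass in the mount paths and shared paths that apply to your setup. A path that does not exist is reported as blocked, because the sketch never creates directories itself.

```python
import tempfile


def unwritable_paths(paths):
    """Return the paths in which a temporary file cannot be created."""
    blocked = []
    for path in paths:
        try:
            # The temporary file is deleted automatically when the block exits.
            with tempfile.TemporaryFile(dir=path):
                pass
        except OSError:
            # Covers missing directories, permission errors, and read-only file systems.
            blocked.append(path)
    return blocked


# Example call on the Mac host (the path is one of the mount paths listed above):
#   unwritable_paths(["/tmp/harness"])
```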

Start the runner

SSH into your Linux-based EC2 instance and run the following command to start the runner:

docker run -v /runner:/runner -p 3000:3000 drone/drone-runner-aws:latest delegate --pool /runner/pool.yml

This command mounts the volume to the Docker runner container and provides access to pool.yml, which is used to authenticate with AWS and pass the spec for the pool VMs to the container. It also exposes port 3000.

You might need to modify the command to use sudo and specify the runner directory path, for example:

sudo docker run -v ./runner:/runner -p 3000:3000 drone/drone-runner-aws:latest delegate --pool /runner/pool.yml

When a build starts, the delegate receives a request for VMs on which to run the build. The delegate forwards the request to the runner, which then allocates VMs from the warm pool (specified by pool in pool.yml) and, if necessary, spins up additional VMs (up to the limit specified in pool.yml).
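The split between warm and on-demand VMs can be illustrated with a few lines of arithmetic. This is a simplified model, not the runner's actual scheduling code: it assumes no VMs are already in use and that warm VMs count toward `limit`.

```python
def plan_vms(requested, pool, limit):
    """Return (warm, on_demand): VMs served from the warm pool and VMs created on demand."""
    warm = min(requested, pool)
    # On-demand VMs are capped so that pool + on-demand never exceeds the limit.
    on_demand = min(requested - warm, max(limit - pool, 0))
    return warm, on_demand


print(plan_vms(5, 3, 10))   # (3, 2): three warm VMs plus two created on demand
print(plan_vms(12, 2, 10))  # (2, 8): the request is capped by the limit of 10
```

The first call matches the `pool: 3`, `limit: 10` example in the pool settings reference.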

The runner includes lite engine, and the lite engine process triggers VM startup through a cloud init script. This script downloads and installs Scoop package manager, Git, the Drone plugin, and lite engine on the build VMs. The plugin and lite engine are downloaded from GitHub releases. Scoop is downloaded from get.scoop.sh which redirects to raw.githubusercontent.com.

Firewall restrictions can prevent the script from downloading these dependencies. Make sure your images don't have firewall or anti-malware restrictions that are interfering with downloading the dependencies.

Install the delegate

- In Harness, go to Account Settings, select Account Resources, and then select Delegates.

  You can also create delegates at the project scope. In your Harness project, select Project Settings, and then select Delegates.

- Select New Delegate or Install Delegate.

- Select Docker.

- Enter a Delegate Name.

- Copy the delegate install command and paste it in a text editor.

- To the first line, add `--network host`, and, if required, `sudo`. For example: `sudo docker run --cpus=1 --memory=2g --network host`

- SSH into your Linux-based EC2 instance and run the delegate install command.

The delegate install command uses the default authentication token for your Harness account. If you want to use a different token, you can create a token and then specify it in the delegate install command:

- In Harness, go to Account Settings, then Account Resources, and then select Delegates.

- Select Tokens in the header, and then select New Token.

- Enter a token name and select Apply to generate a token.

- Copy the token and paste it in the value for `DELEGATE_TOKEN`.

For more information about delegates and delegate installation, go to Delegate installation overview.

Verify connectivity

-

Verify that the delegate and runner containers are running correctly. You might need to wait a few minutes for both processes to start. You can run the following commands to check the process status:

docker ps

docker logs DELEGATE_CONTAINER_ID

docker logs RUNNER_CONTAINER_ID -

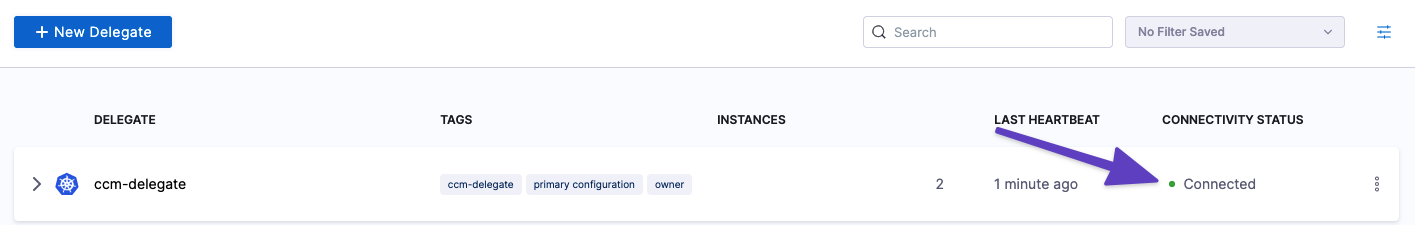

In the Harness UI, verify that the delegate appears in the delegates list. It might take two or three minutes for the delegates list to update. Make sure the Connectivity Status is Connected. If the Connectivity Status is Not Connected, make sure the Docker host can connect to

https://app.harness.io.

The delegate and runner are now installed, registered, and connected.
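If the delegate shows as Not Connected, a quick TCP check from the Docker host can help separate network problems from configuration problems. The following is a minimal sketch using only the Python standard library. The helper name is hypothetical, and a successful TCP connection does not prove that TLS or authentication works.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Example calls on the Docker host:
#   can_connect("app.harness.io", 443)  # outbound HTTPS to Harness
#   can_connect("localhost", 3000)      # runner port exposed by the docker run command
```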

Specify build infrastructure

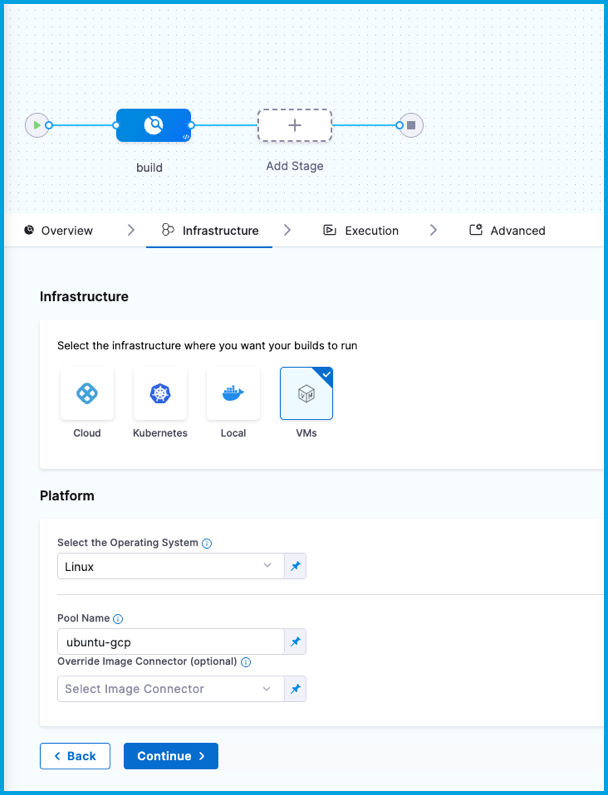

Configure your pipeline's Build (CI) stage to use your Anka VMs as build infrastructure.

Visual

- In Harness, go to the CI pipeline where you want to use the Anka VM build infrastructure.

- Select the Build stage, and then select the Infrastructure tab.

- Select VMs.

- For Operating System, select MacOS.

- Enter the Pool Name from your pool.yml.

- Save the pipeline.

YAML

    - stage:
        name: build
        identifier: build
        description: ""
        type: CI
        spec:
          cloneCodebase: true
          infrastructure:
            type: VM
            spec:
              type: Pool
              spec:
                poolName: POOL_NAME_FROM_POOL_YML
                os: MacOS
          execution:
            steps:
              ...

Delegate selectors with self-managed VM build infrastructures

Currently, delegate selectors for self-managed VM build infrastructures are behind the feature flag CI_ENABLE_VM_DELEGATE_SELECTOR. Contact Harness Support to enable the feature.

Although you must install a delegate to use a self-managed VM build infrastructure, you can choose to use a different delegate for executions and cleanups in individual pipelines or stages. To do this, use pipeline-level delegate selectors or stage-level delegate selectors.

Delegate selections take precedence in the following order:

- Stage

- Pipeline

- Platform (build machine delegate)

This means that if delegate selectors are present at the pipeline and stage levels, then these selections override the platform delegate, which is the delegate that you installed on your primary VM with the runner. If a stage has a stage-level delegate selector, then it uses that delegate. Stages that don't have stage-level delegate selectors use the pipeline-level selector, if present, or the platform delegate.

For example, assume you have a pipeline with three stages called alpha, beta, and gamma. If you specify a stage-level delegate selector on alpha and you don't specify a pipeline-level delegate selector, then alpha uses the stage-level delegate, and the other stages (beta and gamma) use the platform delegate.
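The precedence order can be expressed as a simple fallback chain. The sketch below models the alpha, beta, and gamma example. The function name and the delegate names are hypothetical, and `None` stands for "no selector specified".

```python
def effective_delegate(stage_selector, pipeline_selector, platform_delegate):
    """Pick the delegate in precedence order: stage, then pipeline, then platform."""
    return stage_selector or pipeline_selector or platform_delegate


# Only alpha has a stage-level selector, and there is no pipeline-level selector.
stage_selectors = {"alpha": "stage-delegate", "beta": None, "gamma": None}

for stage, selector in stage_selectors.items():
    print(stage, "->", effective_delegate(selector, None, "platform-delegate"))
# alpha -> stage-delegate
# beta -> platform-delegate
# gamma -> platform-delegate
```

If you add a pipeline-level selector, beta and gamma switch to it while alpha keeps its stage-level delegate.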

Early access feature: Use delegate selectors for codebase tasks

Currently, delegate selectors for CI codebase tasks are behind the feature flag CI_CODEBASE_SELECTOR. Contact Harness Support to enable the feature.

By default, delegate selectors aren't applied to delegate-related CI codebase tasks.

With this feature flag enabled, Harness uses your delegate selectors for delegate-related codebase tasks. Delegate selection for these tasks takes precedence in order of pipeline selectors over connector selectors.

Mount Custom Certificates on Windows Build VMs

This configuration applies to Harness CI VM Runners used for Windows build VMs.

You can make custom CA certificates available inside all build step containers (including the drone/git clone container) on Windows build VMs by setting the DRONE_RUNNER_VOLUMES environment variable when starting the VM runner.

Example

    docker run -d \
      -v /runner:/runner \
      -p 3000:3000 \
      -e DRONE_RUNNER_VOLUMES=/custom-cert:/git/mingw64/ssl/certs \
      <your_registry_domain>/drone/drone-runner-aws:latest \
      delegate --pool /runner/pool.yml

Notes

- The certificate file inside `/custom-cert` must be named `ca-bundle.crt`.

- The `drone-git` container on Windows expects the certificate to be available at `C:\git\mingw64\ssl\certs\ca-bundle.crt`.

- The `DRONE_RUNNER_VOLUMES` path must use Linux-style syntax: use `/` as the path separator and omit the drive letter (`C:`), even though the build VM runs Windows.
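The path conversion described in the last note can be sketched as follows. The helper name is hypothetical, and it only builds the string to pass as `DRONE_RUNNER_VOLUMES`; it does not start the runner.

```python
from pathlib import PureWindowsPath


def runner_volume(host_dir, windows_target):
    """Build a DRONE_RUNNER_VOLUMES value from a host directory and a Windows target directory.

    The drive letter is dropped and backslashes become forward slashes.
    """
    target = PureWindowsPath(windows_target)
    # When the path has an anchor such as "C:\", it is the first element of parts.
    parts = target.parts[1:] if target.anchor else target.parts
    return f"{host_dir}:/" + "/".join(parts)


print(runner_volume("/custom-cert", r"C:\git\mingw64\ssl\certs"))
# /custom-cert:/git/mingw64/ssl/certs
```

The printed value matches the one used in the example command.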

Add more Mac nodes and VM templates to the Anka Registry

You can launch more Anka Virtualization nodes on your EC2 dedicated host and create more VM templates as needed. After you join a Virtualization node to the Controller cluster, it can pull VM templates from the registry and use them to create VMs.

Troubleshoot self-managed VM build infrastructure

Go to the CI Knowledge Base for questions and issues related to self-managed VM build infrastructures, including:

- Can I use the same build VM for multiple CI stages?

- Why are build VMs running when there are no active builds?

- How do I specify the disk size for a Windows instance in pool.yml?

- Clone codebase fails due to missing plugin

- Can I limit memory and CPU for Run Tests steps running on self-managed VM build infrastructure?