1. Harness Deployments Onboarding Path

This topic describes the different phases and steps involved in onboarding with Harness CD. Follow these steps to ensure that you have all the settings and resources required for moving forward with your own deployments.

If you are only looking for tutorials, go to [Continuous Delivery & GitOps tutorial](/docs/continuous-delivery/get-started/tutorials/cd-gitops-tutorials).

Overview

This section lists the major onboarding phases and provides links to more details.

Steps marked with an asterisk (*) have YAML examples that you can use to set up the step.

Phase 1: Initial setup

| Step | Details | Documentation Link | Demo Video |
|---|---|---|---|
| Account and entities setup | Create organization, project, invite initial users | Create organizations and projects | |
| Installing delegates | Kubernetes, Docker | Install Harness Delegate on Kubernetes or Docker | Watch Video |
| Installing secret managers, migrating existing secrets | AWS KMS, HashiCorp, Azure Key Vault, Google KMS | Add a secret manager | |

Phase 2: Deploy to QA

| Step | Details | Documentation Link | Demo Video |
|---|---|---|---|
| Service Definition and Variables * | Runtime Inputs or expressions | Create services | Watch Video |
| Environments * | Service Override | Create environments | Watch Video |
| Adding artifact sources | Docker Registry, GCR, GCS, ACR, Azure DevOps Artifacts, ECR, etc. | CD artifact sources | |
| Simple pipelines * | Stage, service, environment, infrastructure | CD pipeline modeling overview | Watch Video |

Phase 3: Deploy to staging

| Step | Details | Documentation Link | Demo Video |
|---|---|---|---|
| Deployment strategies * | Rollback, Blue Green, Canary, Kubernetes Apply, Kubernetes Scale | Deployment concepts and strategies | Watch Video |
| Triggers and input sets * | SCM triggers, artifact triggers | Pipeline triggers | Watch Video |

Phase 4: Deploy to production

| Step | Details | Documentation Link | Demo Video |
|---|---|---|---|
| Approvals and governance (OPA) * | Harness Approval, Jira Approval | Approvals | Watch Video |
| RBAC | CoE, Distributed Center of DevOps | Role-based access control (RBAC) in Harness | Watch Video |
| Continuous Verification | Auto, Rolling Update, Canary, Blue Green, Load Test | Harness Continuous Verification (CV) overview | |

Phase 5: SSO-enabled DevOps with Infrastructure as Code (IaC)

| Step | Details | Documentation Link | Demo Video |
|---|---|---|---|
| SSO | SAML SSO with Harness, Okta, OneLogin, Keycloak, etc. | Single Sign-On (SSO) with SAML | |
| Templatization and automation * | Templates, Terraform Automation | Templates overview | Watch Video |

Phase 1: Initial setup

Step 1. Account and entities setup

Harness organizations (orgs) allow you to group projects that share the same goal, for example, all projects for a business unit or division.

A Harness project is a group of Harness modules and their pipelines. For example, a project might have a Harness CI pipeline to build code and push an image to a repo and a Harness CD pipeline to pull and deploy that image to a cloud platform.

Create a Harness org

- In Harness, in Account Settings, select Organizations.

- Select New Organization. The new organization settings appear.

- In Name, enter a name for your organization.

- Select Save and Continue.

Invite collaborators

- In Invite People to Collaborate, enter a user's name and select it.

- In Role, select the role the member will have in this org, such as Organization Admin or Organization Member.

- Select Add.

- Members receive invites via their email addresses.

- You can always invite more members from within the org later.

- Select Finish. The org is added to the list in Account Settings > Organizations.

Create a project

- In Harness, go to Home and select Projects.

- Select Project.

- Name the project, and select a color. The ID of the project is generated automatically.

- In Organization, select the org you created.

- Add a description and tags, and then select Save and Continue.

- In Invite Collaborators, type a member's name and select it.

- Select a role for the member, and select Add.

- Select Save and Continue to create the project.

Step 2. Installing delegates

The Harness Delegate is a service you run in your local network or VPC to connect your artifacts, infrastructure, collaboration, verification, and other providers, with Harness Manager.

The first time you connect Harness to a third-party resource, the Harness Delegate is installed in your target infrastructure, for example, a Kubernetes cluster. After the delegate is installed and registers with Harness, Harness can connect to third-party resources. The delegate performs all operations, including deployment and integration.

For more information, go to Install Harness Delegate on Kubernetes or Docker.
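For the Kubernetes option, a delegate is commonly installed with Helm. The values file below is only a sketch: it assumes the `harness-delegate-ng` Helm chart and the value keys commonly shown in its install command, and every value is a placeholder. Check the keys against the chart version you actually install.

```yaml
# values.yaml: sketch for the harness-delegate-ng Helm chart (chart name and keys are assumptions)
delegateName: onboarding-delegate                  # hypothetical delegate name
accountId: YOUR_HARNESS_ACCOUNT_ID                 # placeholder
delegateToken: YOUR_DELEGATE_TOKEN                 # placeholder; handle as a secret
managerEndpoint: https://app.harness.io            # assumption: varies by Harness cluster or self-managed install
delegateDockerImage: harness/delegate:yy.mm.verno  # placeholder image tag
replicas: 1
upgrader:
  enabled: true                                    # assumption: enables automatic delegate upgrades
```

Keeping these values in a file rather than on the command line makes the install repeatable, but the token should come from a secret store rather than being committed to source control.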

Step 3. Installing secret managers, migrating existing secrets

Harness includes a built-in secret management feature that enables you to store encrypted secrets, such as access keys, and use them in your Harness connectors and pipelines. For more information, go to Add a secret manager.
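As a small illustration of using a stored secret in a pipeline, the fragment below follows the `variables` layout used in the pipeline examples later in this topic and resolves the value with the `<+secrets.getValue(...)>` expression. The variable name and secret identifier are hypothetical.

```yaml
variables:
  - name: dbPassword                              # hypothetical variable name
    type: String
    description: ""
    required: false
    value: <+secrets.getValue("db_password")>     # hypothetical secret identifier
```

Because the expression is resolved at runtime, the secret value itself never appears in the pipeline YAML.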

Looking for specific secret managers? Go to:

- Add an AWS KMS Secret Manager

- Add a HashiCorp Vault Secret Manager

- Add an Azure Key Vault Secret Manager

- Add Google KMS as a Harness Secret Manager

- Add an AWS Secrets Manager

Phase 2: Deploy to QA

Step 1. Service and environments

Services

Services represent your microservices and other workloads. Each service contains a Service Definition that defines your deployment artifacts, manifests or specifications, configuration files, and service-specific variables. For more information, go to Create a service.

Services are often configured using runtime inputs or expressions, so you can change service settings for different deployment scenarios at pipeline runtime. To use services with runtime inputs and expressions, go to Using services with inputs and expressions. Below are some example Kubernetes and ECS Fargate Harness services:

K8s service:

service:
  name: k8s-svc
  identifier: k8ssvc
  serviceDefinition:
    spec:
      release:
        name: release-<+INFRA_KEY_SHORT_ID>
      manifests:
        - manifest:
            identifier: Kubernetes
            type: K8sManifest
            spec:
              store:
                type: Github
                spec:
                  connectorRef: account.YourGitHubConnector
                  gitFetchType: Branch
                  paths:
                    - content/en/examples/application/nginx-app.yaml
                  repoName: kubernetes/website
                  branch: main
              skipResourceVersioning: false
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: account.YourArtifactConnector
                imagePath: bitnami/kafka
                tag: <+input>
                digest: ""
              identifier: artifact
              type: DockerRegistry
    type: Kubernetes
  tags: {}

ECS Fargate service:

service:
  name: ecs-service
  identifier: ecs_service
  orgIdentifier: default
  projectIdentifier: ECS_Deployment
  serviceDefinition:
    type: ECS
    spec:
      manifests:
        - manifest:
            identifier: serviceDefinition
            type: EcsServiceDefinition
            spec:
              store:
                type: Github
                spec:
                  connectorRef: org.YourGitHubConnector
                  gitFetchType: Branch
                  paths:
                    - applications/ecs-fargate-manifests/CreateServiceRequest.yaml
                  repoName: harness-community/developer-hub-apps
                  branch: main
        - manifest:
            identifier: taskDefinition
            type: EcsTaskDefinition
            spec:
              store:
                type: Github
                spec:
                  connectorRef: org.YourGitHubConnector
                  gitFetchType: Branch
                  paths:
                    - ECS/BlueGreenNoVariables/RegisterTaskDefinitionRequest.yaml
                  repoName: harness-community/developer-hub-apps
                  branch: main
        - manifest:
            identifier: scalingPolicy
            type: EcsScalingPolicyDefinition
            spec:
              store:
                type: Github
                spec:
                  connectorRef: org.GitHubConnector
                  gitFetchType: Branch
                  paths:
                    - applications/ecs-fargate-manifests/PutScalingPolicyRequest.yaml
                  repoName: harness-community/developer-hub-apps
                  branch: main
        - manifest:
            identifier: scalabletarget
            type: EcsScalableTargetDefinition
            spec:
              store:
                type: Github
                spec:
                  connectorRef: org.GitHubConnector
                  gitFetchType: Branch
                  paths:
                    - applications/ecs-fargate-manifests/RegisterScalableTargetRequest.yaml
                  repoName: harness-community/developer-hub-apps
                  branch: main
      artifacts:
        primary:
          primaryArtifactRef: <+input>
          sources:
            - spec:
                connectorRef: org.YourAwsConnector
                imagePath: your-image-path
                tag: latest
                region: us-east-1
              identifier: ECRartifact
              type: Ecr
  gitOpsEnabled: false

Environments

Environments represent your deployment targets (QA, Prod, etc). Each environment contains one or more Infrastructure Definitions that list your target clusters, hosts, namespaces, etc. To create your own environments, go to Create environments.

Environment definition:

environment:
  name: Env_1
  identifier: Env_1
  tags: {}
  type: Production
  orgIdentifier: default
  projectIdentifier: Default_Project
  variables: []

Infrastructure definition:

infrastructureDefinition:
  name: Infra_1
  identifier: Infra_1
  orgIdentifier: default
  projectIdentifier: Default_Project
  environmentRef: Env_1
  deploymentType: Kubernetes
  type: KubernetesDirect
  spec:
    connectorRef: org.KubernetesConnectorForAutomationTest
    namespace: cdp-k8s-qa-sanity
    releaseName: release-<+INFRA_KEY_SHORT_ID>
  allowSimultaneousDeployments: true

Service Overrides

In DevOps, it is common to have multiple environments, such as development, testing, staging, and production. Each environment might require different configurations or settings for the same service.

For example, in the development environment, a service may need to use a local database for testing, while in the production environment, it should use a high-availability database cluster.

To enable the same service to use different environment settings, DevOps teams can override service settings for each environment.

For more information, go to Create service overrides.
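A minimal sketch of such an override is shown below. It reuses the `Env_1` environment from the example above and the `Service_1` service referenced by the pipeline examples in this topic. The `serviceOverrides` layout, the `dbHost` variable, and the host name are assumptions for illustration; confirm the exact schema in Create service overrides.

```yaml
serviceOverrides:
  orgIdentifier: default
  projectIdentifier: Default_Project
  environmentRef: Env_1              # environment the override applies to
  serviceRef: Service_1              # service whose settings are overridden
  variables:
    - name: dbHost                   # hypothetical service variable
      type: String
      value: qa-db.example.internal  # hypothetical environment-specific value
```

With an override like this, the service definition stays identical across environments and only the environment-specific value changes.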

Step 2. Adding artifact sources

In DevOps, an artifact source is a location where the compiled, tested, and ready-to-deploy software artifacts are stored. These artifacts could be container images, compiled binary files, executables, or any other software components that are part of the application.

To add an artifact source, you add a Harness connector to the artifact platform (DockerHub, GCR, Artifactory, etc.) and then add an artifact source to a Harness service that defines the artifact source name, path, tags, and so on.

For the list of artifact sources that you can use in your Harness services, go to Artifact Sources.

Step 3. Create a simple pipeline

To create a simple CD pipeline, follow these steps:

- Create a pipeline.

- Add a CD stage.

- Define a service.

- Target an environment and infrastructure.

- Select execution steps.

- You can model visually, using YAML, or via the REST API.

Here's a simple CD pipeline using a Kubernetes type deployment:

K8s Rolling Deployment Pipeline YAML

pipeline:
  name: K8s Rolling Deployment
  identifier: K8s_Rolling_Deployment
  projectIdentifier: Default_Project
  orgIdentifier: default
  tags: {}
  stages:
    - stage:
        name: Rolling Deployment
        identifier: Rolling_Deployment
        description: ""
        type: Deployment
        spec:
          deploymentType: Kubernetes
          service:
            serviceRef: Service_1
            serviceInputs:
              serviceDefinition:
                type: Kubernetes
                spec:
                  artifacts:
                    primary:
                      primaryArtifactRef: <+input>
                      sources: <+input>
          environment:
            environmentRef: Env_1
            deployToAll: false
            infrastructureDefinitions:
              - identifier: Infra_1
          execution:
            steps:
              - step:
                  name: Rolling Deployment
                  identifier: rolloutDeployment
                  type: K8sRollingDeploy
                  timeout: 10m
                  spec:
                    skipDryRun: false
                    pruningEnabled: false
            rollbackSteps:
              - step:
                  name: Rollback Rollout Deployment
                  identifier: rollbackRolloutDeployment
                  type: K8sRollingRollback
                  timeout: 10m
                  spec:
                    pruningEnabled: false
        tags: {}
        failureStrategies:
          - onFailure:
              errors:
                - AllErrors
              action:
                type: StageRollback

Phase 3: Deploy to staging

Step 1. Deployment strategies

You have likely heard terms like blue/green and canary when it comes to deploying code and applications into production. These are common deployment strategies, available in Harness CD as stage strategies, along with others.

The deployment strategies provided by Harness are:

- Rolling

- Blue Green

- Canary

- Basic Deployments

- Multi-service

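The following pipeline YAML examples show, in order, a Rolling deployment, a Blue Green deployment, a Canary deployment, a Kubernetes deployment with the Apply step, and a Kubernetes deployment with the Scale step: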

pipeline:
  name: K8s Rolling Deployment
  identifier: K8s_Rolling_Deployment
  projectIdentifier: Default_Project
  orgIdentifier: default
  tags: {}
  stages:
    - stage:
        name: Rolling Deployment
        identifier: Rolling_Deployment
        description: ""
        type: Deployment
        spec:
          deploymentType: Kubernetes
          service:
            serviceRef: Service_1
            serviceInputs:
              serviceDefinition:
                type: Kubernetes
                spec:
                  artifacts:
                    primary:
                      primaryArtifactRef: <+input>
                      sources: <+input>
          environment:
            environmentRef: Env_1
            deployToAll: false
            infrastructureDefinitions:
              - identifier: Infra_1
          execution:
            steps:
              - step:
                  name: Rolling Deployment
                  identifier: rolloutDeployment
                  type: K8sRollingDeploy
                  timeout: 10m
                  spec:
                    skipDryRun: false
                    pruningEnabled: false
            rollbackSteps:
              - step:
                  name: Rollback Rollout Deployment
                  identifier: rollbackRolloutDeployment
                  type: K8sRollingRollback
                  timeout: 10m
                  spec:
                    pruningEnabled: false
        tags: {}
        failureStrategies:
          - onFailure:
              errors:
                - AllErrors
              action:
                type: StageRollback

pipeline:
  name: K8s Blue Green Deployment
  identifier: K8s_Blue_Green_Deployment
  projectIdentifier: Default_Project
  orgIdentifier: default
  tags: {}
  stages:
    - stage:
        name: Blue Green Deployment
        identifier: Blue_Green_Deployment
        description: ""
        type: Deployment
        spec:
          deploymentType: Kubernetes
          service:
            serviceRef: Service_1
            serviceInputs:
              serviceDefinition:
                type: Kubernetes
                spec:
                  artifacts:
                    primary:
                      primaryArtifactRef: <+input>
                      sources: <+input>
          environment:
            environmentRef: Env_1
            deployToAll: false
            infrastructureDefinitions:
              - identifier: Infra_1
          execution:
            steps:
              - step:
                  name: Stage Deployment
                  identifier: stageDeployment
                  type: K8sBlueGreenDeploy
                  timeout: 10m
                  spec:
                    skipDryRun: false
                    pruningEnabled: false
              - step:
                  name: Swap primary with stage service
                  identifier: bgSwapServices
                  type: K8sBGSwapServices
                  timeout: 10m
                  spec:
                    skipDryRun: false
            rollbackSteps:
              - step:
                  name: Swap primary with stage service
                  identifier: rollbackBgSwapServices
                  type: K8sBGSwapServices
                  timeout: 10m
                  spec:
                    skipDryRun: false
        tags: {}
        failureStrategies:
          - onFailure:
              errors:
                - AllErrors
              action:
                type: StageRollback
        timeout: 10m
        variables:
          - name: resourceNamePrefix
            type: String
            description: ""
            required: false
            value: cdpsanitysuites-trybg

pipeline:
  name: K8s Canary Deployment
  identifier: K8s_Canary_Deployment
  projectIdentifier: Default_Project
  orgIdentifier: default
  tags: {}
  stages:
    - stage:
        name: Canary Deployment
        identifier: Canary_Deployment
        description: ""
        type: Deployment
        spec:
          deploymentType: Kubernetes
          service:
            serviceRef: Service_1
            serviceInputs:
              serviceDefinition:
                type: Kubernetes
                spec:
                  artifacts:
                    primary:
                      primaryArtifactRef: <+input>
                      sources: <+input>
          environment:
            environmentRef: Env_1
            deployToAll: false
            infrastructureDefinitions:
              - identifier: Infra_1
          execution:
            steps:
              - stepGroup:
                  name: Canary Deployment
                  identifier: canaryDepoyment
                  steps:
                    - step:
                        name: Canary Deployment
                        identifier: canaryDeployment
                        type: K8sCanaryDeploy
                        timeout: 10m
                        spec:
                          instanceSelection:
                            type: Count
                            spec:
                              count: 1
                          skipDryRun: false
                    - step:
                        name: Canary Delete
                        identifier: canaryDelete
                        type: K8sCanaryDelete
                        timeout: 10m
                        spec: {}
              - stepGroup:
                  name: Primary Deployment
                  identifier: primaryDepoyment
                  steps:
                    - step:
                        name: Rolling Deployment
                        identifier: rollingDeployment
                        type: K8sRollingDeploy
                        timeout: 10m
                        spec:
                          skipDryRun: false
            rollbackSteps:
              - step:
                  name: Canary Delete
                  identifier: rollbackCanaryDelete
                  type: K8sCanaryDelete
                  timeout: 10m
                  spec: {}
              - step:
                  name: Rolling Rollback
                  identifier: rollingRollback
                  type: K8sRollingRollback
                  timeout: 10m
                  spec: {}
        tags: {}
        failureStrategies:
          - onFailure:
              errors:
                - AllErrors
              action:
                type: StageRollback
        variables:
          - name: resourceNamePrefix
            type: String
            description: ""
            required: false
            value: cdpsanitysuites-trycanary
        timeout: 10m

pipeline:
  name: K8s Deployment with Apply Step
  identifier: K8s_Deployment_with_Apply_Step
  projectIdentifier: Default_Project
  orgIdentifier: default
  tags: {}
  stages:
    - stage:
        name: Deployment with Apply Step
        identifier: Deployment_with_Apply_Step
        description: ""
        type: Deployment
        spec:
          deploymentType: Kubernetes
          service:
            serviceRef: Service_1
            serviceInputs:
              serviceDefinition:
                type: Kubernetes
                spec:
                  artifacts:
                    primary:
                      primaryArtifactRef: <+input>
                      sources: <+input>
          environment:
            environmentRef: Env_1
            deployToAll: false
            infrastructureDefinitions:
              - identifier: Infra_1
          execution:
            steps:
              - step:
                  type: K8sApply
                  name: K8s Apply
                  identifier: K8s_Apply
                  spec:
                    filePaths:
                      - namespace.yaml
                      - service.yaml
                    skipDryRun: false
                    skipSteadyStateCheck: false
                    skipRendering: false
                    overrides: []
                  timeout: 10m
            rollbackSteps: []
        tags: {}
        failureStrategies:
          - onFailure:
              errors:
                - AllErrors
              action:
                type: StageRollback
        timeout: 10m
        variables:
          - name: resourceNamePrefix
            type: String
            description: ""
            required: false
            value: cdpsanitysuites-qwerandom

pipeline:
  name: K8s Deployment with Scale Step
  identifier: K8s_Deployment_with_Scale_Step
  projectIdentifier: Default_Project
  orgIdentifier: default
  tags: {}
  stages:
    - stage:
        name: Deployment With Scale Step
        identifier: Deployment_With_Scale_Step
        description: ""
        type: Deployment
        spec:
          deploymentType: Kubernetes
          service:
            serviceRef: Service_1
            serviceInputs:
              serviceDefinition:
                type: Kubernetes
                spec:
                  artifacts:
                    primary:
                      primaryArtifactRef: <+input>
                      sources: <+input>
          environment:
            environmentRef: Env_1
            deployToAll: false
            infrastructureDefinitions:
              - identifier: Infra_1
          execution:
            steps:
              - step:
                  name: Rollout Deployment
                  identifier: rolloutDeployment
                  type: K8sRollingDeploy
                  timeout: 10m
                  spec:
                    skipDryRun: false
                    pruningEnabled: false
              - step:
                  type: K8sScale
                  name: K8sScale_1
                  identifier: K8sScale_1
                  spec:
                    workload: Deployment/<+stage.variables.resourceNamePrefix>-deployment
                    skipSteadyStateCheck: false
                    instanceSelection:
                      type: Count
                      spec:
                        count: 2
                  timeout: 10m
            rollbackSteps:
              - step:
                  name: Rollback Rollout Deployment
                  identifier: rollbackRolloutDeployment
                  type: K8sRollingRollback
                  timeout: 10m
                  spec:
                    pruningEnabled: false
        tags: {}
        failureStrategies:
          - onFailure:
              errors:
                - AllErrors
              action:
                type: StageRollback
        variables:
          - name: resourceNamePrefix
            type: String
            description: ""
            required: false
            value: cdpsanitysuites-tryscale
        timeout: 10m

For more information, go to Deployment concepts and strategies.
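The canary example in this step sizes the canary with a fixed instance count. As a hedged variation, the step below sizes it as a percentage of the desired instances instead. It assumes that `instanceSelection` accepts a `Percentage` type with a `percentage` field; verify this against the step reference before relying on it.

```yaml
- step:
    name: Canary Deployment
    identifier: canaryDeployment
    type: K8sCanaryDeploy
    timeout: 10m
    spec:
      instanceSelection:
        type: Percentage       # assumption: alternative to the Count type used above
        spec:
          percentage: 25       # illustrative value
      skipDryRun: false
```

A percentage keeps the canary proportional as the workload's replica count changes, whereas a count keeps it fixed.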

Step 2. Triggers and input sets

Triggers automatically initiate pipeline execution based on specific events or conditions, such as Git events, new Helm Charts, new artifacts, or specific time intervals. Triggers in Harness CD enable faster feedback cycles, enhanced efficiency, and decreased reliance on manual intervention during the deployment process.

Here are examples of a new artifact trigger and GitHub Webhook trigger:

Trigger on new artifact:

trigger:
  name: trigger_on_new_artifact_v2
  identifier: trigger_on_new_artifact_v2
  enabled: true
  tags: {}
  orgIdentifier: default
  projectIdentifier: default_project
  pipelineIdentifier: trigger_on_new_artifact
  stagesToExecute: []
  source:
    type: Artifact
    spec:
      type: DockerRegistry
      spec:
        connectorRef: account.Pritish_Harness
        imagePath: pritishharness/harness_test
        tag: <+trigger.artifact.build>
        eventConditions: []
  inputYaml: |
    pipeline:
      identifier: trigger_on_new_artifact
      stages:
        - stage:
            identifier: test
            type: Deployment
            spec:
              service:
                serviceInputs:
                  serviceDefinition:
                    type: Kubernetes
                    spec:
                      artifacts:
                        primary:
                          sources:
                            - identifier: artifact
                              type: DockerRegistry
                              spec:
                                tag: <+lastPublished.tag>

GitHub webhook trigger:

trigger:
  name: github_filechange_trigger
  identifier: github_filechange_trigger
  enabled: true
  encryptedWebhookSecretIdentifier: ""
  description: ""
  tags: {}
  orgIdentifier: default
  stagesToExecute: []
  projectIdentifier: default_project
  pipelineIdentifier: github_filechange_trigger
  source:
    type: Webhook
    spec:
      type: Github
      spec:
        type: PullRequest
        spec:
          connectorRef: account.CIPHERTron
          autoAbortPreviousExecutions: false
          payloadConditions:
            - key: changedFiles
              operator: Equals
              value: README.md
            - key: targetBranch
              operator: Equals
              value: main
          headerConditions: []
          repoName: harness-yarn-demo
          actions:
            - Open
  inputYaml: |
    pipeline:
      identifier: github_filechange_trigger
      properties:
        ci:
          codebase:
            build:
              type: branch
              spec:
                branch: <+trigger.branch>
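This step also covers input sets, which store values for a pipeline's runtime inputs so that they can be reused across executions and triggers. The sketch below targets the K8s Rolling Deployment pipeline from Phase 2 and mirrors the structure of the `inputYaml` blocks in the triggers above. The input set name, identifier, and tag value are hypothetical, and the layout should be checked against the input set schema.

```yaml
inputSet:
  name: qa-artifact-inputs                 # hypothetical name
  identifier: qa_artifact_inputs           # hypothetical identifier
  orgIdentifier: default
  projectIdentifier: Default_Project
  pipeline:
    identifier: K8s_Rolling_Deployment
    stages:
      - stage:
          identifier: Rolling_Deployment
          type: Deployment
          spec:
            service:
              serviceInputs:
                serviceDefinition:
                  type: Kubernetes
                  spec:
                    artifacts:
                      primary:
                        primaryArtifactRef: artifact
                        sources:
                          - identifier: artifact
                            type: DockerRegistry
                            spec:
                              tag: "1.0.0"   # placeholder tag
```

Only fields marked `<+input>` in the pipeline or service need to appear in an input set; everything else keeps its fixed value.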

Phase 4: Deploy to production

Step 1. Approvals and governance (OPA)

Approvals

- Harness manual approvals: You can specify Harness user group(s) to approve or reject a pipeline at any point in its execution. During deployment, the user group members use Harness Manager to approve or reject the pipeline deployment manually.

- Jira approvals: You can use Jira issues to approve or reject a pipeline or stage at any point in its execution. During deployment, the pipeline evaluates the fields in the Jira ticket based on criteria you define, and the resulting approval or rejection determines whether the pipeline or stage may proceed. You can add the Jira Approval step in Approval stages or in CD stages. The Jira Approval step prevents the stage execution from proceeding without an approval.

- ServiceNow approvals: You can use ServiceNow tickets to approve or reject a pipeline or stage at any point in its execution. During deployment, a ServiceNow ticket's fields are evaluated according to the criteria you define, and the resulting approval or rejection determines whether the pipeline or stage may proceed.

- Custom approvals: Custom approval stages and steps add control gates to your pipelines by allowing you to approve or reject a pipeline or stage at any point during build execution. When you add a Custom Approval step, you add a script to the step, and then use the script results as approval or rejection criteria.

To learn more about approvals, go to Approvals.
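As an illustration of a manual approval gate, the step below sketches a Harness Approval step that requires one approver from a user group. The field names follow the step schema as best understood here and are assumptions to verify; the step name, message, and `account.ProdApprovers` user group are hypothetical.

```yaml
- step:
    name: Approve production deployment    # hypothetical step name
    identifier: approve_prod
    type: HarnessApproval
    timeout: 1d
    spec:
      approvalMessage: Review the staging results before approving the production deployment.
      includePipelineExecutionHistory: true
      approvers:
        userGroups:
          - account.ProdApprovers          # hypothetical user group
        minimumCount: 1
        disallowPipelineExecutor: false
      approverInputs: []
```

Placing a step like this before the production stage means the pipeline waits, up to the timeout, until a member of the user group approves or rejects it.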

Governance

Harness Policy As Code uses Open Policy Agent (OPA) as the central service to store and enforce policies for the different entities and processes across the Harness platform. You can centrally define and store policies and then select where (which entities) and when (which events) they will be applied.

Here are some Harness governance examples with OPA.

The three examples below cover, in order, delegate tag governance, connector governance, and environment governance.

package pipeline

# Allow pipeline execution only with specific delegate tag selected
deny[msg] {
  # Find all pipeline stages
  stage := input.pipeline.stages[_].stage

  # Find all steps in each stage
  step := stage.spec.execution.steps[_].step

  # Check if the step has a delegate selector
  delegateSelector := step.advanced.delegateSelector

  # Check if the delegate selector has the specific tag
  not contains(delegateSelector.tags, "specific_tag")

  # Show a human-friendly error message
  msg := sprintf("Pipeline '%s' cannot be executed without selecting a delegate with tag 'specific_tag'", [input.pipeline.name])
}

# Helper: true when elem is a member of arr (the same helper the other examples define)
contains(arr, elem) {
  arr[_] = elem
}

This policy denies pipeline execution if a step in any stage does not have the specific delegate tag selected in its delegate selector. If the tag is not selected, the policy will show a human-friendly error message.

package pipeline

# Deny pipeline execution if a specific connector is not selected
deny[msg] {
  # Find all stages ...
  stage = input.pipeline.stages[_].stage

  # ... that have steps ...
  step = stage.spec.execution.steps[_].step

  # ... that use a connector ...
  connector = step.spec.connectorRef.name

  # ... that is not in the allowed list
  not contains(allowed_connectors, connector)

  # Show a human-friendly error message
  msg := sprintf("Pipeline '%s' cannot use connector '%s'", [input.pipeline.name, connector])
}

# Connectors that can be used for pipeline execution
allowed_connectors = ["MyConnector1", "MyConnector2"]

contains(arr, elem) {
  arr[_] = elem
}

You can customize the allowed_connectors list to include the connectors that are allowed for pipeline execution. If a pipeline uses a connector that is not in the allowed_connectors list, the policy will deny pipeline execution and display an error message.

package pipeline

# Deny pipelines that do not use allowed environments
# NOTE: Remove an environment from the 'allowed_environments' list to see the policy deny pipelines that deploy to it
deny[msg] {
  # Find all deployment stages
  stage = input.pipeline.stages[_].stage
  stage.type == "Deployment"

  # ... where the environment is not in the allow list
  not contains(allowed_environments, stage.spec.infrastructure.environment.identifier)

  # Show a human-friendly error message
  msg := sprintf("deployment stage '%s' cannot be deployed to environment '%s'", [stage.name, stage.spec.infrastructure.environment.identifier])
}

# Deny pipelines if the environment is missing completely
deny[msg] {
  # Find all deployment stages
  stage = input.pipeline.stages[_].stage
  stage.type == "Deployment"

  # ... without an environment
  not stage.spec.infrastructure.environment.identifier

  # Show a human-friendly error message
  msg := sprintf("deployment stage '%s' has no environment identifier", [stage.name])
}

# Environments that can be used for deployment
allowed_environments = ["dev","qa","prod"]

contains(arr, elem) {
  arr[_] = elem
}

You can modify the allowed_environments list to include the environments where you want the pipeline to be executed. If the pipeline is executed in an environment that is not in the allowed_environments list, the policy will fail and display an error message.
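To make the environment policy concrete, here is a hypothetical policy input, written as YAML for readability, that uses the same paths the policy reads (`input.pipeline.stages[_].stage.spec.infrastructure.environment.identifier`). The pipeline and stage names and the `sandbox` environment are invented for illustration.

```yaml
pipeline:
  name: Example Pipeline                   # hypothetical pipeline
  stages:
    - stage:
        name: Deploy to sandbox
        type: Deployment
        spec:
          infrastructure:
            environment:
              identifier: sandbox          # not in allowed_environments
    - stage:
        name: Deploy without environment
        type: Deployment
        spec:
          infrastructure: {}               # no environment identifier at all
```

With `allowed_environments = ["dev","qa","prod"]`, the first stage should match the first `deny` rule (environment not in the allow list) and the second stage should match the second `deny` rule (missing environment identifier), so the pipeline would be denied with one message per stage.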

Freeze deployments

A deployment freeze is a period of time during which no new changes are made to a system or application. This ensures that a system or application remains stable and free of errors, particularly in the lead-up to a major event or release. During a deployment freeze, only critical bug fixes and security patches might be deployed, and all other changes are put on hold until the freeze is lifted. Deployment freezes are commonly used in software development to ensure that a system is not destabilized by the introduction of new code in new application versions.

For more information, go to Freeze deployments.

Here's a sample YAML to set up deployment freeze in Harness:

freeze:
  name: test-freeze
  identifier: testfreeze
  entityConfigs:
    - name: project_specific
      entities:
        - type: Project
          filterType: NotEquals
          entityRefs:
            - chaosprjyps
            - CI_QS_yps
            - ypsnativehelm
        - type: Service
          filterType: All
        - type: Environment
          filterType: All
        - type: EnvType
          filterType: Equals
          entityRefs:
            - Production
  status: Disabled
  orgIdentifier: testOrgyps
  windows:
    - timeZone: Asia/Calcutta
      startTime: 2023-11-18 08:39 PM
      duration: 30m
      recurrence:
        type: Monthly
  description: ""

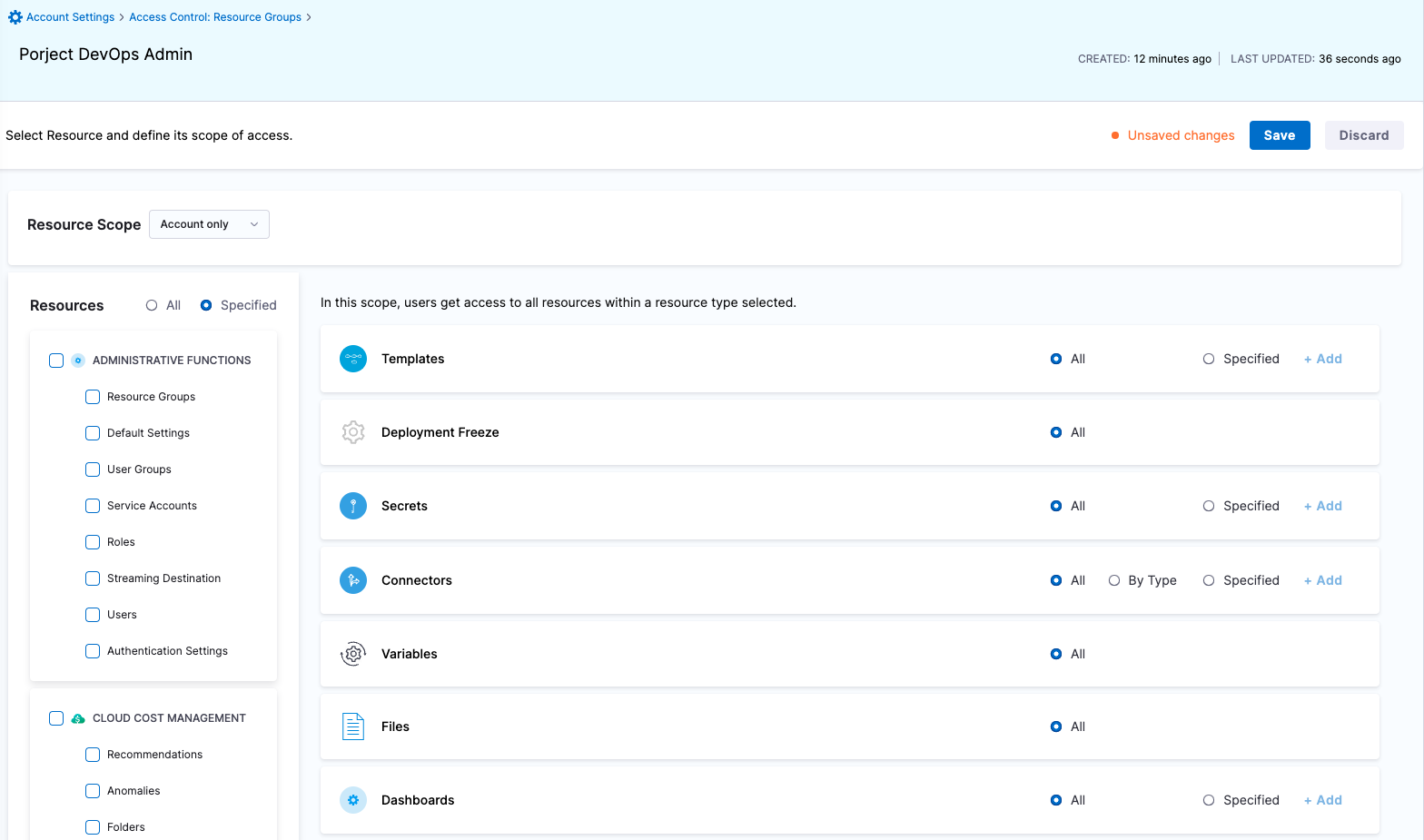

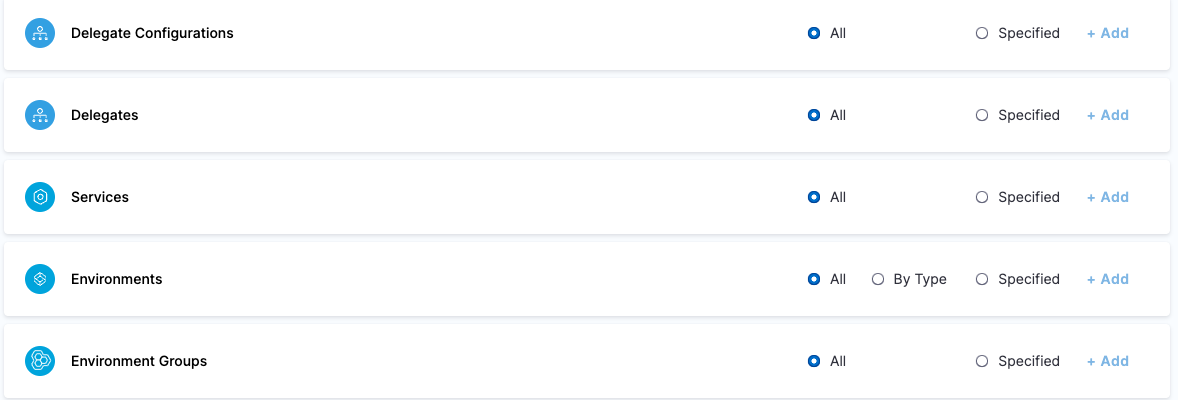

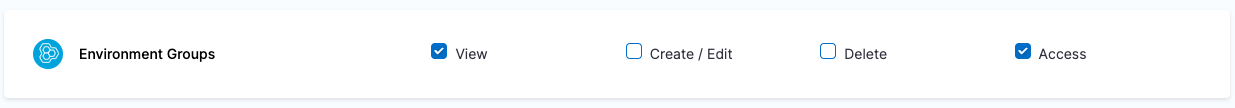

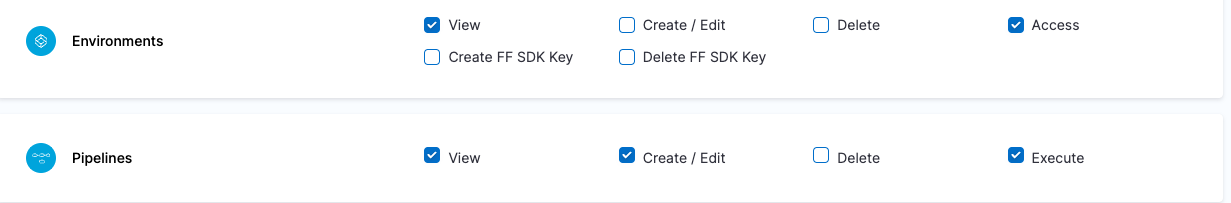

Step 2. RBAC

To implement role-based access control (RBAC), a Harness account administrator assigns resource-related permissions to members of Harness user groups. The two most commonly used RBAC strategies are the Center of Excellence (CoE) strategy and the Distributed Center of DevOps strategy.

Center of excellence RBAC strategy

| Role Type | Role Description | Harness Roles | Harness Resource Groups |

|---|---|---|---|

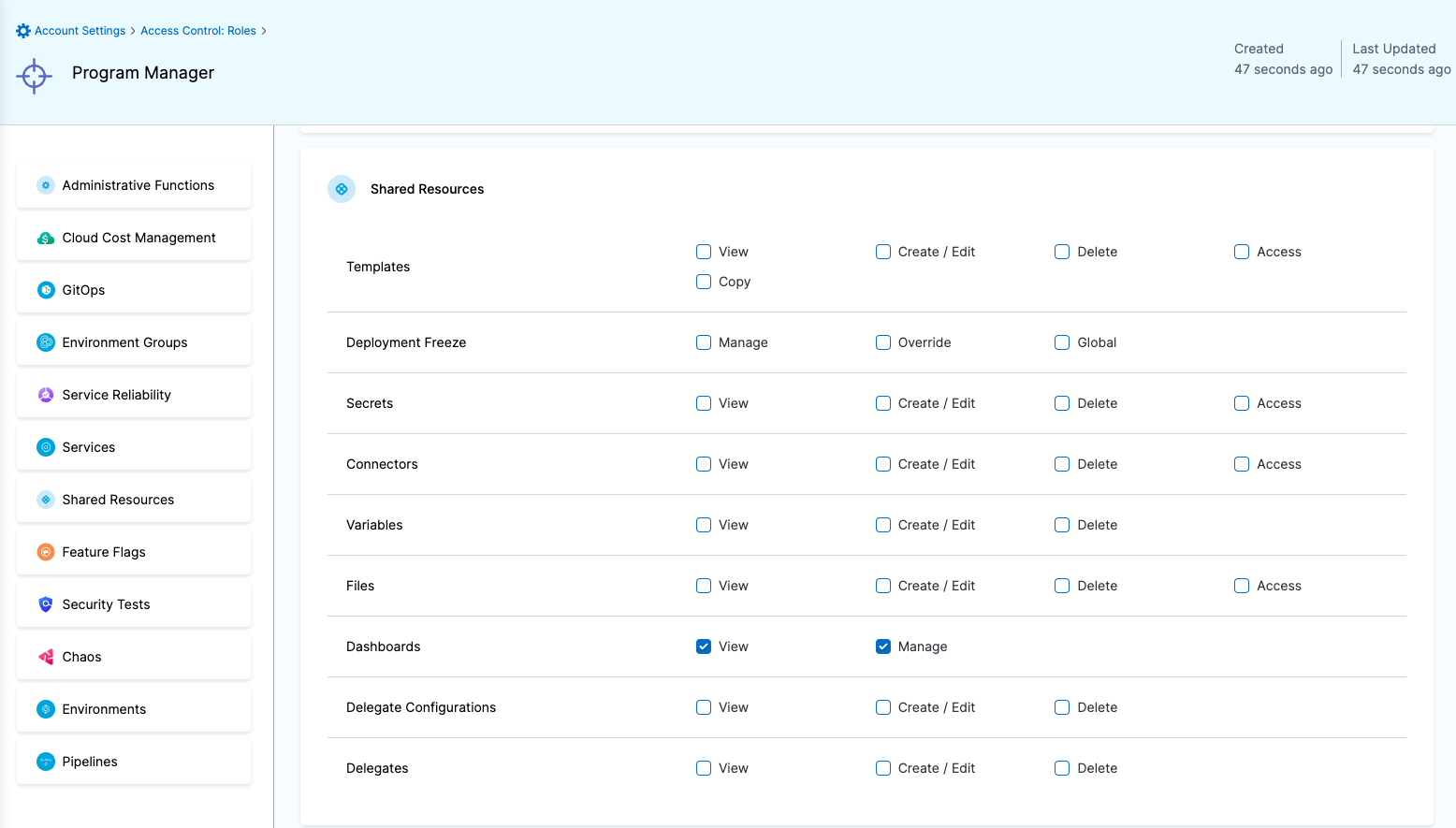

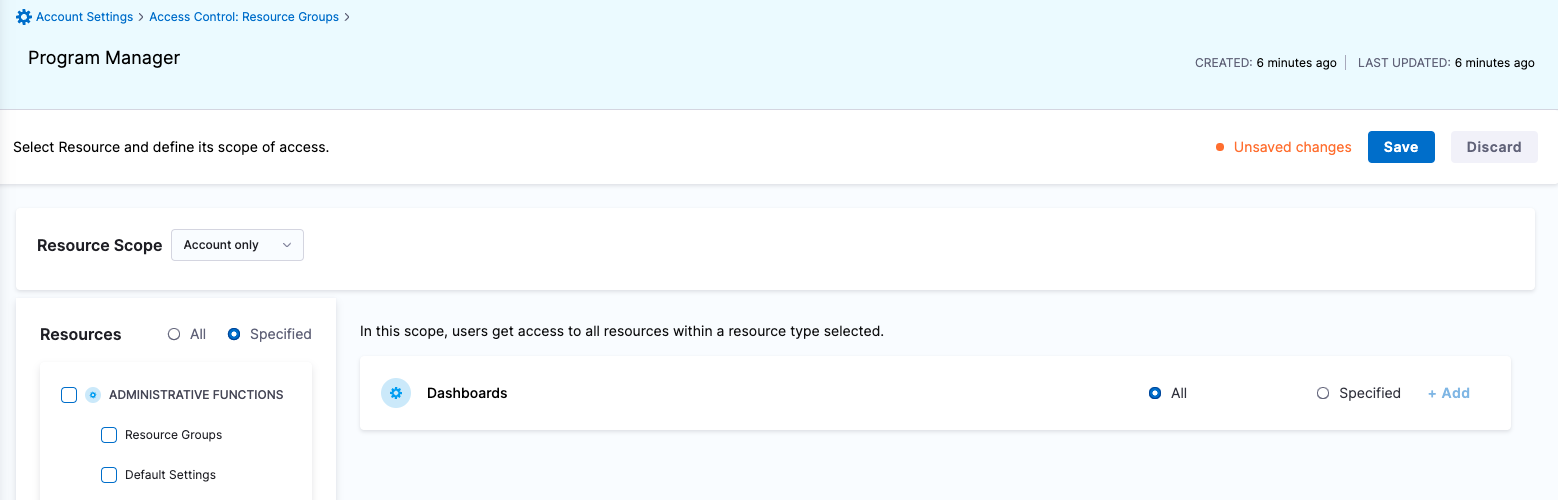

| Program Manager | Responsible for analyzing and reporting various metrics | Shared Resources->Dashboards: View & Manage | Shared Resources -> Dashboards |

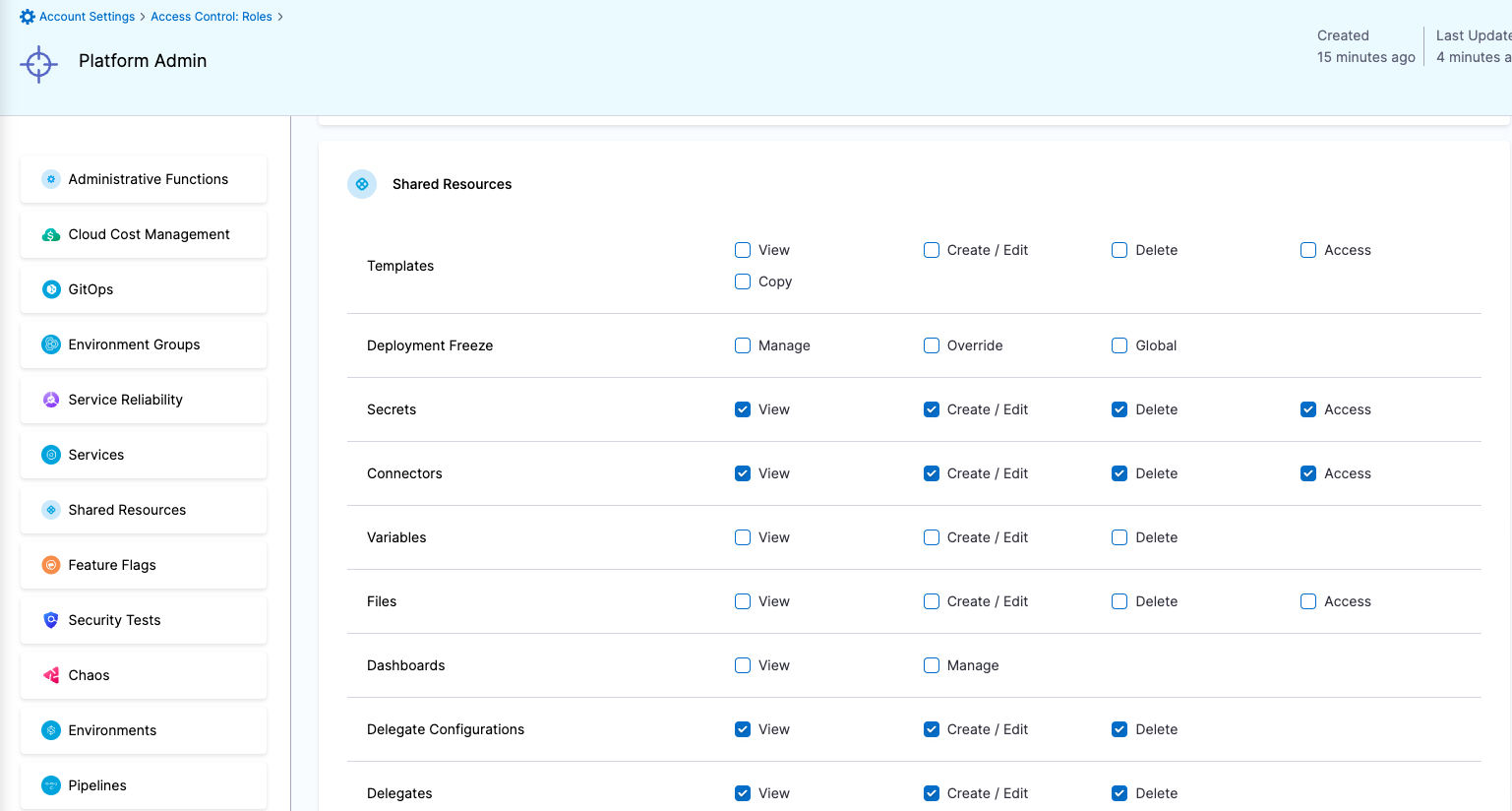

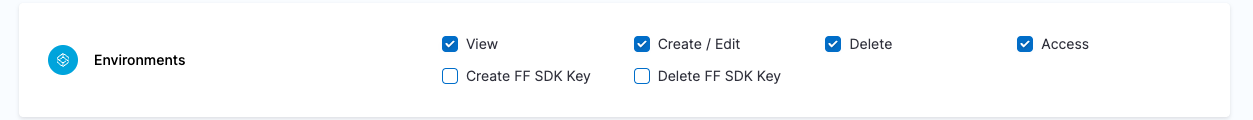

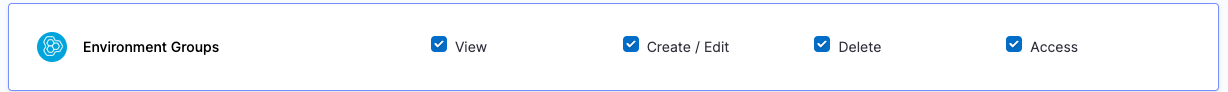

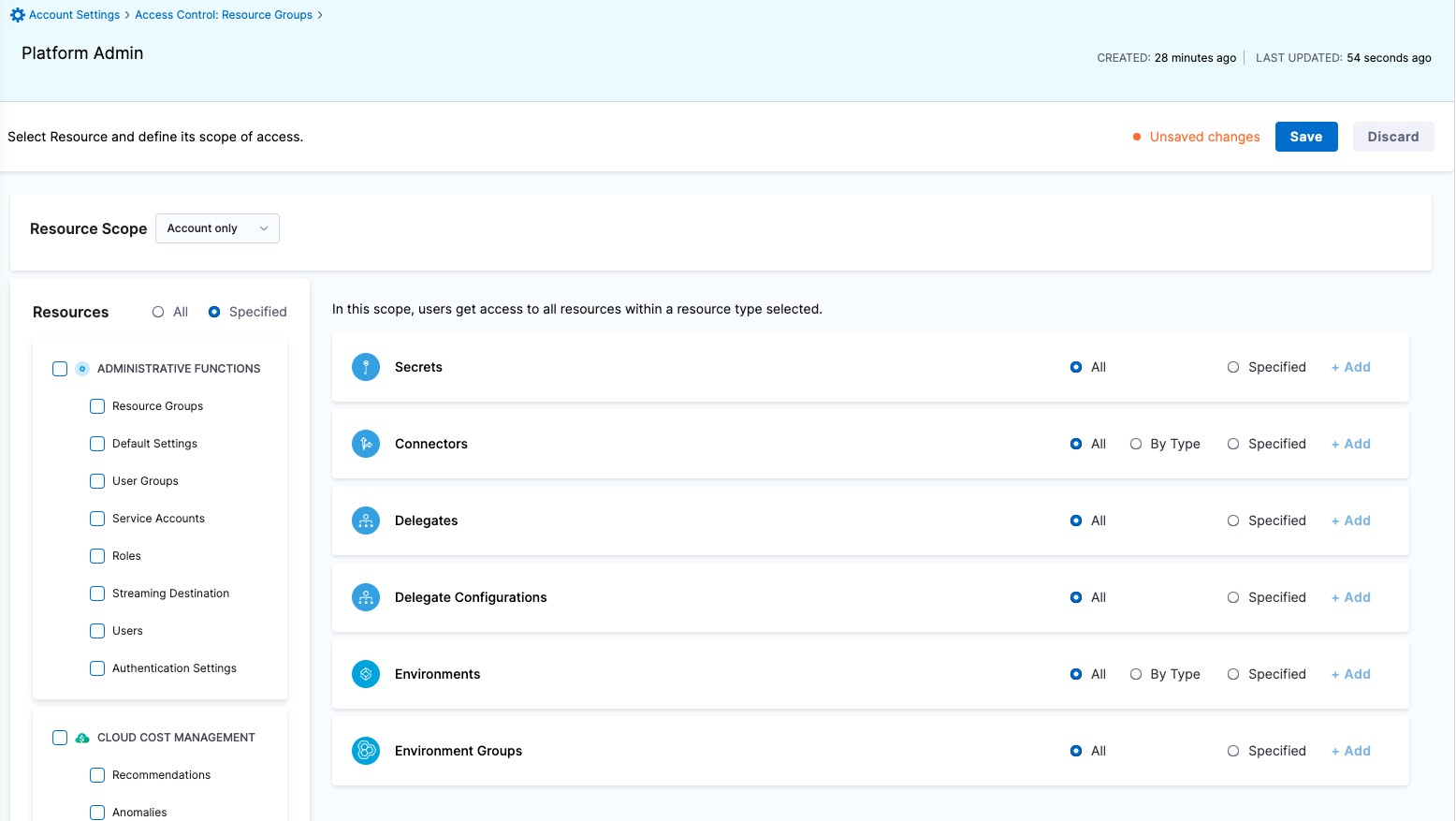

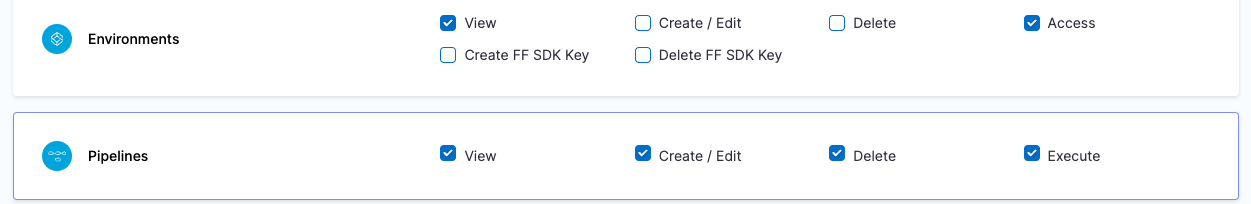

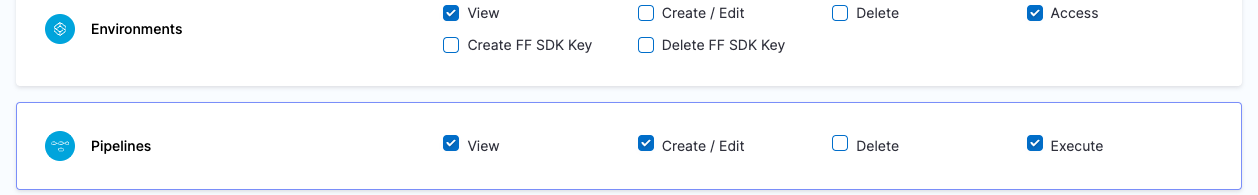

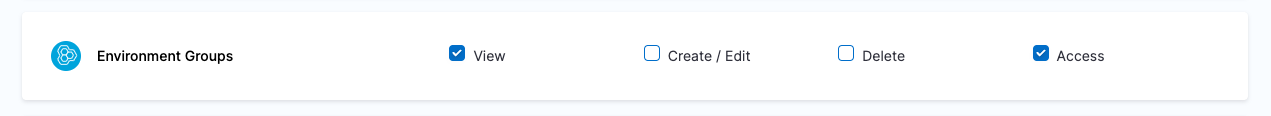

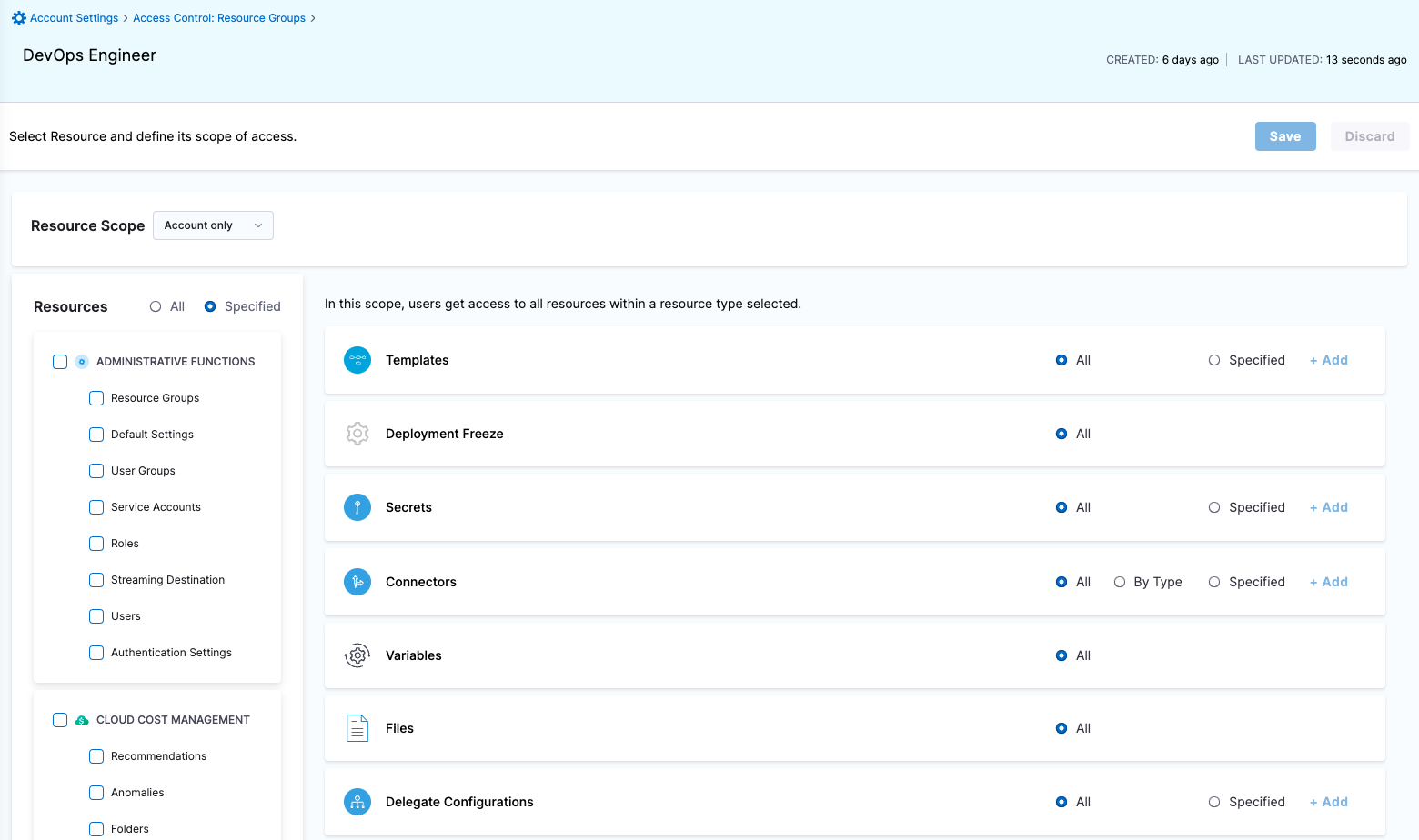

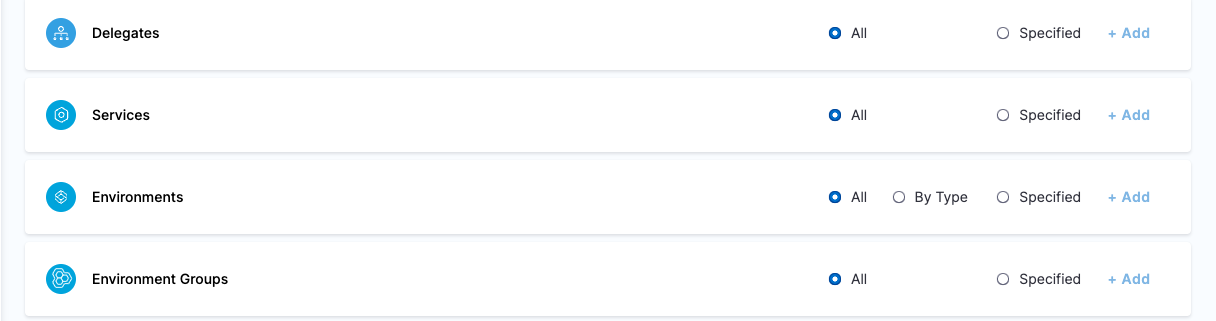

| Platform Admin | Responsible for provisioning infrastructure and managing Harness resources like Secrets, Environment, Connectors and Delegates | - Shared Resources -> Secrets: View, Create/Edit, Delete & Access - Shared Resources -> Connectors: View, Create/Edit, Delete & Access - Shared Resources -> Delegates: View, Create/Edit & Delete - Shared Resources -> Delegate Configurations: View, Create/Edit & Delete - Environments: View, Create/Edit, Delete & Access - Environment Groups: View, Create/Edit, Delete & Access | - Shared Resources -> Secrets - Shared Resources -> Connectors - Shared Resources -> Delegates - Shared Resources -> Delegate Configurations - Environments - Environment Groups |

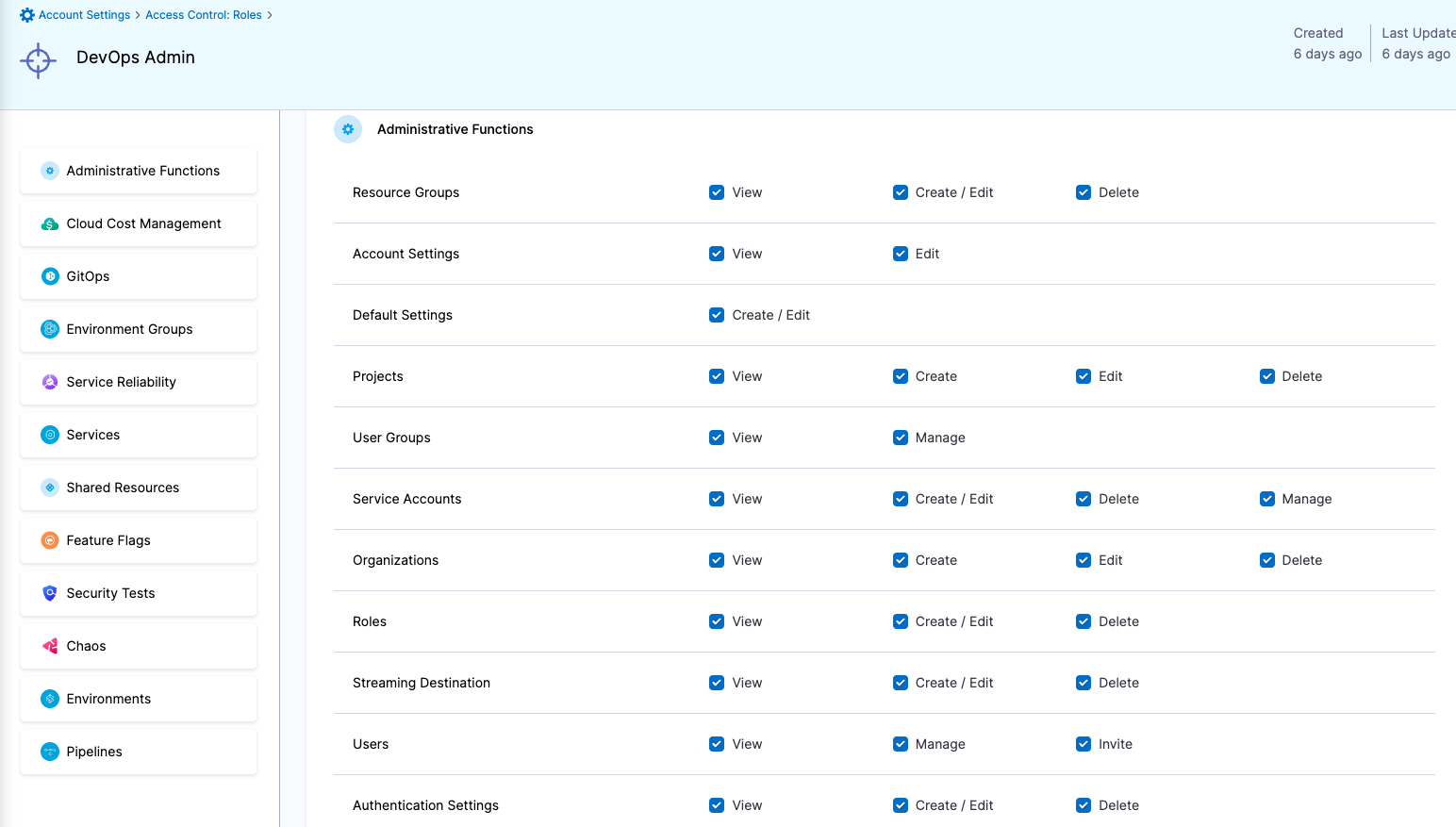

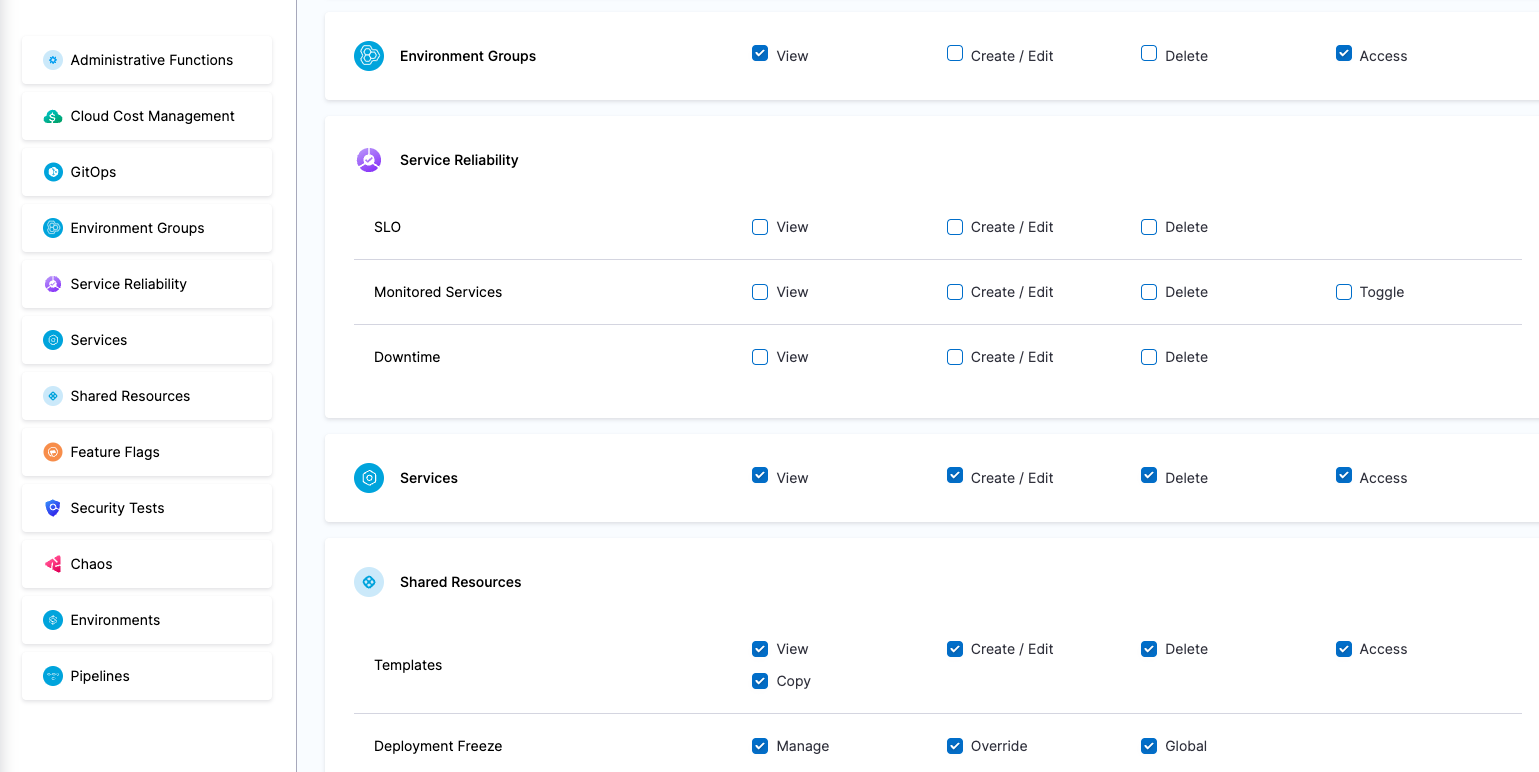

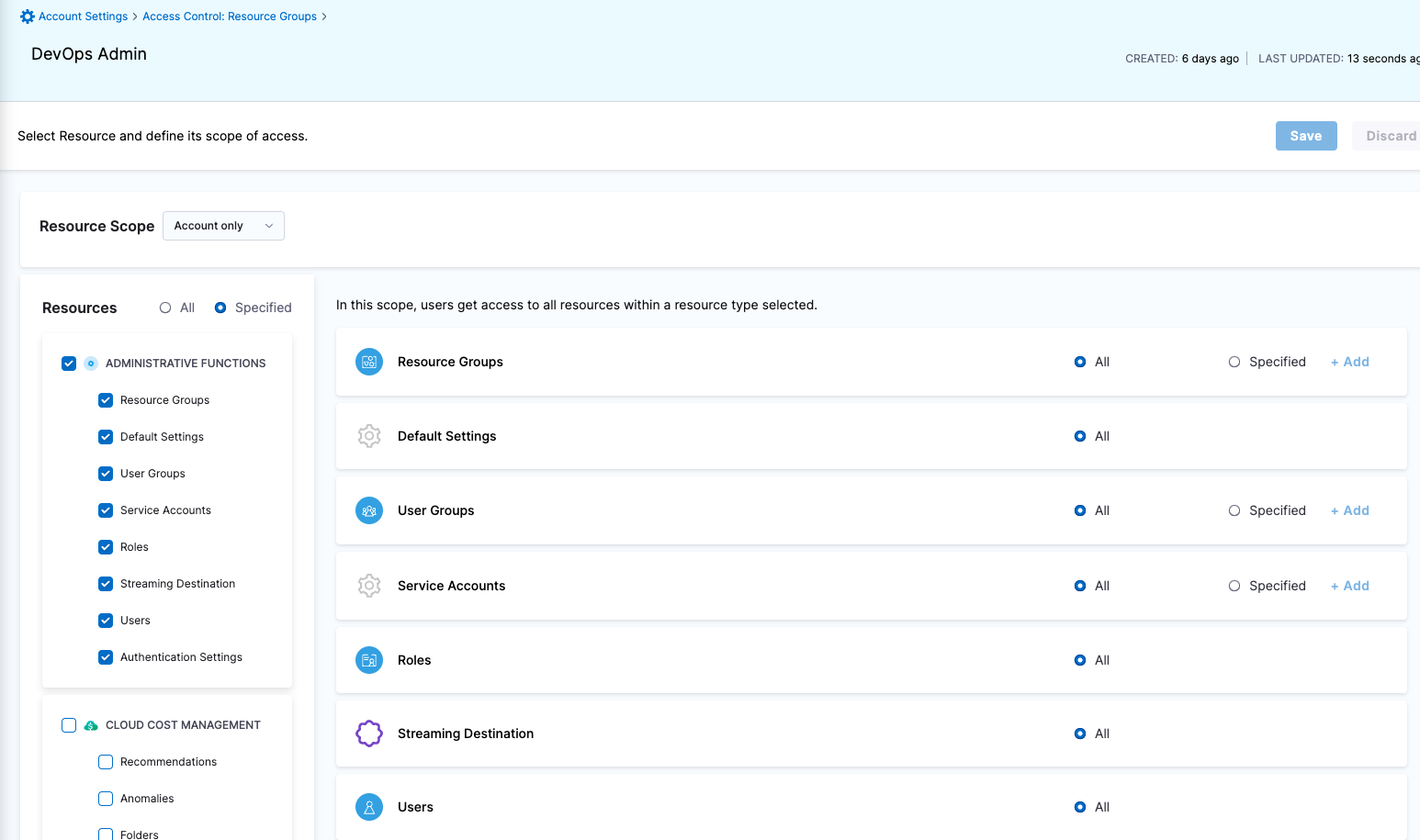

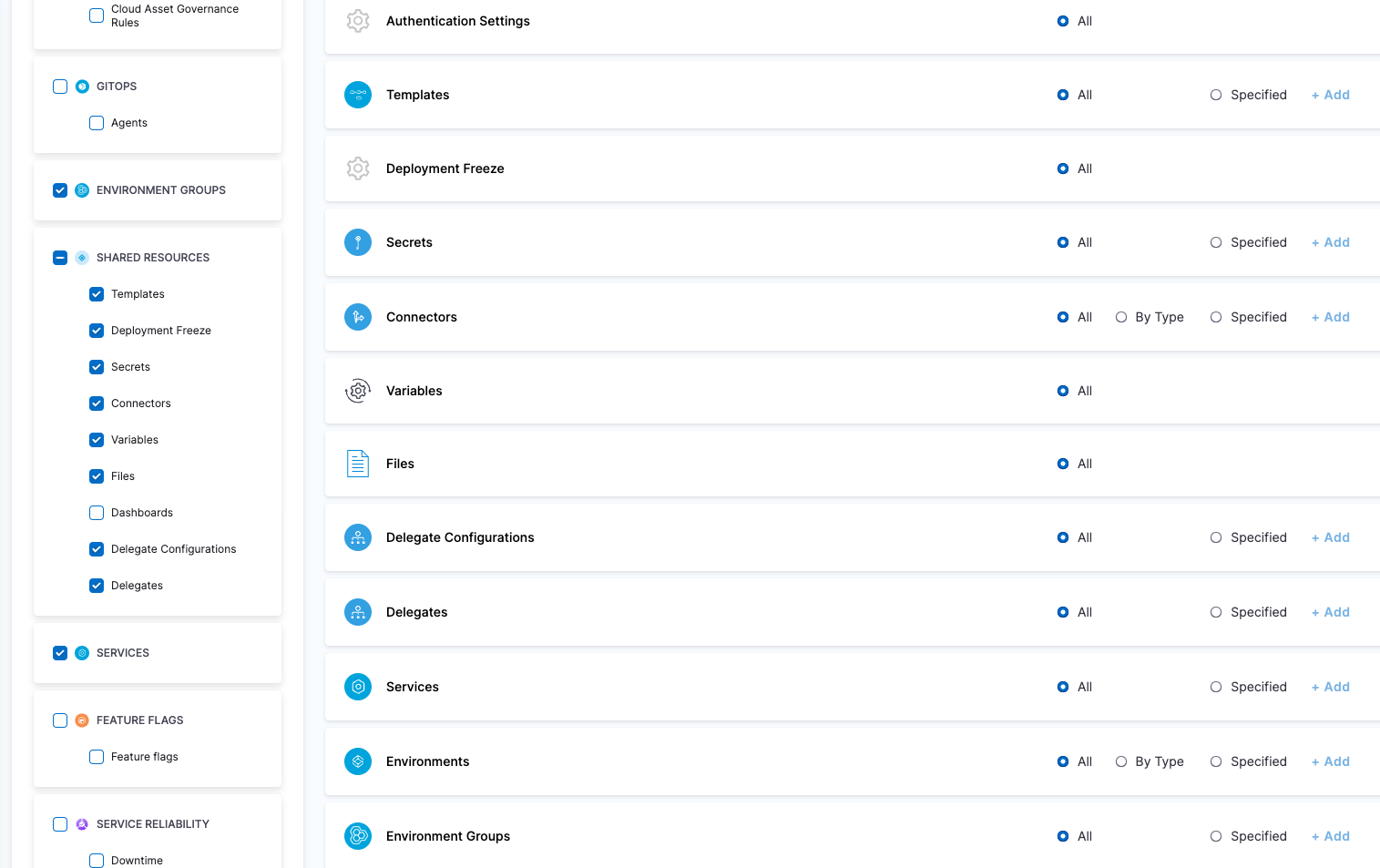

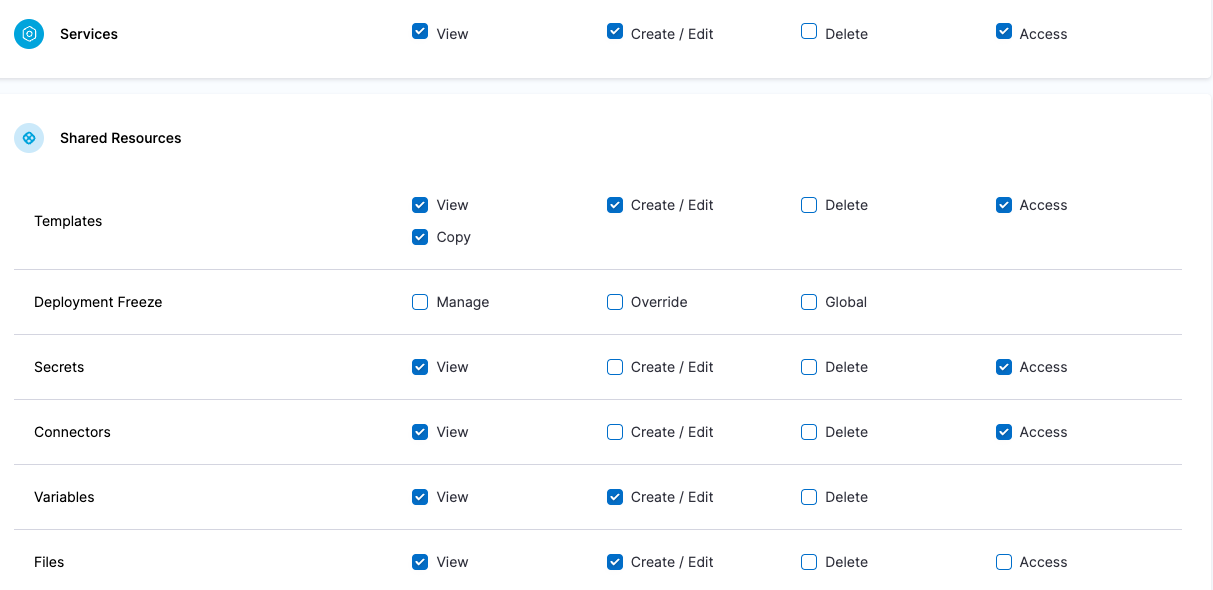

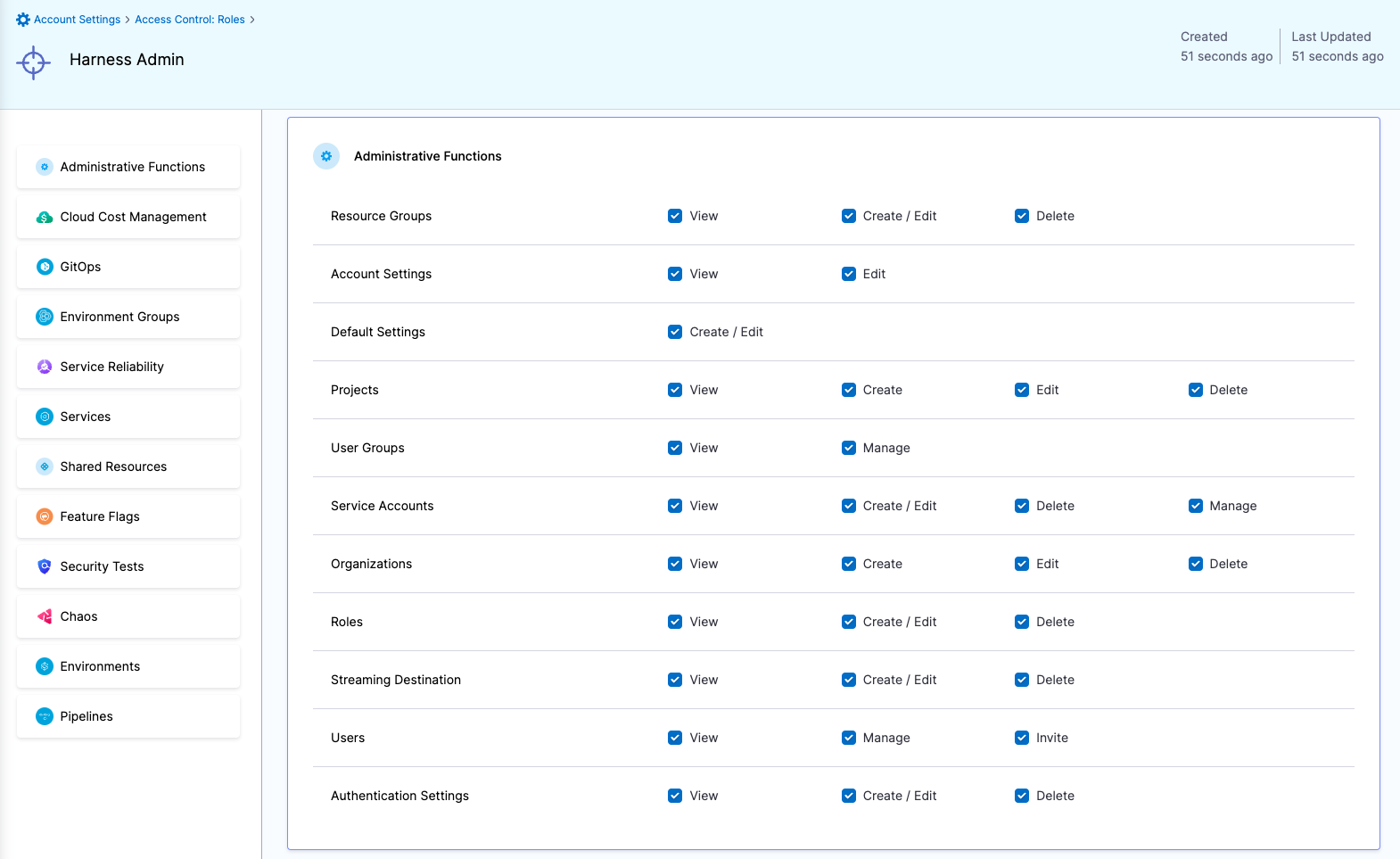

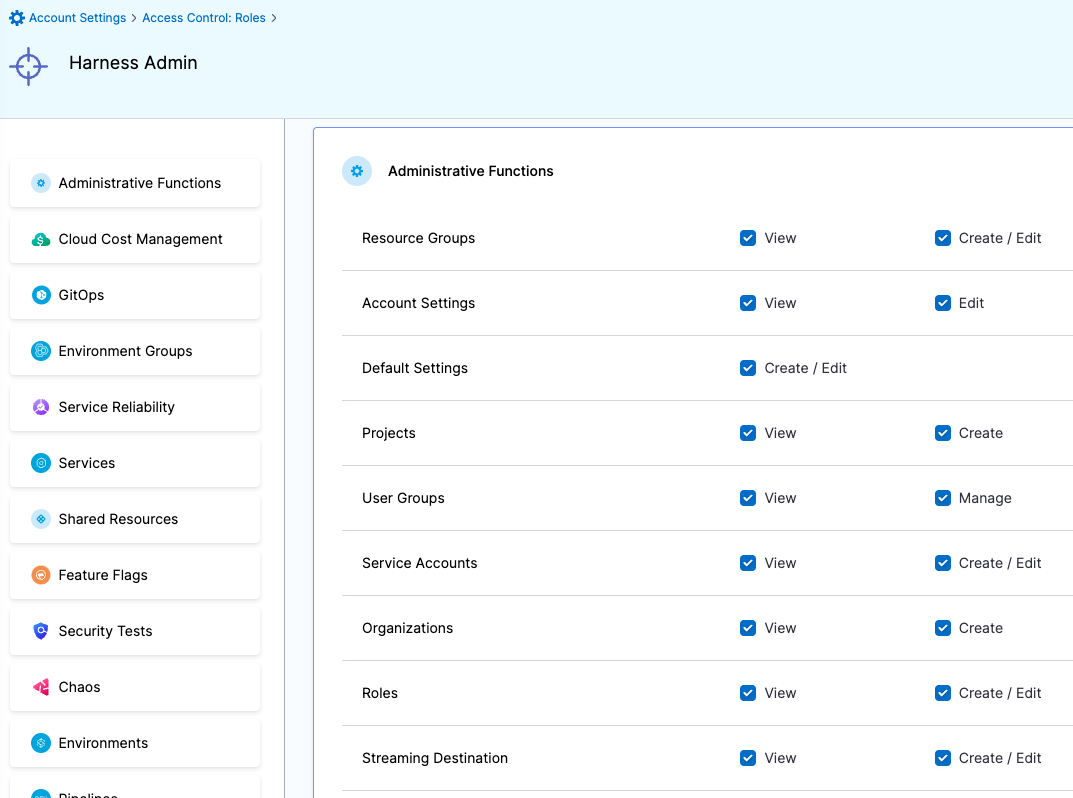

| Devops Admin | Responsible for setting up Policies to adhere to certain organizational standards, managing Users and various other things like Default Settings, Auth Settings, etc | Administrative Functions: All permissions Services: View, Create/Edit, Delete & Access Environments: View & Access Environment Groups: View & Access Shared Resources -> Templates: View, Create/Edit, Delete, Access & Copy Shared Resources -> Files: View, Create/Edit, Delete & Access Shared Resources -> Deployment Freeze: Manage, Override & Global Shared Resources -> Secrets: View & Access Shared Resources -> Connectors: View & Access Shared Resources -> Variables: View, Create/Edit & Delete Shared Resources -> Delegates: View Shared Resources -> Delegate Configurations: View Pipelines: View, Create/Edit, Delete & Execute | Administrative Functions: All Resources under it Shared Resources: All Resources under it except Dashboards Services Environments Environment Groups Pipelines |

| Devops Engineer | Responsible for managing Services, Templates, Files, Variables, Pipelines, Triggers, Input Sets etc. | Services: View, Create/Edit & Access Environments: View & Access Environment Groups: View & Access Shared Resources -> Templates: View, Create/Edit, Access & Copy Shared Resources -> Secrets: View & Access Shared Resources -> Connectors: View & Access Shared Resources -> Variables: View & Create/Edit Shared Resources -> Files: View & Create/Edit Pipelines: View, Create/Edit & Execute | Shared Resources: All Resources under it except Dashboards Services Environments Environment Groups Pipelines |

Distributed DevOps strategy

| Role Type | Role Description | Harness Roles | Harness Resource Groups | Resource Scope |

|---|---|---|---|---|

| Harness Admin | Responsible for managing Users and various other things like Default Settings, Auth Settings, etc | Administrative Functions: All permissions | Administrative Functions: All Resources under it | All (including all Organizations and Projects) |

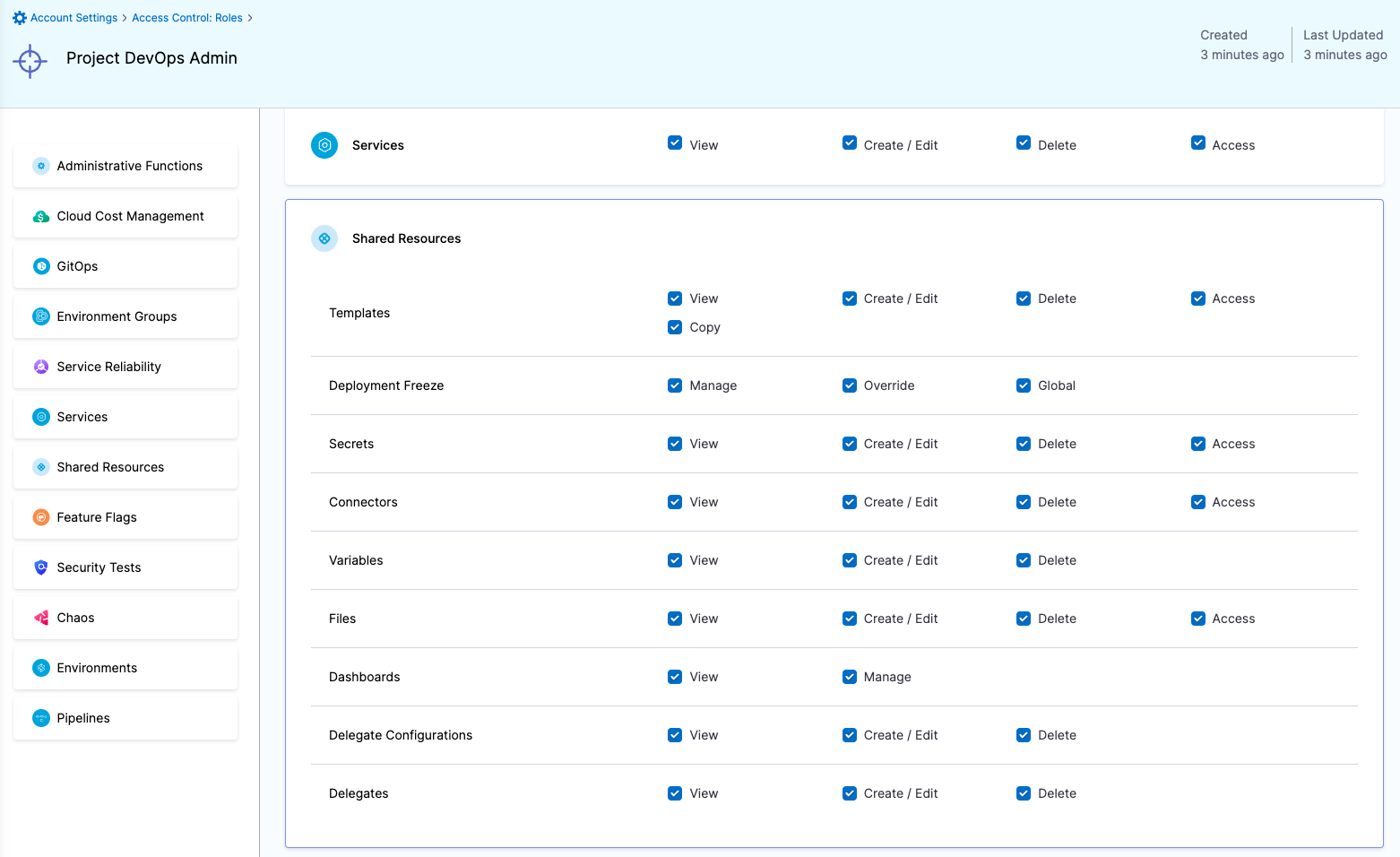

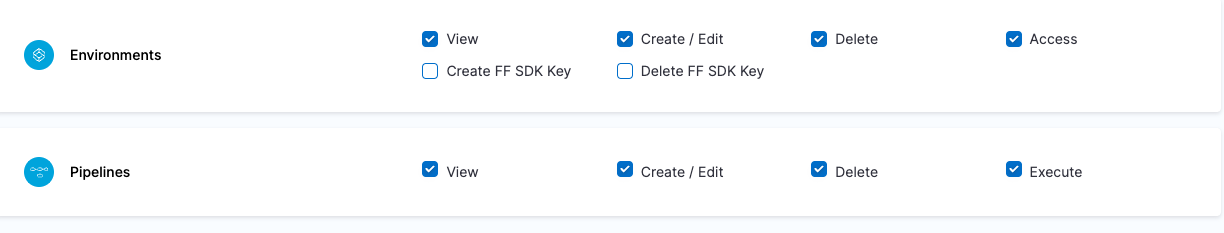

| Project DevOps Admin | Responsible for provisioning infrastructure and managing Harness resources like Secrets, Environment, Connectors and Delegates, setting up Policies to adhere to certain organizational standards and keeping an eye on all the entities of an Organization within Harness Platform | Services: View, Create/Edit, Delete & Access Shared Resources -> Templates: View, Create/Edit, Delete, Access & Copy Shared Resources -> Files: View, Create/Edit, Delete & Access Shared Resources -> Deployment Freeze: Manage, Override & Global Shared Resources -> Secrets: View, Create/Edit, Delete & Access Shared Resources -> Connectors: View, Create/Edit, Delete & Access Shared Resources -> Delegates: View, Create/Edit & Delete Shared Resources -> Delegate Configurations: View, Create/Edit & Delete Environments: View, Create/Edit, Delete & Access Environment Groups: View, Create/Edit, Delete & Access Shared Resources -> Variables: View, Create/Edit & Delete Pipelines: View, Create/Edit, Delete & Execute Shared Resources -> Dashboards: View & Manage | Shared Resources Services Environments Environment Groups Pipelines | Specified Organizations (and their Projects) |

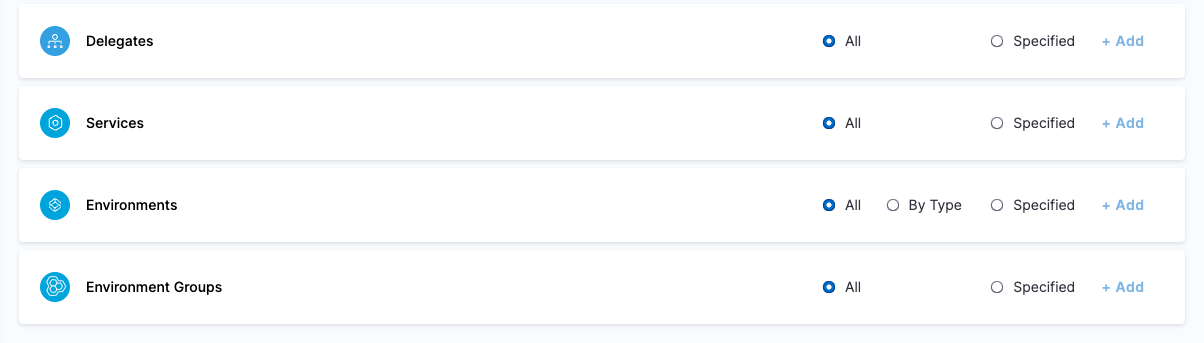

| Project DevOps Engineer | Responsible for managing Services, Templates, Files, Variables, Pipelines, Triggers, Input Sets etc. of an Organization within Harness Platform | Services: View, Create/Edit & Access Shared Resources -> Templates: View, Create/Edit, Access & Copy Shared Resources -> Secrets: View & Access Shared Resources -> Connectors: View & Access Shared Resources -> Variables: View & Create/Edit Shared Resources -> Files: View & Create/Edit Environments: View & Access Environment Groups: View & Access Pipelines: View, Create/Edit & Execute Shared Resources -> Dashboards: View | Shared Resources Services Environments Environment Groups Pipelines | Specified Organizations (and their Projects) |


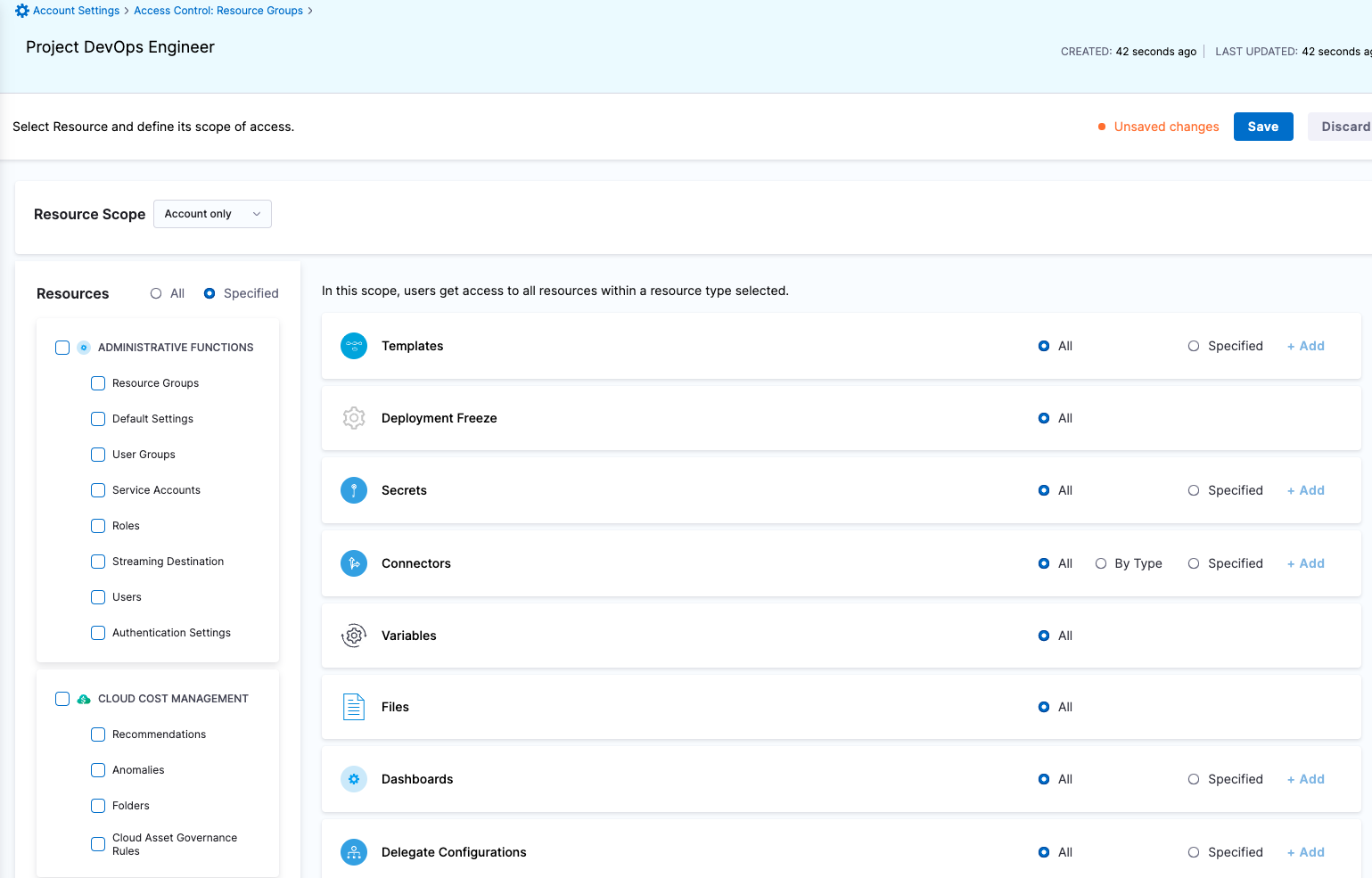


For more information on configuring RBAC in Harness, go to Role-based access control (RBAC) in Harness.

Step 3. Continuous Verification

Harness Continuous Verification (CV) is a critical tool in the deployment pipeline that validates deployments by integrating with APMs and logging tools to verify that the deployment is running safely and efficiently.

Harness CV applies machine learning to every deployment to learn what normal behavior looks like, which lets it identify and flag anomalies in later deployments. During the Verify step, Harness CV can automatically trigger a rollback if it finds anomalies.

Verify step settings for Continuous Verification:

- Continuous Verification type
  - Auto
  - Rolling Update
  - Canary
  - Blue Green
  - Load Test
- Sensitivity
- Duration
- Artifact tag
- Fail on no analysis
- Health Source

For more information, go to Configure CV.
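As a rough illustration of how these settings map to pipeline YAML, the following sketch shows a Verify step for a canary deployment. The field names mirror the Verify step in the sample template later in this topic; the step name, identifier, sensitivity, and duration are assumptions chosen for illustration. Fail on no analysis and health sources are not shown.

```yaml
# Illustrative Verify step; name, identifier, and values are assumptions.
- step:
    type: Verify
    name: Verify Canary
    identifier: verify_canary
    spec:
      type: Canary                # Continuous Verification type
      spec:
        sensitivity: HIGH         # Sensitivity
        duration: 10m             # Duration
        deploymentTag: <+serviceConfig.artifacts.primary.tag>   # Artifact tag
    timeout: 2h
    failureStrategies:
      - onFailure:
          errors:
            - Verification        # raised when CV flags anomalies
          action:
            type: StageRollback   # roll back the stage on verification failure
```

The failure strategy is what turns a failed verification into a rollback, so it is worth reviewing alongside the sensitivity and duration values.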

Phase 5: SSO-enabled DevOps with Infrastructure as Code (IaC)

Step 1. SSO

Harness supports Single Sign-On (SSO) with SAML, integrating with your SAML SSO provider so that your users can log in to Harness as part of your SSO infrastructure. You can choose among several SSO integrations to suit your needs.

For more information, go to Authentication.

Step 2. Templatization & Automation

Templatization

Harness enables you to add templates to create reusable logic and Harness entities (like steps, stages, and pipelines) in your pipelines. You can link templates in your pipelines or share them with your teams for improved efficiency.
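As a minimal sketch of linking a template, a pipeline stage can reference a stage template by its identifier and version label. The pipeline name and identifier below are hypothetical. `Golden_Deploy`, version `6.0`, the stage identifier `Image_Deployment`, and the two pipeline variables are taken from the sample template in this section, which reads them through expressions.

```yaml
# Illustrative only: the pipeline name and identifier are hypothetical.
pipeline:
  name: Golden Deploy Demo
  identifier: Golden_Deploy_Demo
  projectIdentifier: Platform_Demo
  orgIdentifier: default
  stages:
    - stage:
        name: Image Deployment
        identifier: Image_Deployment   # matches the stage path used in the template's condition
        template:
          templateRef: Golden_Deploy   # identifier of the stage template
          versionLabel: "6.0"          # template version to link
  variables:
    # The sample template reads these through <+pipeline.variables.*> expressions.
    - name: githublogin
      type: String
      value: <+input>
    - name: externalDnsName
      type: String
      value: <+input>
```

Pinning `versionLabel` keeps the pipeline on a known template version until you choose to move it forward.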

Templates enhance developer productivity, reduce onboarding time, and enforce standardization across the teams that use Harness. Here's an example stage template that gates a Kubernetes rolling deployment behind an approval, then checks the release with an HTTP step and Continuous Verification.

Sample Golden Deployment Pipeline Template

```yaml
template:
  name: Golden Deploy
  identifier: Golden_Deploy
  type: Stage
  projectIdentifier: Platform_Demo
  orgIdentifier: default
  spec:
    type: Deployment
    spec:
      serviceConfig:
        serviceDefinition:
          type: Kubernetes
          spec:
            artifacts:
              sidecars: []
              primary:
                type: Gcr
                spec:
                  connectorRef: TestGCP_inherit
                  imagePath: sales-209522/platform-demo
                  registryHostname: us.gcr.io
                  tag: <+pipeline.sequenceId>
            manifestOverrideSets: []
            manifests:
              - manifest:
                  identifier: HarnessAppDemoManifests
                  type: K8sManifest
                  spec:
                    store:
                      type: Github
                      spec:
                        connectorRef: Platformdemo2
                        gitFetchType: Branch
                        paths:
                          - k8s/manifests/namespace.yml
                          - k8s/manifests/volumeclaim-creation.yml
                          - k8s/manifests/nodeport-deployment.yml
                          - k8s/manifests/ingress-deployment.yml
                          - k8s/manifests/app-deployment.yml
                        branch: main
                    skipResourceVersioning: false
              - manifest:
                  identifier: values
                  type: Values
                  spec:
                    store:
                      type: Github
                      spec:
                        connectorRef: Platformdemo2
                        gitFetchType: Branch
                        paths:
                          - k8s/values/values.yml
                        branch: main
        serviceRef: HarnessPlatformDemoApp
      infrastructure:
        environmentRef: k8sProduction
        infrastructureDefinition:
          type: KubernetesDirect
          spec:
            connectorRef: platformdemok8s
            namespace: <+pipeline.variables.githublogin>
            releaseName: <+pipeline.variables.githublogin>
        allowSimultaneousDeployments: true
        infrastructureKey: ""
      execution:
        steps:
          - step:
              type: HarnessApproval
              name: Approve this version
              identifier: Keep_this_Version
              spec:
                approvalMessage: Please review the following information and approve the pipeline progression
                includePipelineExecutionHistory: false
                approvers:
                  userGroups:
                    - account.Field_Engineering
                    - account.Harness_Partners
                  minimumCount: 2
                  disallowPipelineExecutor: false
                approverInputs: []
              timeout: 30m
              failureStrategies:
                - onFailure:
                    errors:
                      - Authorization
                    action:
                      type: StageRollback
              when:
                stageStatus: Success
          - step:
              name: Rollout Deployment
              identifier: rolloutDeployment
              type: K8sRollingDeploy
              timeout: 10m
              spec:
                skipDryRun: false
          - stepGroup:
              name: Service Reliability
              identifier: Service_Reliability
              steps:
                - step:
                    type: Http
                    name: API Verification
                    identifier: Smart_Verification
                    spec:
                      url: http://<+pipeline.variables.externalDnsName>/<+pipeline.variables.githublogin>/data/api.php?func=verif
                      method: GET
                      headers: []
                      outputVariables:
                        - name: message
                          value: <+json.object(httpResponseBody).message>
                          type: String
                        - name: level
                          type: String
                          value: <+json.object(httpResponseBody).level>
                      assertion: <+json.object(httpResponseBody).level> == "ok"
                      requestBody: test test test
                      inputVariables: []
                    timeout: 30s
                    failureStrategies:
                      - onFailure:
                          errors:
                            - AllErrors
                          action:
                            type: Ignore
                - step:
                    type: Verify
                    name: Logs-Metrics Verification
                    identifier: verify_dev
                    spec:
                      type: Rolling
                      spec:
                        sensitivity: MEDIUM
                        duration: 5m
                        deploymentTag: <+serviceConfig.artifacts.primary.tag>
                    timeout: 2h
                    failureStrategies:
                      - onFailure:
                          errors:
                            - Verification
                          action:
                            type: StageRollback
                            spec:
                              timeout: 2h
                              onTimeout:
                                action:
                                  type: StageRollback
                      - onFailure:
                          errors:
                            - Unknown
                          action:
                            type: ManualIntervention
                            spec:
                              timeout: 2h
                              onTimeout:
                                action:
                                  type: Ignore
                    when:
                      stageStatus: Success
                      condition: <+pipeline.stages.Image_Deployment.spec.execution.steps.Service_Reliability.steps.Smart_Verification.output.outputVariables.level> == "error"
        rollbackSteps:
          - step:
              name: Rollback Rollout Deployment
              identifier: rollbackRolloutDeployment
              type: K8sRollingRollback
              timeout: 10m
              spec:
                skipDryRun: false
      serviceDependencies: []
    failureStrategies:
      - onFailure:
          errors:
            - AllErrors
          action:
            type: StageRollback
    variables: []
    when:
      pipelineStatus: Success
  versionLabel: "6.0"
```

Terraform Automation

The Harness Terraform Provider enables automated lifecycle management of the Harness Platform using Terraform. You can use it to onboard onto Harness on day 1 and to make day 2 changes. The resources it can manage include the projects, secrets, connectors, services, environments, infrastructure definitions, and pipelines created by the script in this section.

For more information, go to Onboard with Terraform Provider.

Terraform script to create Harness resources (Services, Environments, Pipelines)

```hcl
variable "platform_api_key" {}
variable "accountId" {}
variable "endpoint" {}
variable "projectIdentifier" {}
variable "orgIdentifier" {}
variable "connectorIdentifier" {}
variable "k8sMasterUrl" {}
variable "secretIdentifier" {}
variable "secretValue" {}
variable "serviceIdentifier" {}
variable "envIdentifier" {}
variable "infraIdentifier" {}
variable "pipelineIdentifier" {}

terraform {
  required_providers {
    harness = {
      source  = "harness/harness"
      version = "0.16.1"
    }
  }
}

provider "harness" {
  endpoint         = "${var.endpoint}"
  account_id       = "${var.accountId}"
  platform_api_key = "${var.platform_api_key}"
}

resource "harness_platform_project" "test" {
  identifier = "${var.projectIdentifier}"
  name       = "${var.projectIdentifier}"
  org_id     = "${var.orgIdentifier}"
  color      = "#0063F7"
}

resource "time_sleep" "wait_30_seconds" {
  depends_on      = [harness_platform_project.test]
  create_duration = "30s"
}

resource "harness_platform_secret_text" "inline" {
  depends_on                = [harness_platform_project.test, time_sleep.wait_30_seconds]
  identifier                = "${var.secretIdentifier}"
  name                      = "${var.secretIdentifier}"
  description               = "example"
  tags                      = ["foo:bar"]
  org_id                    = "${var.orgIdentifier}"
  project_id                = "${var.projectIdentifier}"
  secret_manager_identifier = "harnessSecretManager"
  value_type                = "Inline"
  value                     = "${var.secretValue}"
}

resource "harness_platform_connector_kubernetes" "serviceAccount" {
  depends_on  = [harness_platform_project.test, harness_platform_secret_text.inline]
  identifier  = "${var.connectorIdentifier}"
  org_id      = "${var.orgIdentifier}"
  project_id  = "${var.projectIdentifier}"
  name        = "${var.connectorIdentifier}"
  description = "description"
  tags        = ["foo:bar"]

  service_account {
    master_url                = "${var.k8sMasterUrl}"
    service_account_token_ref = "${var.secretIdentifier}"
  }
}

resource "harness_platform_service" "example" {
  depends_on  = [harness_platform_project.test]
  identifier  = "${var.serviceIdentifier}"
  org_id      = "${var.orgIdentifier}"
  project_id  = "${var.projectIdentifier}"
  name        = "${var.serviceIdentifier}"
  description = "description"
  tags        = ["foo:bar"]
  yaml        = <<-EOT
    service:
      name: "${var.serviceIdentifier}"
      identifier: "${var.serviceIdentifier}"
      tags: {}
      serviceDefinition:
        spec:
          manifests:
            - manifest:
                identifier: manifest
                type: K8sManifest
                spec:
                  store:
                    type: Git
                    spec:
                      connectorRef: org.GitConnectorForAutomationTest
                      gitFetchType: Branch
                      paths:
                        - ng-automation/k8s/templates/
                      branch: master
                  valuesPaths:
                    - ng-automation/k8s/values.yaml
                  skipResourceVersioning: false
          artifacts:
            primary:
              primaryArtifactRef: <+input>
              sources:
                - spec:
                    connectorRef: org.DockerConnectorForAutomationTest
                    imagePath: library/nginx
                    tag: latest
                  identifier: artifact
                  type: DockerRegistry
        type: Kubernetes
  EOT
}

resource "harness_platform_environment" "example" {
  depends_on = [harness_platform_project.test]
  identifier = "${var.envIdentifier}"
  name       = "${var.envIdentifier}"
  org_id     = "${var.orgIdentifier}"
  project_id = "${var.projectIdentifier}"
  tags       = ["foo:bar", "baz"]
  type       = "PreProduction"
  yaml       = <<-EOT
    environment:
      name: "${var.envIdentifier}"
      identifier: "${var.envIdentifier}"
      description: ""
      tags: {}
      type: PreProduction
      orgIdentifier: "${var.orgIdentifier}"
      projectIdentifier: "${var.projectIdentifier}"
      variables: []
  EOT
}

resource "harness_platform_infrastructure" "example" {
  depends_on      = [harness_platform_project.test, harness_platform_environment.example]
  identifier      = "${var.infraIdentifier}"
  name            = "${var.infraIdentifier}"
  org_id          = "${var.orgIdentifier}"
  project_id      = "${var.projectIdentifier}"
  env_id          = "${var.envIdentifier}"
  type            = "KubernetesDirect"
  deployment_type = "Kubernetes"
  yaml            = <<-EOT
    infrastructureDefinition:
      name: "${var.infraIdentifier}"
      identifier: "${var.infraIdentifier}"
      description: ""
      tags: {}
      orgIdentifier: "${var.orgIdentifier}"
      projectIdentifier: "${var.projectIdentifier}"
      environmentRef: "${var.envIdentifier}"
      deploymentType: Kubernetes
      type: KubernetesDirect
      spec:
        connectorRef: org.KubernetesConnectorForAutomationTest
        namespace: default
        releaseName: release-<+INFRA_KEY>
      allowSimultaneousDeployments: true
  EOT
}

resource "harness_platform_pipeline" "example" {
  depends_on = [harness_platform_project.test]
  identifier = "${var.pipelineIdentifier}"
  org_id     = "${var.orgIdentifier}"
  project_id = "${var.projectIdentifier}"
  name       = "${var.pipelineIdentifier}"
  yaml       = <<-EOT
    pipeline:
      name: "${var.pipelineIdentifier}"
      identifier: "${var.pipelineIdentifier}"
      projectIdentifier: "${var.projectIdentifier}"
      orgIdentifier: "${var.orgIdentifier}"
      tags: {}
      stages:
        - stage:
            name: stage
            identifier: stage
            description: ""
            type: Deployment
            spec:
              deploymentType: Kubernetes
              service:
                serviceRef: "${var.serviceIdentifier}"
                serviceInputs:
                  serviceDefinition:
                    type: Kubernetes
                    spec:
                      artifacts:
                        primary:
                          primaryArtifactRef: <+input>
                          sources: <+input>
              environment:
                environmentRef: "${var.envIdentifier}"
                deployToAll: false
                infrastructureDefinitions:
                  - identifier: "${var.infraIdentifier}"
              execution:
                steps:
                  - step:
                      name: Rollout Deployment
                      identifier: rolloutDeployment
                      type: K8sRollingDeploy
                      timeout: 10m
                      spec:
                        skipDryRun: false
                        pruningEnabled: false
                rollbackSteps:
                  - step:
                      name: Rollback Rollout Deployment
                      identifier: rollbackRolloutDeployment
                      type: K8sRollingRollback
                      timeout: 10m
                      spec:
                        pruningEnabled: false
            tags: {}
            failureStrategies:
              - onFailure:
                  errors:
                    - AllErrors
                  action:
                    type: StageRollback
            variables:
              - name: resourceNamePrefix
                type: String
                description: ""
                value: qwe
  EOT
}
```

YAML to run the above Terraform script in a Harness pipeline as a custom stage

```yaml
pipeline:
  tags: {}
  stages:
    - stage:
        name: Create Resource
        identifier: Create_Resource
        description: Create harness resources using Harness Terraform Provider
        type: Custom
        spec:
          execution:
            steps:
              - step:
                  type: TerraformPlan
                  name: TerraformPlan
                  identifier: TerraformPlan
                  spec:
                    provisionerIdentifier: createResource
                    configuration:
                      command: Apply
                      configFiles:
                        store:
                          spec:
                            connectorRef: org.GitHubRepoConnectorForAutomationTest
                            gitFetchType: Branch
                            branch: master
                            folderPath: automation/terraform/K8sAutomation
                          type: Github
                      varFiles:
                        - varFile:
                            spec:
                              content: |-
                                platform_api_key = "Your Platform Api Token"
                                accountId = "accountID"
                                endpoint = "https://app.harness.io/gateway"
                                projectIdentifier = "Project Name to be created"
                                orgIdentifier = "Org where you want this project to be"
                                connectorIdentifier = "K8s Connector Identifier"
                                k8sMasterUrl = "K8s Master Url"
                                serviceIdentifier = "Service Identifier"
                                envIdentifier = "Environment Identifier"
                                infraIdentifier = "Infra Definition Identifier"
                                pipelineIdentifier = "Pipeline Identifier"
                                secretIdentifier = "Secret Identifier"
                                secretValue = "K8s ServiceAccount Token Secret Value"
                            identifier: terraformVariables
                            type: Inline
                      secretManagerRef: harnessSecretManager
                  timeout: 10m
              - step:
                  type: TerraformApply
                  name: TerraformApply
                  identifier: TerraformApply
                  spec:
                    configuration:
                      type: InheritFromPlan
                    provisionerIdentifier: createResource
                  timeout: 10m
        tags: {}
  identifier: Harness_Terraform_Provider
  name: Harness Terraform Provider
  delegateSelectors: []
  projectIdentifier: NGPipeAutoterraform_providerVcP4LI2gPm
  orgIdentifier: Ng_Pipelines_K8s_Organisations
```
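The inline variable file above carries the API token and the service account token as plain text. One option is to reference Harness text secrets instead. The fragment below is a hedged sketch of the `varFiles` block only, assuming that Harness resolves expressions inside inline variable content and that secrets with the hypothetical identifiers `harness_platform_api_token` and `k8s_sa_token` already exist. The remaining variables stay as in the pipeline above.

```yaml
# Sketch only: both secret identifiers are hypothetical and must exist in Harness.
varFiles:
  - varFile:
      spec:
        content: |-
          platform_api_key = "<+secrets.getValue("harness_platform_api_token")>"
          secretValue = "<+secrets.getValue("k8s_sa_token")>"
          accountId = "<+account.identifier>"
      identifier: terraformVariables
      type: Inline
```

Keeping tokens in a secret manager means the pipeline YAML can be stored in Git without exposing credentials.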