Setting Up the Backend with IDP Pipeline

Self-service workflows in Harness IDP are powered by Harness Pipelines. Each workflow’s backend is configured using Actions and Harness Pipelines. Below is a detailed guide to setting this up in Harness IDP.

Harness Pipelines: Backend Orchestration

Harness Pipelines act as the orchestration engine for self-service workflows in Harness IDP. A workflow action triggers a pipeline, and the pipeline executes the specific tasks the workflow requires.

Connecting Inputs, Actions and Harness Pipelines

When a workflow is executed, users provide input details required for pipeline execution. These inputs are passed into the pipeline through a workflow action, which triggers specific steps in the pipeline. These steps can perform tasks such as launching a CI/CD process, registering a service in the catalog, setting up infrastructure, etc.

The action accepts the Harness Pipeline URL as input, along with an authentication token that is automatically inserted into the parameters section. This integration works because Harness IDP is part of the broader Harness SaaS platform. You can also control access to workflows through the pipelines' RBAC.

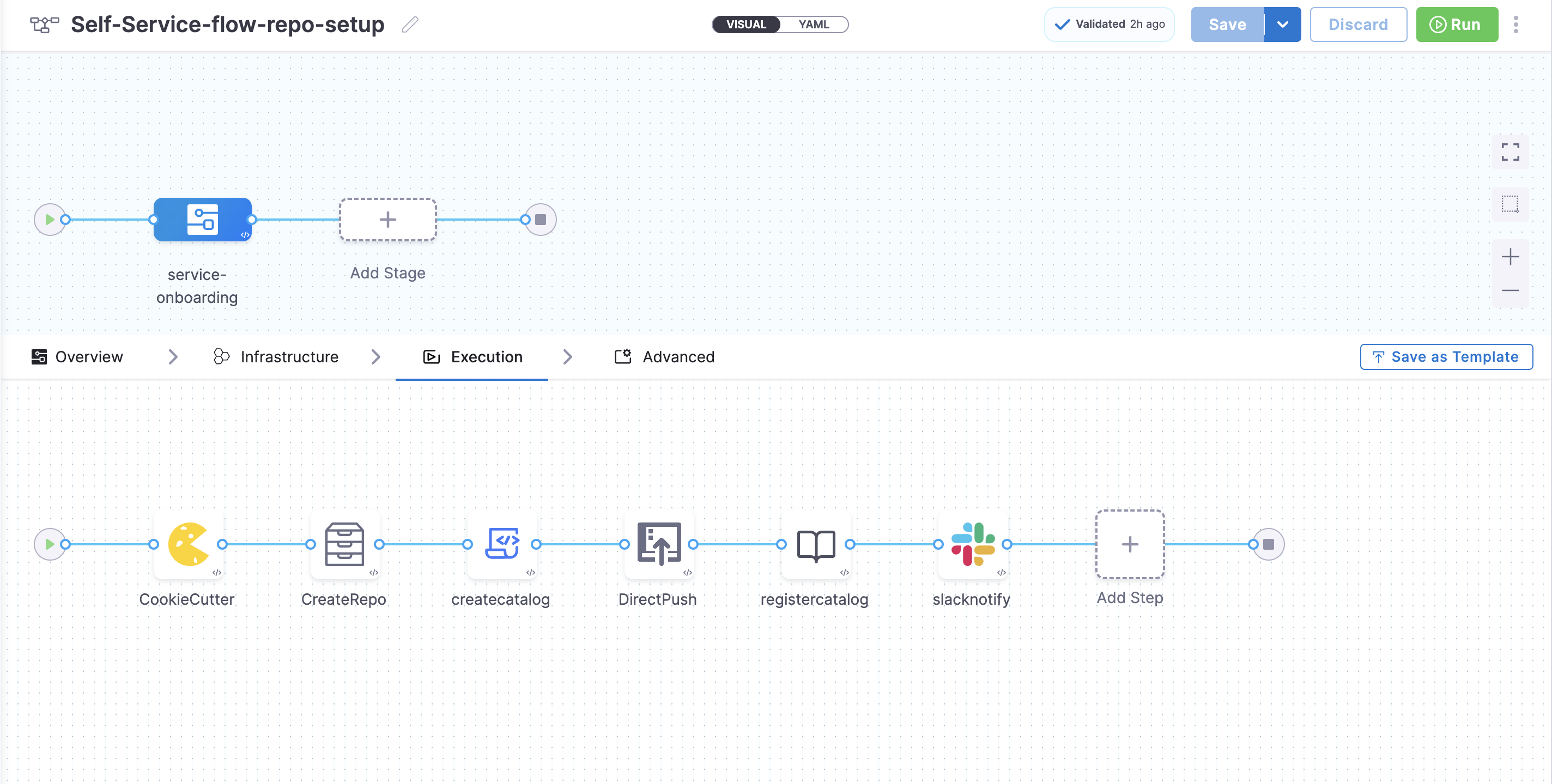

IDP Stage

Harness IDP includes a native IDP Stage where all IDP-specific tasks required for pipeline execution are pre-configured as pipeline steps within the stage. This enables quick and efficient creation of self-service workflows.

The IDP Stage provides built-in support for:

- Git cloning

- Cookiecutter templating

- Repository creation

- Catalog creation and registration

- Slack notifications

- Resource creation using Harness IaCM powered by OpenTofu

Currently, Harness-specific workflow actions support:

- IDP Stage

- Custom Stage (Available with Harness CD License or Free Tier)

- Codebase-disabled CI Stage with Run Step (Available with Harness CI License)

Creating a Harness Pipeline from Harness IDP

To create a Harness Pipeline using the IDP Stage, follow these steps:

-

In your Harness IDP, go to Admin -> Select Project.

-

Now start with Create a Pipeline.

-

Add a Name, select the type as Inline and Continue.

-

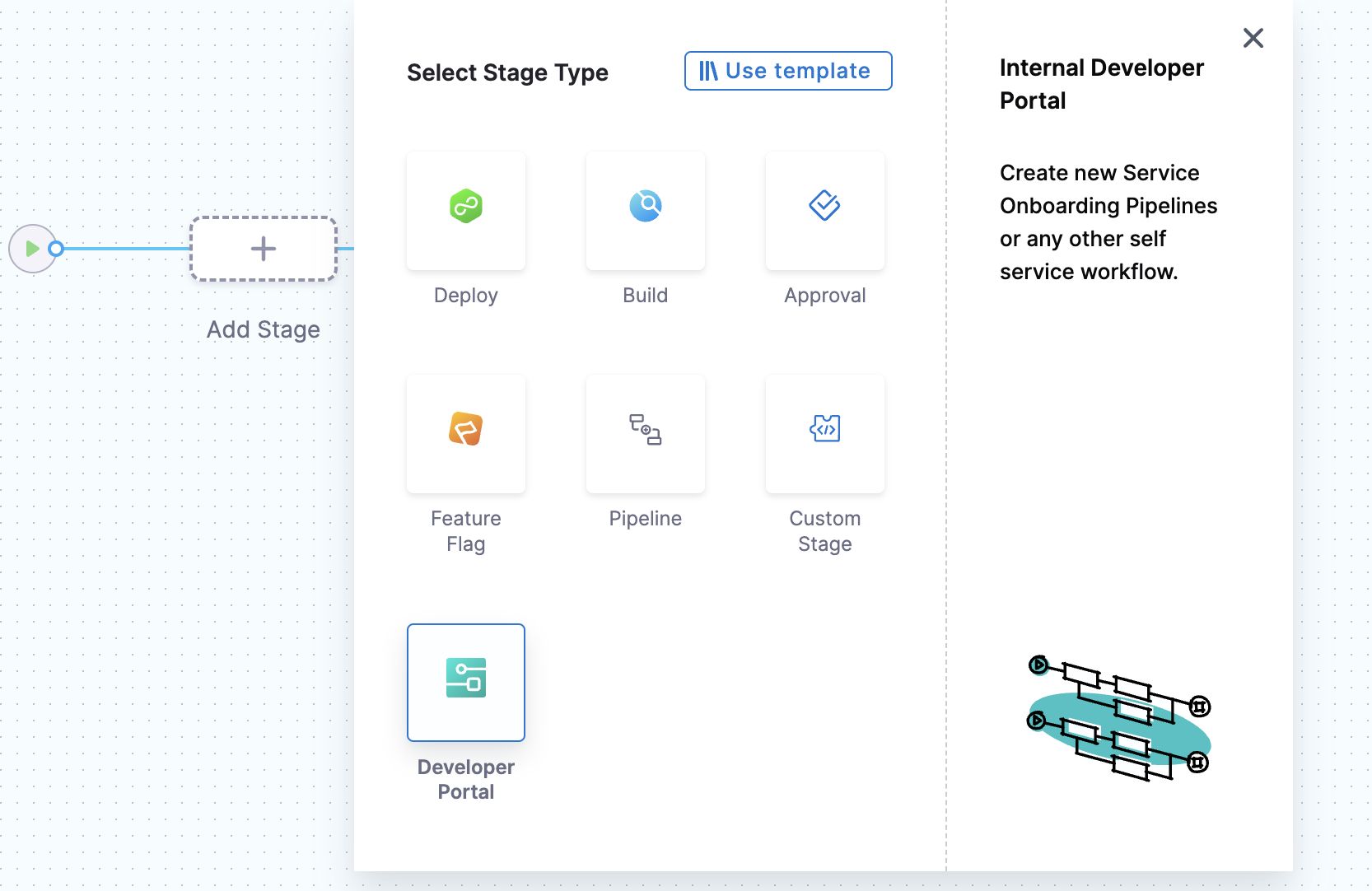

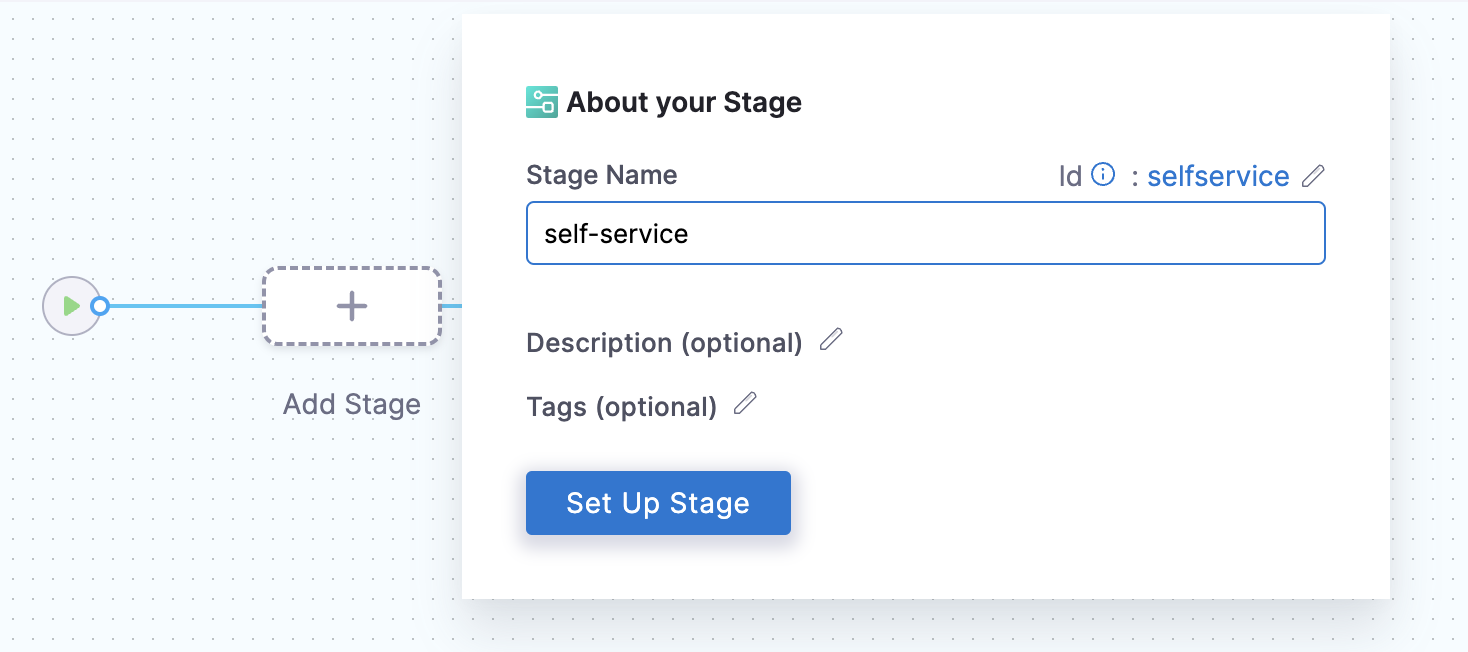

Now Select Stage Type as Developer Portal and add a name for your stage to Set Up Stage.

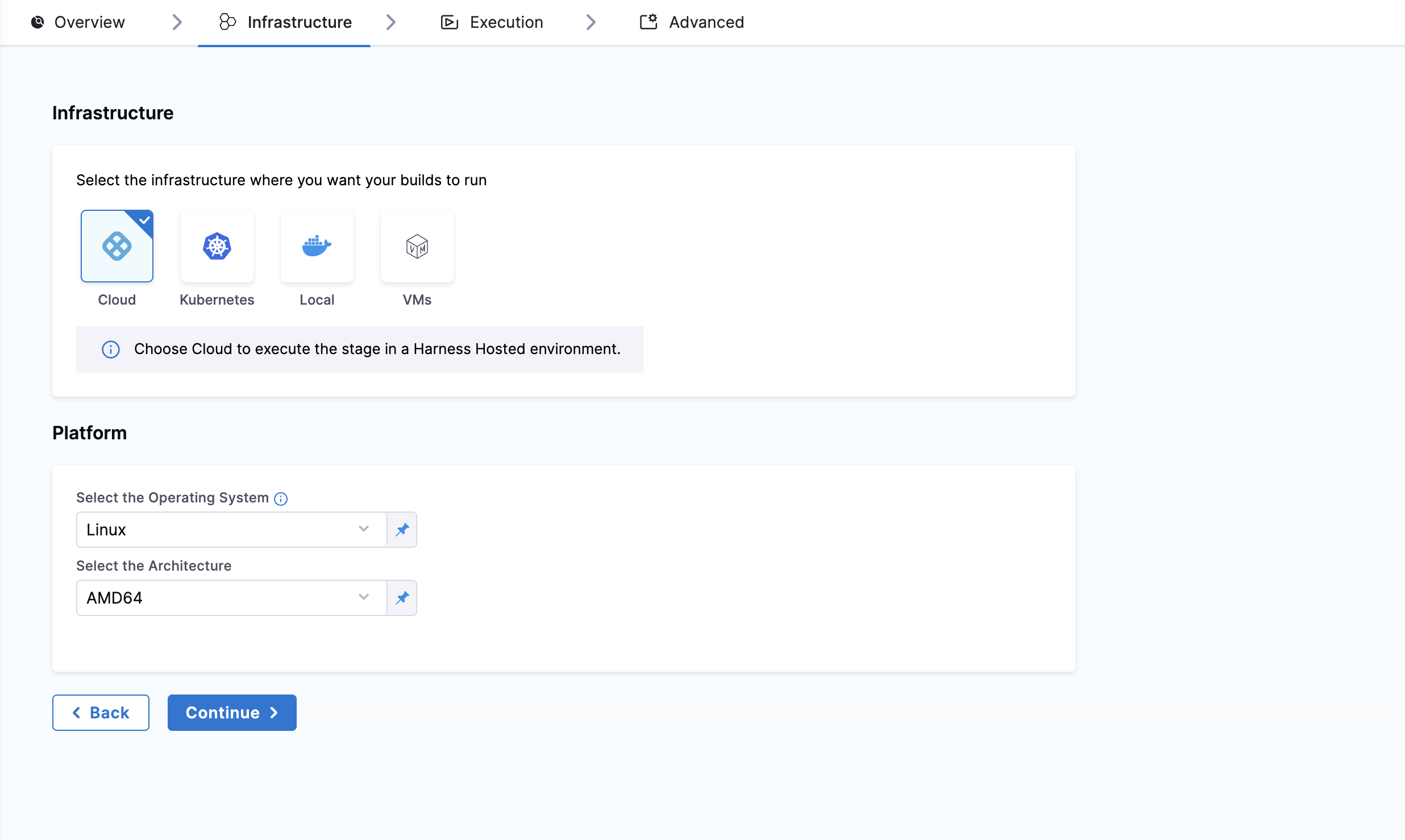

Infrastructure

Under the Infrastructure tab, Harness recommends Harness Cloud, but you can also use a Kubernetes cluster, a local runner, or self-managed AWS/GCP/Azure VM build infrastructure.

Pipeline Variables

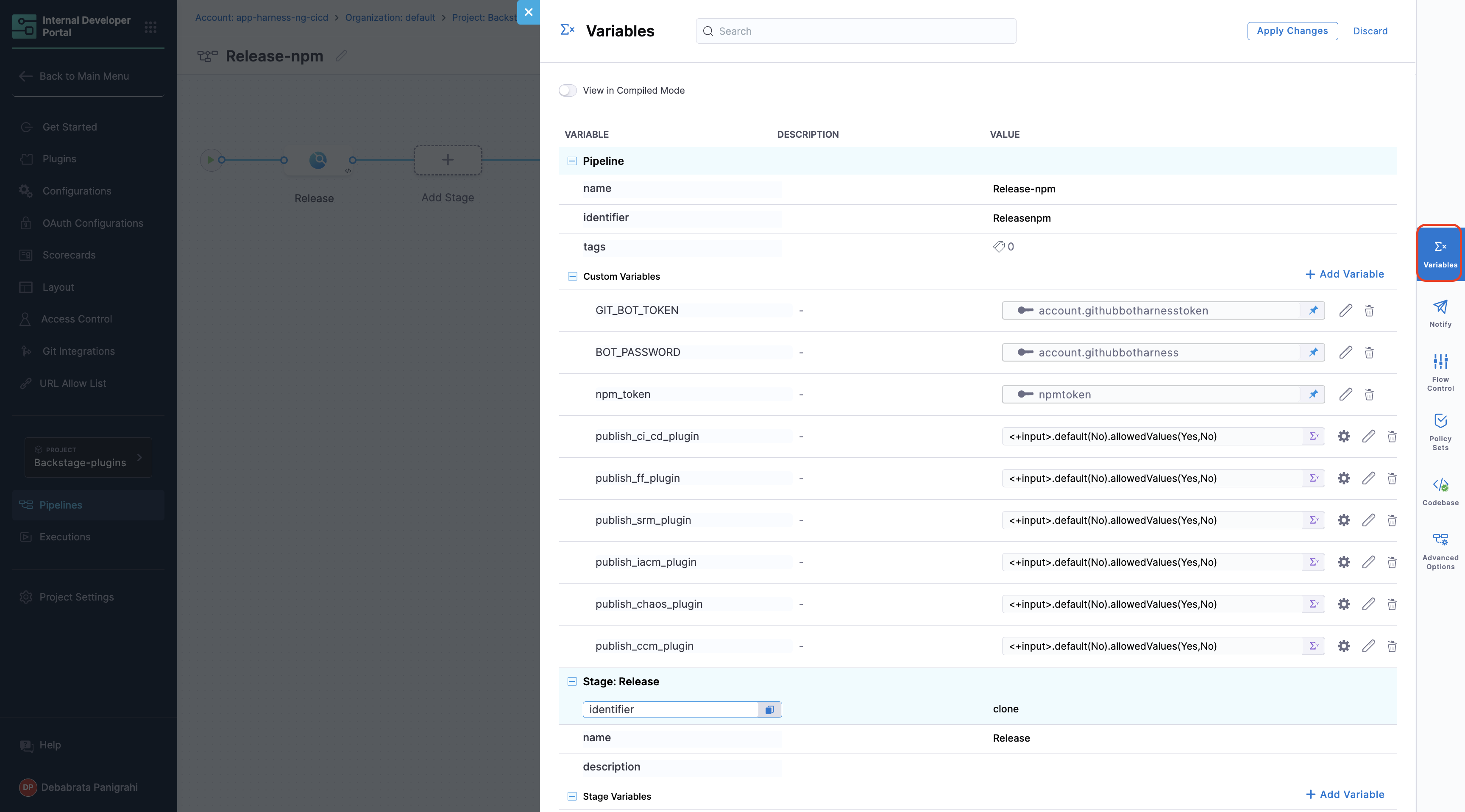

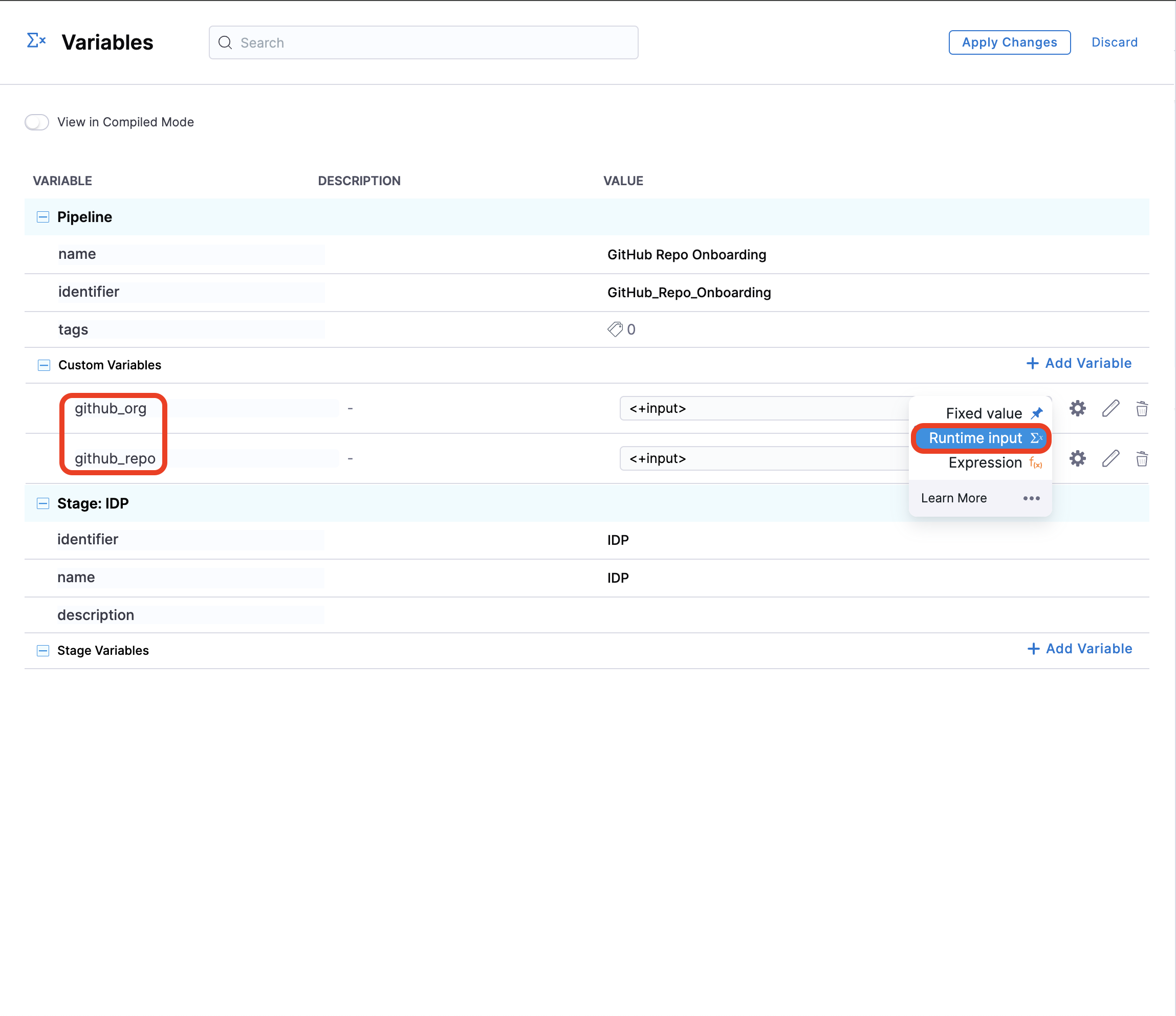

Before adding execution steps, create pipeline variables with runtime inputs. The execution steps reference these variables as expressions when the pipeline runs.

To add pipeline variables:

- Navigate to the right-hand side of your page and click on the Variables icon.

- Under Custom Variables, select +Add Variable.

- Assign a name to the variable and set the input type to Runtime.

Passing Inputs

The spec.parameters field in workflow.yaml contains the inputs required for the configuration. The keys under properties represent unique IDs for various input fields. These keys correspond to the pipeline variables that must be set as runtime inputs when configuring the pipeline. These inputs are designed to prompt the developer to provide necessary details when creating a new application.

The spec.steps field specifies a single action: triggering a Harness pipeline. This action requires three main inputs:

- Pipeline URL: The endpoint for the pipeline to be triggered.

- Input Set: A collection of runtime input variables that the pipeline requires.

- API Key: Used to authenticate and authorize the pipeline trigger.

All pipeline variables must be declared in the inputset within workflow.yaml, with their values aligned to the input parameters specified in the spec.parameters properties section.
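The alignment rule above can be checked mechanically before you save a workflow. The following Python sketch assumes workflow.yaml has already been parsed into dictionaries (for example with a YAML library) and that you know the names of the pipeline's runtime-input variables; the function name check_inputset is hypothetical and is not part of Harness IDP.

```python
import re

# Matches a workflow reference such as "${{ parameters.projectId }}" and
# captures the parameter key ("projectId").
PARAM_REF = re.compile(r"\$\{\{\s*parameters\.([A-Za-z0-9_]+)\s*\}\}")


def check_inputset(properties: dict, inputset: dict, pipeline_variables: list) -> list:
    """Report mismatches between the inputset, the spec.parameters properties,
    and the pipeline's runtime-input variables (all already parsed from YAML)."""
    problems = []
    # Every pipeline runtime variable must be declared as an inputset key.
    for name in pipeline_variables:
        if name not in inputset:
            problems.append(f"pipeline variable '{name}' is missing from inputset")
    # Every parameter referenced by an inputset value must exist under properties.
    for key, value in inputset.items():
        match = PARAM_REF.fullmatch(str(value).strip())
        if match and match.group(1) not in properties:
            problems.append(
                f"inputset key '{key}' references unknown parameter '{match.group(1)}'"
            )
    return problems


# Values taken from the example workflow.yaml in this section.
properties = {"projectId": {"type": "string"}, "template_type": {"type": "string"}}
inputset = {
    "project_id": "${{ parameters.projectId }}",
    "template_type": "${{ parameters.template_type }}",
}
print(check_inputset(properties, inputset, ["project_id", "template_type"]))  # []
```

Note that inputset keys (such as project_id) must match the pipeline variable names, while the parameter keys they reference (such as projectId) may differ.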

Example workflow.yaml

The ${{ parameters.x }} syntax is supported only within the steps section when configuring the workflow backend. It cannot be used in the properties section to reference another parameter.

```yaml
...
spec:
  parameters:
    - title: Service Details
      properties:
        projectId:
          title: Project Identifier
          description: Harness Project Identifier
          type: string
          ui:field: HarnessProjectPicker
        template_type:
          title: Type of the Template
          type: string
          description: Type of the Template
          ui:readonly: ${{ parameters.another_field }} ## NOT SUPPORTED
  steps:
    - id: trigger
      name: Creating your react app
      action: trigger:harness-custom-pipeline
      input:
        url: "https://app.harness.io/ng/account/account_id/module/idp/orgs/org_id/projects/project_id/pipelines/pipeline_id/pipeline-studio/?storeType=INLINE"
        inputset:
          project_id: ${{ parameters.projectId }} ## SUPPORTED
          template_type: ${{ parameters.template_type }} ## SUPPORTED
...
```
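To catch the unsupported pattern shown in the example above before registering a workflow, you could scan the properties section for template expressions. This is a hypothetical Python helper, assuming the properties mapping has already been parsed from YAML; it walks nested mappings only and flags every string value that contains a ${{ ... }} expression.

```python
import re

# Any "${{ ... }}" template expression.
TEMPLATE_EXPR = re.compile(r"\$\{\{.*?\}\}")


def find_unsupported_refs(properties: dict, path: str = "properties") -> list:
    """Return the dotted paths of string values under `properties` that
    contain a template expression (only supported in the steps section)."""
    found = []
    for key, value in properties.items():
        here = f"{path}.{key}"
        if isinstance(value, dict):
            # Recurse into nested mappings such as individual field definitions.
            found.extend(find_unsupported_refs(value, here))
        elif isinstance(value, str) and TEMPLATE_EXPR.search(value):
            found.append(here)
    return found


properties = {
    "projectId": {"type": "string", "ui:field": "HarnessProjectPicker"},
    "template_type": {"type": "string", "ui:readonly": "${{ parameters.another_field }}"},
}
print(find_unsupported_refs(properties))
# ['properties.template_type.ui:readonly']
```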

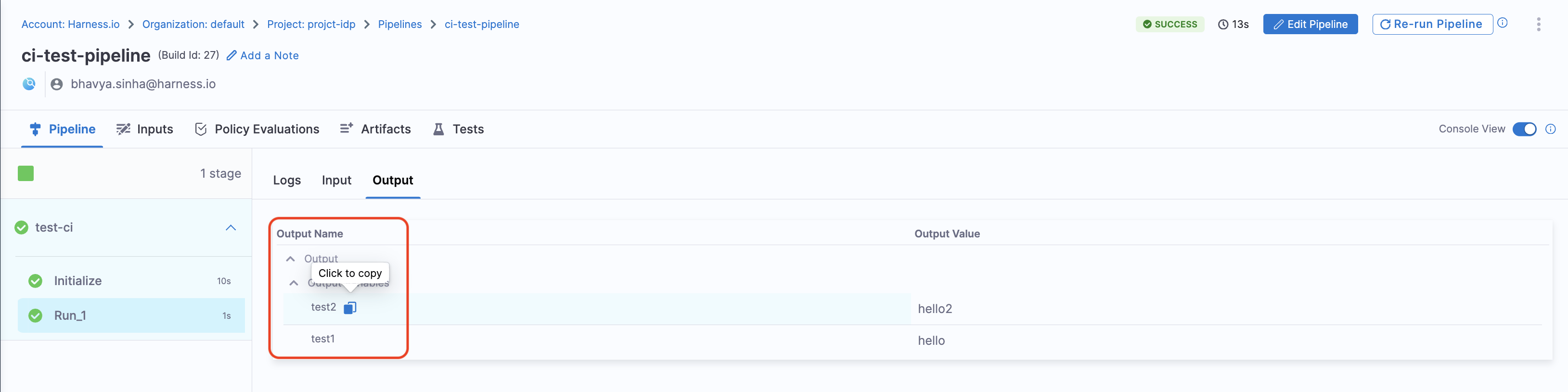

Fetching Outputs

You can configure your workflows to fetch output from the Harness Pipeline and display pipeline output variables using workflow.yaml. Here’s how you can do it:

- In your workflow.yaml, under the steps property field, set showOutputVariables to true.

- Define pipeline output variables under the output field in your YAML configuration.

There are two ways to add output variables in the workflow syntax:

- Directly reference the output variable name:

  ${{ steps.trigger.output.test2 }}

  Here, test2 is the output variable created in the pipeline.

- Use the JEXL expression from the execution logs:

  - Copy the JEXL expression of the output variable and remove the JEXL constructs (<+ and >).

  - Example:

    ${{ steps.trigger.output['pipeline.stages.testci.spec.execution.steps.Run_1.output.outputVariables.test1'] }}

  - In this case, pipeline.stages.testci.spec.execution.steps.Run_1.output.outputVariables.test1 is derived from <+pipeline.stages.testci.spec.execution.steps.Run_1.output.outputVariables.test1>.

This approach ensures that pipeline outputs are correctly fetched and displayed.
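The second approach is a mechanical transformation: strip <+ and > from the JEXL expression and wrap the remaining path in steps.<id>.output[...]. A minimal Python sketch of that transformation follows; the helper name is hypothetical, and the default step ID trigger is an assumption taken from the examples in this section.

```python
import re

# A Harness JEXL expression such as "<+pipeline.stages.testci...test1>".
JEXL = re.compile(r"<\+([^<>]+)>")


def to_workflow_output_ref(jexl_expr: str, step_id: str = "trigger") -> str:
    """Remove the JEXL constructs and wrap the remaining path in the
    workflow output syntax ${{ steps.<step_id>.output['<path>'] }}."""
    match = JEXL.fullmatch(jexl_expr.strip())
    if not match:
        raise ValueError(f"not a JEXL expression: {jexl_expr!r}")
    # Doubled braces in the f-string produce literal "{{" and "}}".
    return f"${{{{ steps.{step_id}.output['{match.group(1)}'] }}}}"


expr = "<+pipeline.stages.testci.spec.execution.steps.Run_1.output.outputVariables.test1>"
print(to_workflow_output_ref(expr))
# ${{ steps.trigger.output['pipeline.stages.testci.spec.execution.steps.Run_1.output.outputVariables.test1'] }}
```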

Example workflow.yaml

```yaml
steps:
  - id: trigger
    name: Creating your react app
    action: trigger:harness-custom-pipeline
    input:
      url: "https://app.harness.io/ng/account/vpCkHKsDSxK9_KYfjCTMKA/home/orgs/default/projects/communityeng/pipelines/IDP_New_NextJS_app/pipeline-studio/?storeType=INLINE"
      inputset:
        project_name: ${{ parameters.project_name }}
        github_repo: ${{ parameters.github_repo }}
        cloud_provider: ${{ parameters.provider }}
        db: ${{ parameters.db }}
        cache: ${{ parameters.cache }}
      apikey: ${{ parameters.token }}
      showOutputVariables: true

output:
  text:
    - title: Output Variable
      content: |
        Output Variable **test2** is `${{ steps.trigger.output.test2 }}`
    - title: Another Output Variable
      content: |
        Output Variable **test1** with fqnPath is `${{ steps.trigger.output['pipeline.stages.testci.spec.execution.steps.Run_1.output.outputVariables.test1'] }}`
```

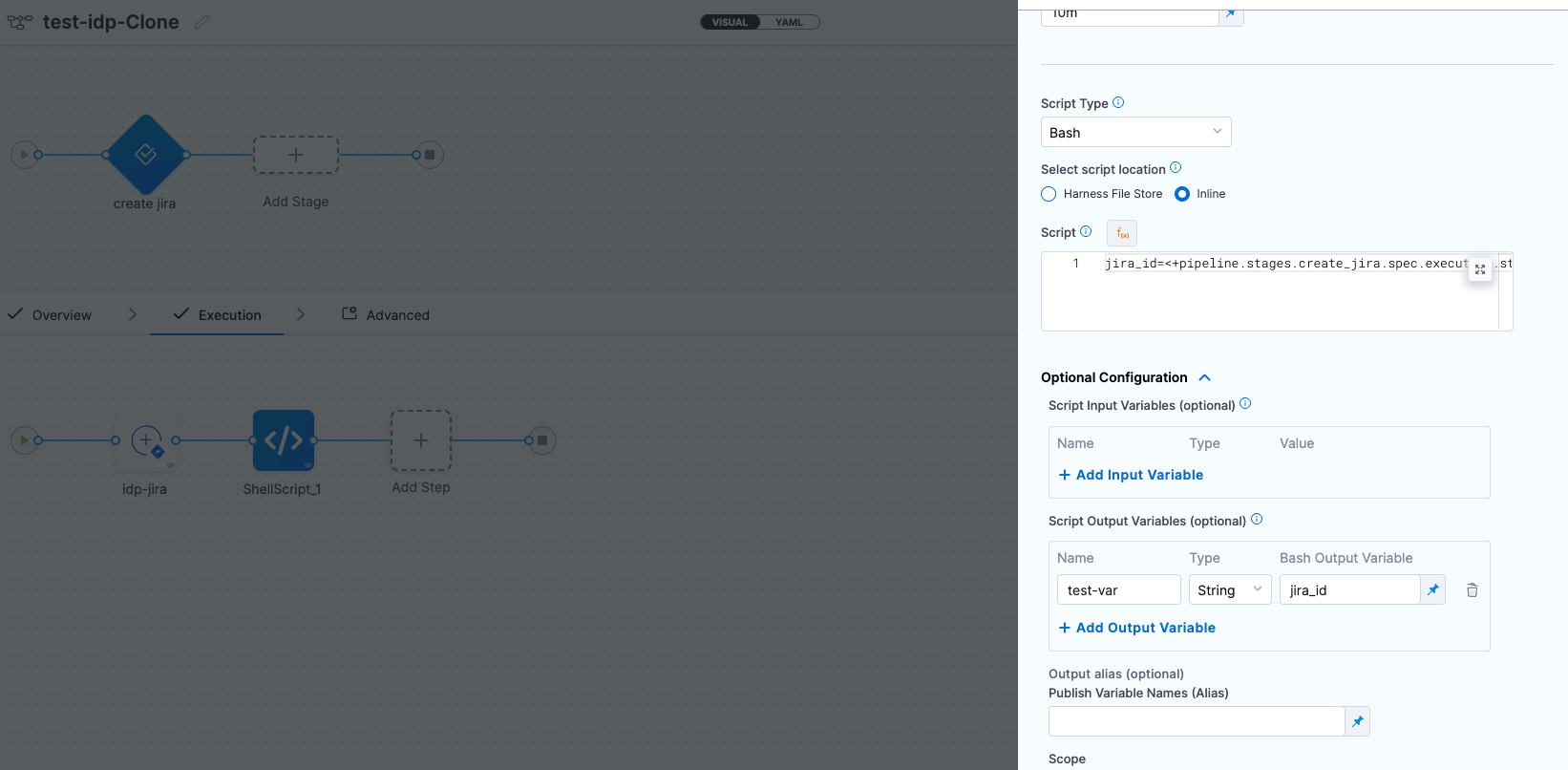

The use case above relies on user-defined output variables. You can also use system-generated output variables by assigning them to a new variable in the Shell Script step, as shown below.

For example, if a system-generated output variable is jira_id, you can define it as a user-defined output variable under Optional Configuration by assigning it to a new variable, such as test-var. This newly defined variable (test-var) can then be displayed as output in the IDP workflows.

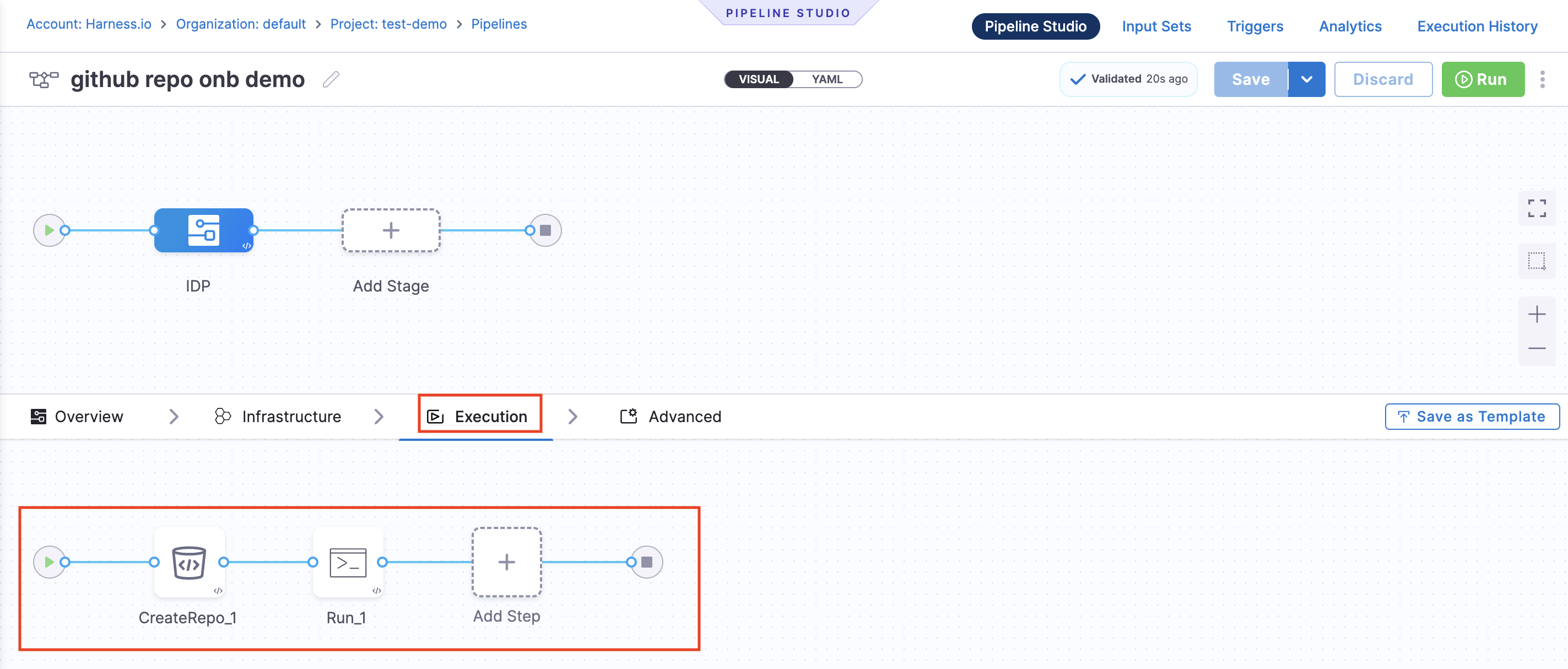

Execution Steps

You can add various execution steps (pre-included with the IDP stage) under the Execution tab. Refer to the detailed guide below for step-by-step instructions on adding and implementing IDP stage execution steps.

IDP Stage

The self-service flow in IDP is powered by Harness Pipelines. A stage is a part of a pipeline that contains the logic to perform a major segment of the larger workflow defined in the pipeline. Stages are often based on workflow milestones, such as building, approving, and delivering.

The process of adding a stage to a pipeline is the same for all Harness modules. When you add a stage to a pipeline, you select the stage type, such as Developer Portal for IDP or Build for CI or Deploy for CD. The available stage settings are determined by the stage type, and, if applicable, the module associated with the selected stage type.

This functionality is limited to the modules and settings that you have access to.

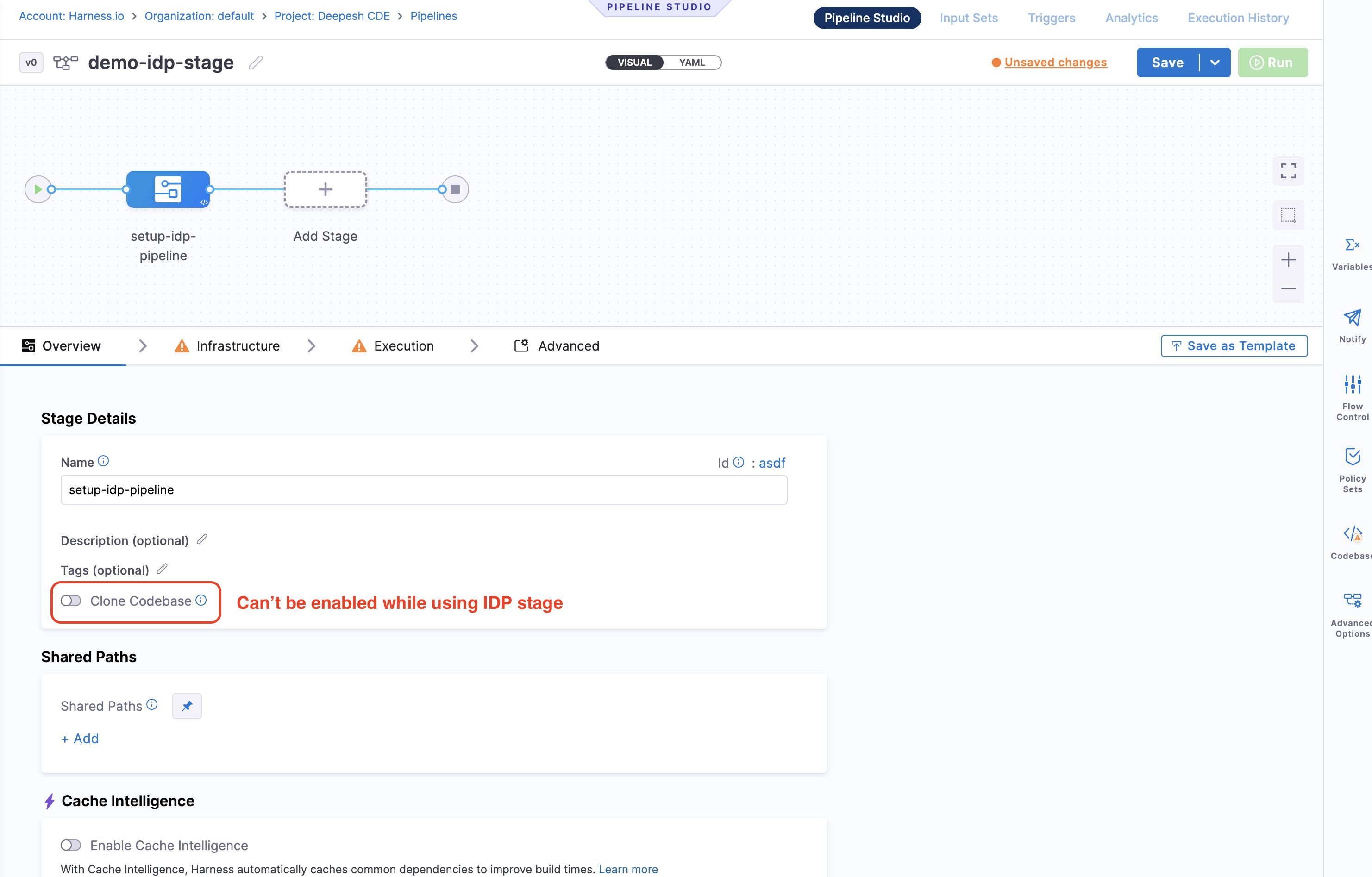

Limitations

- The "Clone Codebase (Git Clone)" action is not supported at the stage level for the IDP stage.

- Looping strategies (Parallelism, Matrix, Repeat) are not supported for the IDP stage.

1. Git Clone

(Skip this step if the repository containing your Cookiecutter template is public.)

Add a Git Clone step to clone a repository into the Developer Portal stage's workspace. By cloning the repository, you gain access to the necessary code, scripts, or configurations, enabling various actions.

The Git Clone step uses a containerized step group. For more information, refer to Containerize Step Groups.


- In your Developer Portal stage, under Execution, select Add Step.

- Select Git Clone.

- Configure the step using the settings described below.

Configuration Settings

-

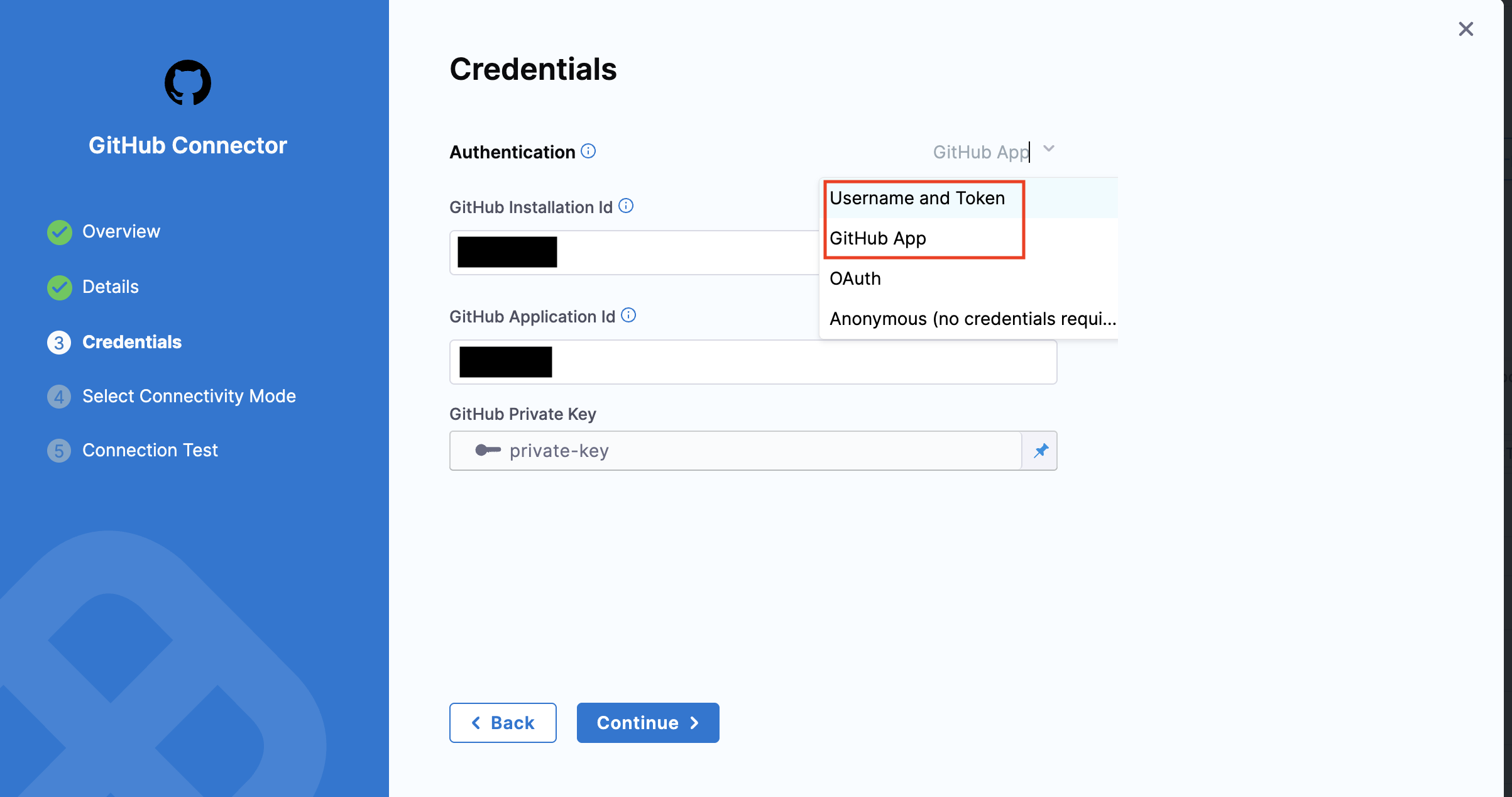

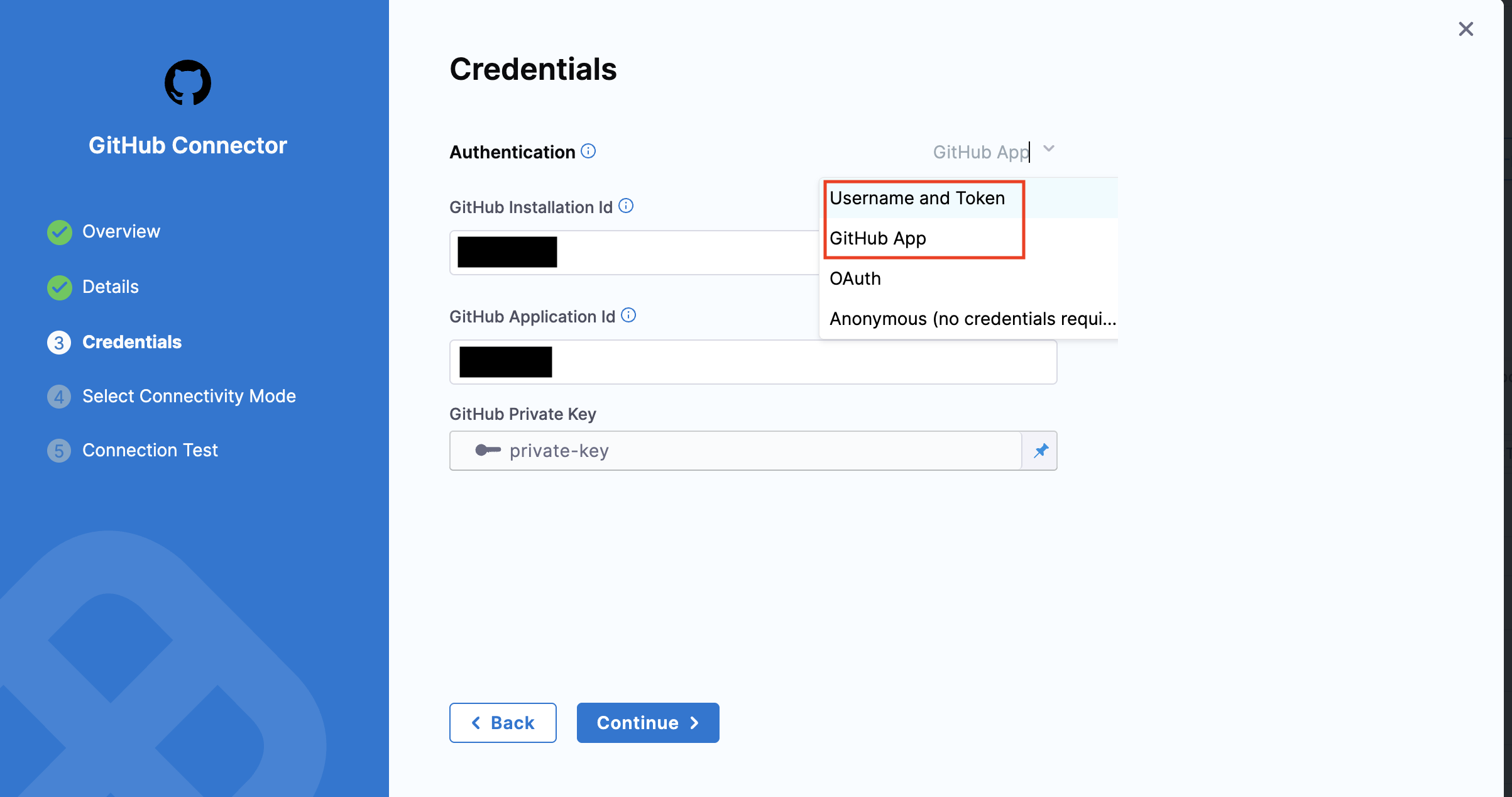

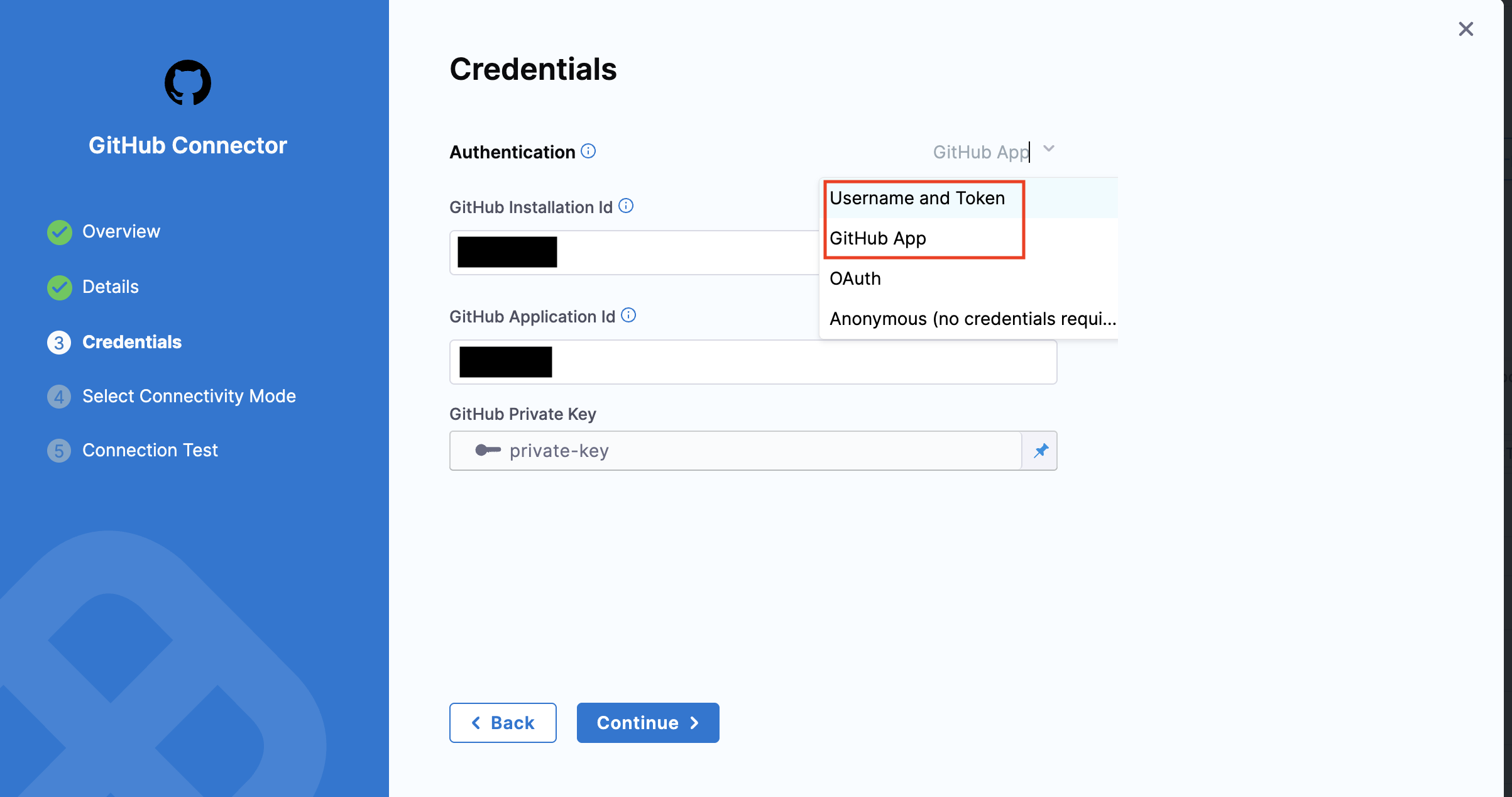

Select Git Provider: You can choose Third-party Git Provider if your code is not hosted in the Harness Code Repository.

-

Connector: You can select a connector for the source control provider hosting the code repository that you want to clone.

-

For authentication, Username-Password and GitHub App methods are supported. The connection type

sshis currently not supported for Connectors. -

You can use a GitHub App to authenticate the Harness GitHub connector. Refer to this detailed guide on how to use a GitHub App with a GitHub connector in the IDP stage.

-

The Bitbucket connector supports both Access Token and API Token authentication modes. For more details, see the reference documentation on Access Token mode and API Token mode.

Note: Please ensure that you have admin permissions on a GitHub repository within your GitHub organization and that you are able to install GitHub Apps in that repository. This setup is only supported for organization accounts, not personal GitHub accounts.

You can refer to the following resources for more information on creating code repo connectors:

- Azure Repos: Connect to Azure Repos

- Bitbucket: Bitbucket Connector Settings Reference

- GitHub: GitHub Connector Settings Reference

- GitLab: GitLab Connector Settings Reference

- Other Git Providers:

- Repository Name:

- If the connector's URL Type is set to Repository, the Repository Name is automatically populated based on the connector's configuration.

- If the connector's URL Type is set to Account, you must manually specify the repository name to clone into the stage workspace.

- Build Type, Branch Name, and Tag Name: For Build Type, choose:

- Git Branch to clone code from a specific branch.

- Git Tag to clone code from a specific commit tag. Based on your selection, specify the Branch Name or Tag Name.

You can use fixed values, runtime inputs, or variable expressions for branch and tag names. For example, set the branch or tag name as a runtime input to specify it when the pipeline runs.

- Clone Directory (Optional): The target path in the stage workspace where the repository is cloned.

- Depth: The number of commits to fetch when cloning the repository. The default depth is 0, which fetches all commits from the specified branch. For more details, refer to the Git Clone Documentation.

```yaml
- step:
    type: GitClone
    name: GitClone_1
    identifier: GitClone_1
    spec:
      connectorRef: account.GitConnectorBzGN8G1COj
      repoName: myrepo
      build:
        type: branch
        spec:
          branch: main
```
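The Build Type, Branch Name or Tag Name, Clone Directory, and Depth settings correspond closely to options of a plain git clone. The Python sketch below only illustrates those Git semantics by assembling an equivalent command line; it is an assumption-based illustration, not how the Harness Git Clone step is implemented. It treats a depth of 0 as "fetch all commits", matching the default described above, and relies on git clone --branch accepting either a branch or a tag name.

```python
import shlex
from typing import List, Optional


def git_clone_args(repo_url: str, build_type: str, ref: str,
                   depth: int = 0, clone_dir: Optional[str] = None) -> List[str]:
    """Build a `git clone` argument list mirroring the step settings.

    build_type is "branch" or "tag"; git's --branch flag accepts either.
    depth 0 means full history, so --depth is added only for positive values.
    """
    if build_type not in ("branch", "tag"):
        raise ValueError("build_type must be 'branch' or 'tag'")
    if depth < 0:
        raise ValueError("depth must be >= 0")
    args = ["git", "clone", "--branch", ref]
    if depth > 0:
        args += ["--depth", str(depth)]  # shallow clone of the last `depth` commits
    args.append(repo_url)
    if clone_dir:
        args.append(clone_dir)  # optional target directory
    return args


print(shlex.join(git_clone_args("https://github.com/org-name/myrepo.git", "branch", "main", depth=1)))
# git clone --branch main --depth 1 https://github.com/org-name/myrepo.git
```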

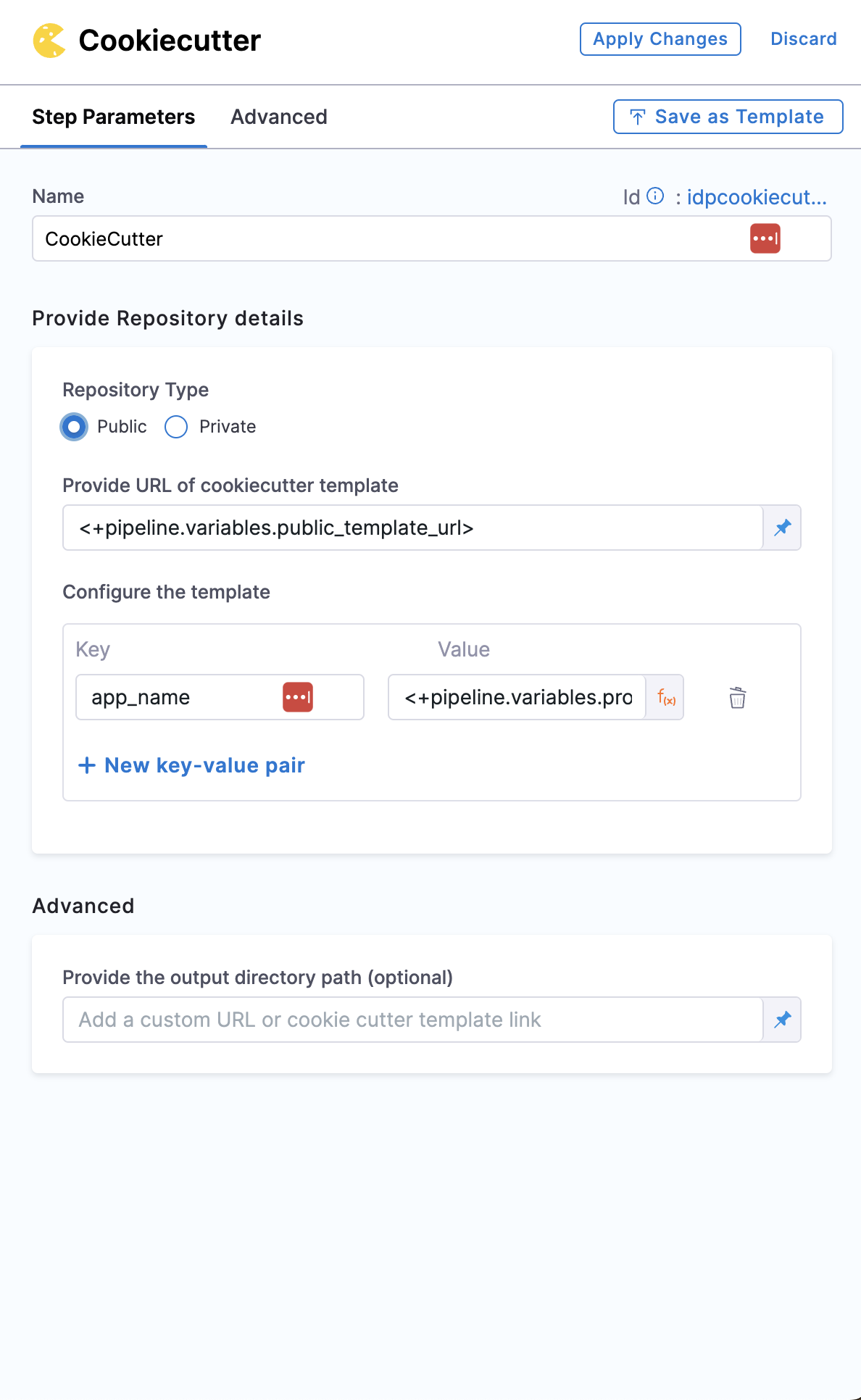

2. Cookiecutter

The Cookiecutter step takes the inputs for a Cookiecutter template and renders the template with them.

The example for this step uses pipeline variables as inputs for many fields. Make sure you have created the corresponding pipeline variables with the correct values, as described under Pipeline Variables.


Repository Type

Select the type of the Git repository where your template is stored: public or private.

If the repository is private, add a Git Clone step before this step, and set the Path for Template to the Clone Directory configured in the Git Clone step.

For a public template, add the public URL of the template in your Git provider, for example https://github.com/devesh-harness/test-cookicutter.

If you use your own Cookiecutter template, the repository must have the following directory structure:

```
cookiecutter-something/
├── {{ cookiecutter.project_name }}/  <--------- Project template
│   └── ...
├── blah.txt                          <--------- Non-templated files/dirs
│                                                go outside
│
└── cookiecutter.json                 <--------- Prompts & default values
```

You must have:

- A cookiecutter.json file.

- A {{ cookiecutter.project_name }}/ directory, where project_name is defined in your cookiecutter.json.

Beyond that, you can have whatever files/directories you want.
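If you maintain your own template, a quick local check of the required layout can save a failed pipeline run. This hypothetical Python helper checks only the two requirements listed above (a cookiecutter.json at the root that defines project_name, and a {{ cookiecutter.project_name }}/ directory) and uses only the standard library.

```python
import json
import tempfile
from pathlib import Path

# Directory name from the layout above; adjust if your template uses another variable.
PROJECT_DIR = "{{ cookiecutter.project_name }}"


def check_template_layout(root: Path) -> list:
    """Return the problems found in a Cookiecutter template checkout at `root`."""
    problems = []
    config = root / "cookiecutter.json"
    if not config.is_file():
        problems.append("missing cookiecutter.json at the repository root")
    elif "project_name" not in json.loads(config.read_text()):
        problems.append("cookiecutter.json does not define project_name")
    if not (root / PROJECT_DIR).is_dir():
        problems.append(f"missing '{PROJECT_DIR}/' project template directory")
    return problems


# Build a minimal valid layout in a temporary directory and check it.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "cookiecutter.json").write_text(json.dumps({"project_name": "sample"}))
    (root / PROJECT_DIR).mkdir()
    print(check_template_layout(root))  # []
```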

Cookiecutter renders only the Project Template, using the values defined in cookiecutter.json. For this reason, keep one Cookiecutter template per repository, with cookiecutter.json at the repository root. If your Project Template contains a file that should be copied as-is rather than rendered when the template runs, list it under the _copy_without_render key in your cookiecutter.json:

```json
{
  "project_slug": "sample",
  "_copy_without_render": [
    "*.html",
    "*not_rendered_dir",
    "rendered_dir/not_rendered_file.ini"
  ]
}
```
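To preview which files would be copied without rendering, you can test paths against the patterns yourself. This Python sketch assumes shell-style glob matching of each pattern against paths relative to the project template directory, as done by Python's fnmatch; in fnmatch, * also matches /, so *.html matches HTML files in subdirectories too. Check the Cookiecutter documentation for the exact matching rules of your version.

```python
from fnmatch import fnmatch

# Patterns from the cookiecutter.json example above.
copy_without_render = [
    "*.html",
    "*not_rendered_dir",
    "rendered_dir/not_rendered_file.ini",
]


def is_copy_only(rel_path: str, patterns: list) -> bool:
    """True if rel_path matches any _copy_without_render pattern."""
    return any(fnmatch(rel_path, pattern) for pattern in patterns)


for path in ["docs/page.html", "rendered_dir/not_rendered_file.ini", "rendered_dir/settings.ini"]:
    print(path, is_copy_only(path, copy_without_render))
```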

Path for Template

First, select the input type: Fixed Value, Runtime Input, or Expression.

For a Fixed Value, provide the absolute template URL, for example https://github.com/devesh-harness/test-cookicutter.

For a Runtime Input, provide the absolute template URL when you run the pipeline.

For an Expression, provide a pipeline variable, in JEXL format, that holds the template URL. This option is commonly used when implementing self-service flows.

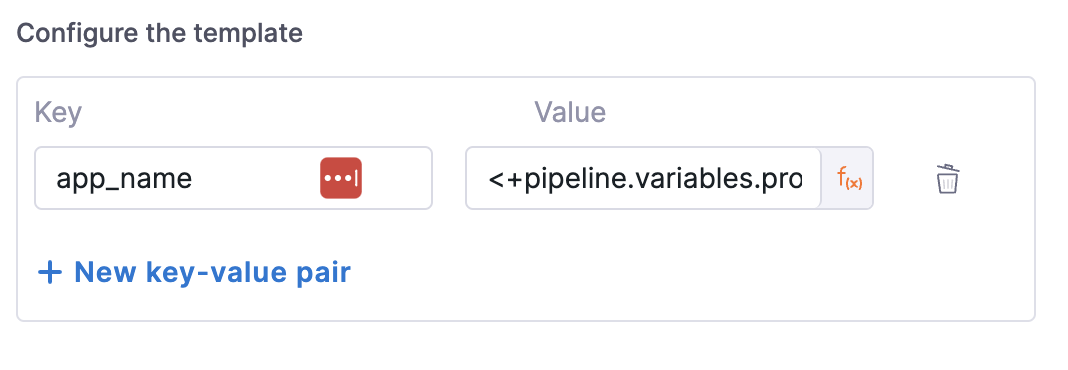

Configure Template

Provide the inputs required by the template as key-value pairs.

```yaml
- step:
    type: CookieCutter
    name: CookieCutter
    identifier: idpcookiecutter
    spec:
      templateType: public
      publicTemplateUrl: <+pipeline.variables.public_template_url>
      cookieCutterVariables:
        app_name: <+pipeline.variables.project_name>
```

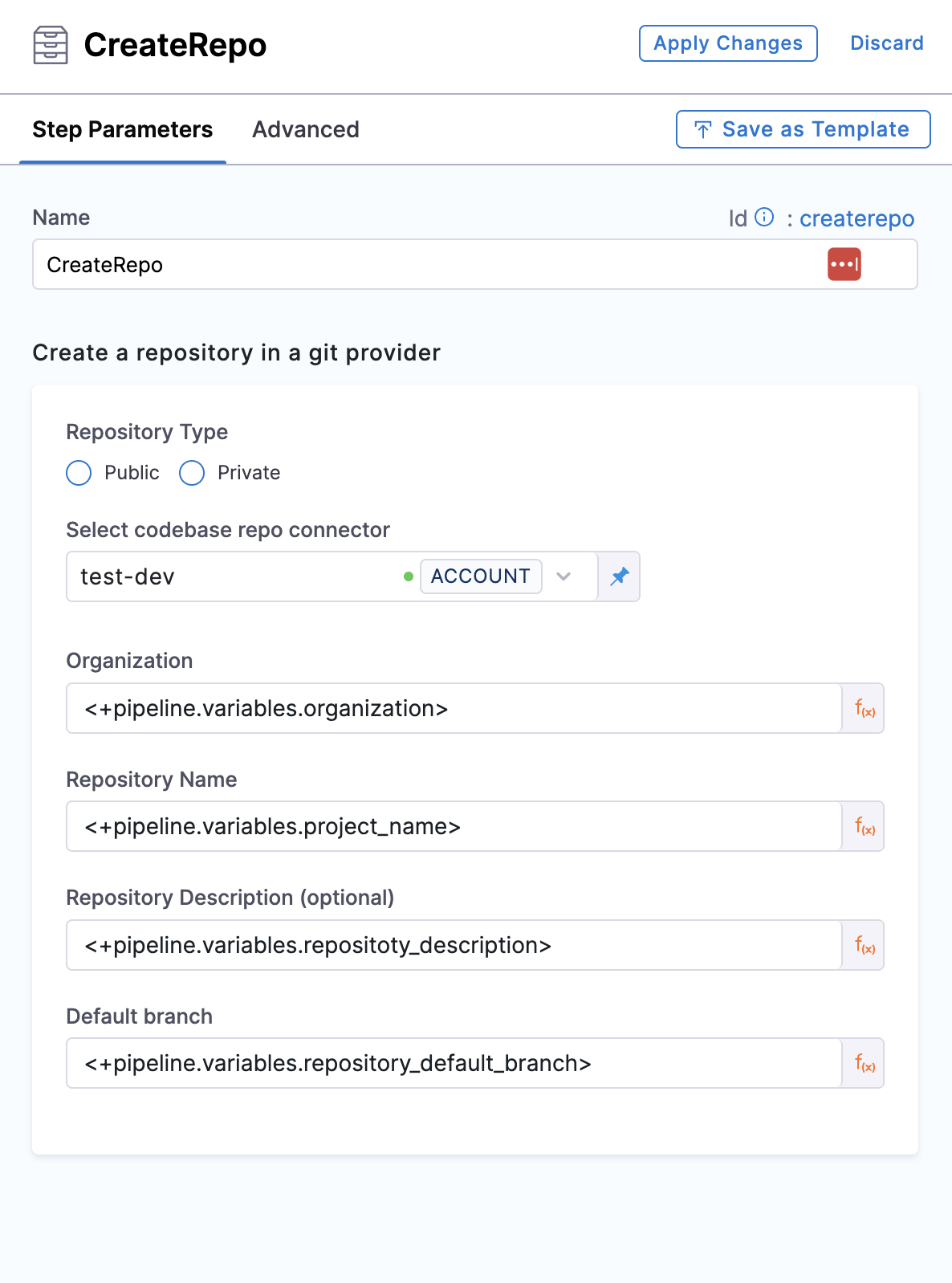

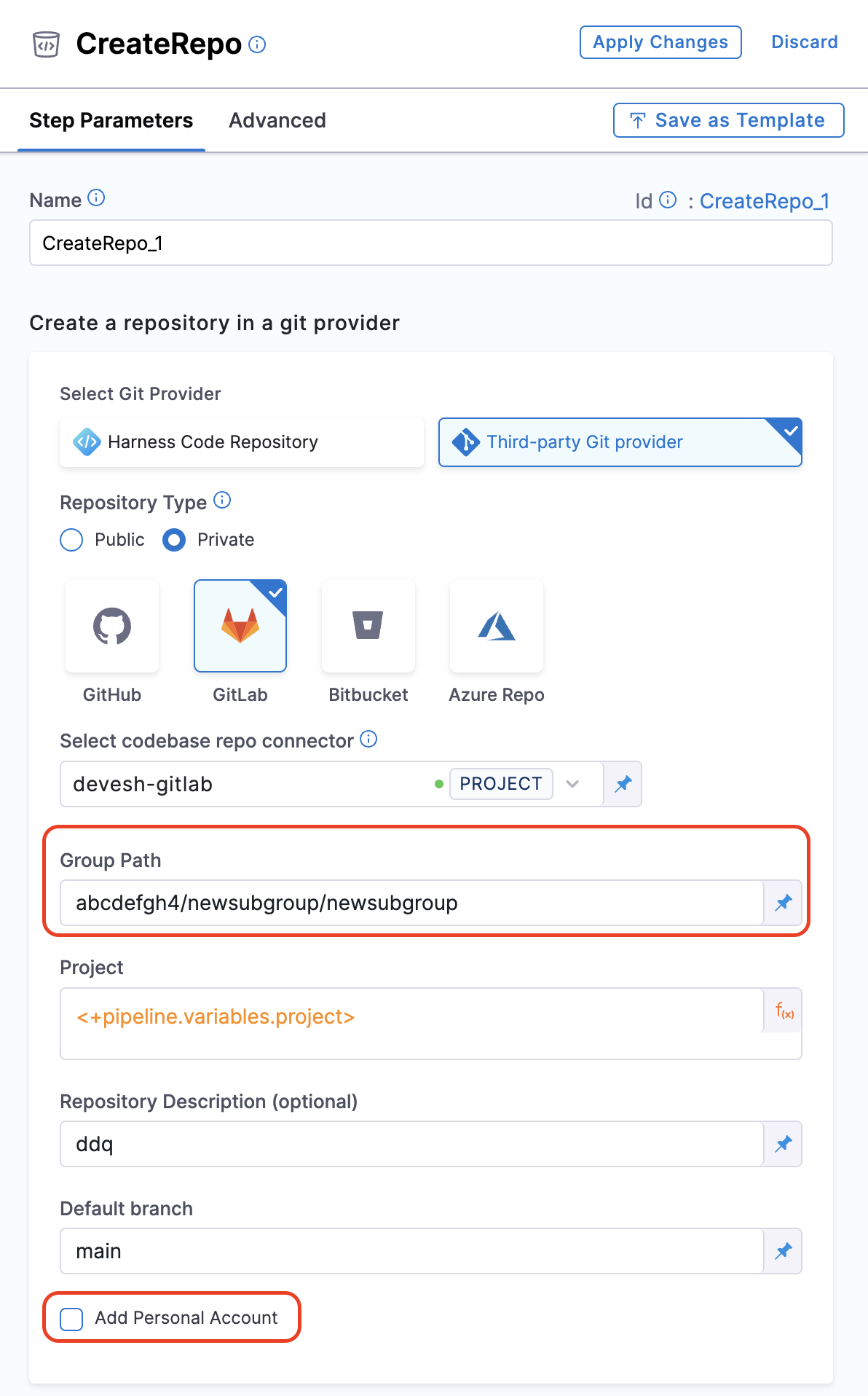

3. Create Repo

This step creates a repository in your Git provider. Later steps push the service/app generated by the Cookiecutter step, along with the catalog file created in the Create Catalog step, to this repository.

The example for this step uses pipeline variables as inputs for many fields. Make sure you have created the corresponding pipeline variables with the correct values, as described under Pipeline Variables.

The Git connector configured on the Connectors page in IDP Admin must have fetch access to the repository created in this step.


Repository Type

Select the repository type you want to create, which could be public or private.

Connector

- For authentication, the Username-Password and GitHub App methods are supported. The ssh connection type is currently not supported for connectors.

- You can use a GitHub App to authenticate the Harness GitHub connector. Refer to this detailed guide on how to use a GitHub App with a GitHub connector in the IDP stage.

- For this step, the Bitbucket connector supports only the API Token authentication mode, because of a known Bitbucket bug that prevents repository creation with access tokens. For more details, see the reference documentation for API Token mode.

Note: Ensure that you have admin permissions on a GitHub repository within your GitHub organization and that you can install GitHub Apps in that repository. This setup is supported only for organization accounts, not personal GitHub accounts.

Select a connector for the git provider that will host the code repository.

The following topics provide more information about creating code repo connectors:

- Azure Repos: Connect to Azure Repos

- Bitbucket: Bitbucket connector settings reference

- GitHub: GitHub connector settings reference

- GitLab: GitLab Connector Settings reference

Org, Repo, Description, Default Branch

For GitLab, also add the group path unless you are using a personal account.

Add the Org, Repo Name, Repo Description, and Default Branch for the repository you want to create.

```yaml
- step:
    type: CreateRepo
    name: CreateRepo
    identifier: createrepo
    spec:
      connectorRef: account.testdev
      organization: <+pipeline.variables.organization>
      repository: <+pipeline.variables.project_name>
      repoType: <+pipeline.variables.repository_type>
      description: <+pipeline.variables.repository_description>
      defaultBranch: <+pipeline.variables.repository_default_branch>
```

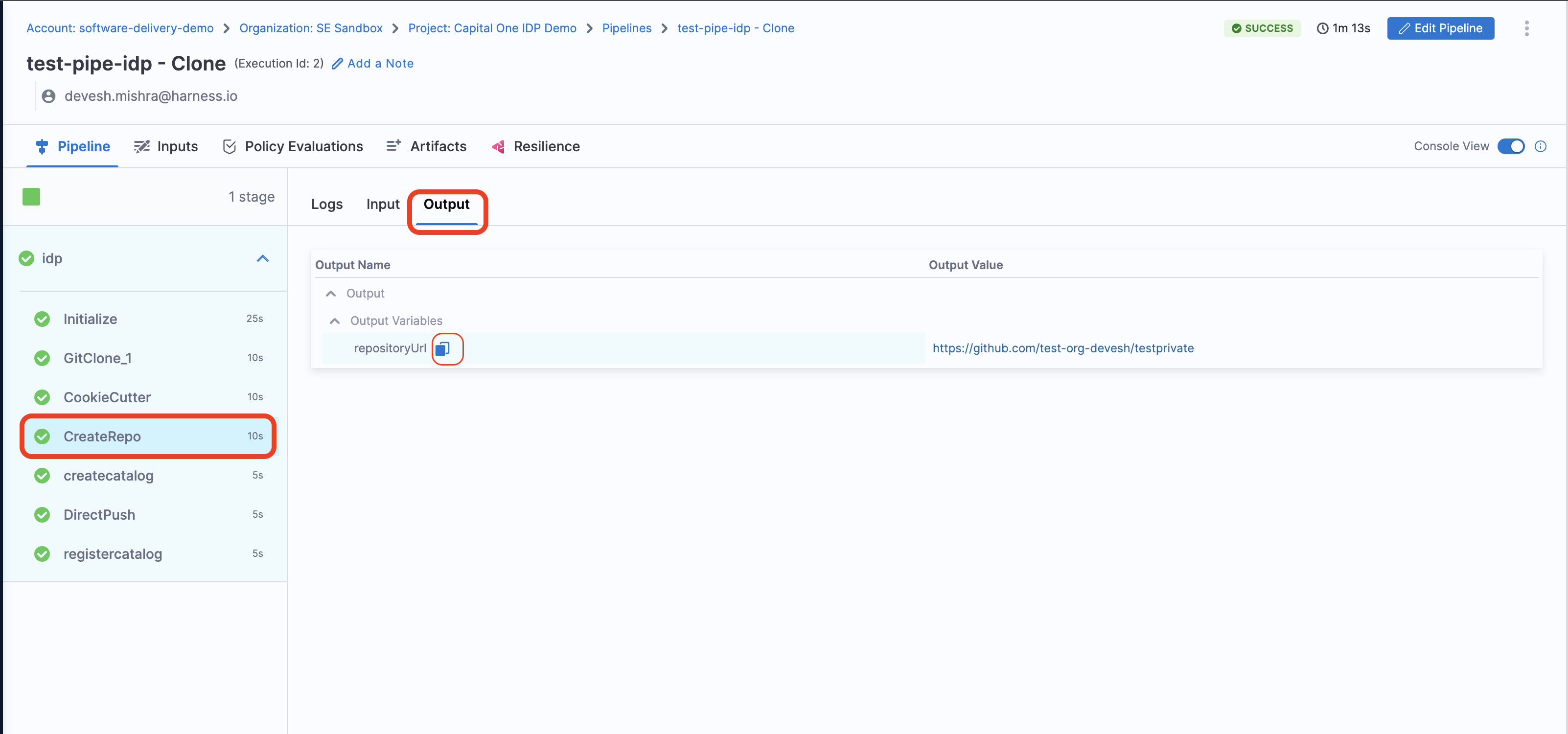

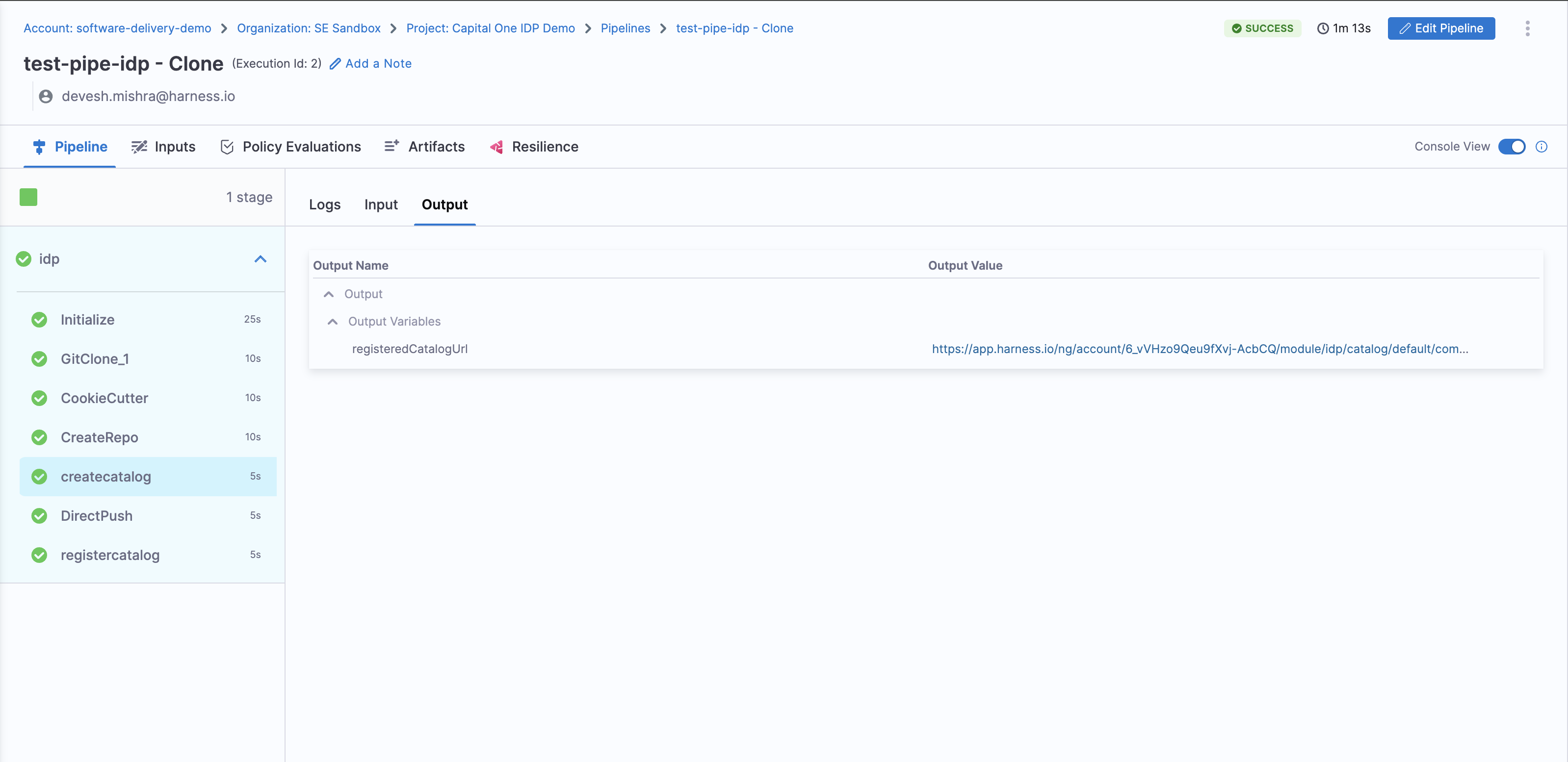

Output

This step produces the following output variable:

- repositoryUrl: The URL of the created repository, for example https://github.com/org-name/repo-name. Other steps in the pipeline can reference it with the JEXL expression <+pipeline.stages.idp.spec.execution.steps.createrepo.output.outputVariables.repositoryUrl>, where idp is the stage identifier.

You can view this output variable under the Output tab.

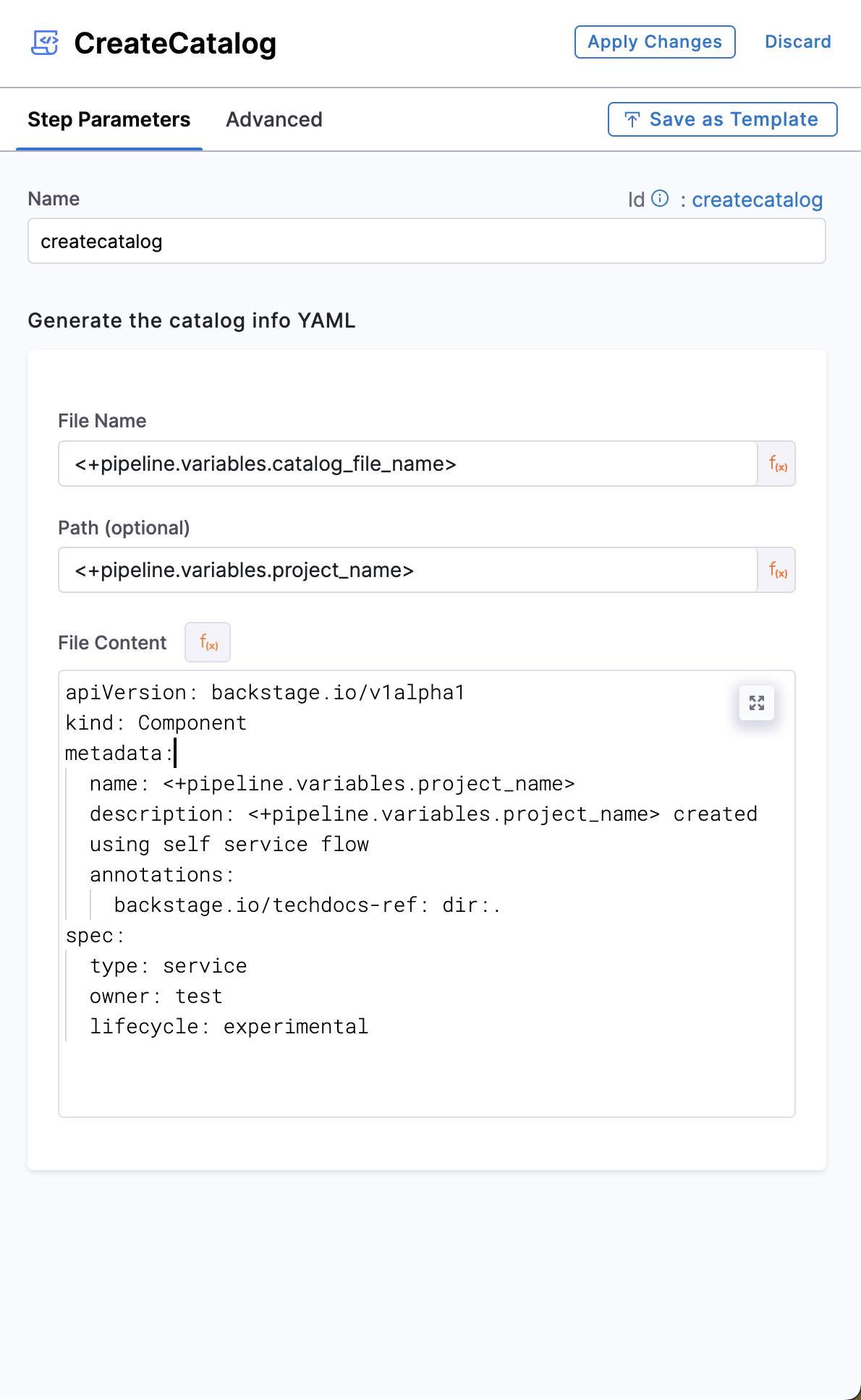
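All the step output variables in this guide follow the same JEXL path pattern, differing only in the stage identifier, step identifier, and variable name. A small hypothetical Python helper makes the pattern explicit; the stage identifier idp is the one used in the expressions in this guide, so replace it with your own stage's identifier.

```python
def step_output_expr(stage_id: str, step_id: str, variable: str) -> str:
    """Build the JEXL expression that references a step's output variable."""
    return (f"<+pipeline.stages.{stage_id}.spec.execution.steps."
            f"{step_id}.output.outputVariables.{variable}>")


# Output variables produced by the IDP stage steps described in this guide.
for step_id, variable in [("createrepo", "repositoryUrl"),
                          ("createcatalog", "registeredCatalogUrl"),
                          ("registercatalog", "catalogInfoUrl")]:
    print(step_output_expr("idp", step_id, variable))
```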

4. Create Catalog

This step creates the catalog-info.yaml (or idp.yaml) file used to register the software component created in the previous steps in your IDP catalog.

The example for this step uses pipeline variables as inputs for many fields. Make sure you have created the corresponding pipeline variables with the correct values, as described under Pipeline Variables.

For the entity to be registered in the catalog, the Git connector configured on the Connectors page in IDP Admin must have fetch access to the repository where catalog-info.yaml is published.


File Name, Path

Enter a name for the catalog-info.yaml file. If you don't want the file at the root of the repository created in the Create Repo step, also provide a path.

File Content

Add the YAML content for your catalog-info.yaml file, for example:

```yaml
apiVersion: backstage.io/v1alpha1
kind: Component
metadata:
  name: <+pipeline.variables.project_name>
  description: <+pipeline.variables.project_name> created using self service flow
  annotations:
    backstage.io/techdocs-ref: dir:.
spec:
  type: service
  owner: test
  lifecycle: experimental
```

Ensure your identifier follows the naming rules. Invalid identifiers may cause entity registration errors.

```yaml
- step:
    type: CreateCatalog
    name: createcatalog
    identifier: createcatalog
    spec:
      fileName: <+pipeline.variables.catalog_file_name>
      filePath: <+pipeline.variables.project_name>
      fileContent: |-
        apiVersion: backstage.io/v1alpha1
        kind: Component
        metadata:
          name: <+pipeline.variables.project_name>
          description: <+pipeline.variables.project_name> created using self service flow
          annotations:
            backstage.io/techdocs-ref: dir:.
        spec:
          type: service
          owner: test
          lifecycle: experimental
```
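In the catalog descriptor above, the entity identifier is metadata.name, which is resolved from <+pipeline.variables.project_name> at runtime, so a project name with spaces or other invalid characters only fails at registration time. The Python sketch below validates a candidate name against the Backstage rule for metadata.name (1 to 63 characters; alphanumeric sequences separated by single -, _ or . characters). This is an assumption: Harness IDP may apply additional or different identifier rules.

```python
import re

# Backstage metadata.name rule: sequences of [a-zA-Z0-9], optionally separated
# by a single '-', '_' or '.' character.
NAME_RE = re.compile(r"[a-zA-Z0-9]+(?:[-_.][a-zA-Z0-9]+)*")


def is_valid_entity_name(name: str) -> bool:
    """Check length (1-63) and allowed characters of a candidate entity name."""
    return 1 <= len(name) <= 63 and NAME_RE.fullmatch(name) is not None


for candidate in ["my-react-app", "my react app", "-app"]:
    print(candidate, is_valid_entity_name(candidate))
```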

Output

This step produces the following output variable:

- registeredCatalogUrl: The URL of the software component registered in the IDP catalog, for example https://app.harness.io/ng/account/**************/module/idp/catalog/default/component/component-name. Other steps in the pipeline can reference it with the JEXL expression <+pipeline.stages.idp.spec.execution.steps.createcatalog.output.outputVariables.registeredCatalogUrl>.

You can view this output variable under the Output tab.

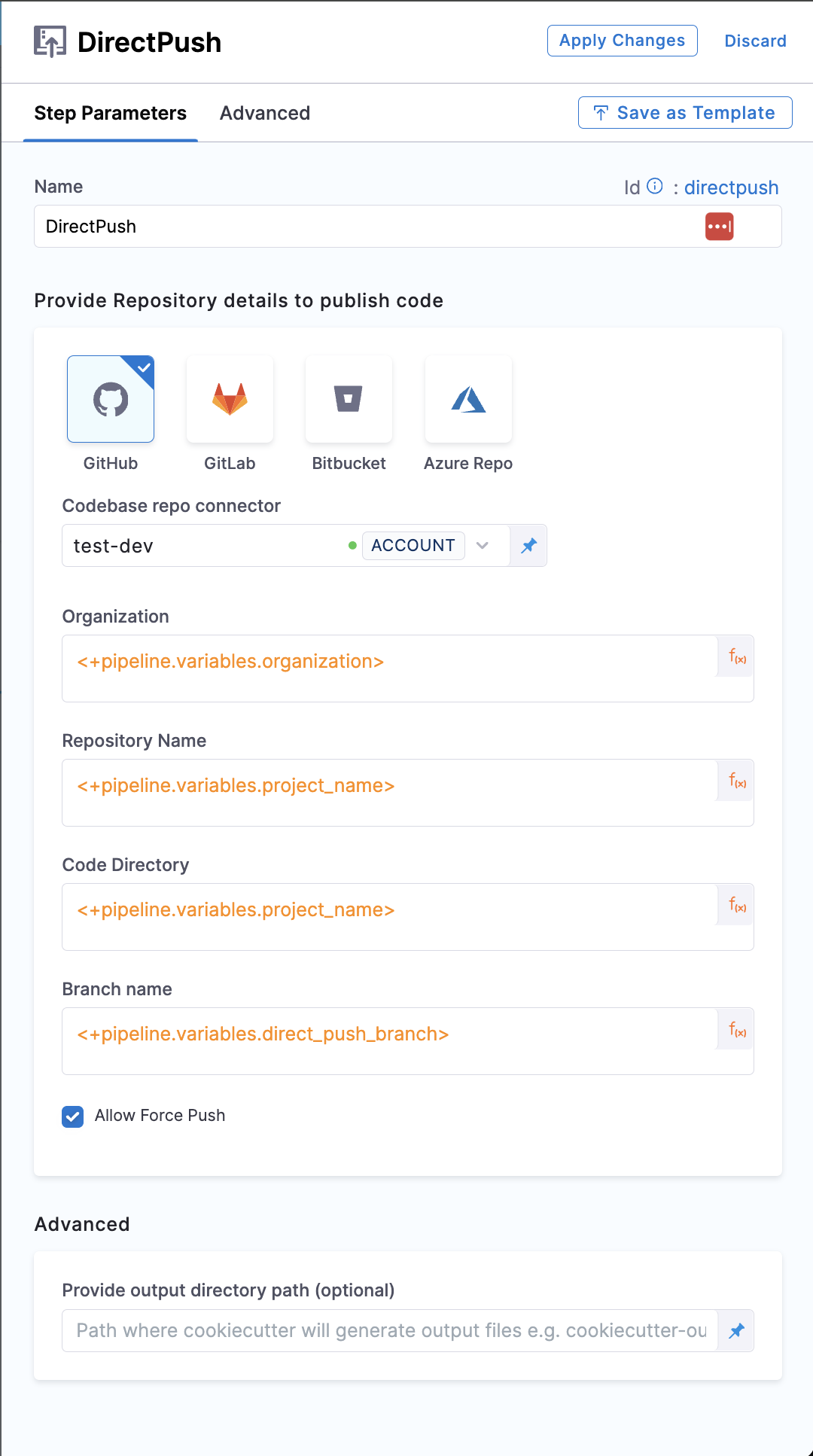

5. Direct Push

This step pushes the service/application generated by the Cookiecutter step, along with catalog-info.yaml, to the repository created in the Create Repo step.

You can only push the service/application created above to repositories within the same project where the Direct Push step is being executed.

In the example provided for this step, we have used pipeline variables as input for multiple fields. Ensure that the corresponding pipeline variables are created with the appropriate values, as described in the Pipeline Variables documentation.


Connector

- For authentication, the Username-Password and GitHub App methods are supported. The ssh connection type is currently not supported for connectors.

- You can use a GitHub App to authenticate the Harness GitHub connector. Refer to this detailed guide on how to use a GitHub App with a GitHub connector in the IDP stage.

- The Bitbucket connector supports both Access Token and API Token authentication modes. For more details, see the reference documentation on Access Token mode and API Token mode.

Note: Ensure that you have admin permissions on a GitHub repository within your GitHub organization and that you can install GitHub Apps in that repository. This setup is supported only for organization accounts, not personal GitHub accounts.

Select a connector for the git provider where you want to push the code.

The following topics provide more information about creating code repo connectors:

- Azure Repos: Connect to Azure Repos

- Bitbucket: Bitbucket connector settings reference

- GitHub: GitHub connector settings reference

- GitLab: GitLab Connector Settings reference

Org, Repo, Code Directory, Branch

For GitLab, also add the group path. If you are using a personal account, add the account ID to the path.

Add the Org, Repo Name, Code Directory, and Branch Name where you want to push the code.

Allow Force Push

When enabled (set to true), this option lets the step overwrite changes on the default branch set in the Create Repo step.

```yaml
- step:
    type: DirectPush
    name: DirectPush
    identifier: directpush
    spec:
      connectorType: Github
      forcePush: true
      connectorRef: account.testdev
      organization: <+pipeline.variables.organization>
      repository: <+pipeline.variables.project_name>
      codeDirectory: <+pipeline.variables.project_name>
      branch: <+pipeline.variables.direct_push_branch>
```

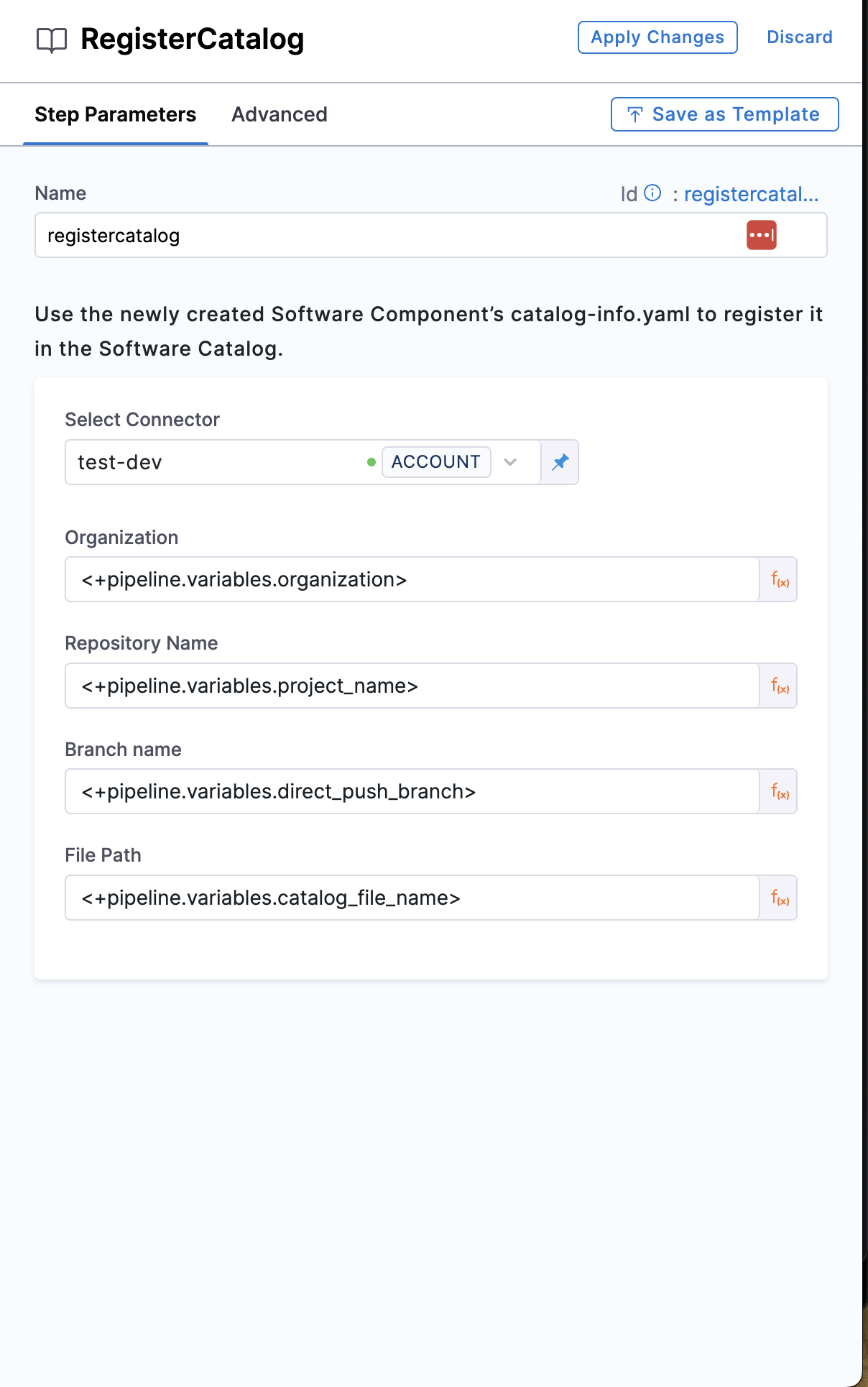

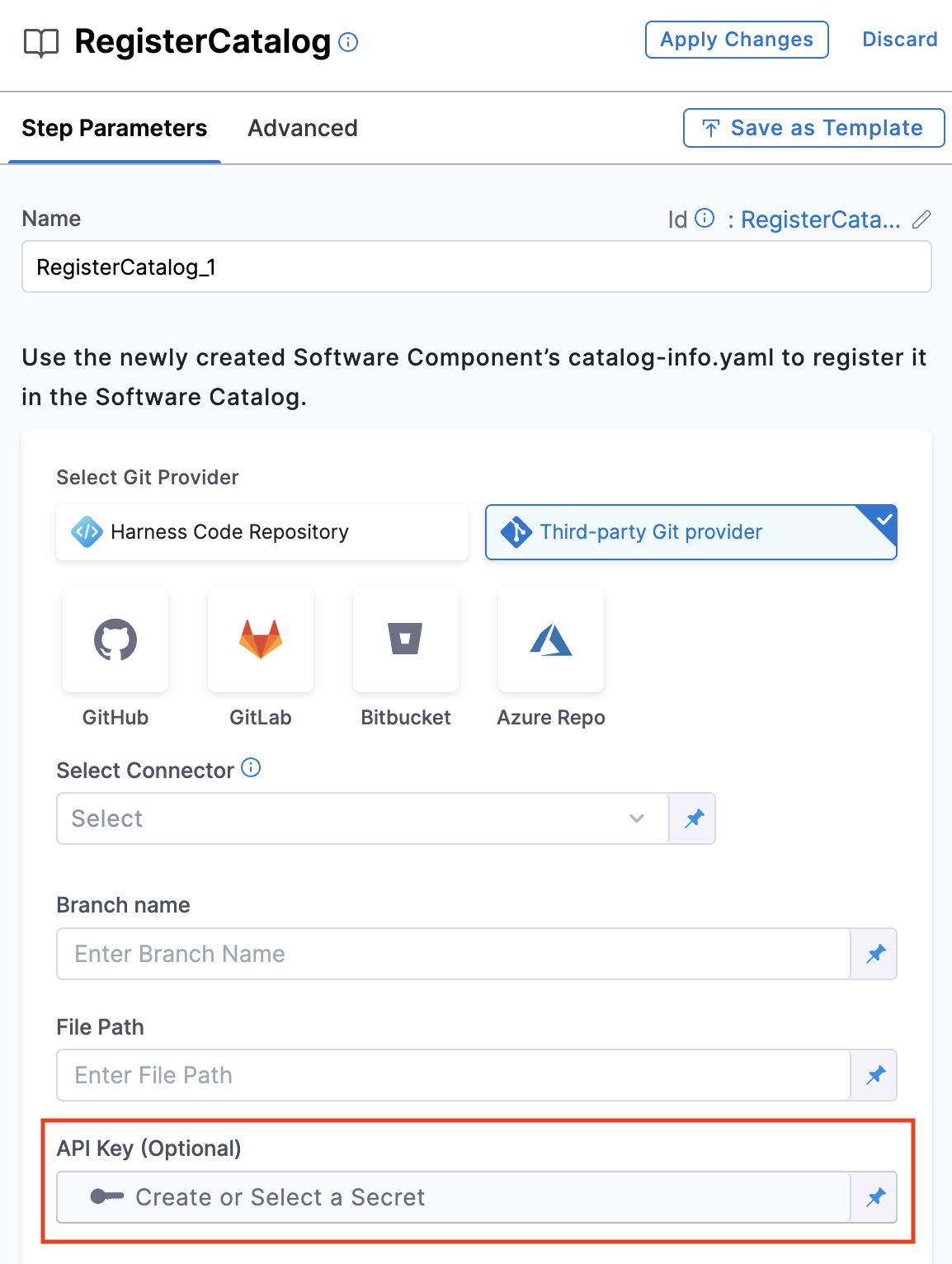

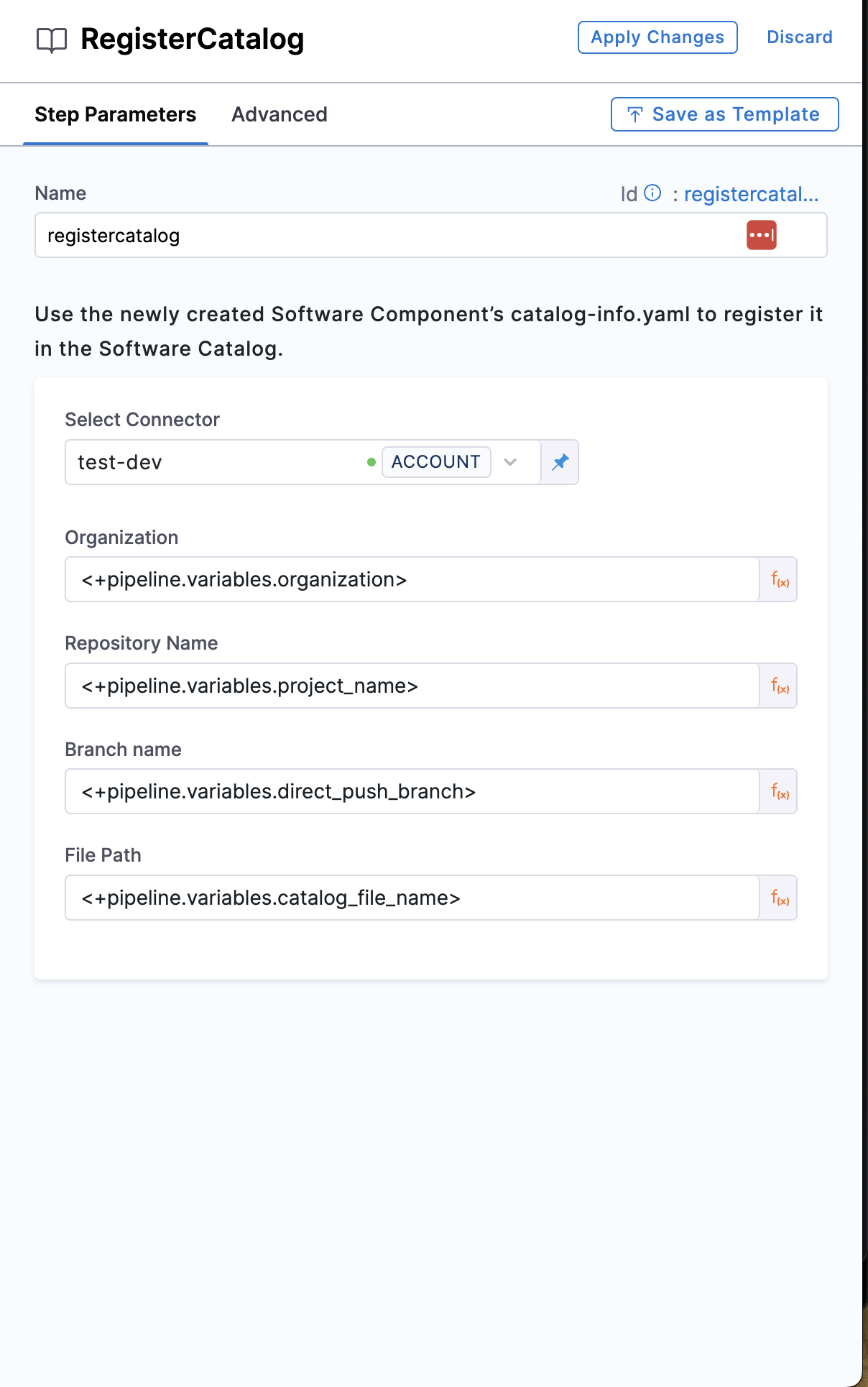

6. Register Catalog

This step registers the software component in the Harness IDP Catalog using catalog-info.yaml.

The example for this step uses pipeline variables as inputs for many fields. Make sure you have created the corresponding pipeline variables with the correct values, as described under Pipeline Variables.


Connector

- For authentication, the Username-Password and GitHub App methods are supported. The ssh connection type is currently not supported for connectors.

- You can use a GitHub App to authenticate the Harness GitHub connector. Refer to this detailed guide on how to use a GitHub App with a GitHub connector in the IDP stage.

- The Bitbucket connector supports both Access Token and API Token authentication modes. For more details, see the reference documentation on Access Token mode and API Token mode.

Note: Ensure that you have admin permissions on a GitHub repository within your GitHub organization and that you can install GitHub Apps in that repository. This setup is supported only for organization accounts, not personal GitHub accounts.

Select a connector for the git provider where your catalog-info.yaml is stored.

The following topics provide more information about creating code repo connectors:

- Azure Repos: Connect to Azure Repos

- Bitbucket: Bitbucket connector settings reference

- GitHub: GitHub connector settings reference

- GitLab: GitLab Connector Settings reference

Org, Repo, Branch, File Path

For GitLab, also add the group path. If you are using a personal account, add the account ID to the path.

Add the Org, Repo Name, Branch, and the File Path of catalog-info.yaml relative to the repository root.

```yaml
- step:
    type: RegisterCatalog
    name: registercatalog
    identifier: registercatalog
    spec:
      connectorRef: account.testdev
      repository: <+pipeline.variables.project_name>
      organization: <+pipeline.variables.organization>
      filePath: <+pipeline.variables.catalog_file_name>
      branch: <+pipeline.variables.direct_push_branch>
```

API Key Support

Harness IDP supports using a Harness API Key in the Register Catalog step.

To configure it, select the "API Token" field in the Harness UI. This option is available under Advanced Settings in the Pipelines tab. When it is set, the API Key is used for catalog registration in IDP.

With an API Key configured, catalog registration works correctly even when the pipeline is triggered from another pipeline or by a trigger.

Configuring an API Key is optional. You can run your pipeline without one, in which case the context of the user running the pipeline is used for catalog registration.

Output Variable

This step produces the following output variable:

- catalogInfoUrl: The URL of the catalog-info.yaml file stored in the repository created in the Create Repo step, for example https://github.com/org-name/repo-name/blob/code/catalog-info.yaml. Other steps in the pipeline can reference it with the JEXL expression <+pipeline.stages.idp.spec.execution.steps.registercatalog.output.outputVariables.catalogInfoUrl>.

You can view this output variable under the Output tab.

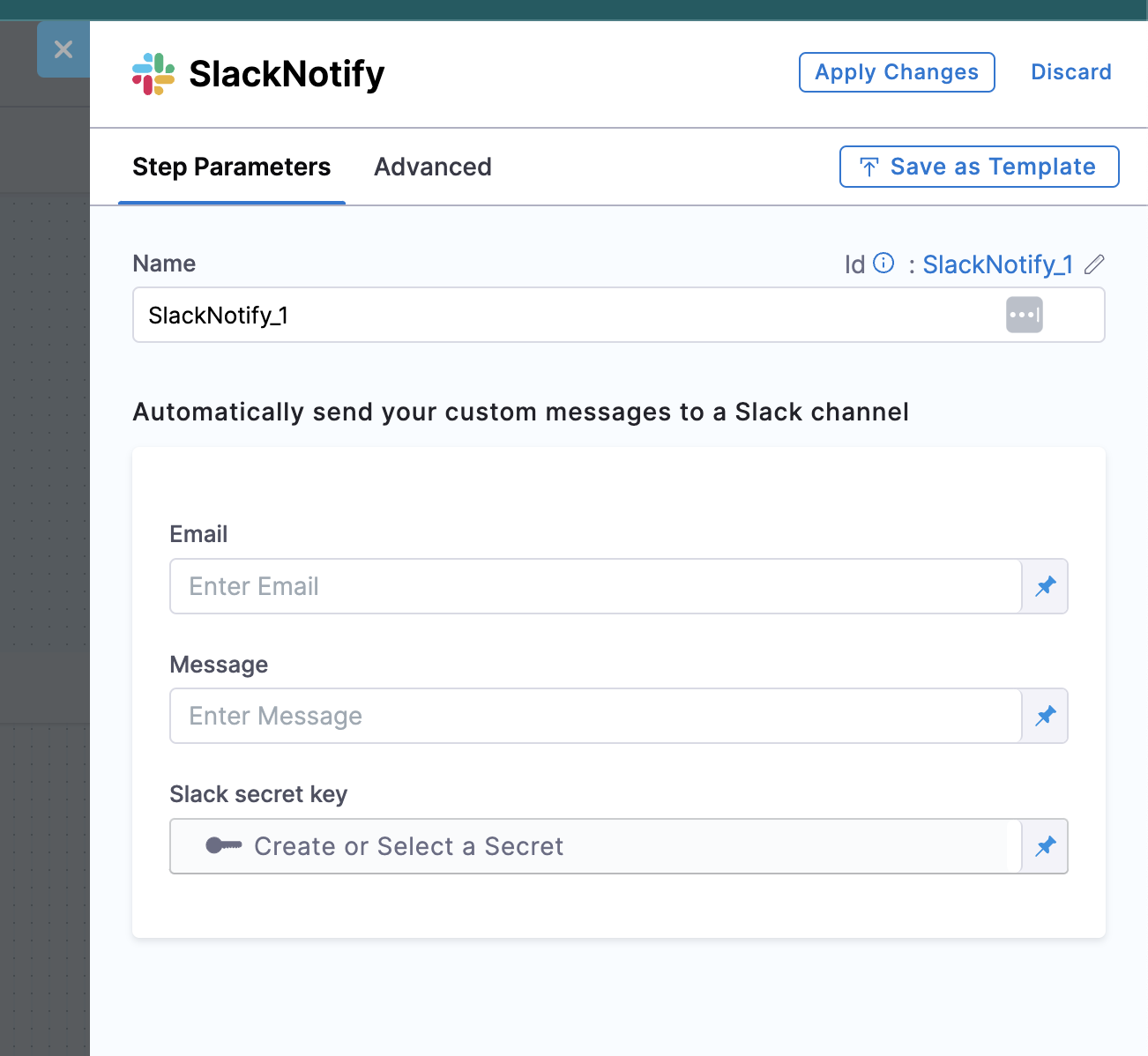

7. Slack Notify

This step notifies individual developers after the pipeline runs successfully and the software component is registered in your Software Catalog.

The example for this step uses pipeline variables as inputs for many fields. Make sure you have created the corresponding pipeline variables with the correct values, as described under Pipeline Variables.


```yaml
- step:
    type: SlackNotify
    name: slacknotify
    identifier: slacknotify
    spec:
      emailId: <+pipeline.variables.email_id>
      messageContent: " Hello <+pipeline.variables.project_name> project is created using flows in Harness IDP,\\n*Created Catalog Yaml -* <<+pipeline.stages.serviceonboarding.spec.execution.steps.registercatalog.output.outputVariables.catalogInfoUrl>|Link>\\n*Created Repository -* <<+pipeline.stages.serviceonboarding.spec.execution.steps.createrepo.output.outputVariables.repositoryUrl>|Link>\\n*Registered Catalog -* <<+pipeline.stages.serviceonboarding.spec.execution.steps.createcatalog.output.outputVariables.registeredCatalogUrl>|Link>"
      token: slacksecrettestws
```

The messageContent is built from the JEXL output expressions of the Create Repo, Create Catalog, and Register Catalog steps.

The token references the secret that holds the Slack bot token.

- Add the Name of the Step


Slack Email ID

Use the email address you registered with Slack.

Slack secret key

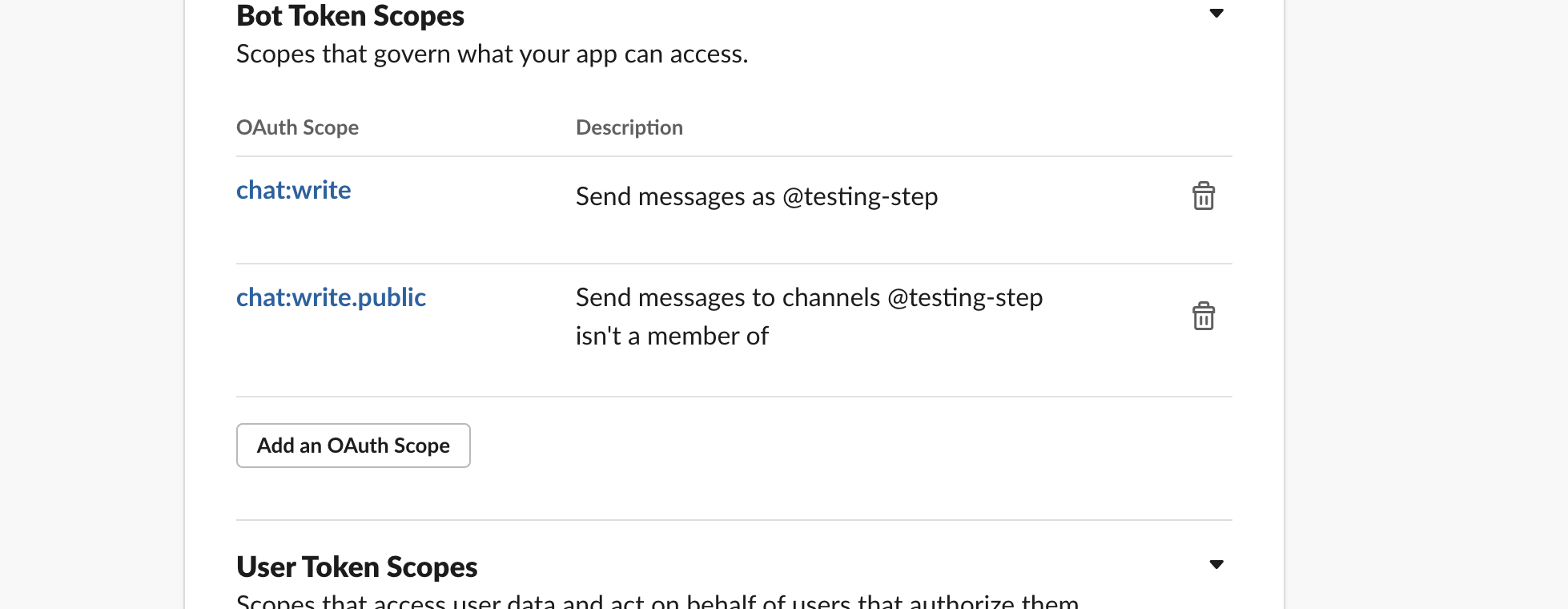

The Slack Secret Key is a bot token created with the following permissions:

- chat:write

- chat:write.public

- users:read.email

- users:read

Read more on how to create bot tokens.

- Create a new secret containing the bot token, and select it as the Slack Secret Key.

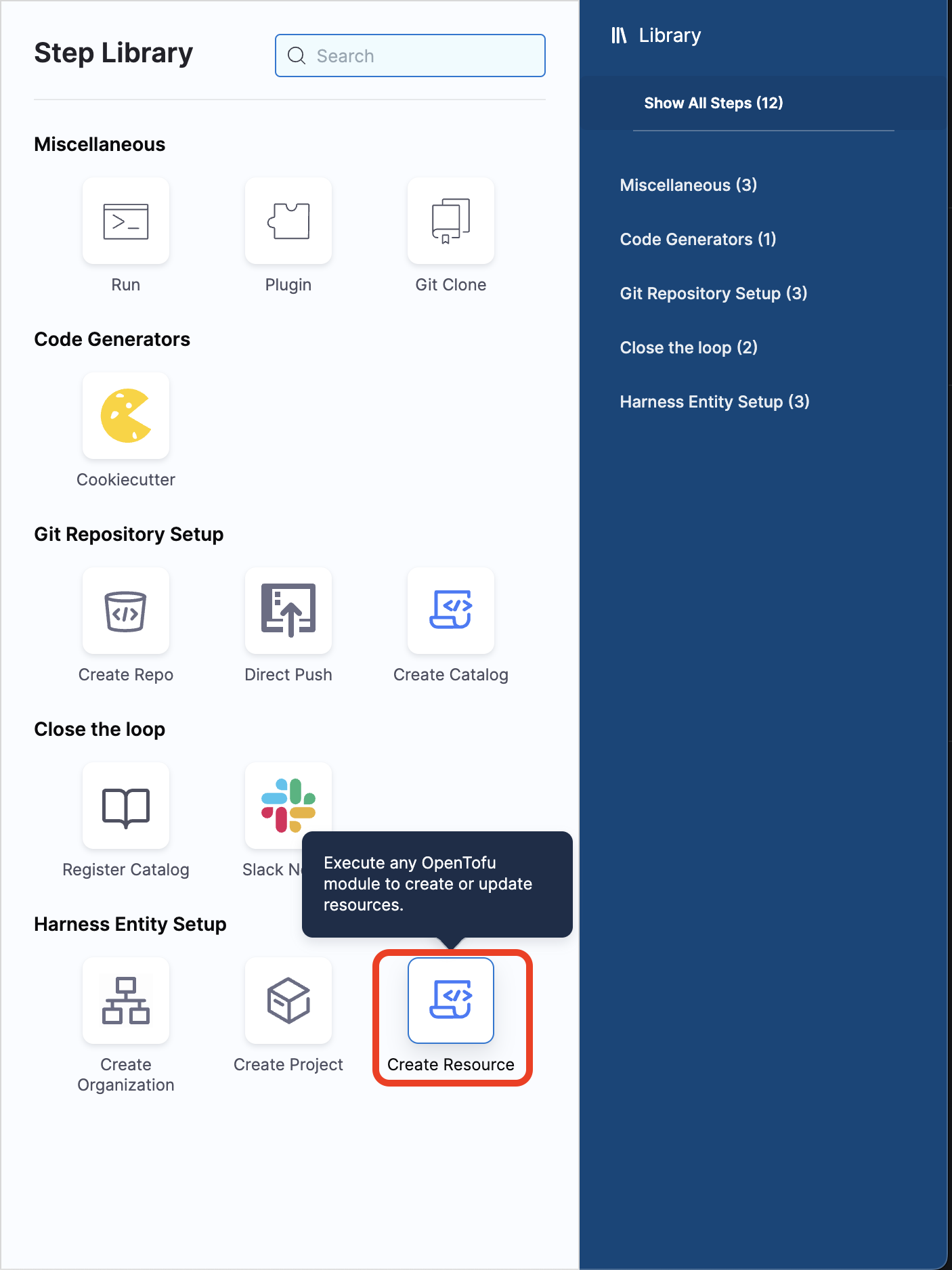

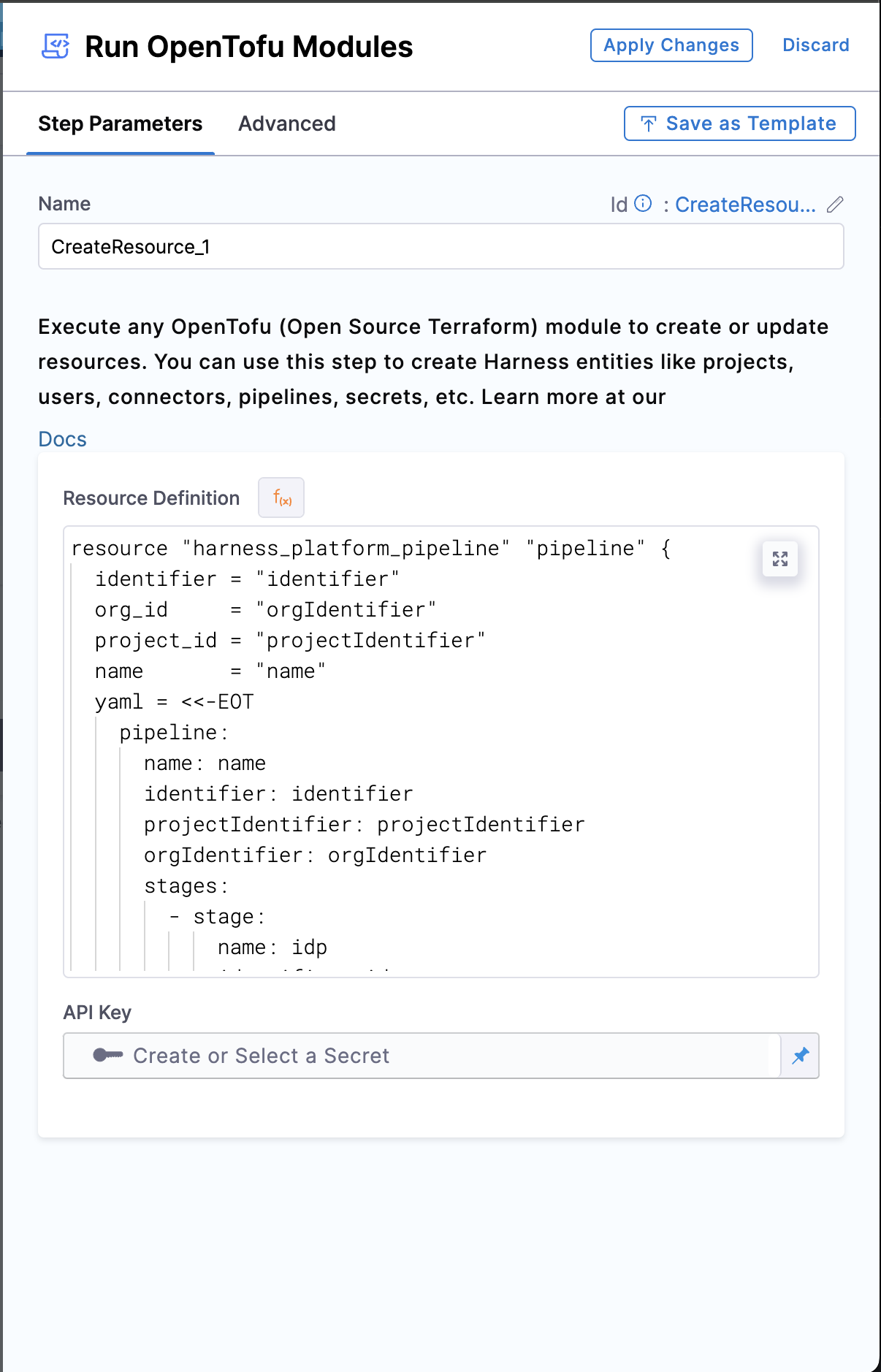
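The messageContent in the YAML example combines Slack's mrkdwn link syntax, <url|text>, with JEXL output expressions that Harness resolves at runtime. The following hypothetical Python sketch builds a message of the same shape from already-resolved URLs, which can help when previewing the formatting; the helper names and placeholder URLs are illustrative only and are not part of Harness or any Slack SDK.

```python
def slack_link(url: str, text: str) -> str:
    """Format a hyperlink in Slack mrkdwn syntax: <url|text>."""
    return f"<{url}|{text}>"


def build_message(project_name: str, links: dict) -> str:
    """Build a message shaped like the messageContent example: a greeting,
    then one '*label -* <url|Link>' line per step output."""
    lines = [f"Hello {project_name} project is created using flows in Harness IDP,"]
    for label, url in links.items():
        lines.append(f"*{label} -* {slack_link(url, 'Link')}")
    return "\n".join(lines)


# Placeholder URLs stand in for the resolved JEXL output expressions.
print(build_message("demo", {
    "Created Catalog Yaml": "https://github.com/org-name/repo-name/blob/code/catalog-info.yaml",
    "Created Repository": "https://github.com/org-name/repo-name",
}))
```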

8. Create Resource

In the Developer Portal stage, this step runs OpenTofu (an open-source fork of Terraform) code to create or update resources. Only resources from the Harness Terraform Provider are supported, so you can use this step to create Harness entities such as projects, users, connectors, pipelines, and secrets.


```yaml
- step:
    type: CreateResource
    name: CreateResource_1
    identifier: CreateResource_1
    spec:
      resourceDefinition: |-
        resource "harness_platform_pipeline" "pipeline" {
          identifier = "identifier"
          org_id     = "orgIdentifier"
          project_id = "projectIdentifier"
          name       = "name"
          yaml       = <<-EOT
            pipeline:
              name: name
              identifier: identifier
              projectIdentifier: projectIdentifier
              orgIdentifier: orgIdentifier
              stages:
                - stage:
                    name: idp
                    identifier: idp
                    description: ""
                    type: IDP
                    spec:
                      platform:
                        os: Linux
                        arch: Amd64
                      runtime:
                        type: Cloud
                        spec: {}
                      execution:
                        steps:
                          - step:
                              type: Run
                              name: Run_1
                              identifier: Run_1
                              description: <+input>
                              spec:
                                shell: Sh
                                command: echo "Hello"
              tags: {}
          EOT
        }
      xApiKey: Harness PAT for the account you want to create the pipeline
```

This step comes with a sample Resource Definition that creates a Harness Pipeline with a Run step. The sample contains dummy values and won't work as-is, so replace it with your own resource definition. Refer to the Harness Terraform Provider documentation for help with Harness resource definitions.

The xApiKey is the Harness PAT for the account where you want to create the pipeline.

- You can select the step Create Resource from the Step Library.

- Add a Name for the step, then add the Resource Definition to create a Harness entity using the resources supported by the Harness Terraform Provider. By default, the field contains the sample Resource Definition, which creates a Harness Pipeline with a Run step.

9. Update Catalog Property

This step updates the catalog metadata of your entities. For example, you can use it in your CI pipeline to record the latest build version of your service in the catalog.

- You can select the step Update Catalog Property from the Step Library.

- Under Select the Catalog entity and the properties to be updated, choose one of two options:

  - Update single Catalog Entity

  - Update multiple catalog Entities

- Permission: To update a catalog property, you need the edit catalog permission.

Update a single Catalog Entity

- Select the Catalog Entity from the dropdown. You need to type at least the first three characters in the field for the entity options to appear.

- Add the property you want to update or add, and its value, for example <+metadata.testCoverage> or <+metadata.additionalInfo.onShorelead>.

- The API Key field is optional. If left empty, the credentials of the user executing the pipeline are used to update the catalog entity.

Update Multiple Catalog Entities

- Add the property to update across the entities, followed by each entity-ref and its corresponding value.

- To apply a default value across all the entities, set the Default Value field.

- The API Key field is optional. If left empty, the credentials of the user executing the pipeline are used to update the catalog entities.

- Under Advanced, you can select the mode. Available modes:

  - replace (default): Completely replaces the existing value with the new one provided in the value field.

  - append: Adds the new values to the existing value. Use it for arrays and other collection types, such as maps (key-value pairs).

Note: append only works with data types that can hold multiple values, such as arrays or maps. It does not apply to simple data types like strings.
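The difference between the replace and append modes can be illustrated on a plain dictionary. The following Python sketch is an assumption-based model of the documented behavior, not the Harness implementation: the dotted path mirrors a property such as <+metadata.testCoverage> without the JEXL constructs, append extends arrays or merges maps, and appending to a string raises an error. Treating an append to a missing property as a plain set is also an assumption of this sketch.

```python
import copy
from typing import Any


def update_property(entity: dict, path: str, value: Any, mode: str = "replace") -> dict:
    """Return a copy of `entity` with the dotted property `path` updated."""
    if mode not in ("replace", "append"):
        raise ValueError(f"unknown mode: {mode}")
    updated = copy.deepcopy(entity)  # never mutate the caller's entity
    *parents, leaf = path.split(".")
    node = updated
    for key in parents:  # walk (and create) intermediate mappings
        node = node.setdefault(key, {})
    if mode == "replace" or leaf not in node:
        # replace mode, or nothing to append to yet (an assumption of this sketch)
        node[leaf] = value
    elif isinstance(node[leaf], list):
        node[leaf].extend(value if isinstance(value, list) else [value])
    elif isinstance(node[leaf], dict) and isinstance(value, dict):
        node[leaf].update(value)  # merge key-value pairs
    else:
        raise TypeError(f"append is not supported for {type(node[leaf]).__name__}")
    return updated


entity = {"metadata": {"name": "my-service", "tags": ["java"], "testCoverage": "70%"}}
print(update_property(entity, "metadata.tags", ["backend"], mode="append")["metadata"]["tags"])
# ['java', 'backend']
print(update_property(entity, "metadata.testCoverage", "85%")["metadata"]["testCoverage"])
# 85%
```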

10. Run Step

You can use the Run step to to run commands or scripts in your Harness Pipeline.

In order for the Run step to execute your commands, the build environment must have the necessary binaries for those commands. Depending on the stage's build infrastructure, Run steps can use binaries that exist in the build environment or pull an image, such as a public or private Docker image, that contains the required binaries.

For detailed steps and settings, go to Run Step Settings.
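Because a Run step only succeeds if the commands it calls exist in the build environment, a script can check for them up front. A minimal POSIX shell sketch; `require_bins` is a hypothetical helper, and `git` and `curl` are only example dependencies.

```shell
#!/bin/sh
# Hypothetical helper for a Run step script: report, with a clear message,
# any binary the script depends on that is missing from the build environment.
require_bins() {
  missing=""
  for bin in "$@"; do
    # command -v succeeds for executables on PATH and for shell builtins
    command -v "$bin" >/dev/null 2>&1 || missing="$missing $bin"
  done
  if [ -n "$missing" ]; then
    printf 'missing required binaries:%s\n' "$missing" >&2
    return 1
  fi
}

# Example: run the git/curl commands only when both binaries are present.
if require_bins git curl; then
  git --version
  curl --version | head -n 1
fi
```

In an actual Run step you would more likely write `require_bins git curl || exit 1`, so the step fails visibly instead of silently skipping work.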

11. Plugin Step

You can use the Plugin step to run different plugins in your Harness Pipeline.

For detailed steps and settings, go to Plugin Step Settings.

Example Pipeline

```yaml
pipeline:
  name: Self-Service-flow-repo-setup
  identifier: SelfServiceflowreposetup
  projectIdentifier: projctidp
  orgIdentifier: default
  tags: {}
  stages:
    - stage:
        name: service-onboarding
        identifier: serviceonboarding
        description: ""
        type: IDP
        spec:
          infrastructure:
            type: KubernetesDirect
            spec:
              connectorRef: idptest
              namespace: harness-delegate
              automountServiceAccountToken: true
              nodeSelector: {}
              os: Linux
          execution:
            steps:
              - step:
                  type: CookieCutter
                  name: CookieCutter
                  identifier: idpcookiecutter
                  spec:
                    templateType: <+pipeline.variables.template_type>
                    publicTemplateUrl: <+pipeline.variables.public_template_url>
                    cookieCutterVariables:
                      app_name: <+pipeline.variables.project_name>
              - step:
                  type: CreateRepo
                  name: CreateRepo
                  identifier: createrepo
                  spec:
                    connectorRef: account.testdev
                    organization: <+pipeline.variables.organization>
                    repository: <+pipeline.variables.project_name>
                    repoType: <+pipeline.variables.repository_type>
                    description: <+pipeline.variables.repository_description>
                    defaultBranch: <+pipeline.variables.repository_default_branch>
              - step:
                  type: CreateCatalog
                  name: createcatalog
                  identifier: createcatalog
                  spec:
                    fileName: <+pipeline.variables.catalog_file_name>
                    filePath: <+pipeline.variables.project_name>
                    fileContent: |-
                      apiVersion: backstage.io/v1alpha1
                      kind: Component
                      metadata:
                        name: <+pipeline.variables.project_name>
                        description: <+pipeline.variables.project_name> created using self service flow
                        annotations:
                          backstage.io/techdocs-ref: dir:.
                      spec:
                        type: service
                        owner: test
                        lifecycle: experimental
              - step:
                  type: DirectPush
                  name: DirectPush
                  identifier: directpush
                  spec:
                    connectorRef: account.testdev
                    repository: <+pipeline.variables.project_name>
                    organization: <+pipeline.variables.organization>
                    codeDirectory: <+pipeline.variables.project_name>
                    branch: <+pipeline.variables.direct_push_branch>
              - step:
                  type: RegisterCatalog
                  name: registercatalog
                  identifier: registercatalog
                  spec:
                    connectorRef: account.testdev
                    repository: <+pipeline.variables.project_name>
                    organization: <+pipeline.variables.organization>
                    filePath: <+pipeline.variables.catalog_file_name>
                    branch: <+pipeline.variables.direct_push_branch>
              - step:
                  type: SlackNotify
                  name: slacknotify
                  identifier: slacknotify
                  spec:
                    email: <+pipeline.variables.email_id>
                    messageContent: " Hello <@<+pipeline.variables.email_id>>, <+pipeline.variables.project_name> project is created using flows in Harness IDP,\\n*Created Catalog Yaml -* <<+pipeline.stages.serviceonboarding.spec.execution.steps.registercatalog.output.outputVariables.catalogInfoUrl>|Link>\\n*Created Repository -* <<+pipeline.stages.serviceonboarding.spec.execution.steps.createrepo.output.outputVariables.repositoryUrl>|Link>\\n*Registered Catalog -* <<+pipeline.stages.serviceonboarding.spec.execution.steps.createcatalog.output.outputVariables.registeredCatalogUrl>|Link>"
                    token: slacksecrettestws
          cloneCodebase: false
          caching:
            enabled: false
            paths: []
  variables:
    - name: test_content
      type: String
      description: ""
      required: false
      value: devesh
    - name: project_name
      type: String
      description: ""
      required: false
      value: <+input>
    - name: organization
      type: String
      description: ""
      required: false
      value: test-org-devesh
    - name: template_type
      type: String
      description: ""
      required: false
      value: <+input>.default(public).allowedValues(public,private)
    - name: public_template_url
      type: String
      description: ""
      required: false
      value: <+input>
    - name: repository_type
      type: String
      description: ""
      required: false
      value: <+input>.default(private).allowedValues(private,public)
    # Name must match <+pipeline.variables.repository_description> in the CreateRepo step
    - name: repository_description
      type: String
      description: ""
      required: false
      value: <+input>
    - name: repository_default_branch
      type: String
      description: ""
      required: false
      value: <+input>
    - name: direct_push_branch
      type: String
      description: ""
      required: false
      value: <+input>
    - name: catalog_file_name
      type: String
      description: ""
      required: false
      value: catalog-info.yaml
    - name: email_id
      type: String
      description: ""
      required: false
      value: <+input>
```
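Variables set to `<+input>` are runtime inputs: their values have to be supplied when the pipeline runs, for example by the workflow that triggers it. The sketch below lists them from a pipeline YAML file laid out like the example above. It is a line-based awk heuristic, not a YAML parser, and the `runtime_inputs` name and `pipeline.yaml` path are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the names of pipeline variables whose value is a
# runtime input (<+input>, with or without .default()/.allowedValues()).
# Heuristic: relies on "- name:" being followed later by "value:", as in the
# example pipeline; it does not parse YAML.
runtime_inputs() {
  awk '
    /^[ \t]*- name:/ { name = $3 }
    /^[ \t]*value:/ && index($0, "<+input>") > 0 { print name }
  ' "$1"
}

# Usage (assuming the pipeline above is saved as pipeline.yaml):
# runtime_inputs pipeline.yaml
```

Each name printed corresponds to a value the caller has to provide; variables with fixed values, such as `catalog_file_name`, are skipped.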

Specify the Harness IDP Images used in your Pipeline

You can use the Harness IDP execution-config API to specify or update the Harness IDP images used in your infrastructure by specifying image tags.

Certain steps, such as the Run step, are common across different stages in a Harness pipeline, but the image each one uses is specific to the stage it belongs to.

Here's a list of Harness IDP images used in the IDP stage:

- cookieCutter: Used to take inputs for the Cookiecutter template.

- createRepo: Used to create the repository in your Git provider.

- directPush: Used to push the service/application created using the Cookiecutter step, along with the catalog-info.yaml, to the repository you created in the previous step.

- registerCatalog: Used to register the software component created in the Harness IDP Catalog.

- slackNotify: Used to notify individual developers once the pipeline executes successfully and your software component is registered in your Software Catalog.

- createResource: Used to create Harness entities like projects, users, connectors, pipelines, secrets, etc.

- updateCatalogProperty: Used to update the catalog metadata for your entities.

- createOrganisation

- createProject

- createCatalog

API key authentication is required. For more information about API keys, go to Manage API keys. For more information about authentication, go to the Harness API documentation.

Send a `get-default-config` request to get the latest default Harness IDP images and their tags. Use the `infra` parameter to get Kubernetes (`K8`) or VM images.

```shell
curl --location --request GET "https://app.harness.io/gateway/idp/execution-config/get-default-config?accountIdentifier=$YOUR_HARNESS_ACCOUNT_ID&infra=K8" \
  --header "X-API-KEY: $API_KEY"
```

The response payload shows the latest supported images and their tags, for example:

```json
{
  "status": "SUCCESS",
  "data": {
    "cookieCutter": "harness/cookiecutter:1.9.1",
    "createRepo": "harness/createrepo:1.9.0",
    "directPush": "harness/directpush:1.9.0",
    "registerCatalog": "harness/registercatalog:1.9.0",
    "createCatalog": "harness/createcatalog:1.9.0",
    "slackNotify": "harness/slacknotify:1.9.0",
    "createOrganisation": "harness/createorganisation:1.9.0",
    "createProject": "harness/createproject:1.9.0"
  },
  "metaData": null,
  "correlationId": "08919155-a6d6-4bd3-8401-6b86318c85ca"
}
```
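If you call this endpoint repeatedly, a small wrapper keeps the URL and headers in one place. A minimal sketch: `HARNESS_ACCOUNT_ID` and `HARNESS_API_KEY` are hypothetical environment variable names, and only the `K8` infra value shown above is assumed.

```shell
#!/bin/sh
# Sketch of a reusable wrapper around the get-default-config request.
# Export HARNESS_ACCOUNT_ID and HARNESS_API_KEY (hypothetical names) first.
IDP_CONFIG_BASE="https://app.harness.io/gateway/idp/execution-config"

# default_config_url ACCOUNT_ID INFRA  (INFRA is K8 in the example request)
default_config_url() {
  printf '%s/get-default-config?accountIdentifier=%s&infra=%s\n' \
    "$IDP_CONFIG_BASE" "$1" "$2"
}

# get_default_config [INFRA]  (defaults to K8)
get_default_config() {
  # The header is double-quoted so the shell expands $HARNESS_API_KEY.
  curl --silent --show-error --location --request GET \
    "$(default_config_url "$HARNESS_ACCOUNT_ID" "${1:-K8}")" \
    --header "X-API-KEY: $HARNESS_API_KEY"
}
```

For example, `get_default_config K8 > default-images.json` saves the current defaults so you can compare them with your overrides later.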

Send a `get-customer-config` request to get the build images that your IDP pipelines currently use. When `overridesOnly` is `true` (the default value), this endpoint returns only the non-default images that your pipeline uses.

```shell
curl --location --request GET "https://app.harness.io/gateway/idp/execution-config/get-customer-config?accountIdentifier=$YOUR_HARNESS_ACCOUNT_ID&infra=K8&overridesOnly=true" \
  --header "X-API-KEY: $API_KEY"
```

If the response contains no overrides (an empty or `null` `data` field), your pipeline is using all default images, for example:

```json
{
  "status": "SUCCESS",
  "data": {},
  "metaData": null,
  "correlationId": "11ce1bc8-b337-4687-9ab9-e13d553ae82f"
}
```
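To check this from a script, you can test the response body for an empty `data` field. A minimal sketch: the function names are hypothetical, and the plain-text match is a stand-in for a proper JSON tool such as `jq`.

```shell
#!/bin/sh
# Sketch: build the get-customer-config URL and check a response body for
# image overrides. Function names are hypothetical.

# customer_config_url ACCOUNT_ID INFRA [OVERRIDES_ONLY]  (defaults to true)
customer_config_url() {
  printf '%s/get-customer-config?accountIdentifier=%s&infra=%s&overridesOnly=%s\n' \
    "https://app.harness.io/gateway/idp/execution-config" "$1" "$2" "${3:-true}"
}

# uses_default_images RESPONSE_BODY
# Succeeds when "data" is {} or null, i.e. no image overrides are set.
# Newlines are removed first so a multi-line body still matches.
uses_default_images() {
  printf '%s' "$1" | tr -d '\n' |
    grep -Eq '"data"[[:space:]]*:[[:space:]]*(\{[[:space:]]*\}|null)'
}

# Usage:
# body=$(curl --silent --location "$(customer_config_url "$HARNESS_ACCOUNT_ID" K8)" \
#          --header "X-API-KEY: $HARNESS_API_KEY")
# uses_default_images "$body" && echo "pipeline uses all default images"
```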

Send an `update-config` (POST) request with a list of the images you want to update and the new tags to apply.

```shell
curl --location --request POST "https://app.harness.io/gateway/idp/execution-config/update-config?accountIdentifier=$YOUR_HARNESS_ACCOUNT_ID&infra=K8" \
  --header "X-API-KEY: $API_KEY" \
  --header 'Content-Type: application/json' \
  --data-raw '[
    {
      "field": "registerCatalog",
      "value": "harness/registercatalog:1.9.0"
    },
    {
      "field": "slackNotify",
      "value": "harness/slacknotify:1.9.0"
    }
  ]'
```
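Rather than hand-writing the JSON array, you can generate it from `FIELD=IMAGE:TAG` pairs. A minimal sketch: the helper names and the `HARNESS_ACCOUNT_ID`/`HARNESS_API_KEY` variables are hypothetical, and no JSON escaping is performed, so field names and image tags must not contain double quotes or backslashes.

```shell
#!/bin/sh
# Sketch: build the update-config request body from FIELD=IMAGE:TAG arguments.
# Helper names are hypothetical; no JSON escaping is performed.
update_config_payload() {
  sep=""
  printf '['
  for pair in "$@"; do
    field=${pair%%=*}   # text before the first "="
    value=${pair#*=}    # text after the first "="
    printf '%s{"field": "%s", "value": "%s"}' "$sep" "$field" "$value"
    sep=", "
  done
  printf ']\n'
}

# update_config INFRA FIELD=IMAGE:TAG...
# (HARNESS_ACCOUNT_ID and HARNESS_API_KEY are hypothetical environment variables)
update_config() {
  infra=$1; shift
  curl --silent --show-error --location --request POST \
    "https://app.harness.io/gateway/idp/execution-config/update-config?accountIdentifier=$HARNESS_ACCOUNT_ID&infra=$infra" \
    --header "X-API-KEY: $HARNESS_API_KEY" \
    --header 'Content-Type: application/json' \
    --data-raw "$(update_config_payload "$@")"
}
```

For example, `update_config K8 registerCatalog=harness/registercatalog:1.9.0 slackNotify=harness/slacknotify:1.9.0` sends the same list of images as the request above, written on a single line.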

To reset one or more images to their defaults, send a `reset-config` (POST) request with a list of the images to reset.

```shell
curl --location --request POST "https://app.harness.io/gateway/idp/execution-config/reset-config?accountIdentifier=$YOUR_HARNESS_ACCOUNT_ID&infra=K8" \
  --header "X-API-KEY: $API_KEY" \
  --header 'Content-Type: application/json' \
  --data-raw '[
    {
      "field": "registerCatalog"
    },
    {
      "field": "createRepo"
    }
  ]'
```
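The reset body only needs the field names, so it can be generated the same way. A minimal sketch: the helper names and the `HARNESS_ACCOUNT_ID`/`HARNESS_API_KEY` variables are hypothetical, and field names are assumed to need no JSON escaping.

```shell
#!/bin/sh
# Sketch: build the reset-config request body from a list of image field names.
# Helper names are hypothetical; no JSON escaping is performed.
reset_config_payload() {
  sep=""
  printf '['
  for field in "$@"; do
    printf '%s{"field": "%s"}' "$sep" "$field"
    sep=", "
  done
  printf ']\n'
}

# reset_config INFRA FIELD...
# (HARNESS_ACCOUNT_ID and HARNESS_API_KEY are hypothetical environment variables)
reset_config() {
  infra=$1; shift
  curl --silent --show-error --location --request POST \
    "https://app.harness.io/gateway/idp/execution-config/reset-config?accountIdentifier=$HARNESS_ACCOUNT_ID&infra=$infra" \
    --header "X-API-KEY: $HARNESS_API_KEY" \
    --header 'Content-Type: application/json' \
    --data-raw "$(reset_config_payload "$@")"
}
```

For example, `reset_config K8 registerCatalog createRepo` resets the same two images as the request above.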